This repository contains the codebase for the paper "Domain Generalisation via Imprecise Learning", accepted at ICML 2024.

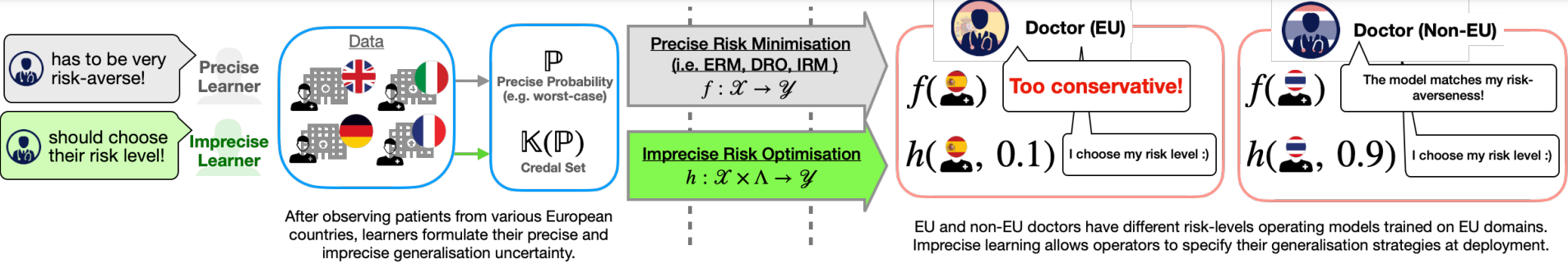

The paper argues that domain generalisation encompasses both statistical learning and decision-making. Without knowledge of the model operator's notion of generalisation, learners are compelled to make normative judgments, which leads to misalignment when learners are institutionally separated from model operators. Leveraging imprecise probability, our proposal, Imprecise Domain Generalisation, allows learners to embrace imprecision during training and empowers model operators to make informed decisions during deployment.
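To make the idea of deferring the choice of generalisation notion more concrete, here is a minimal sketch. This README does not spell out how the imprecision is parameterised, so the sketch assumes a CVaR-style aggregation of per-environment risks, controlled by a level `alpha` that the operator would pick at deployment. The function name `cvar_aggregate` and the risk values are purely illustrative and are not taken from the codebase.

```python
import numpy as np


def cvar_aggregate(env_risks, alpha):
    """Average the worst (1 - alpha) fraction of per-environment risks.

    Discrete approximation (an assumption of this sketch):
    alpha = 0 recovers the plain average over environments,
    alpha close to 1 approaches the single worst environment.
    """
    risks = np.sort(np.asarray(env_risks, dtype=float))[::-1]  # worst first
    k = max(1, int(np.ceil((1.0 - alpha) * len(risks))))       # size of the tail
    return float(risks[:k].mean())


if __name__ == "__main__":
    # Made-up per-environment risks, for illustration only (not paper results).
    illustrative_risks = [0.10, 0.20, 0.60, 0.30]
    for alpha in (0.0, 0.5, 1.0):
        print(f"alpha={alpha:.1f} -> aggregated risk {cvar_aggregate(illustrative_risks, alpha):.3f}")
```

Under this assumption, an average-case learner corresponds to fixing `alpha = 0` and a worst-case learner to `alpha` near 1. An imprecise learner would avoid committing to a single value during training.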

To install the necessary dependencies, please follow these steps:

- Clone the repository:

      git clone https://github.com/muandet-lab/dgil.git
      cd dgil

- Create a virtual environment:

      python -m venv dgil_env
      source dgil_env/bin/activate  # On Windows, use `dgil_env\Scripts\activate`

- Install the required packages from the requirements file inside the CMNIST folder:

      pip install -r requirements.txt

To run the experiments, you can use the provided scripts. Below is an example of how to train and evaluate the model on a specific dataset:

- Train the model:

      python train_sandbox.py --config configs/experiment_config.yaml

- Evaluate the model:

      python evaluate.py --config configs/experiment_config.yaml --checkpoint path/to/checkpoint.pth

The codebase supports benchmarking on CMNIST and additional simulations.

We welcome contributions from the community. If you encounter any issues or have suggestions for improvements, please open an issue or submit a pull request.

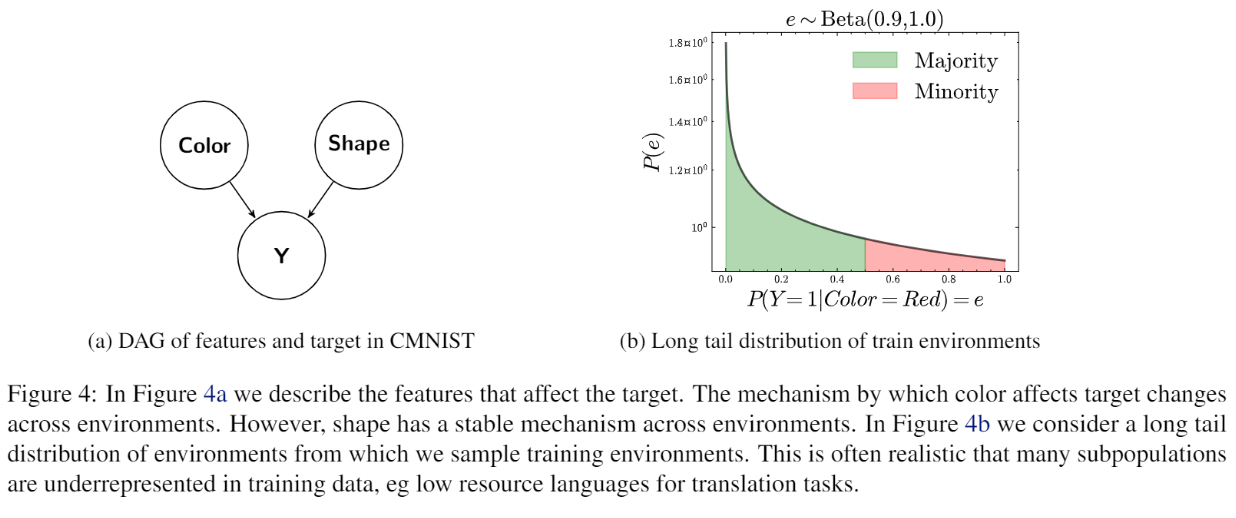

We performed experiments on a modified version of CMNIST, with the experimental setup shown below.

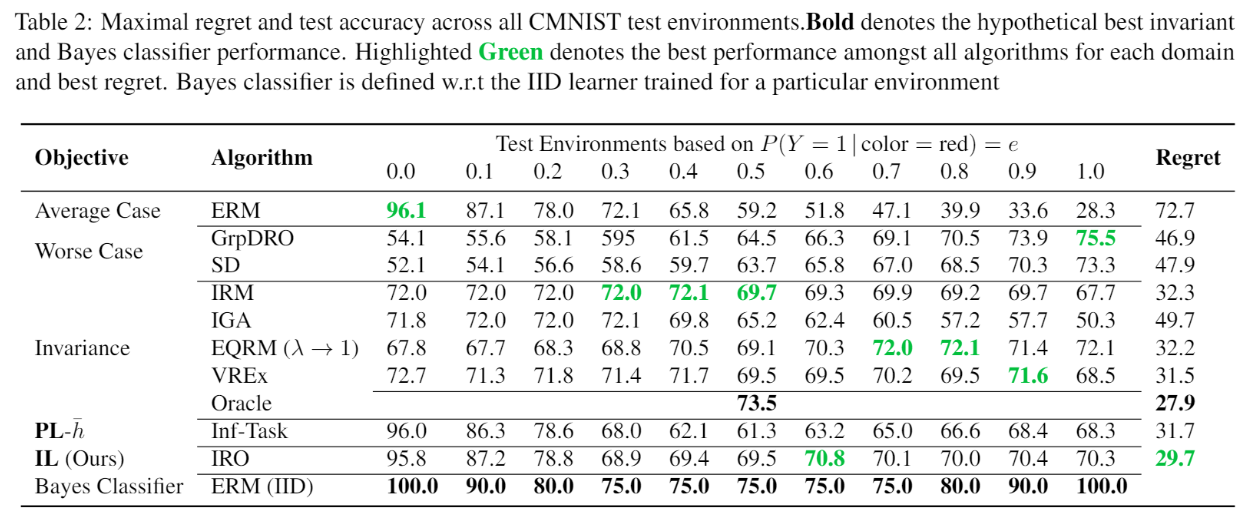

While average-case and worst-case learners perform well in majority and minority environments, respectively, DGIL obtains the lowest regret across environments.
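As a rough illustration of comparing learners by regret across environments, the sketch below computes per-environment regret from a table of risks. It assumes regret is measured against the best learner among those compared in each environment; the README does not give the paper's exact definition. The learner names and numbers are hypothetical placeholders, not reported results.

```python
import numpy as np


def regret_per_environment(risks):
    """Map each learner to its per-environment regret.

    `risks` maps a learner name to a list of per-environment risks.
    Regret here (an assumption of this sketch) is the learner's risk minus
    the lowest risk achieved by any compared learner in that environment.
    """
    names = list(risks)
    table = np.array([risks[name] for name in names], dtype=float)
    best = table.min(axis=0)  # best achievable risk per environment
    return {name: table[i] - best for i, name in enumerate(names)}


def worst_regret(risks):
    """Summarise each learner by its largest regret over all environments."""
    return {name: float(r.max()) for name, r in regret_per_environment(risks).items()}


if __name__ == "__main__":
    # Hypothetical risks: columns are (majority environment, minority environment).
    hypothetical_risks = {
        "learner_a": [0.10, 0.50],
        "learner_b": [0.30, 0.20],
    }
    print(worst_regret(hypothetical_risks))
```

A learner that is strong in one environment but weak in another has a large worst-case regret, which is the kind of trade-off the comparison above refers to.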

If you use this code in your research, please cite our paper:

    @inproceedings{singh2024domain,
      title={Domain Generalisation via Imprecise Learning},
      author={Singh, Anurag and Chau, Siu Lun and Bouabid, Shahine and Muandet, Krikamol},
      booktitle={Proceedings of the International Conference on Machine Learning (ICML)},
      year={2024}
    }

For any questions or inquiries, please contact the authors: