This repository is the official PyTorch implementation of U-shape Transformer for Underwater Image Enhancement (arXiv, Dataset, video demo, visual results). U-shape Transformer achieves state-of-the-art performance on the underwater image enhancement task.

🚀 🚀 🚀 News:

-

2021/11/25 We released our pretrained model, You can download the pretrain models in BaiduYun with the password tdg9 or in Google Drive.

-

2021/11/24 We released the official code of U-shape Transformer

-

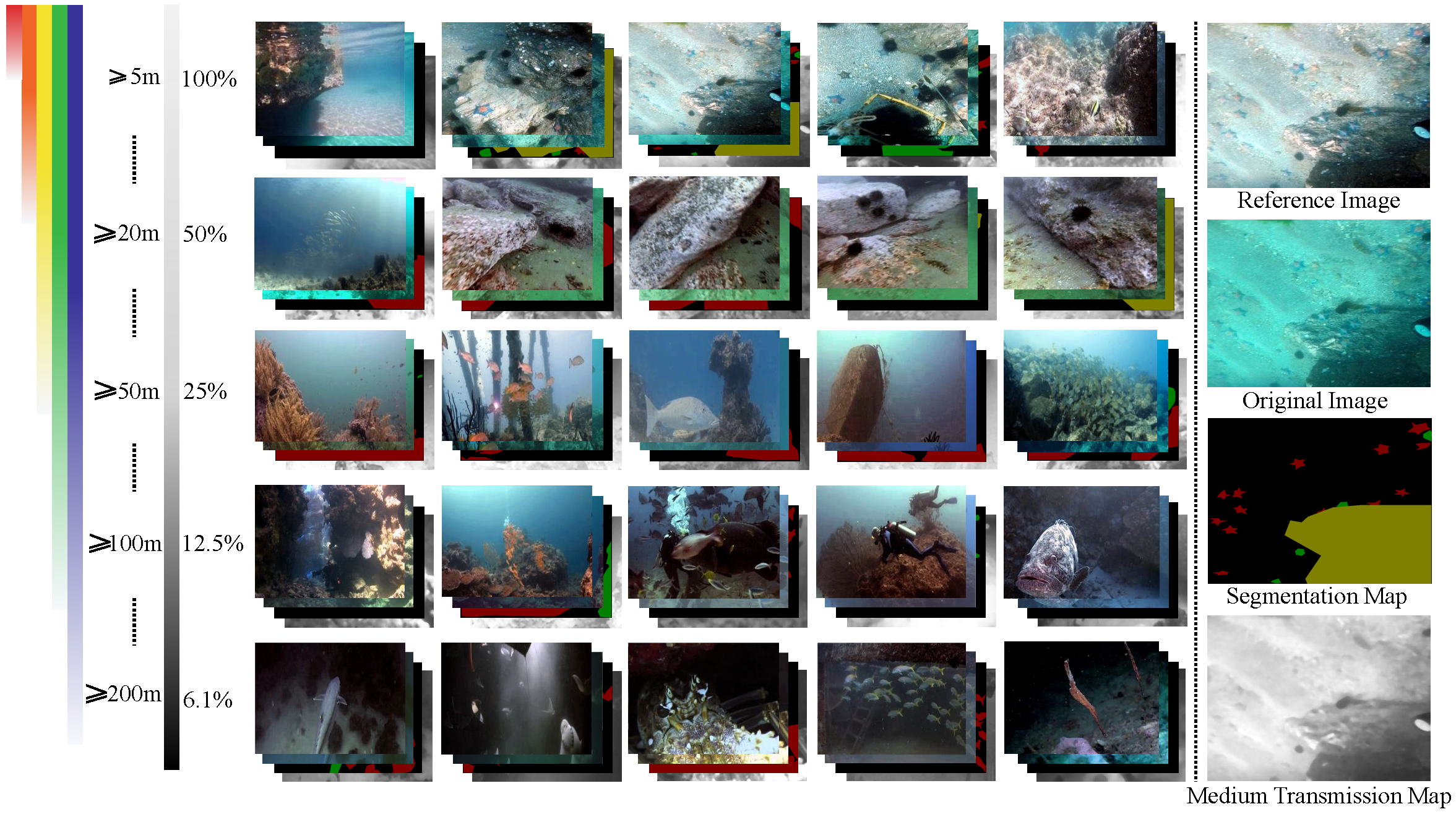

2021/11/23 We released LSUI dataset, We released a large-scale underwater image (LSUI) dataset, which involve richer underwater scenes (lighting conditions, water types and target categories) and better visual quality reference images than the existing ones. You can download it from [here]. Please contact

bian@bit.edu.cnto obtain the download password, and leave your name and organization, we will reply within 48 hours.

Light absorption and scattering by underwater impurities lead to poor underwater imaging quality. Existing data-driven underwater image enhancement (UIE) techniques suffer from the lack of a large-scale dataset containing diverse underwater scenes and high-fidelity reference images. In addition, they do not fully account for the inconsistent attenuation across different color channels and spatial areas. In this work, we construct a large-scale underwater image (LSUI) dataset and report a U-shape Transformer network, introducing the transformer model to the UIE task for the first time. The U-shape Transformer integrates a channel-wise multi-scale feature fusion transformer (CMSFFT) module and a spatial-wise global feature modeling transformer (SGFMT) module. Together they reinforce the network's attention to the color channels and spatial areas with more severe attenuation. To further improve contrast and saturation, we also design a novel loss function that combines the RGB, LAB and LCH color spaces, following the principles of human vision. Extensive experiments on available datasets validate the state-of-the-art performance of the reported technique, with a margin of more than 2 dB.
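The abstract describes a loss that combines the RGB, LAB and LCH color spaces. The PyTorch sketch below is a rough illustration only, not the repository's loss code. It works in three steps:

- It converts sRGB images in [0, 1] to CIELAB using the standard D65 formulas.
- It derives the LCH quantities (lightness, chroma, hue) from LAB.
- It sums L1-style differences in all three spaces.

The function names, the equal default weights `w_rgb`, `w_lab` and `w_lch`, and the use of L1 distances are all assumptions. To avoid wrapping hue angles, the hue term uses the CIE identity ΔH² = Δa² + Δb² − ΔC².

```python
import torch
import torch.nn.functional as F

# Linear sRGB -> CIE XYZ matrix and D65 reference white (standard values).
_RGB_TO_XYZ = [
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
]
_D65_WHITE = [0.95047, 1.0, 1.08883]
_DELTA = 6.0 / 29.0


def rgb_to_lab(rgb: torch.Tensor) -> torch.Tensor:
    """Convert an (N, 3, H, W) sRGB tensor in [0, 1] to CIELAB (L in [0, 100])."""
    rgb = rgb.clamp(0.0, 1.0)
    # Undo the sRGB gamma curve.
    linear = torch.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    m = torch.tensor(_RGB_TO_XYZ, dtype=rgb.dtype, device=rgb.device)
    xyz = torch.einsum("ij,njhw->nihw", m, linear)
    white = torch.tensor(_D65_WHITE, dtype=rgb.dtype, device=rgb.device)
    t = xyz / white.view(1, 3, 1, 1)
    # Piecewise cube root; clamping keeps the unused branch's gradient finite.
    f = torch.where(
        t > _DELTA ** 3,
        t.clamp(min=_DELTA ** 3).pow(1.0 / 3.0),
        t / (3 * _DELTA ** 2) + 4.0 / 29.0,
    )
    fx, fy, fz = f.unbind(dim=1)
    L = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b = 200.0 * (fy - fz)
    return torch.stack([L, a, b], dim=1)


def color_space_loss(pred, target, w_rgb=1.0, w_lab=1.0, w_lch=1.0, eps=1e-8):
    """Hypothetical weighted sum of RGB, LAB and LCH differences."""
    rgb_term = F.l1_loss(pred, target)

    lab_p, lab_t = rgb_to_lab(pred), rgb_to_lab(target)
    lab_term = F.l1_loss(lab_p, lab_t)

    L_p, a_p, b_p = lab_p.unbind(dim=1)
    L_t, a_t, b_t = lab_t.unbind(dim=1)
    # Chroma C = sqrt(a^2 + b^2); eps keeps the gradient finite for gray pixels.
    c_p = torch.sqrt(a_p ** 2 + b_p ** 2 + eps)
    c_t = torch.sqrt(a_t ** 2 + b_t ** 2 + eps)
    # Hue difference via dH^2 = da^2 + db^2 - dC^2 (no hue-angle wrapping needed).
    dh_sq = (a_p - a_t) ** 2 + (b_p - b_t) ** 2 - (c_p - c_t) ** 2
    dh = torch.sqrt(dh_sq.clamp(min=0.0) + eps)
    lch_term = ((L_p - L_t).abs() + (c_p - c_t).abs() + dh).mean()

    return w_rgb * rgb_term + w_lab * lab_term + w_lch * lch_term
```

LAB and LCH values span roughly 0 to 100, while RGB values lie in [0, 1]. In practice the three weights would therefore need tuning.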

If you want to train U-shape Transformer from scratch, first download our LSUI dataset. Please contact bian@bit.edu.cn to obtain the download password, and include your name and organization; we will reply within 48 hours. Then randomly select 3879 image pairs as the training set to replace the data folder, and use the remaining 400 pairs as the test set to replace the test folder.
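The random split described above can be scripted. The sketch below assumes, hypothetically, that the extracted LSUI archive contains an `input/` and a `GT/` subfolder whose files share names pair by pair. Adjust `subdirs` and the paths to match the actual layout. `split_pairs` and `copy_pairs` are illustrative helpers, not part of the repository. The fixed `seed` makes the split reproducible.

```python
import random
import shutil
from pathlib import Path


def split_pairs(names, n_train=3879, seed=0):
    """Randomly partition pair names into (train, test); test gets the remainder."""
    names = sorted(names)  # sort first so the split depends only on the seed
    rng = random.Random(seed)
    rng.shuffle(names)
    return names[:n_train], names[n_train:]


def copy_pairs(names, src_root, dst_root, subdirs=("input", "GT")):
    """Copy each named file from every subdir of src_root into the same subdir of dst_root."""
    for sub in subdirs:
        out_dir = Path(dst_root) / sub
        out_dir.mkdir(parents=True, exist_ok=True)
        for name in names:
            shutil.copy2(Path(src_root) / sub / name, out_dir / name)


# Example usage (all paths are hypothetical):
#   names = [p.name for p in Path("LSUI/input").iterdir() if p.is_file()]
#   train, test = split_pairs(names)
#   copy_pairs(train, "LSUI", "data")
#   copy_pairs(test, "LSUI", "test")
```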

Next, run train.ipynb with Jupyter Notebook. The trained model weights will be saved automatically in the saved_models folder. As described in the paper, we recommend using the L2 loss for the first 600 epochs and the L1 loss for the last 200 epochs.
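The recommended two-stage schedule can be expressed as a per-epoch choice of criterion. This is a minimal PyTorch sketch that assumes 0-indexed epochs and a generic `model`, `loader` and `optimizer`. `criterion_for_epoch` and `train` are hypothetical names and do not reflect how train.ipynb is organized.

```python
import torch
import torch.nn as nn


def criterion_for_epoch(epoch, l2_epochs=600):
    """L2 (MSE) for the first `l2_epochs` epochs, L1 afterwards (epochs are 0-indexed)."""
    return nn.MSELoss() if epoch < l2_epochs else nn.L1Loss()


def train(model, loader, optimizer, total_epochs=800, l2_epochs=600):
    """Hypothetical training loop: 600 epochs of L2 followed by 200 epochs of L1 by default."""
    for epoch in range(total_epochs):
        criterion = criterion_for_epoch(epoch, l2_epochs)
        for inputs, targets in loader:
            optimizer.zero_grad()
            loss = criterion(model(inputs), targets)
            loss.backward()
            optimizer.step()
```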

Environment requirements:

- Python 3.7 or newer
- PyTorch 1.7 or newer
- CUDA 10.1 or newer
- OpenCV 4.5.3 or newer
- Jupyter Notebook

Alternatively, you can install the dependencies from requirements.txt with:

pip install -r requirements.txt

For your convenience, we provide some example data (~20 MB) in ./test. You can download the pretrained models from BaiduYun (password: tdg9) or from Google Drive.

After downloading, extract the pretrained model into the project folder so that it replaces the ./saved_models folder, and then run test.ipynb. The notebook uses the pretrained model to process all images in the ./test/input folder and writes the results to the ./test/output folder. It also automatically computes the PSNR of each result against its reference image.
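PSNR is derived from the mean squared error (MSE) between an output and its reference. Below is a NumPy sketch of the standard definition, PSNR = 10·log10(MAX² / MSE), assuming 8-bit images (`max_value=255`). The notebook's own implementation may differ in details such as color space or data range.

```python
import numpy as np


def psnr(output, reference, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images."""
    # Cast to float64 so uint8 subtraction cannot wrap around.
    output = np.asarray(output, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    if output.shape != reference.shape:
        raise ValueError(f"shape mismatch: {output.shape} vs {reference.shape}")
    mse = np.mean((output - reference) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


# Example usage with OpenCV (file paths are hypothetical):
#   import cv2
#   score = psnr(cv2.imread("test/output/1.png"), cv2.imread("test/reference/1.png"))
```

OpenCV loads images in BGR order. This does not affect PSNR as long as both images are loaded the same way.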

We achieve state-of-the-art performance on the underwater image enhancement task. Detailed results can be found in the paper or on our project page.

@misc{peng2021ushape,
  title={U-shape Transformer for Underwater Image Enhancement},
  author={Lintao Peng and Chunli Zhu and Liheng Bian},
  year={2021},
  eprint={2111.11843},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

This project is released under the MIT license. The code is based on pix2pix, and we also referred to code from UCTransNet and TransBTS. Please also follow their licenses. Thanks for their awesome work.