This repo contains benchmarks for batch inference using Ray, Apache Spark, and Amazon SageMaker Batch Transform.

We use the image classification task from the MLPerf Inference Benchmark suite in the offline setting.

- Images from ImageNet 2012 Dataset

- PyTorch ResNet50 model

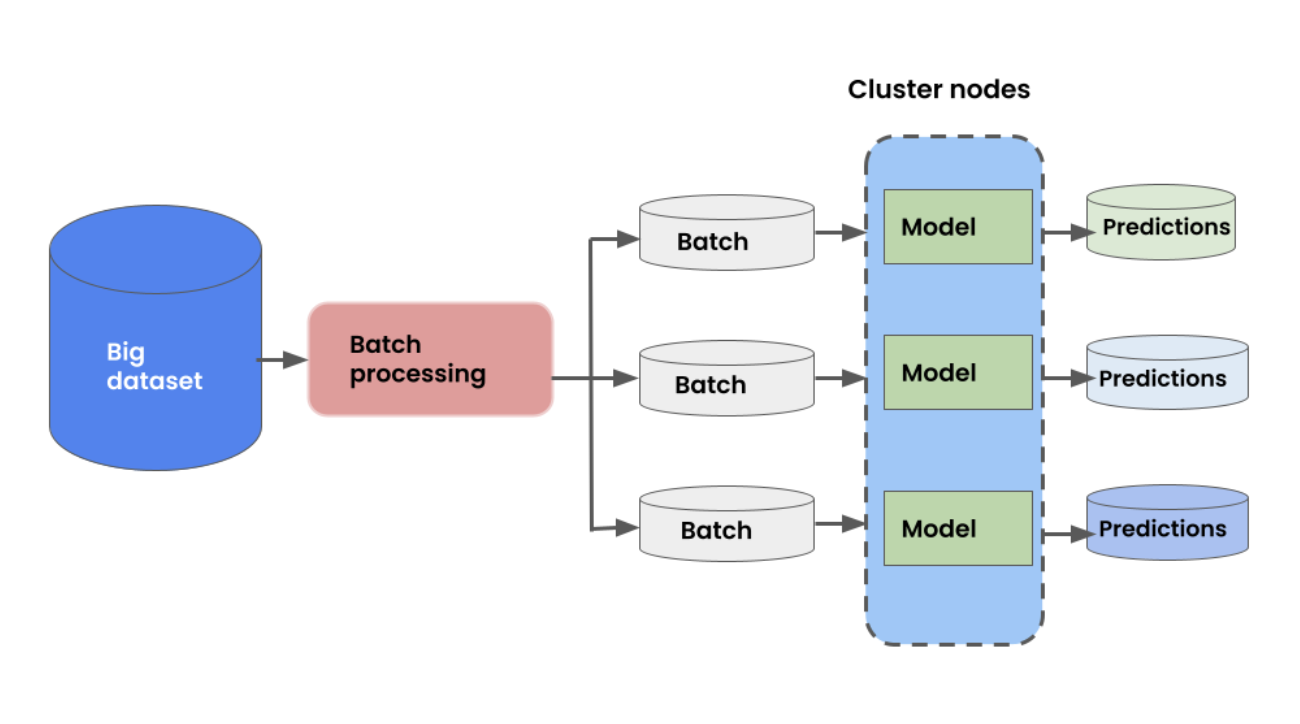

The workload is a simple 3 step pipeline:

Images are stored in parquet format (with ~1k images per parquet file) and are read from S3 from within the same region.

In-memory dataset size is 10 GB. The compressed on-disk size is much smaller.
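As a rough illustration of the workload's shape, here is a minimal sketch of an offline batch inference pipeline in plain Python. The stage names (`read_batches`, `preprocess`, `predict`) are assumptions inferred from the preprocessing and prediction steps mentioned elsewhere in this README, not the benchmark's actual code, and `transform` and `model` are hypothetical stand-ins for the real image transform and the ResNet50 model.

```python
from typing import Any, Callable, Iterable, Iterator, List


def read_batches(files: Iterable[List[bytes]]) -> Iterator[List[bytes]]:
    """Yield one batch of raw images per input file (stand-in for reading parquet)."""
    for images in files:
        yield list(images)


def preprocess(
    batches: Iterable[List[bytes]], transform: Callable[[bytes], Any]
) -> Iterator[List[Any]]:
    """Apply a per-image transform to every batch (CPU-bound in the real workload)."""
    for batch in batches:
        yield [transform(image) for image in batch]


def predict(
    batches: Iterable[List[Any]], model: Callable[[List[Any]], List[Any]]
) -> Iterator[List[Any]]:
    """Run the model on each preprocessed batch (GPU-bound in the real workload)."""
    for batch in batches:
        yield model(batch)


def run_pipeline(
    files: Iterable[List[bytes]],
    transform: Callable[[bytes], Any],
    model: Callable[[List[Any]], List[Any]],
) -> List[Any]:
    """Chain the three stages lazily and collect all predictions."""
    results: List[Any] = []
    for predictions in predict(preprocess(read_batches(files), transform), model):
        results.extend(predictions)
    return results


# Toy usage: the "transform" is the byte length, the "model" is its parity.
toy_files = [[b"aa", b"bbb"], [b"c"]]
labels = run_pipeline(toy_files, transform=len, model=lambda batch: [n % 2 for n in batch])
print(labels)  # [0, 1, 1]
```

Because the stages are generators, only one batch is materialized at a time. That matters when the in-memory dataset is far larger than its compressed on-disk form.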

All experiments used PyTorch v1.12.1 and CUDA 11.3.

- 1 g4dn.12xlarge instance. Contains 48 CPUs and 4 GPUs.

- Experiments were all run on the Anyscale platform.

- Uses Ray Data nightly version.

- Code

We tried 2 configurations. All experiments were run on Databricks with the Databricks Runtime v12.0, and using the ML GPU runtime when applicable.

- Config 1: Creates a standard Databricks cluster with a g4dn.12xlarge instance.

  - This starts a 2-node cluster: 1 node for the driver, which does not run tasks, and 1 node for the executor. Databricks does not support running tasks on the head node.

  - Spark fuses all stages together, so total parallelism, even for CPU tasks, is limited by the number of GPUs.

  - Code

- Config 2: Use 2 separate clusters: 1 CPU-only cluster for preprocessing, and 1 GPU cluster for predicting. We use DBFS to store the intermediate preprocessed data. This allows preprocessing to scale independently from prediction, at the cost of having to persist data in between the steps.
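The parallelism trade-off between the two configurations can be illustrated with a toy model. This is a simplified sketch, not Spark's actual scheduler: it assumes a fused task holds one GPU for its whole lifetime, and that a decoupled preprocessing stage would have all 48 CPUs of a g4dn.12xlarge-sized machine to itself (the actual size of the CPU-only cluster is not stated here). The `Cluster` class and `task_slots` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Cluster:
    num_cpus: int
    num_gpus: int


def task_slots(cluster: Cluster, fused: bool) -> Dict[str, int]:
    """Return concurrent task slots per stage under a simplified model.

    Assumption: when stages are fused, every task needs a GPU, so the
    CPU-only preprocessing work cannot run on more tasks than there are GPUs.
    """
    if fused:
        slots = cluster.num_gpus
        return {"preprocess": slots, "predict": slots}
    # Decoupled stages: preprocessing is bounded by CPUs, prediction by GPUs.
    return {"preprocess": cluster.num_cpus, "predict": cluster.num_gpus}


g4dn_12xlarge = Cluster(num_cpus=48, num_gpus=4)
fused_slots = task_slots(g4dn_12xlarge, fused=True)
decoupled_slots = task_slots(g4dn_12xlarge, fused=False)
print(fused_slots)      # {'preprocess': 4, 'predict': 4}
print(decoupled_slots)  # {'preprocess': 48, 'predict': 4}
```

Under these assumptions, fusing leaves most of the 48 CPUs idle during preprocessing, while decoupling recovers that parallelism at the cost of writing the intermediate data to storage.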

Additional configurations that performed worse were also tried; you can read about them in the spark directory.

SageMaker Batch Transform with 4 g4dn.xlarge instances. There is no built-in multi-GPU support, so we cannot use the multi-GPU g4dn.12xlarge instance. There are still 4 GPUs total in the cluster.

Additional configurations that failed were also tried; you can read more about them in the sagemaker directory.