- Unofficial reimplementation of MoCo: Momentum Contrast for Unsupervised Visual Representation Learning

- Many helpful implementation details were taken from moco.tensorflow

- Augmentation code is copied from SimCLR: A Simple Framework for Contrastive Learning of Visual Representations

- Aims to implement as much as possible in TensorFlow 2.x

- Uses `MirroredStrategy` with a custom training loop (following the TensorFlow tutorial)

- Differences from the official implementation

  - 8 GPUs (official) vs. 4 GPUs (this repo)

  - 53 hours vs. 147 hours of unsupervised pre-training; this repo is much slower than the official one

  - Batch normalization in TensorFlow

    - If a batch normalization layer is set as non-trainable, TensorFlow normalizes its input with the layer's moving mean and variance, even when the layer is called with `training=True`.

  - There is little information on how to properly apply weight regularization in a distributed environment
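The batch normalization behavior above can be checked with a standalone `tf.keras.layers.BatchNormalization` layer. This is a minimal sketch on random data (the input and variable names are illustrative, not from this repo): while `trainable` is `False`, the layer uses its moving statistics (initialized to mean 0 and variance 1) even with `training=True`, so the output keeps the input's mean of roughly 5; once it is trainable again, batch statistics are used and the output is centered near 0.

```python
import numpy as np
import tensorflow as tf

# Illustrative input: 64 samples, 8 features, mean ~5 and std ~2.
x = tf.constant(
    np.random.RandomState(0).normal(loc=5.0, scale=2.0, size=(64, 8)).astype("float32")
)

bn = tf.keras.layers.BatchNormalization()

# Non-trainable layer: moving_mean (0) and moving_variance (1) are used even though
# training=True is passed, so the output is essentially x / sqrt(1 + epsilon).
bn.trainable = False
frozen_out = bn(x, training=True)

# Trainable layer: per-batch mean and variance are used, so each feature is centered near 0.
bn.trainable = True
train_out = bn(x, training=True)

print("frozen output mean:   ", float(tf.reduce_mean(frozen_out)))
print("trainable output mean:", float(tf.reduce_mean(train_out)))
```

In practice this means that freezing a pre-trained encoder with `trainable = False` also switches its BN layers to inference mode.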

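This README does not state how weight regularization is handled here, but one common pattern, used in TensorFlow's "Custom training with tf.distribute.Strategy" tutorial, is to average the per-example loss over the global batch with `tf.nn.compute_average_loss` and to divide the summed regularization losses by the number of replicas with `tf.nn.scale_regularization_loss`, so that summing gradients across replicas counts the weight-decay term once. The sketch below assumes a toy dense model, a hypothetical weight-decay value and random data; `MirroredStrategy` falls back to a single CPU replica when no GPU is visible.

```python
import numpy as np
import tensorflow as tf

strategy = tf.distribute.MirroredStrategy()  # single CPU replica if no GPU is visible
GLOBAL_BATCH_SIZE = 16
WEIGHT_DECAY = 1e-4  # hypothetical value, not taken from this repo

with strategy.scope():
    # Toy model standing in for the real encoder; an L2 penalty is attached to the kernel.
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(3, kernel_regularizer=tf.keras.regularizers.l2(WEIGHT_DECAY)),
    ])
    optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)


def train_step(features, labels):
    with tf.GradientTape() as tape:
        logits = model(features, training=True)
        per_example = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=labels, logits=logits)
        # Divide by the GLOBAL batch size, not the per-replica batch size.
        loss = tf.nn.compute_average_loss(per_example, global_batch_size=GLOBAL_BATCH_SIZE)
        # Divide the L2 term by the number of replicas so the cross-replica sum counts it once.
        loss += tf.nn.scale_regularization_loss(tf.add_n(model.losses))
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    return loss


@tf.function
def distributed_train_step(features, labels):
    per_replica_loss = strategy.run(train_step, args=(features, labels))
    # Summing the per-replica losses gives the loss of the whole global batch.
    return strategy.reduce(tf.distribute.ReduceOp.SUM, per_replica_loss, axis=None)


rng = np.random.RandomState(0)
features = rng.normal(size=(64, 4)).astype("float32")
labels = rng.randint(0, 3, size=(64,)).astype("int32")
dataset = tf.data.Dataset.from_tensor_slices((features, labels)).batch(GLOBAL_BATCH_SIZE)
dist_dataset = strategy.experimental_distribute_dataset(dataset)

step_losses = [float(distributed_train_step(x, y)) for x, y in dist_dataset]
print(step_losses)
```

An alternative some implementations use is to apply weight decay inside the optimizer instead of as a loss term, which sidesteps the replica scaling question entirely.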
Results (lower than the official result)

- MoCo v1

  - Could not reproduce the official accuracy under the linear classification protocol on ImageNet.

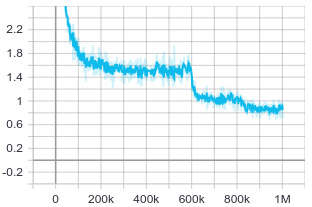

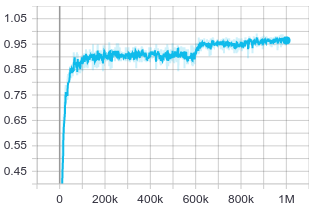

| InfoNCE | (K+1) Accuracy | |

|---|---|---|

| MoCo V1 |  |

|
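The InfoNCE loss and (K+1) accuracy plotted above are typically computed as in the pseudocode of the MoCo paper: each query is scored against its positive key and against K negative keys from the queue, the logits are divided by a temperature, and cross-entropy is taken with the positive at index 0. The (K+1) accuracy is the fraction of queries whose top-scoring key is the positive. This is a sketch, not this repo's code; the function name `moco_infonce`, the toy sizes and the random inputs are illustrative, and 0.07 is the MoCo v1 default temperature.

```python
import tensorflow as tf


def moco_infonce(q, k, queue, temperature=0.07):
    """InfoNCE loss and (K+1)-way accuracy for one MoCo batch.

    q:     [N, C] L2-normalized query embeddings
    k:     [N, C] L2-normalized positive keys (from the momentum encoder)
    queue: [K, C] L2-normalized negative keys from previous batches
    """
    # Positive logits: one dot product per query, shape [N, 1].
    l_pos = tf.reduce_sum(q * k, axis=1, keepdims=True)
    # Negative logits: every query against every queued key, shape [N, K].
    l_neg = tf.matmul(q, queue, transpose_b=True)
    logits = tf.concat([l_pos, l_neg], axis=1) / temperature  # [N, K+1]
    # The positive key is always at index 0.
    labels = tf.zeros([tf.shape(logits)[0]], dtype=tf.int64)
    loss = tf.reduce_mean(
        tf.nn.sparse_softmax_cross_entropy_with_logits(labels=labels, logits=logits)
    )
    # (K+1) accuracy: fraction of queries whose highest logit is the positive key.
    accuracy = tf.reduce_mean(
        tf.cast(tf.equal(tf.argmax(logits, axis=1), labels), tf.float32)
    )
    return loss, accuracy


tf.random.set_seed(0)
N, C, K = 8, 16, 32  # toy sizes; MoCo v1 uses C = 128 and K = 65536
q = tf.math.l2_normalize(tf.random.normal([N, C]), axis=1)
queue = tf.math.l2_normalize(tf.random.normal([K, C]), axis=1)
# With k == q the positive logit is the largest possible, so accuracy is 1.
loss, acc = moco_infonce(q, q, queue)
print(float(loss), float(acc))
```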

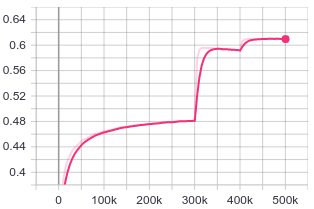

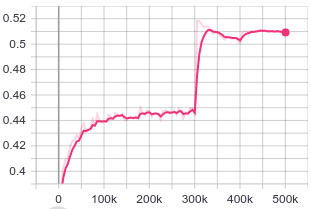

| Train Accuracy | Validation Accuracy | |

|---|---|---|

| lincls |  |

|
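`lincls` refers to the linear classification protocol used to evaluate the pre-trained encoder: the encoder is frozen and only a linear classifier on its pooled features is trained. Below is a minimal Keras sketch; the small convolutional `backbone` is a hypothetical stand-in for the pre-trained ResNet-50 encoder, and the class count, optimizer settings and random data are illustrative only. Setting `backbone.trainable = False` freezes its weights and, as noted in the batch normalization item, also makes its BN layers use their moving statistics.

```python
import numpy as np
import tensorflow as tf

NUM_CLASSES = 10  # 1000 for ImageNet; 10 here to keep the sketch small

# Hypothetical stand-in for the pre-trained ResNet-50 query encoder.
backbone = tf.keras.Sequential([
    tf.keras.Input(shape=(32, 32, 3)),
    tf.keras.layers.Conv2D(16, 3, padding="same"),
    tf.keras.layers.BatchNormalization(),
    tf.keras.layers.ReLU(),
    tf.keras.layers.GlobalAveragePooling2D(),
])
# Freeze the encoder: no weight updates, and its BN layers use moving statistics.
backbone.trainable = False

inputs = tf.keras.Input(shape=(32, 32, 3))
features = backbone(inputs, training=False)
logits = tf.keras.layers.Dense(NUM_CLASSES)(features)  # the only trainable layer
classifier = tf.keras.Model(inputs, logits)

classifier.compile(
    optimizer=tf.keras.optimizers.SGD(learning_rate=0.1, momentum=0.9),
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)

rng = np.random.RandomState(0)
images = rng.rand(64, 32, 32, 3).astype("float32")
labels = rng.randint(0, NUM_CLASSES, size=(64,))
# Snapshot the encoder (including BN moving statistics) before training.
frozen_before = [w.numpy().copy() for w in backbone.weights]
classifier.fit(images, labels, batch_size=16, epochs=1, verbose=0)
```

After training, only the dense layer's kernel and bias have changed; the encoder weights and BN statistics match the snapshot.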

- Comparison with the official result

| ResNet-50 | Pre-train epochs | Pre-train time | MoCo v1 top-1 acc. (%) |
|---|---|---|---|
| Official result | 200 | 53 hours | 60.6 |
| This repo | 200 | 147 hours | 50.8 |