🌟 DPoser: Diffusion Model as Robust 3D Human Pose Prior 🌟

🔗 Project Page | 🎥 Video | 📄 Paper

Authors

Junzhe Lu, Jing Lin, Hongkun Dou, Yulun Zhang, Yue Deng, Haoqian Wang

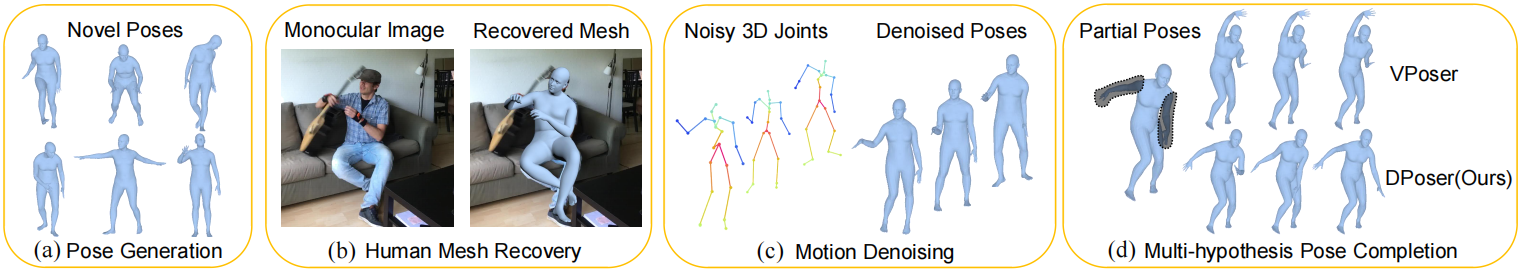

📊 An overview of DPoser’s versatility and performance across multiple pose-related tasks

📘 1. Introduction

Welcome to the official implementation of DPoser: Diffusion Model as Robust 3D Human Pose Prior. 🚀

In this repository, we're excited to introduce DPoser, a robust 3D human pose prior built on diffusion models. DPoser is designed to improve pose-centric applications such as human mesh recovery, pose completion, and motion denoising. Let's dive in!

🛠️ 2. Setup Your Environment

- Tested Configuration: Our code has been tested with PyTorch 1.12.1 and CUDA 11.3.

- Installation Recommendation:

  conda install pytorch==1.12.1 torchvision==0.13.1 cudatoolkit=11.3 -c pytorch

- Required Python Packages:

  pip install -r requirements.txt

- Body Models: We use the SMPL-X body model in our experiments. Make sure to set the `--bodymodel-path` parameter in scripts such as `./run/demo.py` and `./run/train.py` to the location where you downloaded the body model.
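A wrong `--bodymodel-path` usually only surfaces as an error deep inside model loading, so it can help to check the folder up front. The snippet below is a minimal, hypothetical sketch and not part of the DPoser code: `find_smplx_files` and `check_bodymodel_path` are illustrative names, and the file names assume the official SMPL-X `.npz` download, placed either directly in the folder or in an `smplx/` subfolder.

```python
from pathlib import Path
from typing import List

# Assumed file names from the official SMPL-X download; adjust if yours differ.
SMPLX_FILES = ("SMPLX_NEUTRAL.npz", "SMPLX_MALE.npz", "SMPLX_FEMALE.npz")


def find_smplx_files(bodymodel_path: str) -> List[Path]:
    """List SMPL-X model files under bodymodel_path or its 'smplx' subfolder."""
    root = Path(bodymodel_path).expanduser()
    return [
        folder / name
        for folder in (root, root / "smplx")
        for name in SMPLX_FILES
        if (folder / name).is_file()
    ]


def check_bodymodel_path(bodymodel_path: str) -> None:
    """Fail early with a readable message instead of deep inside model loading."""
    if not find_smplx_files(bodymodel_path):
        raise FileNotFoundError(
            f"No SMPL-X model files found under {bodymodel_path!r}; "
            "check the value passed to --bodymodel-path."
        )
```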

🚀 3. Quick Demo

- Pre-trained Model: Grab the pre-trained DPoser model from here and place it in `./pretrained_models`.

- Sample Data: Check out `./examples` for some sample files, including 500 body poses from the AMASS dataset and a motion sequence fragment.

- Explore DPoser Tasks:

🎭 Pose Generation

Generate poses and save rendered images:

python -m run.demo --config configs/subvp/amass_scorefc_continuous.py --task generation

For videos of the generation process:

python -m run.demo --config configs/subvp/amass_scorefc_continuous.py --task generation_process

🧩 Pose Completion
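In the completion task, the joints of the selected `--part` (e.g. `right_arm` or `legs`) are treated as missing and filled in using the prior; `--hypo` presumably sets how many hypotheses are sampled. As an illustration of the masking step only, here is a sketch for a flat 63-D body pose (21 body joints × 3 axis-angle values, with the pelvis rotation stored separately, matching the `pose_body`/`root_orient` split of the AMASS data). The `PARTS` groupings and helper names are hypothetical and may not match how DPoser defines its parts.

```python
from typing import Dict, List

# SMPL/SMPL-X body joints 1-21 in pose_body order (joint 0, the pelvis, is root_orient).
BODY_JOINTS = [
    "left_hip", "right_hip", "spine1", "left_knee", "right_knee", "spine2",
    "left_ankle", "right_ankle", "spine3", "left_foot", "right_foot", "neck",
    "left_collar", "right_collar", "head", "left_shoulder", "right_shoulder",
    "left_elbow", "right_elbow", "left_wrist", "right_wrist",
]

# Hypothetical part definitions for illustration; DPoser's own may differ.
PARTS: Dict[str, List[str]] = {
    "right_arm": ["right_collar", "right_shoulder", "right_elbow", "right_wrist"],
    "legs": ["left_hip", "right_hip", "left_knee", "right_knee",
             "left_ankle", "right_ankle", "left_foot", "right_foot"],
}


def observation_mask(part: str) -> List[float]:
    """63-element mask: 1.0 for observed values, 0.0 for values to complete."""
    hidden = {BODY_JOINTS.index(name) for name in PARTS[part]}
    mask: List[float] = []
    for joint in range(len(BODY_JOINTS)):
        # Three axis-angle components per joint share the joint's flag.
        mask.extend([0.0 if joint in hidden else 1.0] * 3)
    return mask


def mask_pose(pose_body: List[float], mask: List[float]) -> List[float]:
    """Zero out the unobserved entries of a flat 63-D body pose."""
    return [value * keep for value, keep in zip(pose_body, mask)]


# Usage: hide the right arm of a dummy pose.
masked = mask_pose([0.1] * 63, observation_mask("right_arm"))
```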

Complete poses and view results:

python -m run.demo --config configs/subvp/amass_scorefc_continuous.py --task completion --hypo 10 --part right_arm --view right_half

To try other solvers, such as ScoreSDE, with the DPoser prior:

python -m run.demo --config configs/subvp/amass_scorefc_continuous.py --task completion2 --hypo 10 --part right_arm --view right_half

🌪️ Motion Denoising

Summarize visual results in a video:

python -m run.motion_denoising --config configs/subvp/amass_scorefc_continuous.py --file-path ./examples/Gestures_3_poses_batch005.npz --noise-std 0.04

🕺 Human Mesh Recovery

Fit the body model to 2D keypoints detected by OpenPose and save the fitting results:

python -m run.demo_fit --img=./examples/image_00077.jpg --openpose=./examples/image_00077_keypoints.json

🧑‍🔬 4. Train DPoser Yourself

Dataset Preparation

To train DPoser, we use the AMASS dataset. You have two options for dataset preparation:

- Option 1: Process the Dataset Yourself

  Download the AMASS dataset and process it with the following script:

  python -m lib.data.script

  Make sure the processed data follows this directory structure:

  ${ROOT}
  |-- data
  |   |-- AMASS
  |       |-- amass_processed
  |           |-- version1
  |               |-- test
  |               |   |-- betas.pt
  |               |   |-- pose_body.pt
  |               |   |-- root_orient.pt
  |               |-- train
  |               |-- valid

- Option 2: Use Preprocessed Data

  Alternatively, download the processed data directly from Google Drive.
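Before training, a quick check that the processed files are where the training code expects them can save a failed run. This is a minimal sketch based on the directory tree for Option 1; `expected_files` and `missing_files` are hypothetical helpers, and because the tree only expands `test/`, the sketch assumes `train/` and `valid/` contain the same three tensor files.

```python
from pathlib import Path
from typing import List, Union

# From the directory tree; train/ and valid/ are assumed to mirror test/.
SPLITS = ("train", "valid", "test")
TENSOR_FILES = ("betas.pt", "pose_body.pt", "root_orient.pt")


def expected_files(root: Union[str, Path]) -> List[Path]:
    """All tensor files the processed AMASS layout should contain."""
    base = Path(root) / "data" / "AMASS" / "amass_processed" / "version1"
    return [base / split / name for split in SPLITS for name in TENSOR_FILES]


def missing_files(root: Union[str, Path]) -> List[Path]:
    """Expected files that do not exist on disk."""
    return [path for path in expected_files(root) if not path.is_file()]


if __name__ == "__main__":
    # Run from ${ROOT}; prints nothing when the layout is complete.
    for path in missing_files("."):
        print("missing:", path)
```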

🏋️‍♂️ Start Training

After setting up your dataset, begin training DPoser:

python -m run.train --config configs/subvp/amass_scorefc_continuous.py --name reproduce

This command starts training. Checkpoints, TensorBoard logs, and validation visualizations are stored under `./output/amass_amass`.
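The config path `configs/subvp/...` suggests that DPoser uses the sub-VP SDE from ScoreSDE (acknowledged below). As a rough sketch of what that means during training, assuming ScoreSDE's published defaults `beta_min = 0.1` and `beta_max = 20` (DPoser's config may use other values): a clean pose `x0` is perturbed to `x_t = mean_coeff(t) * x0 + std(t) * z` with standard-normal `z`, and a denoising-score-matching network learns to predict the score `-z / std(t)`. This illustrates the generic technique, not DPoser's training code.

```python
import math
from typing import List, Tuple

# Assumed ScoreSDE defaults; the actual values live in the DPoser config.
BETA_MIN = 0.1
BETA_MAX = 20.0


def subvp_marginal(t: float, beta_min: float = BETA_MIN,
                   beta_max: float = BETA_MAX) -> Tuple[float, float]:
    """Return (mean_coeff, std) of the sub-VP perturbation kernel at time t in [0, 1]."""
    log_mean_coeff = -0.25 * t ** 2 * (beta_max - beta_min) - 0.5 * t * beta_min
    mean_coeff = math.exp(log_mean_coeff)
    std = 1.0 - math.exp(2.0 * log_mean_coeff)  # sub-VP: std, not variance
    return mean_coeff, std


def perturb(x0: List[float], t: float, noise: List[float]) -> List[float]:
    """x_t = mean_coeff * x0 + std * z for a flat pose vector and given noise z."""
    mean_coeff, std = subvp_marginal(t)
    return [mean_coeff * x + std * z for x, z in zip(x0, noise)]


def dsm_target(noise: List[float], std: float) -> List[float]:
    """Score of the Gaussian kernel, -z / std; t is sampled away from 0 so std > 0."""
    return [-z / std for z in noise]
```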

🧪 5. Test DPoser

Pose Generation

Quantitatively evaluate 500 generated samples using this script:

python -m run.demo --config configs/subvp/amass_scorefc_continuous.py --task generation --metrics

This uses the SMPL body model to compute APD (average pairwise distance) and SI (self-intersection), following Pose-NDF.
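APD measures diversity: larger values mean the generated poses differ more from one another. Below is a minimal sketch of one common formulation, assuming each sample is given as a list of 3D joint positions (e.g. from SMPL forward kinematics): average the per-joint Euclidean distance over every pair of samples. Pose-NDF's exact normalization and units may differ, so rely on `--metrics` for reportable numbers.

```python
import itertools
import math
from typing import List, Sequence, Tuple

Joint = Tuple[float, float, float]
Pose = Sequence[Joint]


def mean_joint_distance(a: Pose, b: Pose) -> float:
    """Average Euclidean distance between corresponding joints of two poses."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def average_pairwise_distance(samples: List[Pose]) -> float:
    """Mean of mean_joint_distance over all unordered pairs of distinct samples."""
    pairs = list(itertools.combinations(samples, 2))
    if not pairs:
        raise ValueError("APD needs at least two samples")
    return sum(mean_joint_distance(a, b) for a, b in pairs) / len(pairs)
```

Since the distance is symmetric, averaging over unordered pairs gives the same value as averaging over ordered pairs while doing half the work.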

Pose Completion

For testing on the AMASS dataset (make sure you've completed the dataset preparation in Section 4):

python -m run.completion --config configs/subvp/amass_scorefc_continuous.py --gpus 1 --hypo 10 --sample 10 --part legs

Motion Denoising

To evaluate motion denoising on the AMASS dataset, use the following steps:

- Split the HumanEva part of the AMASS dataset into fragments using this script:

  python lib/dataset/HumanEva.py --input-dir path_to_HumanEva --output-dir ./data/HumanEva_60frame --seq-len 60

- Then, run this script to evaluate the motion denoising task on all sub-sequences in `--data-dir`:

  python -m run.motion_denoising --config configs/subvp/amass_scorefc_continuous.py --data-dir ./data/HumanEva_60frame --noise-std 0.04
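The two evaluation steps can be sketched as follows: cut each sequence into fixed-length windows (`--seq-len 60`) and corrupt each window with zero-mean Gaussian noise (`--noise-std 0.04`). This is an illustrative sketch, not the code of `lib/dataset/HumanEva.py` or `run.motion_denoising`. It assumes non-overlapping windows with leftover frames dropped, and it treats each frame as a flat list of numbers; which quantity the script actually perturbs (joint positions or pose parameters) is determined by the script itself.

```python
import random
from typing import List, Optional, Sequence


def split_into_fragments(frames: Sequence, seq_len: int = 60) -> List[list]:
    """Cut a sequence into consecutive, non-overlapping windows of seq_len frames.

    Trailing frames that do not fill a whole window are dropped (an assumption).
    """
    return [list(frames[i:i + seq_len])
            for i in range(0, len(frames) - seq_len + 1, seq_len)]


def add_gaussian_noise(fragment: List[List[float]], noise_std: float = 0.04,
                       seed: Optional[int] = None) -> List[List[float]]:
    """Add i.i.d. zero-mean Gaussian noise to every scalar of every frame."""
    rng = random.Random(seed)  # seeded for reproducible corruption
    return [[v + rng.gauss(0.0, noise_std) for v in frame] for frame in fragment]
```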

Human Mesh Recovery

To test on the EHF dataset, follow these steps:

- First, download the EHF dataset from SMPLX.

- Then, set `--data-dir` to your EHF folder and run this script:

  python -m run.fitting --config configs/subvp/amass_scorefc_continuous.py --data-dir path_to_EHF --outdir ./fitting_results
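The quick demo's `--openpose` flag reads 2D keypoints in OpenPose's JSON output format, where each detected person has a flat `pose_keypoints_2d` list of `(x, y, confidence)` triples. The following is a minimal parsing sketch (hypothetical helpers, not DPoser's loader) that is useful for inspecting a keypoint file before fitting. Undetected joints appear with zero confidence and are typically ignored or down-weighted by a fitting loss; the `0.1` threshold is an illustrative choice.

```python
import json
from typing import List, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)


def load_openpose_body(json_text: str, person: int = 0) -> List[Keypoint]:
    """Parse the body keypoints of one person from OpenPose JSON output."""
    data = json.loads(json_text)
    people = data.get("people", [])
    if not people:
        return []  # no detections in this image
    flat = people[person]["pose_keypoints_2d"]
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]


def confident(keypoints: List[Keypoint], threshold: float = 0.1) -> List[int]:
    """Indices of keypoints whose confidence exceeds threshold."""
    return [i for i, (_, _, c) in enumerate(keypoints) if c > threshold]
```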

❓ Troubleshooting

- `RuntimeError: Subtraction, the '-' operator, with a bool tensor is not supported. If you are trying to invert a mask, use the '~' or 'logical_not()' operator instead.`: Solution here.

- `TypeError: startswith first arg must be bytes or a tuple of bytes, not str`: Fix here.
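The `TypeError` above is a generic Python 3 type mismatch: `bytes.startswith` was called with a `str` prefix, which often happens when bytes and text are mixed in code written for Python 2. The linked fix is the authoritative one for this repository; the sketch below only reproduces the error and shows a generic workaround (`startswith_text` and `reproduce_error` are hypothetical helpers).

```python
def startswith_text(value, prefix: str) -> bool:
    """str/bytes-agnostic startswith: encode the prefix when value is bytes."""
    if isinstance(value, bytes):
        return value.startswith(prefix.encode("utf-8"))
    return value.startswith(prefix)


def reproduce_error() -> str:
    """Trigger the TypeError from the troubleshooting list and return its message."""
    try:
        b"SMPLX_NEUTRAL".startswith("SMPLX")  # bytes receiver, str prefix
    except TypeError as err:
        return str(err)
    return ""
```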

🙏 Acknowledgement

Big thanks to ScoreSDE, GFPose, and Hand4Whole for their foundational work and code.

📚 Reference

@article{lu2023dposer,
  title={DPoser: Diffusion Model as Robust 3D Human Pose Prior},
  author={Lu, Junzhe and Lin, Jing and Dou, Hongkun and Zhang, Yulun and Deng, Yue and Wang, Haoqian},
  journal={arXiv preprint arXiv:2312.05541},
  year={2023}
}