Yao Mu* †, Tianxing Chen* , Zanxin Chen* , Shijia Peng*,

Zeyu Gao, Zhiqian Lan, Yude Zou, Lunkai Lin, Zhiqiang Xie, Ping Luo†.

RoboTwin (early version), accepted to ECCV Workshop 2024 (Oral): Webpage | PDF | arXiv

See INSTALLATION.md for installation instructions. It takes about 20 minutes for installation.

Coming Soon

This part will be released soon. We now release 50 demos for each task:

In the project root directory:

mkdir data

cd data

View https://huggingface.co/datasets/YaoMarkMu/robotwin_dataset, download the files and unzip them to data.

Data collection configurations are located in the config folder, corresponding to each task. Here is an explanation of the important parameters:

- render_freq: Set to 0 means no rendering. If you wish to see the rendering, it can be set to 10.

- collect_data: Data collection will only be enabled if set to True.

- camera_w,h: These are the camera parameters, with a total of 4 cameras - two on the wrist and two positioned as top and front views.

- pcd_crop: Determines whether the obtained point cloud data is cropped to remove elements like tables and walls.

- pcd_down_sample_num: The point cloud data is downsampled using the FPS (Farthest Point Sampling) method, set it to 0 to keep the raw point cloud data.

- data_type/endpose: The 6D pose of the end effector, which still has some minor issues.

- data_type/qpos: Represents the joint action.

- observer: Decides whether to save a observer-view photo for easy observation.

See envs/base_task.py, search TODO and you may see the following code, make sure that policy.get_action(obs) will return action sequence (predicted actions).:

actions = model.get_action(obs) # TODO, get actions according to your policy and current obs

You need to modify script/eval_policy.py in the root directory to load your model for evaluation: Search TODO, modify the code to init your policy.

Run the follow command to run your policy in specific task env:

bash script/run_eval_policy.sh ${task_name} ${gpu_id}

The DP3 code can be found in policy/3D-Diffusion-Policy.

Process Data for DP3 training after collecting data (In root directory), and input the task name and the amount of data you want your policy to train with:

python script/pkl2zarr_dp3.py ${task_name} ${number_of_episodes}

Then, move to policy/3D-Diffusion-Policy first, and run the following code to train DP3 :

bash train.sh ${task_name} ${expert_data_num} ${seed} ${gpu_id}

Run the following code to eval DP3 for specific task:

bash eval.sh ${task_name} ${expert_data_num} ${checkpoint_num} ${seed} ${gpu_id}

Current leaderboard:

Here's the revised table with the averages listed at the end:

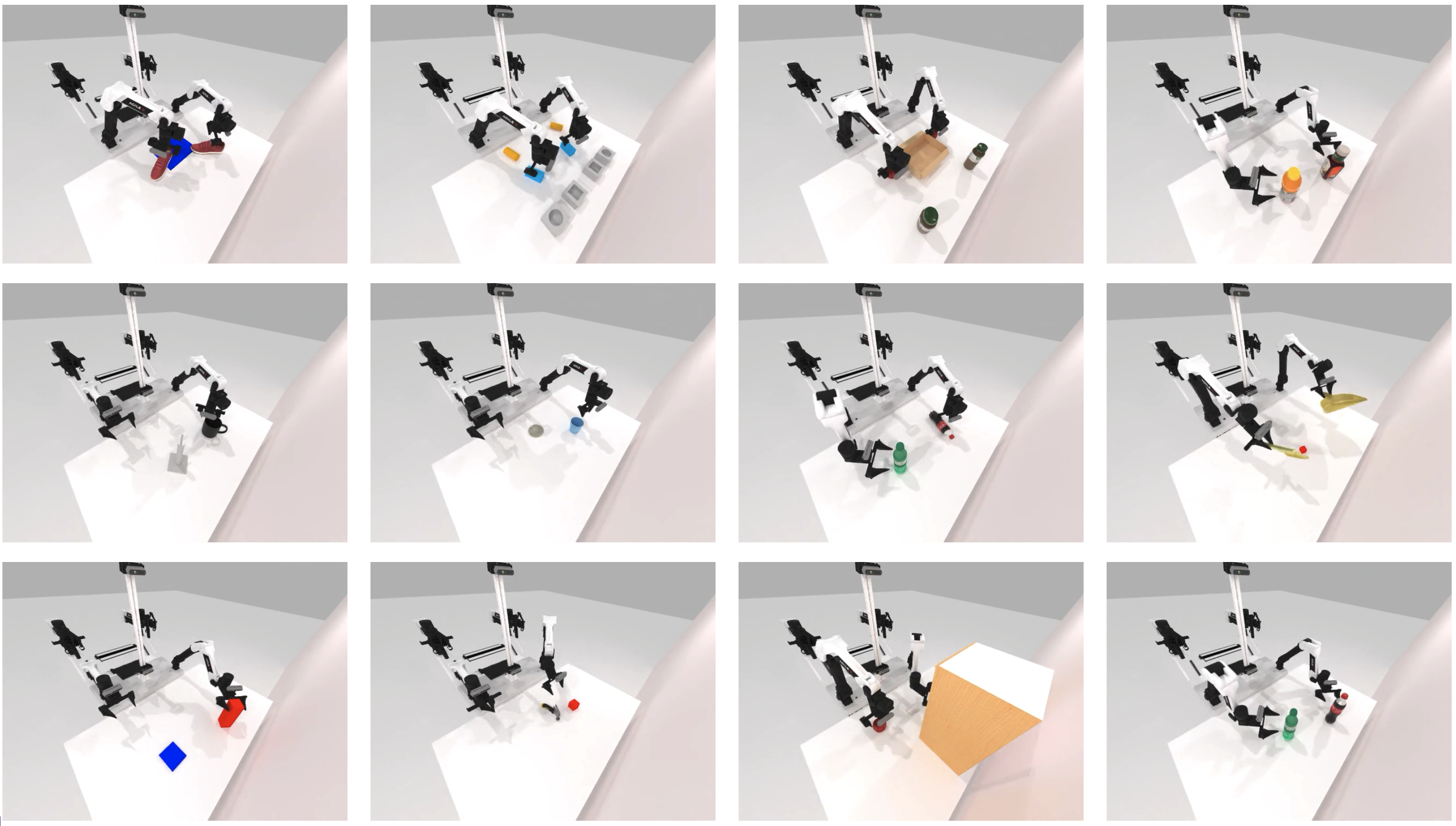

| Task | Algorithm | 10 demos | 20 demos | 50 demos |

|--------------------------------|---------------------|--------------|--------------|--------------|

| Apple Cabinet Storage | DP3 (XYZ) | 41% | 59% | 75% |

| | DP3 (XYZ+RGB) | 22% | 41% | 60% |

| Block Handover | DP3 (XYZ) | 55% | 89% | 70% |

| | DP3 (XYZ+RGB) | 48% | 81% | 94% |

| Blocks Stack (Easy) | DP3 (XYZ) | / | / | / |

| | DP3 (XYZ+RGB) | 0% | 1% | 23% |

| Container Place | DP3 (XYZ) | 34% | 54% | 68% |

| | DP3 (XYZ+RGB) | 18% | 28% | 54% |

| Dual Bottles Pick (Easy) | DP3 (XYZ) | 10% | 48% | 78% |

| | DP3 (XYZ+RGB) | 9% | 41% | 75% |

| Empty Cup Place | DP3 (XYZ) | 3% | 30% | 73% |

| | DP3 (XYZ+RGB) | 7% | 23% | 82% |

| Pick Apple Messy | DP3 (XYZ) | 2% | 2% | 9% |

| | DP3 (XYZ+RGB) | 2% | 3% | 25% |

| Shoes Place | DP3 (XYZ) | 2% | 1% | 12% |

| | DP3 (XYZ+RGB) | 0% | 0% | 5% |

| Block Hammer Beat | DP3 (XYZ) | 37% | 45% | 60% |

| | DP3 (XYZ+RGB) | 36% | 41% | 73% |

| Blocks Stack (Hard) | DP3 (XYZ) | / | / | / |

| | DP3 (XYZ+RGB) | 0% | 0% | 3% |

| Diverse Bottles Pick | DP3 (XYZ) | 3% | 12% | 38% |

| | DP3 (XYZ+RGB) | 0% | 1% | 7% |

| Dual Bottles Pick (Hard) | DP3 (XYZ) | 13% | 29% | 46% |

| | DP3 (XYZ+RGB) | 11% | 26% | 48% |

| Mug Hanging | DP3 (XYZ) | 1% | 9% | 13% |

| | DP3 (XYZ+RGB) | 1% | 2% | 6% |

| Shoe Place | DP3 (XYZ) | 12% | 16% | 54% |

| | DP3 (XYZ+RGB) | 13% | 20% | 35% |

| Average | DP3 (XYZ) | 17.75% | 32.83% | 49.67% |

| | DP3 (XYZ+RGB) | 11.93% | 22.00% | 42.14% |

Deemos Rodin: https://hyperhuman.deemos.com/rodin

If you find you fail to quit the running python process with Crtl + C, just try Ctrl + \.

We found Vulkan is not stable in someoff-screen devices, try reconnecting ssh -X ... if you meet any problem.

Other Common Issues can be found in COMMON_ISSUE

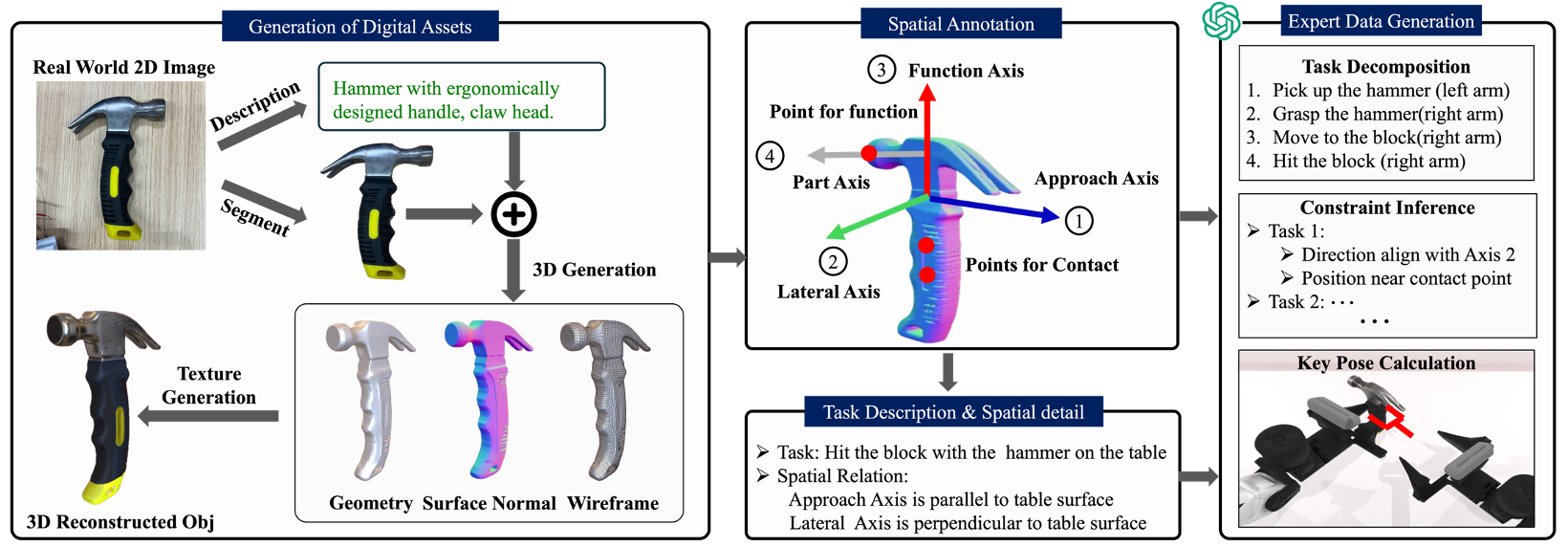

- Task Code Generation Pipeline.

- RoboTwin (Final Version) will be released soon.

- Real Robot Data collected by teleoperation.

- Tasks env (Data Collection).

- More baseline code will be integrated into this repository (RICE, ACT, Diffusion Policy).

If you find our work useful, please consider citing:

RoboTwin: Dual-Arm Robot Benchmark with Generative Digital Twins (early version), accepted to ECCV Workshop 2024 (Oral)

@article{mu2024robotwin,

title={RoboTwin: Dual-Arm Robot Benchmark with Generative Digital Twins (early version)},

author={Mu, Yao and Chen, Tianxing and Peng, Shijia and Chen, Zanxin and Gao, Zeyu and Zou, Yude and Lin, Lunkai and Xie, Zhiqiang and Luo, Ping},

journal={arXiv preprint arXiv:2409.02920},

year={2024}

}

This repository is released under the MIT license. See LICENSE for additional details.