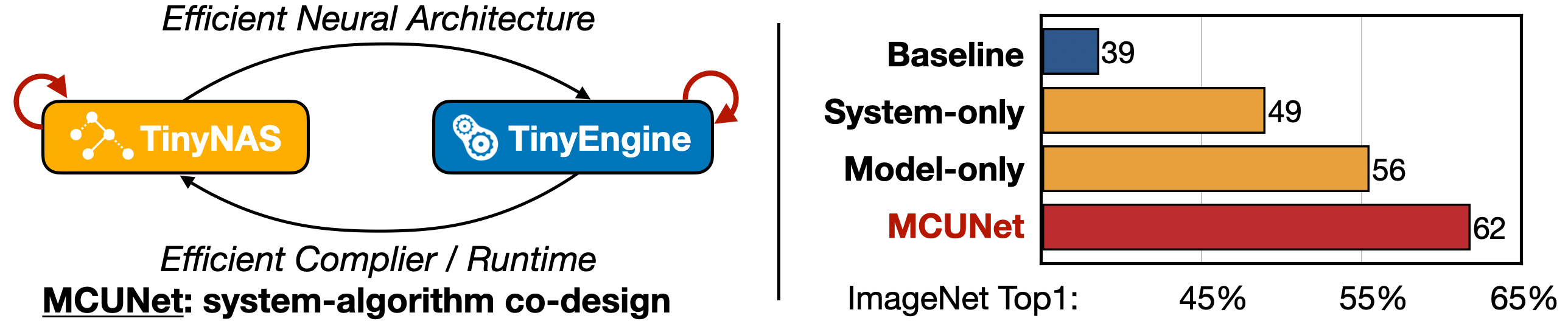

This is the official implementation of TinyEngine, a memory-efficient and high-performance neural network library for microcontrollers. TinyEngine is part of MCUNet, which also includes TinyNAS. MCUNet is a system-algorithm co-design framework for tiny deep learning on microcontrollers. TinyEngine and TinyNAS are co-designed to fit tight memory budgets.

The MCUNet and TinyNAS repo is here.

We will soon release the Tiny Training Engine used in MCUNetV3: On-Device Training Under 256KB Memory. If you are interested in getting updates, please sign up here to get notified!

- (2022/08) Our new course on TinyML and Efficient Deep Learning will be released in September 2022: efficientml.ai.

- (2022/08) We include the demo tutorial for deploying a visual wake word (VWW) model onto microcontrollers.

- (2022/08) We open-source the TinyEngine repo.

- (2022/07) We include the person detection model used in the video demo above in the MCUNet repo.

- (2022/06) We refactor the MCUNet repo as a standalone repo (previous repo: https://github.com/mit-han-lab/tinyml)

- (2021/10) MCUNetV2 is accepted to NeurIPS 2021: https://arxiv.org/abs/2110.15352 !

- (2020/10) MCUNet is accepted to NeurIPS 2020 as spotlight: https://arxiv.org/abs/2007.10319 !

- Our projects are covered by: MIT News, MIT News (v2), WIRED, Morning Brew, Stacey on IoT, Analytics Insight, Techable, etc.

Microcontrollers are low-cost, low-power hardware. They are widely deployed and have wide applications, but the tight memory budget (50,000x smaller than GPUs) makes deep learning deployment difficult.

MCUNet is a system-algorithm co-design framework for tiny deep learning on microcontrollers. It consists of TinyNAS and TinyEngine. They are co-designed to fit the tight memory budgets. With system-algorithm co-design, we can significantly improve the deep learning performance on the same tiny memory budget.

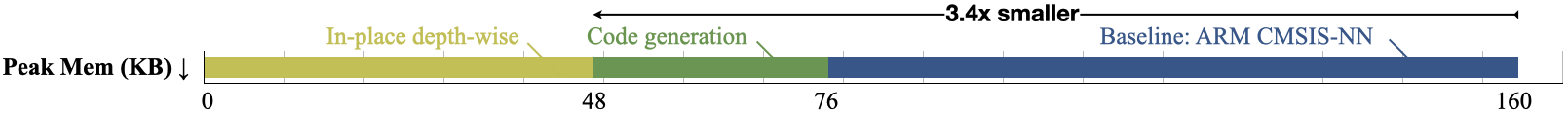

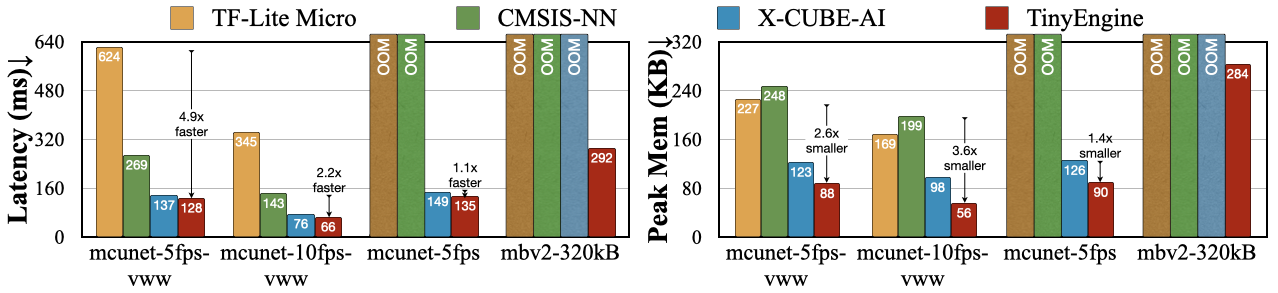

Specifically, TinyEngine is a memory-efficient inference library. TinyEngine adapts the memory scheduling according to the overall network topology rather than layer-wise optimization, reducing memory usage and accelerating the inference. It outperforms existing inference libraries such as TF-Lite Micro from Google, CMSIS-NN from Arm, and X-CUBE-AI from STMicroelectronics.

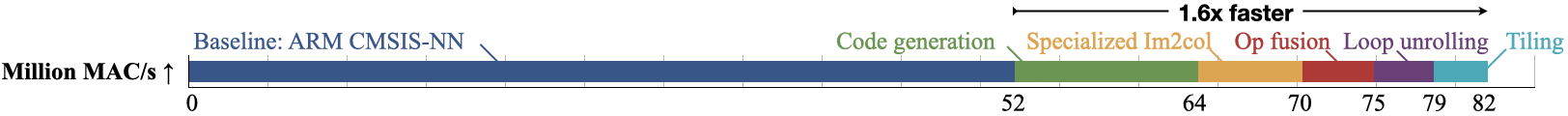

TinyEngine adopts the following optimization techniques to accelerate inference speed and minimize memory footprint.

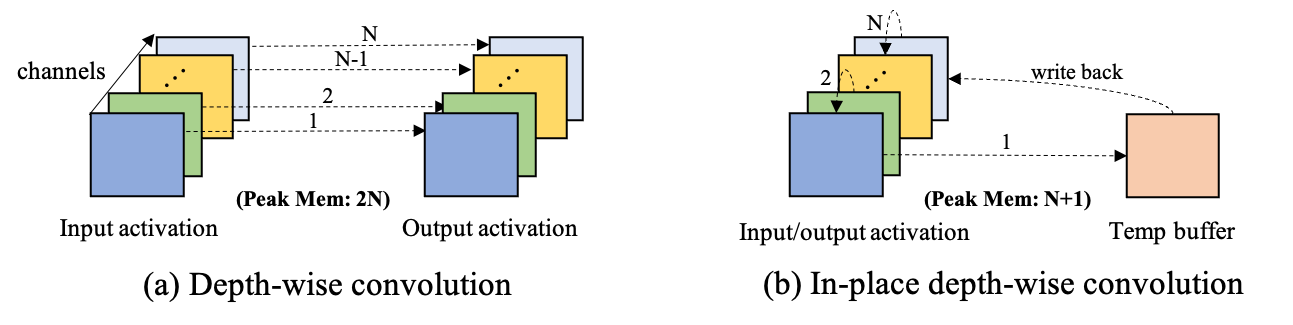

- In-place depth-wise convolution: A unique data placement technique for depth-wise convolution that overwrites input data with intermediate/output data to reduce peak SRAM memory.

- Operator fusion: A method that improves performance by merging one operator into a different operator so that they are executed together without requiring a roundtrip to memory.

- SIMD (Single instruction, multiple data) programming: A computing method that performs the same operation on multiple data points simultaneously.

- HWC to CHW weight format transformation: A weight format transformation technique that increases cache hit ratio for in-place depth-wise convolution.

- Image to Column (Im2col) convolution: An implementation technique of computing convolution operation using general matrix multiplication (GEMM) operations.

- Loop reordering: A loop transformation technique that attempts to optimize a program's execution speed by reordering/interchanging the sequence of loops.

- Loop unrolling: A loop transformation technique that attempts to optimize a program's execution speed at the expense of its binary size, which is an approach known as space-time tradeoff.

- Loop tiling: A loop transformation technique that attempts to reduce memory access latency by partitioning a loop's iteration space into smaller chunks or blocks, so as to help ensure data used in a loop stays in the cache until it is reused.
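To make the first technique in the list concrete, here is a minimal sketch of in-place depth-wise convolution. It is not TinyEngine's actual kernel: it assumes a flat CHW activation buffer, stride 1, zero padding, and a single channel-sized scratch buffer, and the function names (`conv_one_channel`, `depthwise_conv_out_of_place`, `depthwise_conv_in_place`) are hypothetical. The idea it illustrates is that each output channel of a depth-wise convolution depends only on the matching input channel, so a finished channel can overwrite its own input.

```python
def conv_one_channel(src, src_base, kernel, height, width, k, dst, dst_base):
    """Convolve one channel (stride 1, zero padding) from src into dst."""
    pad = k // 2
    for y in range(height):
        for x in range(width):
            acc = 0
            for ky in range(k):
                for kx in range(k):
                    iy, ix = y + ky - pad, x + kx - pad
                    # Positions outside the image contribute zero (zero padding).
                    if 0 <= iy < height and 0 <= ix < width:
                        acc += src[src_base + iy * width + ix] * kernel[ky * k + kx]
            dst[dst_base + y * width + x] = acc


def depthwise_conv_out_of_place(act, kernels, channels, height, width, k=3):
    """Baseline: allocates a second buffer as large as the whole input."""
    plane = height * width
    out = [0] * (channels * plane)
    for c in range(channels):
        conv_one_channel(act, c * plane, kernels[c], height, width, k, out, c * plane)
    return out


def depthwise_conv_in_place(act, kernels, channels, height, width, k=3):
    """In-place variant: allocates only one channel-sized scratch buffer."""
    plane = height * width
    scratch = [0] * plane
    for c in range(channels):
        conv_one_channel(act, c * plane, kernels[c], height, width, k, scratch, 0)
        # No other channel reads channel c's input, so it is safe to overwrite it.
        act[c * plane:(c + 1) * plane] = scratch
    return act


if __name__ == "__main__":
    channels, height, width = 2, 3, 3
    act = list(range(channels * height * width))
    kernels = [[1] * 9, [0, 0, 0, 0, 2, 0, 0, 0, 0]]
    expected = depthwise_conv_out_of_place(act, kernels, channels, height, width)
    result = depthwise_conv_in_place(list(act), kernels, channels, height, width)
    print(result == expected)
```

Under these assumptions the out-of-place version holds `2 * channels * height * width` activation values at its peak, while the in-place version holds `channels * height * width + height * width`, which is where the peak SRAM saving comes from.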

By adopting these optimization techniques, TinyEngine not only speeds up inference but also reduces peak memory, as shown in the figures below.

To sum up, TinyEngine can serve as useful infrastructure for MCU-based AI applications. Compared to existing libraries such as TF-Lite Micro, CMSIS-NN, and X-CUBE-AI, it improves inference speed by 1.1-18.6x and reduces peak memory by 1.3-3.6x.

`code_generator` contains a Python library that is used to compile neural networks into low-level source code (C/C++).

`TinyEngine` contains a C/C++ library that implements operators and performs inference on microcontrollers.

`examples` contains the examples of transforming TFLite models into our TinyEngine models.

`tutorial` contains the demo tutorial of deploying a visual wake word (VWW) model onto microcontrollers.

`assets` contains misc assets.

- Python 3.6+

- STM32CubeIDE 1.5+

First, clone this repository:

    git clone --recursive https://github.com/mit-han-lab/tinyengine.git

(Optional) Using a virtual environment with conda is recommended.

    conda create -n tinyengine python=3.6 pip
    conda activate tinyengine

Install dependencies:

    pip install -r requirements.txt

Install pre-commit hooks to automatically format changes in your code.

    pre-commit install

Please see `tutorial` to learn how to deploy a visual wake word (VWW) model onto microcontrollers by using TinyEngine.

- All the tflite models are from the Model Zoo in the MCUNet repo. Please see the MCUNet repo for how to build the pre-trained int8 quantized models in TF-Lite format.

- All the latency, peak memory (SRAM) and Flash memory usage results are profiled on STM32F746G-DISCO discovery boards.

- Note that we measure newer versions of the libraries in this repo, so the results here may differ from those in the MCUNet papers.

- Since TF-Lite Micro no longer has version numbers, we use the git commit ID to indicate its newer version.

- All the tflite models are compiled with the `-Ofast` optimization level in STM32CubeIDE.

- OOM denotes Out Of Memory.

The latency results:

| net_id | TF-Lite Micro v2.1.0 | TF-Lite Micro @ 713b6ed | CMSIS-NN v2.0.0 | X-CUBE-AI v7.1.0 | TinyEngine |
|---|---|---|---|---|---|
| # mcunet models (VWW) | | | | | |
| mcunet-5fps-vww | 624ms | 2346ms | 269ms | 137ms | 128ms |
| mcunet-10fps-vww | 345ms | 1230ms | 143ms | 76ms | 66ms |
| mcunet-320kB-vww | OOM | OOM | OOM | 657ms | 570ms |
| # mcunet models (ImageNet) | | | | | |
| mcunet-5fps | OOM | OOM | OOM | 149ms | 135ms |
| mcunet-10fps | OOM | OOM | OOM | 84ms | 62ms |
| mcunet-256kB | OOM | OOM | OOM | 839ms | 681ms |
| mcunet-320kB | OOM | OOM | OOM | OOM | 819ms |
| # baseline models | | | | | |
| mbv2-320kB | OOM | OOM | OOM | OOM | 292ms |
| proxyless-320kB | OOM | OOM | OOM | 484ms | 425ms |

The peak memory (SRAM) results:

| net_id | TF-Lite Micro v2.1.0 | TF-Lite Micro @ 713b6ed | CMSIS-NN v2.0.0 | X-CUBE-AI v7.1.0 | TinyEngine |
|---|---|---|---|---|---|
| # mcunet models (VWW) | | | | | |
| mcunet-5fps-vww | 227kB | 220kB | 248kB | 123kB | 88kB |
| mcunet-10fps-vww | 169kB | 163kB | 199kB | 98kB | 56kB |
| mcunet-320kB-vww | OOM | OOM | OOM | 259kB | 162kB |
| # mcunet models (ImageNet) | | | | | |
| mcunet-5fps | OOM | OOM | OOM | 126kB | 90kB |
| mcunet-10fps | OOM | OOM | OOM | 76kB | 45kB |
| mcunet-256kB | OOM | OOM | OOM | 311kB | 200kB |
| mcunet-320kB | OOM | OOM | OOM | OOM | 242kB |
| # baseline models | | | | | |
| mbv2-320kB | OOM | OOM | OOM | OOM | 284kB |
| proxyless-320kB | OOM | OOM | OOM | 312kB | 242kB |
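The 1.1-18.6x speedup and 1.3-3.6x peak-memory reduction quoted in the summary can be re-derived from the latency and peak memory tables above. The sketch below copies those numbers verbatim and takes the ratio of each baseline result to the TinyEngine result on the same model, skipping OOM entries. The data layout and the `improvement_range` helper are illustrative and not part of this repo.

```python
OOM = None  # marks a baseline that ran out of memory

# Each row: (net_id,
#            [TF-Lite Micro v2.1.0, TF-Lite Micro @ 713b6ed,
#             CMSIS-NN v2.0.0, X-CUBE-AI v7.1.0],
#            TinyEngine)
LATENCY_MS = [
    ("mcunet-5fps-vww", [624, 2346, 269, 137], 128),
    ("mcunet-10fps-vww", [345, 1230, 143, 76], 66),
    ("mcunet-320kB-vww", [OOM, OOM, OOM, 657], 570),
    ("mcunet-5fps", [OOM, OOM, OOM, 149], 135),
    ("mcunet-10fps", [OOM, OOM, OOM, 84], 62),
    ("mcunet-256kB", [OOM, OOM, OOM, 839], 681),
    ("mcunet-320kB", [OOM, OOM, OOM, OOM], 819),
    ("mbv2-320kB", [OOM, OOM, OOM, OOM], 292),
    ("proxyless-320kB", [OOM, OOM, OOM, 484], 425),
]

PEAK_SRAM_KB = [
    ("mcunet-5fps-vww", [227, 220, 248, 123], 88),
    ("mcunet-10fps-vww", [169, 163, 199, 98], 56),
    ("mcunet-320kB-vww", [OOM, OOM, OOM, 259], 162),
    ("mcunet-5fps", [OOM, OOM, OOM, 126], 90),
    ("mcunet-10fps", [OOM, OOM, OOM, 76], 45),
    ("mcunet-256kB", [OOM, OOM, OOM, 311], 200),
    ("mcunet-320kB", [OOM, OOM, OOM, OOM], 242),
    ("mbv2-320kB", [OOM, OOM, OOM, OOM], 284),
    ("proxyless-320kB", [OOM, OOM, OOM, 312], 242),
]


def improvement_range(rows):
    """Return (min, max) of baseline / TinyEngine over all non-OOM baselines."""
    ratios = [
        baseline / tinyengine
        for _, baselines, tinyengine in rows
        for baseline in baselines
        if baseline is not OOM
    ]
    return round(min(ratios), 1), round(max(ratios), 1)


if __name__ == "__main__":
    print("latency improvement:", improvement_range(LATENCY_MS))      # (1.1, 18.6)
    print("peak SRAM improvement:", improvement_range(PEAK_SRAM_KB))  # (1.3, 3.6)
```

Models where every baseline is OOM contribute no ratio, so the ranges only cover cases where at least one other library could run the model at all.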

The Flash memory usage results:

| net_id | TF-Lite Micro v2.1.0 | TF-Lite Micro @ 713b6ed | CMSIS-NN v2.0.0 | X-CUBE-AI v7.1.0 | TinyEngine |
|---|---|---|---|---|---|
| # mcunet models (VWW) | | | | | |
| mcunet-5fps-vww | 782kB | 733kB | 743kB | 534kB | 517kB |
| mcunet-10fps-vww | 691kB | 643kB | 653kB | 463kB | 447kB |
| mcunet-320kB-vww | OOM | OOM | OOM | 773kB | 742kB |
| # mcunet models (ImageNet) | | | | | |
| mcunet-5fps | OOM | OOM | OOM | 737kB | 720kB |
| mcunet-10fps | OOM | OOM | OOM | 856kB | 837kB |
| mcunet-256kB | OOM | OOM | OOM | 850kB | 827kB |
| mcunet-320kB | OOM | OOM | OOM | OOM | 835kB |
| # baseline models | | | | | |
| mbv2-320kB | OOM | OOM | OOM | OOM | 828kB |
| proxyless-320kB | OOM | OOM | OOM | 866kB | 835kB |

If you find the project helpful, please consider citing our paper:

    @article{lin2020mcunet,
      title={Mcunet: Tiny deep learning on iot devices},
      author={Lin, Ji and Chen, Wei-Ming and Lin, Yujun and Gan, Chuang and Han, Song},
      journal={Advances in Neural Information Processing Systems},
      volume={33},
      year={2020}
    }

    @inproceedings{lin2021mcunetv2,
      title={MCUNetV2: Memory-Efficient Patch-based Inference for Tiny Deep Learning},
      author={Lin, Ji and Chen, Wei-Ming and Cai, Han and Gan, Chuang and Han, Song},
      booktitle={Annual Conference on Neural Information Processing Systems (NeurIPS)},
      year={2021}
    }

    @inproceedings{lin2022ondevice,
      title={On-Device Training Under 256KB Memory},
      author={Lin, Ji and Zhu, Ligeng and Chen, Wei-Ming and Wang, Wei-Chen and Gan, Chuang and Han, Song},
      booktitle={ArXiv},
      year={2022}
    }

MCUNet: Tiny Deep Learning on IoT Devices (NeurIPS'20)

MCUNetV2: Memory-Efficient Patch-based Inference for Tiny Deep Learning (NeurIPS'21)