- Created a model that predicts the next-day price of XTB stock (R2 = 0.965, RMSE = 0.715).

- Selected features based on domain knowledge of financial markets and research on the company.

- Reviewed and combined several datasets that represent various financial instruments.

- Performed exploratory data analysis for a better understanding of underlying relations between features.

- Preprocessed the data and applied multiple regression algorithms, from Linear Regression to Random Forest Regressor.

- Computed basic metrics and ran cross-validation to verify the scores.

- Python version: 3.8

- Packages: numpy, pandas, matplotlib, sklearn

- Environment: Anaconda, Win64

With more than 15 years of experience, XTB is one of the largest stock exchange-listed FX & CFD brokers in the world. It has offices in over 13 countries, including the UK, Poland, Germany, France and Chile.

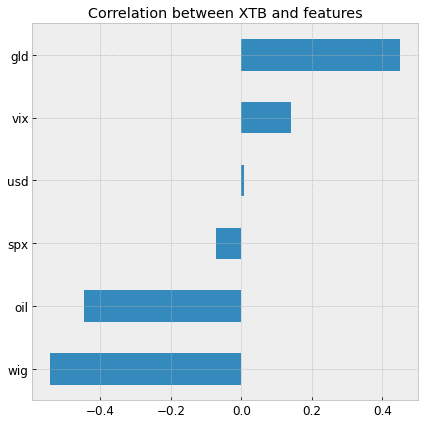

According to the company's financial reports, its main profits come from FX, commodities, and CFD contracts, so the higher the volatility on global financial markets, the better for profits. With that in mind, I chose several key fundamental indicators (indices) and possible underlying products (USD/EUR, gold, oil) that seemed the most promising for my analysis. Because these markets trade in different time zones, some of the series can be treated as leading indicators, which is beneficial for the practical application of such research.

- X-Trade Brokers SA (XTB)

- WIG (WIG)

- S&P 500 U.S. (^SPX)

- CBOE Volatility Index (^VIX)

- U.S. Dollar / Euro 1:1 (USDEUR)

- Gold - COMEX (GC.F)

- Crude Oil WTI - NYMEX (CL.F)
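Combining the instruments listed above into one table could look roughly like the sketch below. It is only an illustration: the `Date` and `Close` column names, the helper functions, and the sample values are assumptions, and using `shift(-1)` to build the next-day target is one possible approach, not necessarily the one used in this project.

```python
import pandas as pd


def combine_close_prices(frames):
    """Join per-instrument closing prices on their shared dates.

    `frames` maps a column name to a DataFrame with 'Date' and 'Close'
    columns (an assumed layout, not necessarily the project's).
    """
    series = {
        name: df.set_index("Date")["Close"] for name, df in frames.items()
    }
    # Concatenating along axis=1 aligns on the date index; the inner join
    # keeps only dates on which every instrument has a quote.
    return pd.concat(series, axis=1, join="inner").sort_index()


def add_next_day_target(combined, price_col, target_col="target"):
    """Add the next day's value of `price_col` as the prediction target."""
    out = combined.copy()
    out[target_col] = out[price_col].shift(-1)
    # The last row has no next-day price, so it is dropped.
    return out.dropna(subset=[target_col])


# Tiny made-up sample, for illustration only.
xtb = pd.DataFrame({
    "Date": pd.to_datetime(["2020-01-02", "2020-01-03", "2020-01-06"]),
    "Close": [4.0, 4.1, 4.2],
})
vix = pd.DataFrame({
    "Date": pd.to_datetime(
        ["2020-01-02", "2020-01-03", "2020-01-06", "2020-01-07"]
    ),
    "Close": [12.5, 14.0, 13.8, 13.9],
})

data = add_next_day_target(
    combine_close_prices({"xtb": xtb, "vix": vix}), price_col="xtb"
)
print(data)
```

The inner join is a deliberate simplification: exchanges have different holidays, so dates missing from any one series are dropped instead of being filled.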

Data cleaning was not needed since the data was clean and well organized.

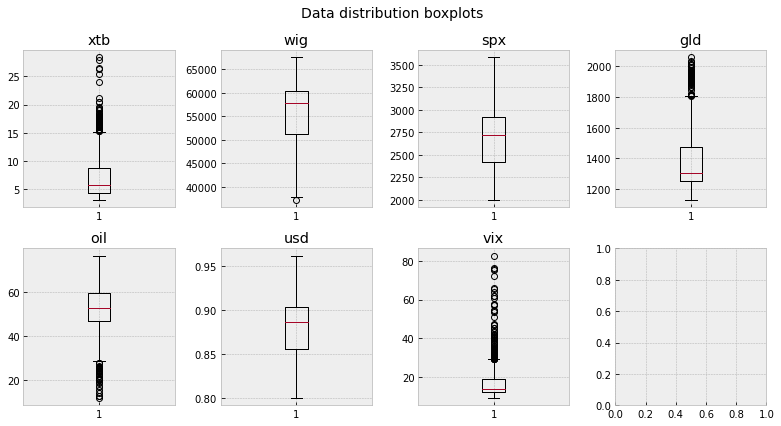

- EDA was an important part of the project and remains a valuable foundation for future model performance improvements.

- The key message from the data is that traditional linear algorithms will have difficulty dealing with such skewed datasets, high variance, and frequent outliers. This is why I also tried tree-based algorithms.

- There are some nonlinear relations between features that should be investigated in the future. Some data transformations will be needed for further model optimization.
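The skewness and outlier observations above can be quantified with a few lines of pandas. The sketch below is illustrative only: the helper name, the 1.5 × IQR outlier rule, and the synthetic columns are assumptions and do not reproduce the project's actual analysis.

```python
import numpy as np
import pandas as pd


def summarize_distribution(df):
    """Return per-column skewness and the share of IQR-rule outliers."""
    q1 = df.quantile(0.25)
    q3 = df.quantile(0.75)
    iqr = q3 - q1
    # A value counts as an outlier when it lies more than 1.5 * IQR
    # outside the interquartile range (a common rule of thumb).
    outside = (df < q1 - 1.5 * iqr) | (df > q3 + 1.5 * iqr)
    return pd.DataFrame({"skew": df.skew(), "outlier_share": outside.mean()})


# Synthetic columns standing in for real features: one roughly symmetric,
# one heavily right-skewed.
rng = np.random.default_rng(0)
sample = pd.DataFrame({
    "symmetric": rng.normal(size=1000),
    "right_skewed": rng.lognormal(size=1000),
})

summary = summarize_distribution(sample)
print(summary)
```

Columns with a large absolute skew or a high outlier share are natural candidates for the data transformations mentioned above.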

Below are a few highlights from the analysis.

- The data were scaled using the sklearn StandardScaler and split into training and testing datasets (60/40).

- I tried different models and evaluated them using R2 and RMSE.

- While I suspected that regressors such as Decision Tree and Random Forest would be a good fit, I also tested Linear Regression and, out of pure curiosity, the Huber and Ridge regressors.
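The steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's actual code: the data are synthetic, all models use mostly default hyperparameters, the split is shuffled with a fixed seed, and the scaler is fitted on the training part only (a choice made here to avoid leaking test statistics). The short model names mirror the abbreviations used in the results table.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import HuberRegressor, LinearRegression, Ridge
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeRegressor


def evaluate_models(X, y, test_size=0.4, seed=0):
    """Fit several regressors on a 60/40 split and report R2 and RMSE."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=test_size, random_state=seed
    )
    # Fit the scaler on the training part only, then apply it to both parts.
    scaler = StandardScaler().fit(X_train)
    X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)

    models = {
        "lr": LinearRegression(),
        "rge": Ridge(),
        "hr": HuberRegressor(max_iter=1000),
        "dtr": DecisionTreeRegressor(random_state=seed),
        "rfr": RandomForestRegressor(n_estimators=100, random_state=seed),
    }
    results = {}
    for name, model in models.items():
        pred = model.fit(X_train, y_train).predict(X_test)
        results[name] = {
            "r2": r2_score(y_test, pred),
            # RMSE is the square root of the mean squared error.
            "rmse": float(np.sqrt(mean_squared_error(y_test, pred))),
        }
    return results


# Synthetic nonlinear data, for illustration only.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 3))
y = X[:, 0] ** 2 + 0.1 * rng.normal(size=300)

results = evaluate_models(X, y)
for name, scores in results.items():
    print(f"{name}: R2={scores['r2']:.3f}, RMSE={scores['rmse']:.3f}")
```

For time-ordered price data, a chronological split (`shuffle=False` in `train_test_split`) may be more appropriate than a shuffled one; which variant the project used is not stated here.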

The Random Forest Regressor (rfr) outperformed the other approaches on the test and validation data.

| Model | R2 | RMSE |
|---|---|---|
| rfr | 0.965555 | 0.712411 |
| dtr | 0.942541 | 0.920118 |
| hr | 0.610065 | 2.39696 |
| rge | 0.609518 | 2.39864 |
| lr | 0.608597 | 2.40147 |
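Cross-validation of the scores could be done along the lines of the sketch below. The 5-fold setup, the pipeline, the helper name, and the synthetic data are assumptions made for illustration; the project's actual validation scheme may differ.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import cross_validate
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def cross_validated_scores(X, y, folds=5, seed=0):
    """Return per-fold R2 and RMSE for a scaled random forest."""
    # Putting the scaler inside the pipeline means it is refitted on the
    # training part of every fold.
    model = make_pipeline(
        StandardScaler(),
        RandomForestRegressor(n_estimators=50, random_state=seed),
    )
    cv = cross_validate(
        model, X, y, cv=folds,
        scoring=("r2", "neg_root_mean_squared_error"),
    )
    return {
        "r2": cv["test_r2"],
        # scikit-learn reports the negated RMSE, so flip the sign back.
        "rmse": -cv["test_neg_root_mean_squared_error"],
    }


# Synthetic nonlinear data, for illustration only.
rng = np.random.default_rng(1)
X_cv = rng.normal(size=(200, 3))
y_cv = X_cv[:, 0] ** 2 + 0.1 * rng.normal(size=200)

cv_scores = cross_validated_scores(X_cv, y_cv)
print("mean R2:", cv_scores["r2"].mean())
print("mean RMSE:", cv_scores["rmse"].mean())
```

An integer `cv` gives unshuffled K-fold splits for regressors; for time series, `sklearn.model_selection.TimeSeriesSplit` is an alternative that never trains on data from after the validation fold.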