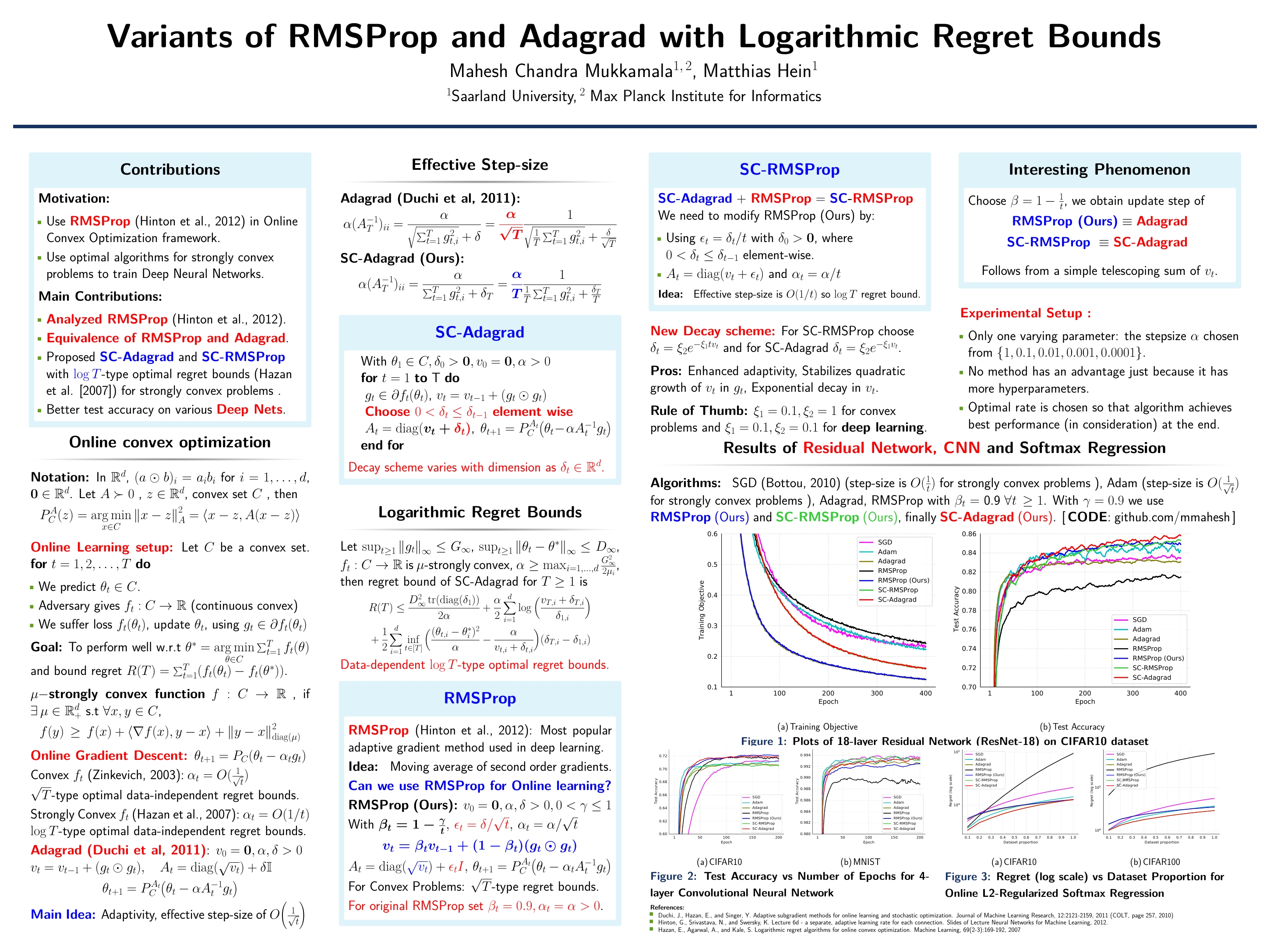

Keras implementation of the SC-Adagrad, SC-RMSProp and RMSProp algorithms proposed here.

A short version, accepted at ICML 2017, can be found here.

I wrote a blog post/tutorial here describing Adagrad, RMSProp, Adam, SC-Adagrad and SC-RMSProp in simple terms, to make the gist of the algorithms easy to understand.

Suppose you have built a deep network in Keras and now want to train it with one of the algorithms above. Copy the file "new_optimizers.py" into your repository. Then, in the file where the model is created and compiled, add the following:

```python
from new_optimizers import *

# For example, to use SC-Adagrad,
# create the optimizer object as follows.
sc_adagrad = SC_Adagrad()

# Similarly for SC-RMSProp and RMSProp (Ours).
sc_rmsprop = SC_RMSProp()
rmsprop_variant = RMSProp_variant()
```

Then, in the code where you compile your Keras model, set optimizer=sc_adagrad. You can do the same for the SC-RMSProp and RMSProp (Ours) optimizers.
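To make the compile step concrete, here is a minimal sketch. The function name `compile_with_sc_adagrad`, the two-layer network, the layer sizes, and the loss/metrics are all made up for illustration and are not part of this repository; only `SC_Adagrad` and "new_optimizers.py" come from the instructions above. It also assumes a Keras version that "new_optimizers.py" is compatible with.

```python
def compile_with_sc_adagrad(input_dim=784, num_classes=10):
    """Build a small example classifier and compile it with SC-Adagrad.

    The architecture and default sizes are placeholders for illustration;
    substitute your own model.
    """
    # Imported inside the function so this module can be loaded even
    # before new_optimizers.py has been copied into the repository.
    from keras.models import Sequential
    from keras.layers import Dense
    from new_optimizers import SC_Adagrad

    model = Sequential()
    model.add(Dense(128, activation="relu", input_shape=(input_dim,)))
    model.add(Dense(num_classes, activation="softmax"))

    # Pass the optimizer object (not a string) to compile().
    model.compile(
        optimizer=SC_Adagrad(),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `model = compile_with_sc_adagrad()` and then `model.fit(...)` on your data trains as usual. Swapping in `SC_RMSProp()` or `RMSProp_variant()` should only require changing the `optimizer` argument.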