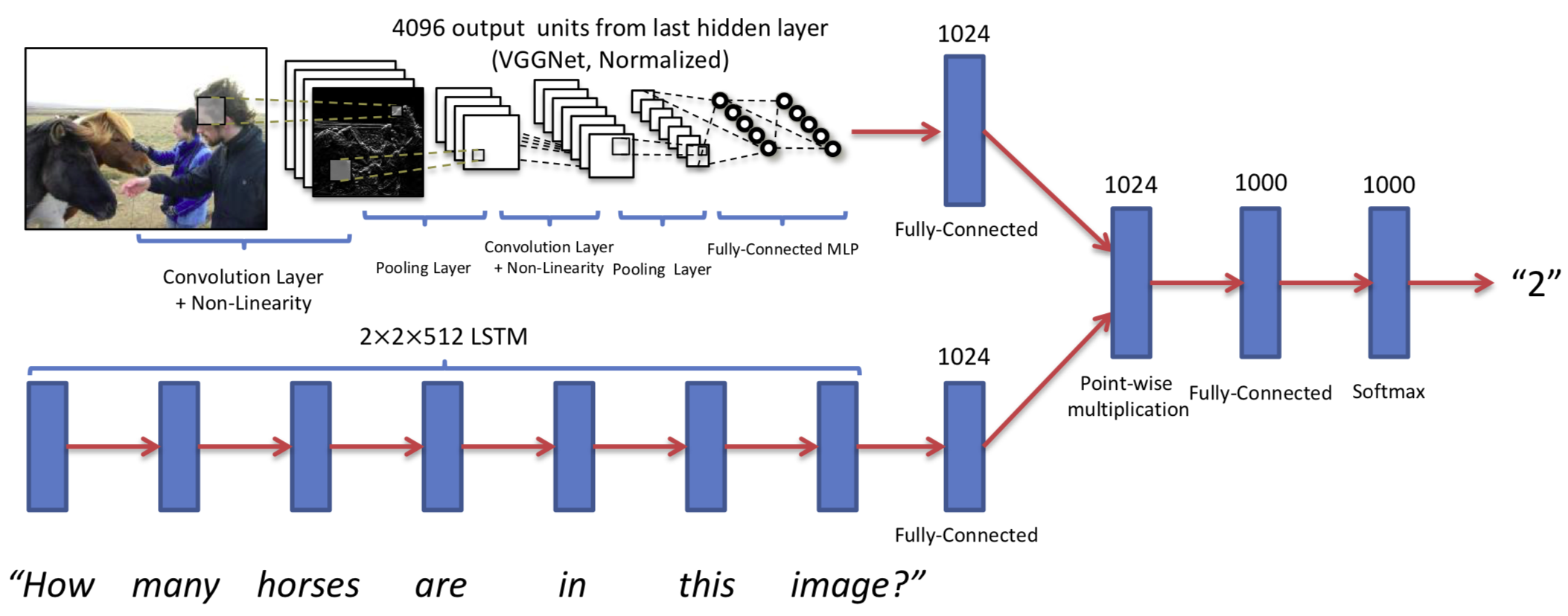

PyTorch implementation of the paper "VQA: Visual Question Answering" (https://arxiv.org/pdf/1505.00468.pdf).

$ git clone https://github.com/tbmoon/basic_vqa.git

2. Download and unzip the dataset from the official VQA site: https://visualqa.org/download.html.

*** MICHAEL: Cheng skip this step

$ cd basic_vqa/utils

$ chmod +x download_and_unzip_datasets.csh

$ ./download_and_unzip_datasets.csh

*** MICHAEL: Cheng skip this step

$ python resize_images.py --input_dir='../../VQA/datasets/Images' --output_dir='../../VQA/datasets/Resized_Images'

*** MICHAEL

$ python make_vacabs_for_questions_answers.py --input_dir='../../VQA/datasets'

$ python build_vqa_inputs.py --input_dir='../../VQA/datasets' --output_dir='../datasets'

$ cd ..
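To make the preprocessing commands above more concrete, here is a minimal sketch of what building question and answer vocabularies and encoding a question as indices can look like. It is not the code in `make_vacabs_for_questions_answers.py` or `build_vqa_inputs.py`; the function names, the `<pad>`/`<unk>` tokens, and the tokenization rule are all assumptions made for illustration.

```python
import re
from collections import Counter

# Hypothetical tokenizer: lowercase, then keep runs of letters and digits.
_TOKEN_RE = re.compile(r"[a-z0-9]+")


def tokenize(sentence):
    """Split a sentence into lowercase alphanumeric tokens."""
    return _TOKEN_RE.findall(sentence.lower())


def build_question_vocab(questions, specials=("<pad>", "<unk>")):
    """Return special tokens followed by all question words, sorted alphabetically."""
    words = set()
    for question in questions:
        words.update(tokenize(question))
    return list(specials) + sorted(words)


def build_answer_vocab(answers, top_k, unk="<unk>"):
    """Return the unknown token followed by the top_k most frequent answers."""
    counts = Counter(a.lower().strip() for a in answers)
    # Sort by descending frequency; break ties alphabetically for determinism.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [unk] + [answer for answer, _ in ranked[:top_k]]


def encode(sentence, word2idx, unk="<unk>"):
    """Map each token to its index, falling back to the unknown token."""
    return [word2idx.get(token, word2idx[unk]) for token in tokenize(sentence)]


if __name__ == "__main__":
    questions = ["What color is the cat?", "Is the cat asleep?"]
    vocab = build_question_vocab(questions)
    word2idx = {word: idx for idx, word in enumerate(vocab)}
    print(vocab)
    print(encode("Is the dog asleep?", word2idx))
```

Words that never appeared in the training questions (here `dog`) map to the `<unk>` index, which is why the unknown token is reserved before any real word.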

$ python train.py

- Comparison Result

| Model | Metric | Dataset | Accuracy | Source |
|---|---|---|---|---|
| Paper Model | Open-Ended | VQA v2 | 54.08 | VQA Challenge |
| My Model | Multiple Choice | VQA v2 | 54.72 | |
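For context on the Accuracy column: the VQA paper scores a predicted answer as min(n / 3, 1), where n is the number of human annotators who gave that same answer. The sketch below implements that simplified formula. Whether `train.py` in this repository computes accuracy exactly this way is an assumption not confirmed here, and the function names are hypothetical.

```python
def vqa_accuracy(predicted, human_answers):
    """Score one prediction: full credit once at least 3 annotators agree with it."""
    matches = sum(1 for answer in human_answers if answer == predicted)
    return min(matches / 3.0, 1.0)


def mean_accuracy(predictions, all_human_answers):
    """Average per-question scores and report the result as a percentage."""
    scores = [
        vqa_accuracy(pred, answers)
        for pred, answers in zip(predictions, all_human_answers)
    ]
    return 100.0 * sum(scores) / len(scores)


if __name__ == "__main__":
    # Ten annotator answers for a single question (illustrative data only).
    annotators = ["yes"] * 2 + ["no"] * 8
    print(vqa_accuracy("yes", annotators))
    print(vqa_accuracy("no", annotators))
```

Note that the two rows in the table use different task settings (Open-Ended and Multiple Choice), so the two numbers are not a like-for-like comparison.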

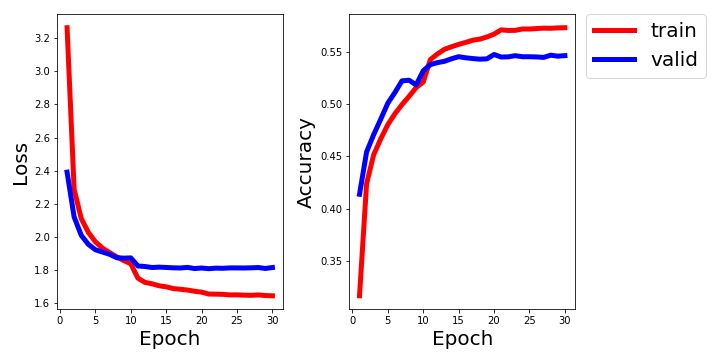

- Loss and accuracy on the VQA v2 dataset

- Paper implementation
  - Paper: VQA: Visual Question Answering
  - URL: https://arxiv.org/pdf/1505.00468.pdf
- PyTorch tutorial
- Preprocessing
  - TensorFlow implementation of N2NMN
  - GitHub: https://github.com/ronghanghu/n2nmn