This is a PyTorch implementation of the following paper:

GLASS: Global to Local Attention for Scene-Text Spotting, ECCV 2022.

Roi Ronen*, Shahar Tsiper*, Oron Anschel, Inbal Lavi, Amir Markovitz and R. Manmatha.

Paper | Pretrained Models | Citation | Demo

Abstract:

In recent years, the dominant paradigm for text spotting is to combine the tasks of text detection and recognition into a single end-to-end framework.

Under this paradigm, both tasks are accomplished by operating over a shared global feature map extracted from the input image.

Among the main challenges end-to-end approaches face is the performance degradation when recognizing text across scale variations (smaller or larger text), and arbitrary word rotation angles.

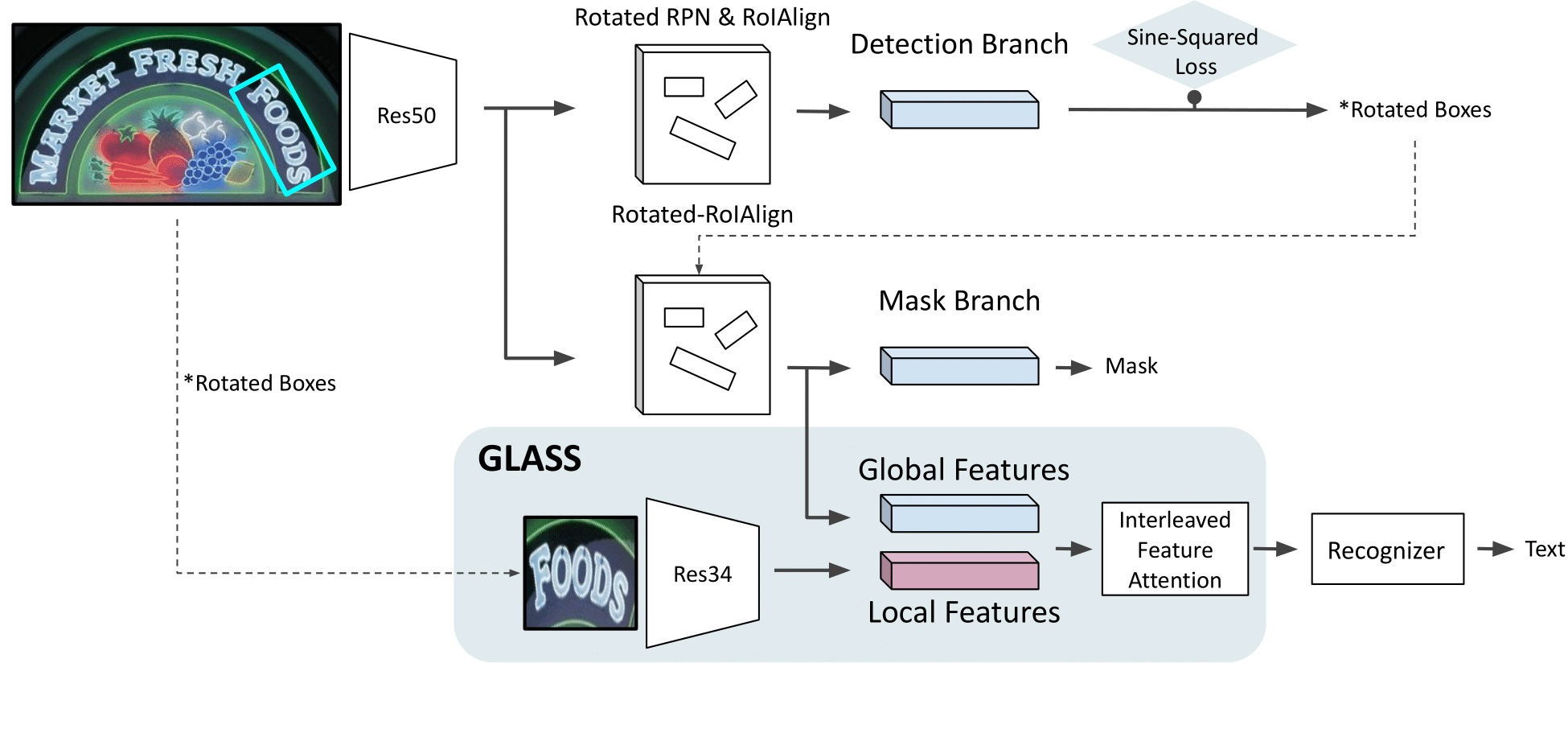

In this work, we address these challenges by proposing a novel global-to-local attention mechanism for text spotting, termed GLASS, that fuses together global and local features.

The global features are extracted from the shared backbone, preserving contextual information from the entire image, while the local features are computed individually on resized, high resolution rotated word crops.

The information extracted from the local crops alleviates much of the inherent difficulties with scale and word rotation.

We show a performance analysis across scales and angles, highlighting improvement over scale and angle extremities.

In addition, we introduce a periodic, orientation-aware loss term supervising the detection task, and show its contribution on both detection and recognition performance across all angles.

Finally, we show that GLASS is agnostic to architecture choice, and apply it to other leading text spotting algorithms, improving their text spotting performance.

Our method achieves state-of-the-art results on multiple benchmarks, including the newly released TextOCR.
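To make the idea of a periodic, orientation-aware loss term more concrete, here is a minimal sketch. It is an illustration only and is not the loss defined in the paper: the function name `periodic_angle_loss`, the cosine form, and the `period_deg` parameter are all assumptions made for this example. What it shows is that a periodic penalty is insensitive to angle wrap-around, whereas a plain absolute difference between angles is not.

```python
import math


def periodic_angle_loss(pred_deg, target_deg, period_deg=360.0):
    """Hypothetical periodic angle penalty with values in [0, 1].

    The penalty is 0 when the two angles differ by a whole number of
    periods and 1 when they differ by half a period.
    """
    # Rescale the angle difference so that one period maps to a full turn
    delta = math.radians(pred_deg - target_deg) * (360.0 / period_deg)
    return 0.5 * (1.0 - math.cos(delta))


# 181 degrees and -179 degrees describe the same orientation, so the
# periodic penalty is (numerically) zero, while |181 - (-179)| is 360.
wrapped = periodic_angle_loss(181.0, -179.0)

# Opposite orientations receive the maximum penalty with a 360 degree period.
opposite = periodic_angle_loss(90.0, -90.0)
```

Setting `period_deg=180.0` would instead treat a box and its half-turn rotation as identical, which may or may not be desirable depending on whether upside-down text should be distinguished.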

Result on Total-Text test dataset:

Compilation of this package requires the Detectron2==0.6 package. Installation has been tested on Linux using Anaconda for package management.
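Because the requirement is pinned to a specific Detectron2 version, a quick check such as the one below can be run once installation is complete. This is a sketch, not part of the repository: the helper name `has_required_version` is hypothetical, and it assumes the package is registered under the distribution name `detectron2`.

```python
from importlib import metadata


def has_required_version(package, required):
    """Return True if `package` is installed with exactly the `required` version."""
    try:
        installed = metadata.version(package)
    except metadata.PackageNotFoundError:
        return False
    # Ignore local build tags such as "+cu113" when comparing versions
    return installed.split("+")[0] == required


if __name__ == "__main__":
    ok = has_required_version("detectron2", "0.6")
    print("Detectron2 0.6 found" if ok else "Detectron2 0.6 not found")
```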

Clone the repository onto your local machine

git clone https://github.com/amazon-research/glass-text-spotting

cd glass-text-spotting

Start a clean virtual environment and set up environment variables

conda create -n glass python=3.8

conda activate glass

Install required packages

pip install -e .

A Lab Collab demo is available for running inference.

You can check out all of our fine-tuned models and configs here:

- Pretrained + fine-tuned on ICDAR'15: IC'15 Model, IC'15 config

- Pretrained + fine-tuned on TotalText: TotalText Model, TotalText config

- Pretrained + fine-tuned on all datasets, inc. TextOCR: TextOCR Model, TextOCR config

All of these models can be run together with the default pre-training config, or invoked using the demo above.

Pretraining on the SynthText dataset

The --datasets flag takes the dataset configuration, --config takes the architecture config, and --output takes the output path for the training artifacts.

python ./tools/train_glass.py \
--datasets ./data_configs/data_config_pretrain.yaml \
--config ./configs/glass_pretrain \
--output <output_path>

Fine-tuning the model

python ./tools/train_glass.py \
--datasets <path_to_dataset_config> \
--resume <pretrained_weights_path> \
--output <output_path>
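The pretraining and fine-tuning commands above differ only in which flags they pass, so they can be assembled from one small helper when launching runs from a script. The following is a sketch under stated assumptions: `build_train_command` is a hypothetical helper and not part of the repository, the flags are the ones shown in the commands above, and `out/pretrain` is a placeholder path.

```python
import shlex


def build_train_command(datasets, output, config=None, resume=None):
    """Assemble the train_glass.py command line as a list of arguments."""
    cmd = ["python", "./tools/train_glass.py", "--datasets", datasets]
    if config is not None:
        # Architecture config, as used in the pretraining command
        cmd += ["--config", config]
    if resume is not None:
        # Pretrained weights, as used in the fine-tuning command
        cmd += ["--resume", resume]
    cmd += ["--output", output]
    return cmd


pretrain = build_train_command(
    datasets="./data_configs/data_config_pretrain.yaml",
    config="./configs/glass_pretrain",
    output="out/pretrain",  # placeholder output path
)
# Print a shell-quoted version of the command for inspection
print(shlex.join(pretrain))
```

The returned list can be passed directly to `subprocess.run(cmd, check=True)`, which avoids shell quoting problems with paths that contain spaces.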

See DATA.md for instructions on data preparation and ingestion.

The models in this work were trained on the following datasets:

- December 12th, 2022 - Added Lab Collab demo, numerous bug fixes and improvements, and code cleanup

- November 14th, 2022 - Evaluation code and updated post-processing code are included

- September 20th, 2022 - Including the models, additional components for rotated box training and bug fixes

- July 10th, 2022 - Initial commit, getting things ready towards ECCV'22, main training code and architecture are included

Please consider citing our work if you find it useful for your research.

@article{ronen2022glass,

title={GLASS: Global to Local Attention for Scene-Text Spotting},

author={Ronen, Roi and Tsiper, Shahar and Anschel, Oron and Lavi, Inbal and Markovitz, Amir and Manmatha, R},

journal={arXiv preprint arXiv:2208.03364},

year={2022}

}

See CONTRIBUTING for more information on contributions.

This project is licensed under the Apache-2.0 License.