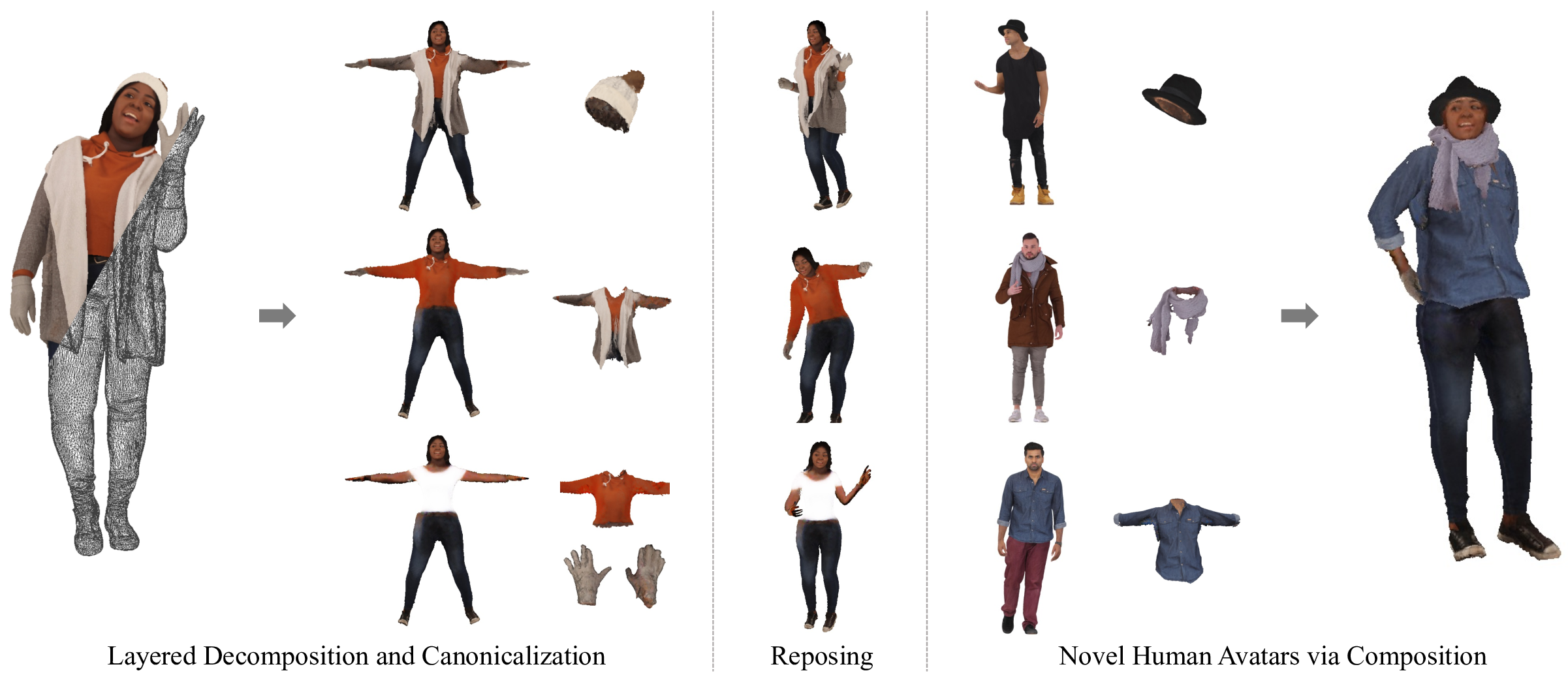

This is the official code for the paper "GALA: Generating Animatable Layered Assets from a Single Scan".

- [2024/01/24] Initial release.

Set up the environment using conda.

conda env create -f env.yaml

conda activate gala

Install and download the required libraries and data. To download SMPL-X, you must register here. Installing xformers reduces training time, but the installation itself takes an extremely long time. Remove it from "scripts/setup.sh" if needed.

bash scripts/setup.sh

Download the "ViT-H HQ-SAM model" checkpoint here and place it in ./segmentation.

We use THuman2.0 in our demo since it is publicly accessible. The same pipeline also works for commercial datasets such as RenderPeople, as used in our paper. Get access to the THuman2.0 scans and SMPL-X parameters here and organize the folder as shown below.

./data
├── thuman
│   └── 0001
│       └── 0001.obj
│       └── material0.mtl
│       └── material0.jpeg
│       └── smplx_param.pkl
│   └── 0002
│       └── ...
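Before preprocessing, it can help to confirm that every scan folder matches the layout above. The following is a minimal sketch, not part of the released code: the helper names `missing_files` and `check_dataset` are hypothetical, and it assumes each scan's mesh is named after its folder (as `0001/0001.obj` suggests).

```python
from pathlib import Path

# File names taken from the folder layout above.
# Assumption: the .obj file is named after its scan folder (e.g. 0001/0001.obj).
FIXED_FILES = ("material0.mtl", "material0.jpeg", "smplx_param.pkl")


def missing_files(scan_dir):
    """Return the expected file names that are absent from one scan folder."""
    scan_dir = Path(scan_dir)
    expected = (f"{scan_dir.name}.obj",) + FIXED_FILES
    return [name for name in expected if not (scan_dir / name).is_file()]


def check_dataset(root="data/thuman"):
    """Map each incomplete scan folder under root to its list of missing files."""
    root = Path(root)
    if not root.is_dir():
        return {}
    report = {}
    for scan_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        missing = missing_files(scan_dir)
        if missing:
            report[scan_dir.name] = missing
    return report
```

Calling `check_dataset()` from the repository root returns an empty dictionary when every scan folder is complete, and otherwise lists what is missing per scan.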

To preprocess THuman2.0 scans, run the script below. Preprocessing includes normalization and segmentation of the input scan.

bash scripts/preprocess_data_single.sh thuman data/thuman/$SCAN_NAME $TARGET_OBJECT

# example

bash scripts/preprocess_data_single.sh thuman data/thuman/0001 jacket
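To preprocess several scans in one go, the command above can be wrapped in a short loop. This is a rough sketch under stated assumptions: `preprocess_command` and `preprocess_all` are hypothetical helpers, the script is assumed to be run from the repository root, and the target object for each scan must be supplied by you (only `0001` with `jacket` comes from the example above).

```python
import subprocess
from pathlib import Path


def preprocess_command(scan_dir, target_object, dataset="thuman"):
    """Build the argument list for scripts/preprocess_data_single.sh."""
    return [
        "bash",
        "scripts/preprocess_data_single.sh",
        dataset,
        Path(scan_dir).as_posix(),
        target_object,
    ]


def preprocess_all(targets, root="data/thuman", dry_run=True):
    """Run (or just print) one preprocessing command per scan.

    targets maps a scan name to its target object, e.g. {"0001": "jacket"}.
    """
    commands = [
        preprocess_command(Path(root) / name, obj) for name, obj in targets.items()
    ]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))  # show the command without executing it
        else:
            subprocess.run(cmd, check=True)  # stop at the first failing scan
    return commands
```

With the default `dry_run=True`, `preprocess_all({"0001": "jacket"})` only prints the command, which makes it easy to review before running it for real.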

For canonicalized decomposition, run the commands below.

# Geometry Stage

python train.py config/th_0001_geo.yaml

# Appearance Stage

python train.py config/th_0001_tex.yaml

You can check the outputs in ./results. You can modify the input text conditions in "config/th_0001_geo.yaml" or "config/th_0001_tex.yaml", and change the experimental settings in "config/default_geo.yaml" or "config/default_tex.yaml".
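The two stages can also be chained from a small driver script that runs the geometry stage first and the appearance stage second, in the order given above. This is only a sketch: `stage_commands` and `run_stages` are hypothetical helpers, and the `th_<scan>_geo.yaml` / `th_<scan>_tex.yaml` naming for scans other than `0001` is an assumption extrapolated from the two config files named here.

```python
import subprocess
import sys


def stage_commands(scan_tag="th_0001", config_dir="config"):
    """Return the geometry-stage command followed by the appearance-stage command."""
    return [
        [sys.executable, "train.py", f"{config_dir}/{scan_tag}_{stage}.yaml"]
        for stage in ("geo", "tex")  # geometry first, then appearance
    ]


def run_stages(scan_tag="th_0001", dry_run=True):
    """Run both stages in order, aborting if the geometry stage fails."""
    commands = stage_commands(scan_tag)
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))  # show the command without executing it
        else:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return commands
```

Using `check=True` means the appearance stage is never started if the geometry stage exits with an error.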

If you find this work useful, please cite our paper:

@misc{kim2024gala,
    title={GALA: Generating Animatable Layered Assets from a Single Scan},
    author={Taeksoo Kim and Byungjun Kim and Shunsuke Saito and Hanbyul Joo},
    year={2024},
    eprint={2401.12979},
    archivePrefix={arXiv},
    primaryClass={cs.CV}
}

We sincerely thank the authors of

for their amazing work and code!