I2L-MeshNet: Image-to-Lixel Prediction Network for Accurate 3D Human Pose and Mesh Estimation from a Single RGB Image

There was a mistake in the code: the translation computed during the rigid alignment was wrong. The results in my paper became better after I fixed the error.

This repo is the official PyTorch implementation of I2L-MeshNet: Image-to-Lixel Prediction Network for Accurate 3D Human Pose and Mesh Estimation from a Single RGB Image (ECCV 2020). Our I2L-MeshNet won first and second place in the 3DPW challenge on the unknown association track in the part orientation and joint position metrics, respectively. :tada:

- Install PyTorch and Python >= 3.7.3 and run `sh requirements.sh`. You should slightly change the `torchgeometry` kernel code following here.

- Download the pre-trained I2L-MeshNet from here. This is not the most accurate I2L-MeshNet, but it provides visually smooth meshes. Here is a discussion about this.

- Prepare `input.jpg` and the pre-trained snapshot in the `demo` folder.

- Download `basicModel_f_lbs_10_207_0_v1.0.0.pkl` and `basicModel_m_lbs_10_207_0_v1.0.0.pkl` from here and `basicModel_neutral_lbs_10_207_0_v1.0.0.pkl` from here. Place them at `common/utils/smplpytorch/smplpytorch/native/models`.

- Go to the `demo` folder and edit `bbox` in here.

- Run `python demo.py --gpu 0 --stage param --test_epoch 8` if you want to run on GPU 0.

- You can see `output_mesh_lixel.jpg`, `output_mesh_param.jpg`, `rendered_mesh_lixel.jpg`, `rendered_mesh_param.jpg`, `output_mesh_lixel.obj`, and `output_mesh_param.obj`. `*_lixel.*` are from the lixel-based 1D heatmaps of mesh vertices and `*_param.*` are from the regressed SMPL parameters.

- If you run this code in an SSH environment without a display device, do the following:

1. Install OSMesa following https://pyrender.readthedocs.io/en/latest/install/

2. Reinstall the specific pyopengl fork: https://github.com/mmatl/pyopengl

3. Set the OpenGL backend to egl or osmesa via `os.environ["PYOPENGL_PLATFORM"] = "egl"`
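The environment variable in step 3 only takes effect if it is set before pyrender (or PyOpenGL) is imported for the first time. A minimal sketch, assuming it is placed at the very top of the script you run; the helper name `select_headless_backend` is hypothetical and not part of this repo:

```python
import os

def select_headless_backend(backend="egl"):
    """Set PYOPENGL_PLATFORM for offscreen rendering (hypothetical helper)."""
    if backend not in ("egl", "osmesa"):
        raise ValueError(f"unsupported backend: {backend}")
    # setdefault keeps a value that was already exported in the shell
    return os.environ.setdefault("PYOPENGL_PLATFORM", backend)

chosen = select_headless_backend("egl")
# import pyrender only after this point, so that it picks up the backend
```

Using `setdefault` rather than a plain assignment is a design choice here: it lets `PYOPENGL_PLATFORM=osmesa python demo.py ...` override the default without editing the script.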

The ${ROOT} is described as below.

${ROOT}

|-- data

|-- demo

|-- common

|-- main

|-- output

- `data` contains data loading codes and soft links to images and annotations directories.

- `demo` contains demo codes.

- `common` contains kernel codes for I2L-MeshNet.

- `main` contains high-level codes for training or testing the network.

- `output` contains log, trained models, visualized outputs, and test result.

You need to follow directory structure of the data as below.

${ROOT}

|-- data

| |-- Human36M

| | |-- rootnet_output

| | | |-- bbox_root_human36m_output.json

| | |-- images

| | |-- annotations

| | |-- J_regressor_h36m_correct.npy

| |-- MuCo

| | |-- data

| | | |-- augmented_set

| | | |-- unaugmented_set

| | | |-- MuCo-3DHP.json

| | | |-- smpl_param.json

| |-- MSCOCO

| | |-- rootnet_output

| | | |-- bbox_root_coco_output.json

| | |-- images

| | | |-- train2017

| | | |-- val2017

| | |-- annotations

| | |-- J_regressor_coco_hip_smpl.npy

| |-- PW3D

| | |-- rootnet_output

| | | |-- bbox_root_pw3d_output.json

| | |-- data

| | | |-- 3DPW_train.json

| | | |-- 3DPW_validation.json

| | | |-- 3DPW_test.json

| | |-- imageFiles

| |-- FreiHAND

| | |-- rootnet_output

| | | |-- bbox_root_freihand_output.json

| | |-- data

| | | |-- training

| | | |-- evaluation

| | | |-- freihand_train_coco.json

| | | |-- freihand_train_data.json

| | | |-- freihand_eval_coco.json

| | | |-- freihand_eval_data.json
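Before training, it can help to confirm that the expected entries are in place. The following is a minimal sketch and not part of this repo: `missing_entries` is a hypothetical helper, and `EXPECTED` is an abbreviated list of paths copied from the tree above that you would extend to cover the datasets you actually use.

```python
from pathlib import Path

# Abbreviated list of paths taken from the directory tree above
EXPECTED = [
    "data/Human36M/rootnet_output/bbox_root_human36m_output.json",
    "data/Human36M/images",
    "data/Human36M/annotations",
    "data/Human36M/J_regressor_h36m_correct.npy",
    "data/MuCo/data/MuCo-3DHP.json",
    "data/MSCOCO/images/train2017",
    "data/PW3D/data/3DPW_test.json",
    "data/FreiHAND/data/training",
]

def missing_entries(root, expected=EXPECTED):
    """Return the expected paths that do not exist under root."""
    root = Path(root)
    # Path.exists() follows soft links, so linked dataset folders count
    return [p for p in expected if not (root / p).exists()]
```

Calling `missing_entries("/path/to/ROOT")` returns an empty list when every listed file and folder is present.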

- Download Human3.6M parsed data and SMPL parameters [data][SMPL parameters from SMPLify-X]

- Download MuCo parsed/composited data and SMPL parameters [data][SMPL parameters from SMPLify-X]

- Download MS COCO SMPL parameters [SMPL parameters from SMPLify-X]

- Download 3DPW parsed data [data]

- Download FreiHAND parsed data [data]

- All annotation files follow MS COCO format.

- If you want to add your own dataset, you have to convert it to MS COCO format.
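As a rough illustration of what an MS COCO style annotation file looks like, here is a minimal sketch. The top-level `images`, `annotations`, and `categories` keys and the `[x, y, width, height]` bbox convention come from the MS COCO format. The file name, sizes, and values are made up, and any extra per-dataset fields that the loaders in `data` expect (for example joint coordinates or camera parameters) are not shown.

```python
import json

def make_coco_skeleton():
    """Build a tiny MS COCO style dictionary with one image and one box."""
    return {
        "images": [
            {"id": 0, "file_name": "my_dataset/000000.jpg",
             "width": 640, "height": 480}
        ],
        "annotations": [
            {
                "id": 0,
                "image_id": 0,  # must match an entry in "images"
                "category_id": 1,
                "bbox": [100.0, 50.0, 200.0, 300.0],  # [x, y, width, height]
                "area": 200.0 * 300.0,
                "iscrowd": 0,
            }
        ],
        "categories": [{"id": 1, "name": "person"}],
    }

def check_image_ids(coco):
    """Return True if every annotation points at an existing image id."""
    image_ids = {img["id"] for img in coco["images"]}
    return all(ann["image_id"] in image_ids for ann in coco["annotations"])

# Serialize the skeleton the way an annotation file would be written
text = json.dumps(make_coco_skeleton(), indent=2)
```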

To download multiple files from Google Drive without compressing them, try this. If you run into the 'Download limit' problem when downloading a dataset from a Google Drive link, please try this trick.

* Go to the shared folder that contains the files you want to copy to your drive

* Select all the files you want to copy

* In the upper right corner, click on the three vertical dots and select “make a copy”

* Then, the files are copied to your personal Google Drive account. You can download them from your personal account.

- For the SMPL layer, I used smplpytorch. The repo is already included in `common/utils/smplpytorch`.

- Download `basicModel_f_lbs_10_207_0_v1.0.0.pkl` and `basicModel_m_lbs_10_207_0_v1.0.0.pkl` from here and `basicModel_neutral_lbs_10_207_0_v1.0.0.pkl` from here. Place them at `common/utils/smplpytorch/smplpytorch/native/models`.

- For the MANO layer, I used manopth. The repo is already included in `common/utils/manopth`.

- Download `MANO_RIGHT.pkl` from here and place it at `common/utils/manopth/mano/models`.

You need to follow the directory structure of the output folder as below.

${ROOT}

|-- output

| |-- log

| |-- model_dump

| |-- result

| |-- vis

- Creating the `output` folder as a soft link is recommended instead of a regular folder because it takes up a large amount of storage.

- `log` folder contains the training log file.

- `model_dump` folder contains saved checkpoints for each epoch.

- `result` folder contains final estimation files generated in the testing stage.

- `vis` folder contains visualized results.
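One way to set up such a soft link is sketched below in Python, assuming a larger disk is mounted at a location of your choosing. `link_output` and the example path in the usage note are hypothetical and not part of this repo.

```python
import os

SUBFOLDERS = ("log", "model_dump", "result", "vis")

def link_output(storage_dir, link_path="output"):
    """Create the output sub-folders on storage_dir and soft-link them."""
    for sub in SUBFOLDERS:
        os.makedirs(os.path.join(storage_dir, sub), exist_ok=True)
    # lexists is also True for an existing (even broken) soft link
    if not os.path.lexists(link_path):
        os.symlink(os.path.abspath(storage_dir), link_path,
                   target_is_directory=True)
    return link_path
```

For example, `link_output("/mnt/big_disk/i2l_output", "output")` run from `${ROOT}` would leave `${ROOT}/output` pointing at the larger disk.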

- Install PyTorch and Python >= 3.7.3 and run `sh requirements.sh`. You should slightly change the `torchgeometry` kernel code following here.

- In `main/config.py`, you can change settings of the model, including the dataset to use, network backbone, input size, and so on.

- There are two stages: 1) `lixel` and 2) `param`. In the `lixel` stage, I2L-MeshNet predicts lixel-based 1D heatmaps for each human joint and mesh vertex. In the `param` stage, I2L-MeshNet predicts SMPL parameters from the lixel-based 1D heatmaps.
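A lixel-based 1D heatmap holds one score per discrete position along a single axis. One common way to turn such a heatmap into a continuous coordinate is a soft-argmax: a softmax over the scores followed by the expected index. The sketch below illustrates that idea in plain Python. It is an assumption made for illustration, not a copy of the network code in `common`, and `soft_argmax_1d` is a hypothetical name.

```python
import math

def soft_argmax_1d(heatmap):
    """Return the softmax-weighted average index of a 1D list of scores."""
    peak = max(heatmap)
    # subtract the peak before exponentiating for numerical stability
    weights = [math.exp(v - peak) for v in heatmap]
    total = sum(weights)
    return sum(i * w for i, w in enumerate(weights)) / total

# A heatmap that is symmetric around index 2 yields a coordinate of 2
coord = soft_argmax_1d([0.0, 1.0, 5.0, 1.0, 0.0])
```

Unlike a hard argmax, the result is differentiable with respect to the scores and is not restricted to integer positions.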

First, you need to train I2L-MeshNet in the lixel stage. In the `main` folder, run

`python train.py --gpu 0-3 --stage lixel`

to train I2L-MeshNet in the lixel stage on GPUs 0, 1, 2, and 3. `--gpu 0,1,2,3` can be used instead of `--gpu 0-3`.
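The two `--gpu` spellings are equivalent. As a sketch of how a range such as `0-3` can be expanded into a comma-separated list (the function name `expand_gpu_ids` is hypothetical, and this is not necessarily how the argument parser in this repo is written):

```python
def expand_gpu_ids(spec):
    """Expand '0-3' into '0,1,2,3'; leave comma-separated lists unchanged."""
    if "-" in spec:
        first, last = (int(x) for x in spec.split("-"))
        if last < first:
            raise ValueError(f"invalid GPU range: {spec}")
        # the range is inclusive on both ends
        return ",".join(str(i) for i in range(first, last + 1))
    return spec
```

With this helper, `expand_gpu_ids("4-7")` gives `"4,5,6,7"`, which matches the `Using GPU: 4,5,6,7` line printed in the test logs of this README.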

Once you have pre-trained I2L-MeshNet in the lixel stage, you can resume training in the param stage. In the `main` folder, run

`python train.py --gpu 0-3 --stage param --continue`

to train I2L-MeshNet in the param stage on GPUs 0, 1, 2, and 3. `--gpu 0,1,2,3` can be used instead of `--gpu 0-3`.

Place the trained model at `output/model_dump/`. Choose the stage you want to test: `lixel` or `param`.

In the `main` folder, run

`python test.py --gpu 0-3 --stage $STAGE --test_epoch 20`

to test I2L-MeshNet in the `$STAGE` stage (should be one of `lixel` and `param`) on GPUs 0, 1, 2, and 3 with the model trained for 20 epochs. `--gpu 0,1,2,3` can be used instead of `--gpu 0-3`.
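The test logs in this README show checkpoints being loaded from `../output/model_dump/snapshot_<epoch>.pth.tar`. A small sketch for checking that the file for a given `--test_epoch` is in place before launching a test; `snapshot_path` is a hypothetical helper, and the naming pattern is inferred from those log lines only:

```python
import os

def snapshot_path(test_epoch, model_dir="../output/model_dump"):
    """Build the checkpoint path that the logs show for a given epoch."""
    return os.path.join(model_dir, f"snapshot_{int(test_epoch)}.pth.tar")

path = snapshot_path(20)
# True only if the checkpoint was placed where the test run will look
found = os.path.isfile(path)
```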

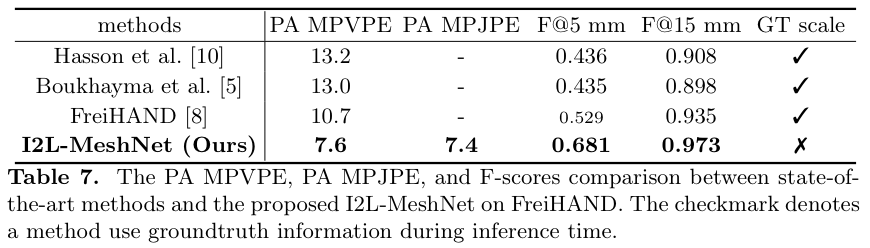

Here I report the performance of the I2L-MeshNet.

- Download I2L-MeshNet trained on [Human3.6M+MSCOCO].

- Download bounding boxes and root joint coordinates (from RootNet) of [Human3.6M].

$ python test.py --gpu 4-7 --stage param --test_epoch 17

>>> Using GPU: 4,5,6,7

Stage: param

08-10 00:25:56 Creating dataset...

creating index...

index created!

Get bounding box and root from ../data/Human36M/rootnet_output/bbox_root_human36m_output.json

08-10 00:26:16 Load checkpoint from ../output/model_dump/snapshot_17.pth.tar

08-10 00:26:16 Creating graph...

100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 35/35 [00:46<00:00, 1.09it/s]

MPJPE from lixel mesh: 55.83 mm

PA MPJPE from lixel mesh: 41.10 mm

MPJPE from param mesh: 66.05 mm

PA MPJPE from param mesh: 45.03 mm
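For reference, MPJPE is the mean Euclidean distance between predicted and ground-truth joints, and PA MPJPE is the same distance measured after a rigid (Procrustes) alignment of the prediction to the ground truth. The NumPy sketch below follows those standard definitions. It is not the evaluation code of this repo, the function names are hypothetical, and it omits any root-joint alignment or joint regression that an evaluation pipeline may apply first. The translation term `t` is the part of the alignment that the note at the top of this README refers to.

```python
import numpy as np

def rigid_align(pred, gt):
    """Align pred (J, 3) to gt (J, 3) with scale, rotation, translation."""
    mu_p, mu_g = pred.mean(axis=0), gt.mean(axis=0)
    p, g = pred - mu_p, gt - mu_g
    # SVD of the 3x3 cross-covariance between the centered point sets
    U, S, Vt = np.linalg.svd(p.T @ g)
    # flip the last axis if needed so that R is a rotation, not a reflection
    d = -1.0 if np.linalg.det(Vt.T @ U.T) < 0 else 1.0
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T
    scale = (S * np.diag(D)).sum() / (p ** 2).sum()
    # translation maps the scaled, rotated pred centroid onto the gt centroid
    t = mu_g - scale * (R @ mu_p)
    return scale * pred @ R.T + t

def mpjpe(pred, gt):
    """Mean per-joint position error, in the units of the inputs."""
    return np.linalg.norm(pred - gt, axis=-1).mean()

def pa_mpjpe(pred, gt):
    """MPJPE after rigid alignment of pred to gt."""
    return mpjpe(rigid_align(pred, gt), gt)
```

If the joints are given in millimeters, both functions return millimeters, as in the logs above.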

- Download I2L-MeshNet trained on [Human3.6M+MuCo+MSCOCO].

- Download bounding boxes and root joint coordinates (from RootNet) of [3DPW].

$ python test.py --gpu 4-7 --stage param --test_epoch 7

>>> Using GPU: 4,5,6,7

Stage: param

08-09 20:47:19 Creating dataset...

loading annotations into memory...

Done (t=4.91s)

creating index...

index created!

Get bounding box and root from ../data/PW3D/rootnet_output/bbox_root_pw3d_output.json

08-09 20:47:27 Load checkpoint from ../output/model_dump/snapshot_7.pth.tar

08-09 20:47:27 Creating graph...

100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 555/555 [08:05<00:00, 1.06s/it]

MPJPE from lixel mesh: 93.15 mm

PA MPJPE from lixel mesh: 57.73 mm

MPJPE from param mesh: 100.04 mm

PA MPJPE from param mesh: 60.04 mm

The testing results on MSCOCO dataset are used for visualization (qualitative results).

- Download I2L-MeshNet trained on [Human3.6M+MuCo+MSCOCO].

- Download bounding boxes and root joint coordinates (from RootNet) of [MSCOCO].

$ python test.py --gpu 4-7 --stage param --test_epoch 7

>>> Using GPU: 4,5,6,7

Stage: param

08-10 00:34:26 Creating dataset...

loading annotations into memory...

Done (t=0.35s)

creating index...

index created!

Load RootNet output from ../data/MSCOCO/rootnet_output/bbox_root_coco_output.json

08-10 00:34:39 Load checkpoint from ../output/model_dump/snapshot_7.pth.tar

08-10 00:34:39 Creating graph...

100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 100/100 [01:31<00:00, 1.05it/s]

- Download I2L-MeshNet trained on [FreiHAND].

- Download bounding boxes and root joint coordinates (from RootNet) of [FreiHAND].

`bbox` is from Detectron2.

$ python test.py --gpu 4-7 --stage lixel --test_epoch 24

>>> Using GPU: 4,5,6,7

Stage: lixel

08-09 21:31:30 Creating dataset...

loading annotations into memory...

Done (t=0.06s)

creating index...

index created!

Get bounding box and root from ../data/FreiHAND/rootnet_output/bbox_root_freihand_output.json

08-09 21:31:30 Load checkpoint from ../output/model_dump/snapshot_24.pth.tar

08-09 21:31:30 Creating graph...

100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 62/62 [00:54<00:00, 1.12it/s]

Saved at ../output/result/pred.json

`loss['joint_orig']` and `loss['mesh_joint_orig']` in `main/model.py` make the lixel-based meshes visually less smooth but the 3D pose from the meshes more accurate. This is because these loss functions are calculated from the joint coordinates of each dataset, not from the SMPL joint set. Thus, for visually pleasing lixel-based meshes, disable the two loss functions when training.

- Download I2L-MeshNet trained on [Human3.6M+MuCo+MSCOCO] without `loss['joint_orig']` and `loss['mesh_joint_orig']`.

- Below is the test result on the 3DPW dataset. As the results show, it is worse than the most accurate I2L-MeshNet above.

$ python test.py --gpu 4 --stage param --test_epoch 8

>>> Using GPU: 4

Stage: param

08-16 13:56:54 Creating dataset...

loading annotations into memory...

Done (t=7.05s)

creating index...

index created!

Get bounding box and root from ../data/PW3D/rootnet_output/bbox_root_pw3d_output.json

08-16 13:57:04 Load checkpoint from ../output/model_dump/snapshot_8.pth.tar

08-16 13:57:04 Creating graph...

100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 8879/8879 [17:42<00:00, 3.58it/s]

MPJPE from lixel mesh: 93.47 mm

PA MPJPE from lixel mesh: 60.87 mm

MPJPE from param mesh: 99.34 mm

PA MPJPE from param mesh: 61.80 mm

RuntimeError: Subtraction, the '-' operator, with a bool tensor is not supported. If you are trying to invert a mask, use the '~' or 'logical_not()' operator instead.: Go to here

@InProceedings{Moon_2020_ECCV_I2L-MeshNet,

author = {Moon, Gyeongsik and Lee, Kyoung Mu},

title = {I2L-MeshNet: Image-to-Lixel Prediction Network for Accurate 3D Human Pose and Mesh Estimation from a Single RGB Image},

booktitle = {European Conference on Computer Vision (ECCV)},

year = {2020}

}