Mystique enterprise's stores generate numerous sales and inventory events across multiple locations. To efficiently handle this data and enable further processing, Mystique enterprise requires a solution for data ingestion and centralized storage.

Additionally, Mystique enterprise needs the ability to selectively process events based on specific properties. They are interested in filtering events classified as sale_event or inventory_event. This selective processing helps them focus on relevant data for analysis and decision-making. Furthermore, Mystique enterprise wants to apply an additional filter by considering events with a discount greater than 20. This filter is crucial for analyzing key business metrics, such as total_sales and total_discounts, as well as detecting potential fraudulent activity.

Below is a sample of their event. How can we assist them?

    {
      "id": "743da362-69df-4e63-a95f-a1d93e29825e",
      "request_id": "743da362-69df-4e63-a95f-a1d93e29825e",
      "store_id": 5,
      "store_fqdn": "localhost",
      "store_ip": "127.0.0.1",
      "cust_id": 549,
      "category": "Notebooks",
      "sku": 169008,
      "price": 45.85,
      "qty": 34,
      "discount": 10.3,
      "gift_wrap": true,
      "variant": "red",
      "priority_shipping": false,
      "ts": "2023-05-19T14:36:09.985501",
      "contact_me": "github.com/miztiik",
      "is_return": true
    }

Event properties:

    {
      "event_type": "sale_event",
      "priority_shipping": false
    }

Can you provide guidance on how to accomplish this?

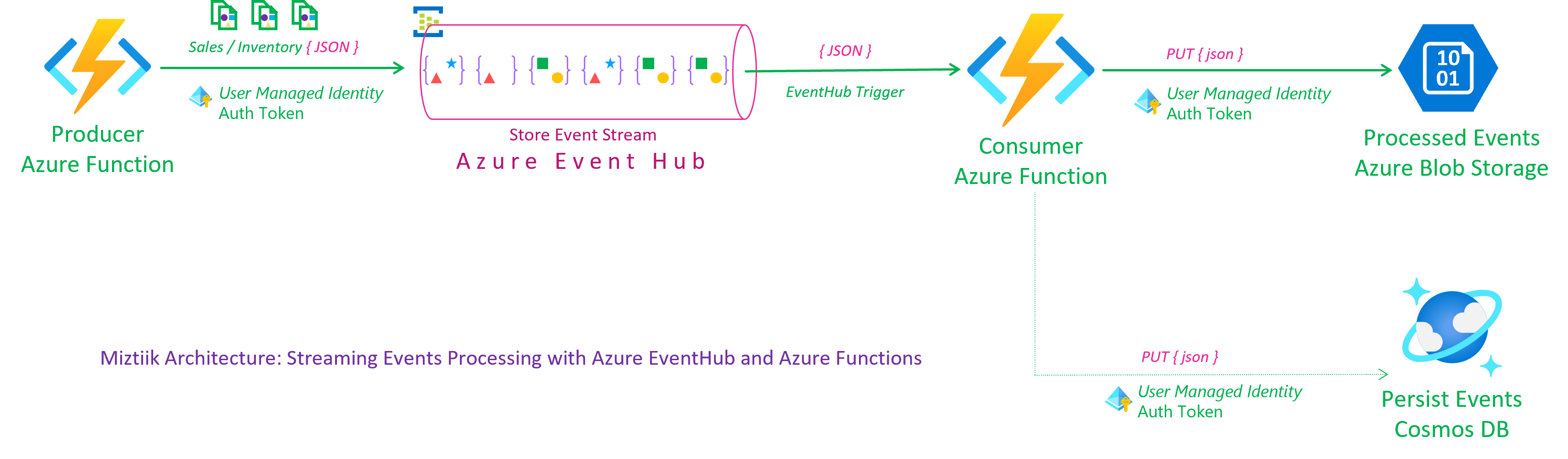

To meet their needs, Mystique enterprise has chosen Azure Event Hub as the platform of choice. It provides the necessary capabilities to handle the ingestion and storage of the data. We can use the Capture feature of Azure Event Hub to persist the messages to Azure Blob Storage, which stores the data in a central location for archival needs. Additionally, we can leverage the partitioning feature of Azure Event Hub to segregate the events based on specific criteria. This allows us to focus on relevant data for analysis and decision-making.

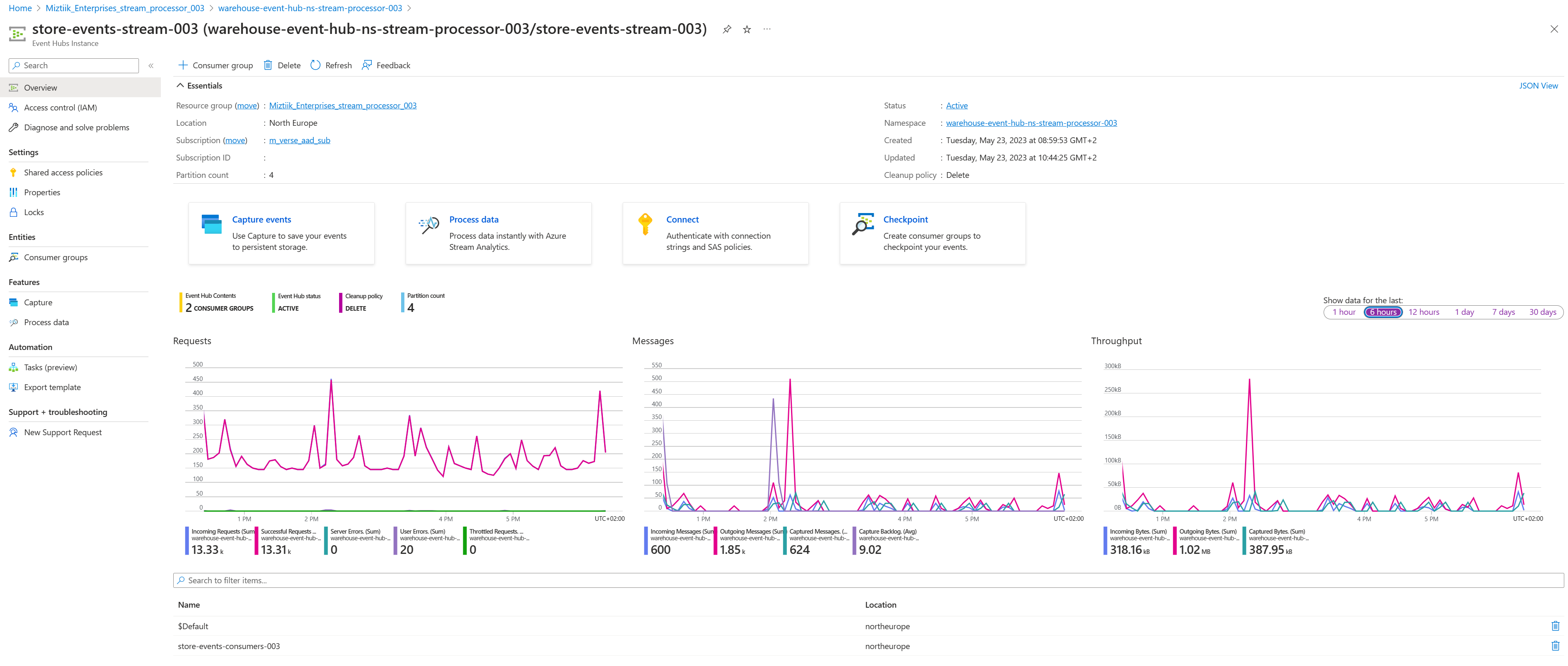

Event Producer: To generate events, an Azure Function with a managed identity will send them to an event hub within a designated event hub namespace. The event hub is configured with 4 partitions, and we can utilize partitions to segregate events based on the event_type property. For instance, sale_events and inventory_events can be directed to different partitions. This partitioning enables us to process events based on specific criteria, allowing us to filter events based on the partition_id. By doing so, we can focus on relevant data for analysis and decision-making.
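As a rough illustration of routing by `event_type`, the sketch below picks a partition id for an event. It assumes the even/odd layout this README describes for the stack (partitions `0` and `2` for `inventory_event`, `1` and `3` for `sale_event`). The helper name `pick_partition_id` is hypothetical and not taken from the repository.

```python
import random
from typing import Optional

# Layout described in this README: even partitions receive inventory
# events, odd partitions receive sale events. Partition ids are strings.
PARTITIONS_BY_EVENT_TYPE = {
    "inventory_event": ("0", "2"),
    "sale_event": ("1", "3"),
}


def pick_partition_id(event_type: str, rng: Optional[random.Random] = None) -> str:
    """Return one of the partition ids reserved for the given event type."""
    try:
        candidates = PARTITIONS_BY_EVENT_TYPE[event_type]
    except KeyError:
        raise ValueError(f"unsupported event_type: {event_type!r}") from None
    # Spread load across the partitions reserved for this event type.
    return (rng or random).choice(candidates)
```

With the `azure-eventhub` SDK, the returned id could be passed as `partition_id` to `EventHubProducerClient.create_batch`. Whether the demo's producer does exactly this is not confirmed here.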

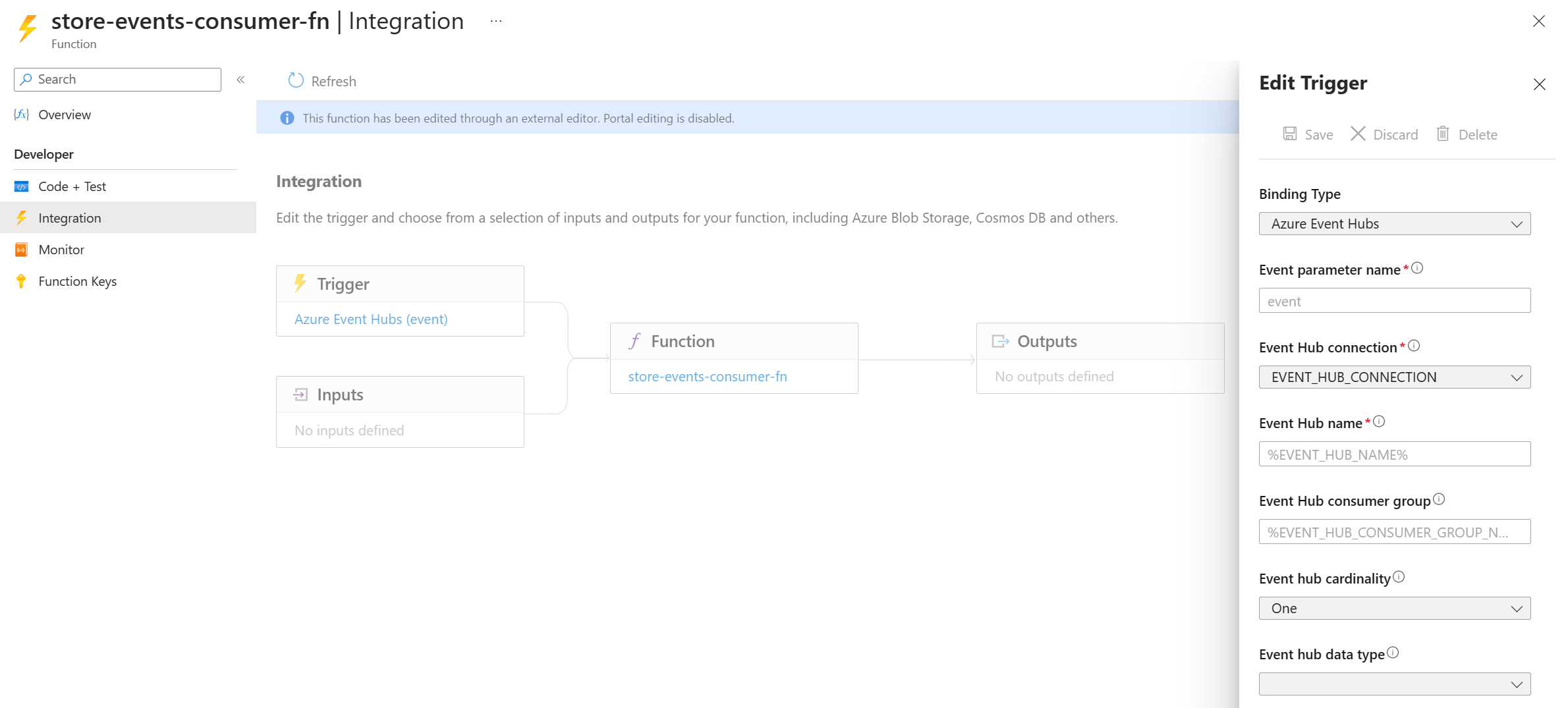

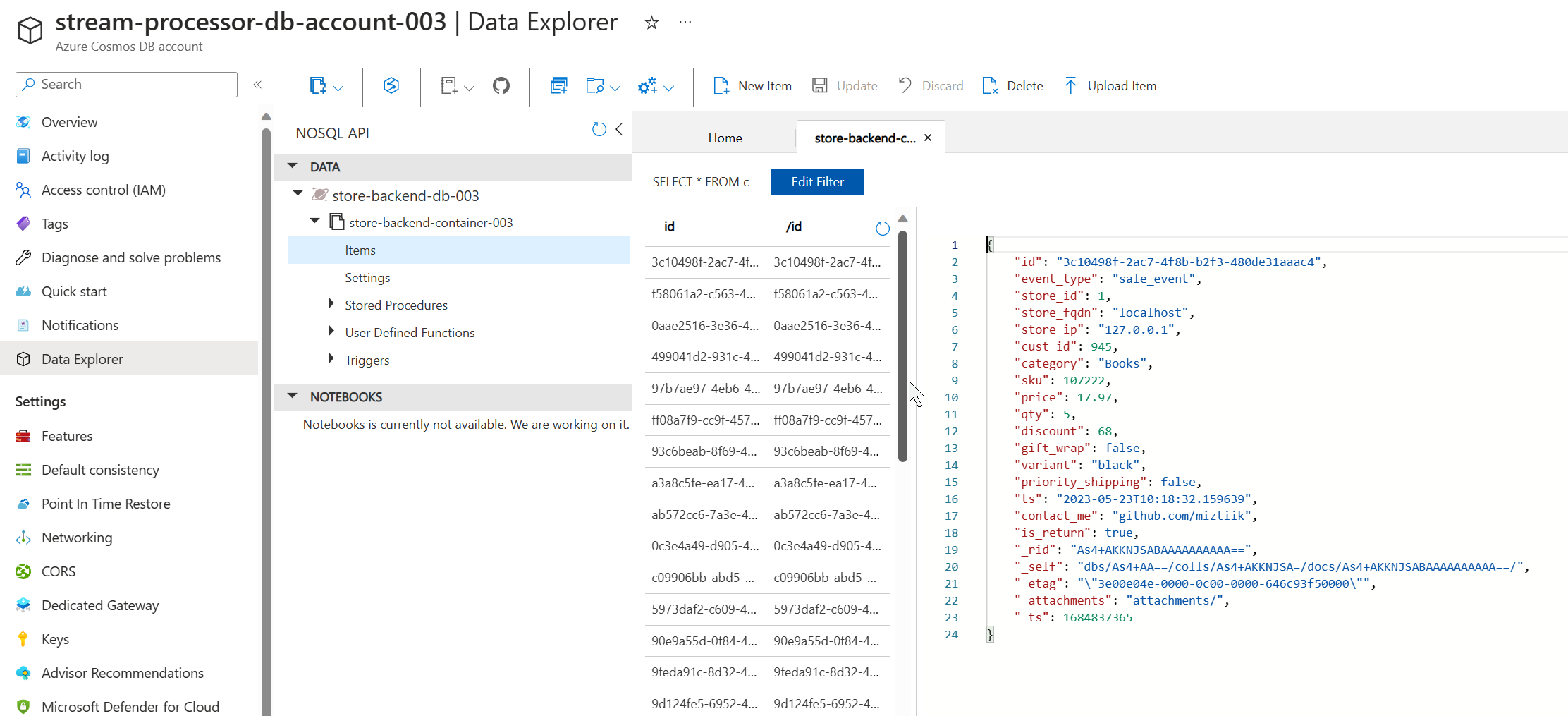

Event Consumer: To process the incoming events, a consumer function is set up with an event hub trigger, specifically targeting a consumer group. This consumer function efficiently handles and persists these events to an Azure Storage Account and Cosmos DB. To ensure secure and controlled access to the required resources, a scoped managed identity with RBAC (Role-Based Access Control) permissions is utilized. This approach allows for granular control over access to resources based on assigned roles. Furthermore, the trigger connection itself is authenticated using a managed identity. This ensures that only authorized entities can interact with the event hub, enhancing the overall security of the system.
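The filtering requirement (only `sale_event` or `inventory_event`, with a discount greater than 20) could be applied inside a consumer roughly as follows. This is a sketch under stated assumptions: the function names are hypothetical, and computing `total_sales` as `price * qty` over sale events is an assumption, since this README does not define the metric.

```python
from typing import Dict, Iterable, Tuple

WANTED_EVENT_TYPES = {"sale_event", "inventory_event"}
DISCOUNT_THRESHOLD = 20  # keep only events with a discount greater than this

# Each event is modelled as a (body, properties) pair of plain dicts.
Event = Tuple[dict, dict]


def is_relevant(body: dict, properties: dict) -> bool:
    """True when the event type is wanted and the discount exceeds the threshold."""
    return (
        properties.get("event_type") in WANTED_EVENT_TYPES
        and body.get("discount", 0) > DISCOUNT_THRESHOLD
    )


def summarize(events: Iterable[Event]) -> Dict[str, float]:
    """Aggregate the relevant events into a few simple metrics."""
    matched = 0
    total_sales = 0.0
    total_discounts = 0.0
    for body, properties in events:
        if not is_relevant(body, properties):
            continue
        matched += 1
        total_discounts += body["discount"]
        # Assumption: a sale is worth price * qty; inventory events add no sales.
        if properties["event_type"] == "sale_event":
            total_sales += body["price"] * body["qty"]
    return {
        "matched": matched,
        "total_sales": total_sales,
        "total_discounts": total_discounts,
    }
```

Note that the sample event shown earlier has a discount of 10.3, so this filter would skip it.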

Note: If you do not intend to process all events and only want to consume a subset of events, it is recommended to avoid using event hub triggers for your function. Instead, opt for a pull mechanism where you can configure event pulling using the SDK (Software Development Kit) of your choice (not covered here). This allows you to selectively retrieve events only from specific partitions, providing more control over the event consumption process.
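A pull-based consumer of the kind this note describes might look like the sketch below. It assumes the `azure-eventhub` and `azure-identity` packages are installed and that the caller's identity is allowed to read from the event hub. The function names and all argument values are hypothetical, and this is not code from the repository. The SDK imports are kept inside the function so the property helper can be used without the packages.

```python
from typing import Any, Callable, Dict, Optional


def decode_properties(raw: Optional[dict]) -> Dict[Any, Any]:
    """Normalize application properties, which may arrive with bytes keys/values."""
    def to_str(value: Any) -> Any:
        return value.decode("utf-8") if isinstance(value, bytes) else value

    return {to_str(k): to_str(v) for k, v in (raw or {}).items()}


def pull_from_partition(
    fully_qualified_namespace: str,
    eventhub_name: str,
    consumer_group: str,
    partition_id: str,
    handle: Callable[[str, Dict[Any, Any]], None],
) -> None:
    """Read events from a single partition and pass each one to `handle`."""
    # Imported lazily: these third-party packages are an assumption of this sketch.
    from azure.eventhub import EventHubConsumerClient
    from azure.identity import DefaultAzureCredential

    client = EventHubConsumerClient(
        fully_qualified_namespace=fully_qualified_namespace,
        eventhub_name=eventhub_name,
        consumer_group=consumer_group,
        credential=DefaultAzureCredential(),
    )

    def on_event(partition_context, event):
        handle(event.body_as_str(), decode_properties(event.properties))

    with client:
        # Blocks until interrupted; "-1" starts from the beginning of the partition.
        client.receive(
            on_event=on_event,
            partition_id=partition_id,
            starting_position="-1",
        )
```

No checkpoint store is configured here, so every run re-reads the partition from the start. A longer-lived consumer would likely want checkpointing.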

By leveraging the capabilities of Bicep, all the required resources can be easily provisioned and managed with minimal effort. Bicep simplifies the process of defining and deploying Azure resources, allowing for efficient resource management.

This demo, along with its instructions, scripts, and Bicep template, has been specifically designed to be executed in the `northeurope` region. However, with minimal modifications, you can also try running it in other regions of your choice (the specific steps for doing so are not covered in this context).

- 🛠 Azure CLI Installed & Configured - Get help here
- 🛠 Azure Functions Core Tools - Get help here
- 🛠 Bicep Installed & Configured - Get help here
- 🛠 [Optional] VS Code & Bicep Extensions - Get help here
- `jq` - Get help here
- `bash` or git bash - Get help here

Get the application code

    git clone https://github.com/miztiik/azure-event-hub-stream-processor.git
    cd azure-event-hub-stream-processor

Ensure you have jq, Azure CLI and Bicep working

    jq --version
    func --version
    bicep --version
    bash --version
    az account show

Stack: Main Bicep. We will create the following resources

- Storage Accounts for storing the events
  - General purpose Storage Account - Used by Azure Functions to store the function code
  - `warehouse*` - Azure Function will store the events here
- Event Hub Namespace
  - Event Hub Stream, with `4` partitions
    - Even partitions - `0` & `2` - `inventory_event`
    - Odd partitions - `1` & `3` - `sale_event`
    - Event Hub Capture - Enabled
      - Events will be stored in the `warehouse*` storage account.
- Managed Identity
  - This will be used by the Azure Function to interact with the event hub
- Python Azure Function
  - Producer: `HTTPTrigger`. Customized to send `count` number of events to the event hub, using parameters passed in the query string. `count` defaults to `10`
  - Consumer: `eventHubTrigger` trigger for a specific consumer group. It is configured to receive `1` event at a time. You can change the binding configuration `cardinality` to `many` to receive an array of events.
- Note: A few additional resources are created. They aren't required for this demo and can be ignored for now, but be sure to clean them up later.
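The producer's handling of the `count` query parameter, as described in the list above, might be sketched like this. The helper name `parse_count` and the fallback behaviour for invalid or non-positive values are assumptions for illustration; only the default of `10` comes from this README.

```python
from urllib.parse import parse_qs

DEFAULT_COUNT = 10  # default stated in this README


def parse_count(query_string: str) -> int:
    """Read `count` from a raw query string, falling back to the default."""
    values = parse_qs(query_string).get("count")
    if not values:
        return DEFAULT_COUNT
    try:
        count = int(values[0])
    except ValueError:
        # Assumption: non-numeric input falls back to the default.
        return DEFAULT_COUNT
    # Assumption: zero or negative counts also fall back to the default.
    return count if count > 0 else DEFAULT_COUNT
```

For example, `parse_count("count=25")` yields `25`, while an empty query string yields the default.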

Initiate the deployment with the following command,

    # make deploy
    sh deployment_scripts/deploy.sh

After successfully deploying the stack, check the `Resource Groups/Deployments` section for the resources.

Trigger the function

    FUNC_URL="https://stream-processor-store-backend-fn-app-003.azurewebsites.net/store-events-producer-fn"
    curl "${FUNC_URL}?count=10"

You should see an output like this,

{ "miztiik_event_processed": true, "msg": "Generated 10 messages", "resp": { "status": true, "tot_msgs": 10, "bad_msgs": 3, "sale_evnts": 5, "inventory_evnts": 5, "tot_sales": 482.03 }, "count": 10, "last_processed_on": "2023-05-23T16:23:16.949855" }During the execution of this function, a total of 10 messages were produced, with 4 of them being classified as

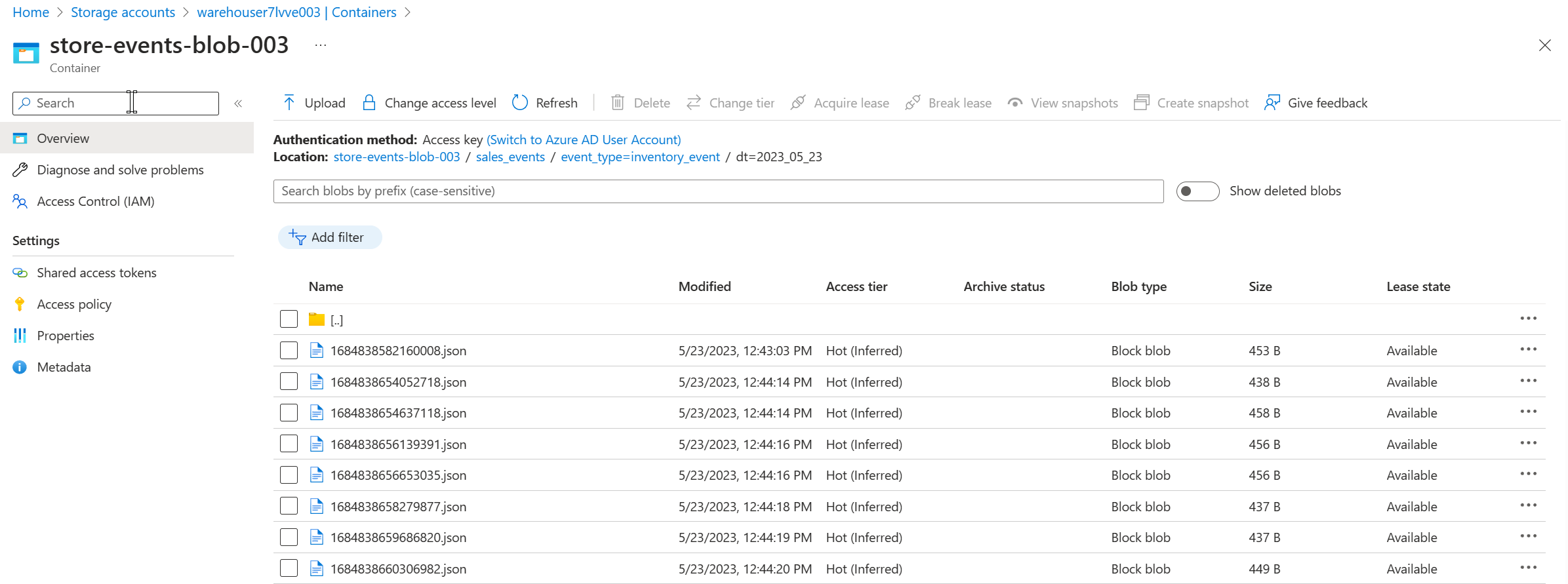

sale_eventsand 6 of them asinventory_events. Please note that the numbers may vary for your specific scenario if you run the producer function multiple times.Additionally, when observing the storage of events in Blob Storage, you will notice that inventory_events are stored in blob prefixes either labeled as

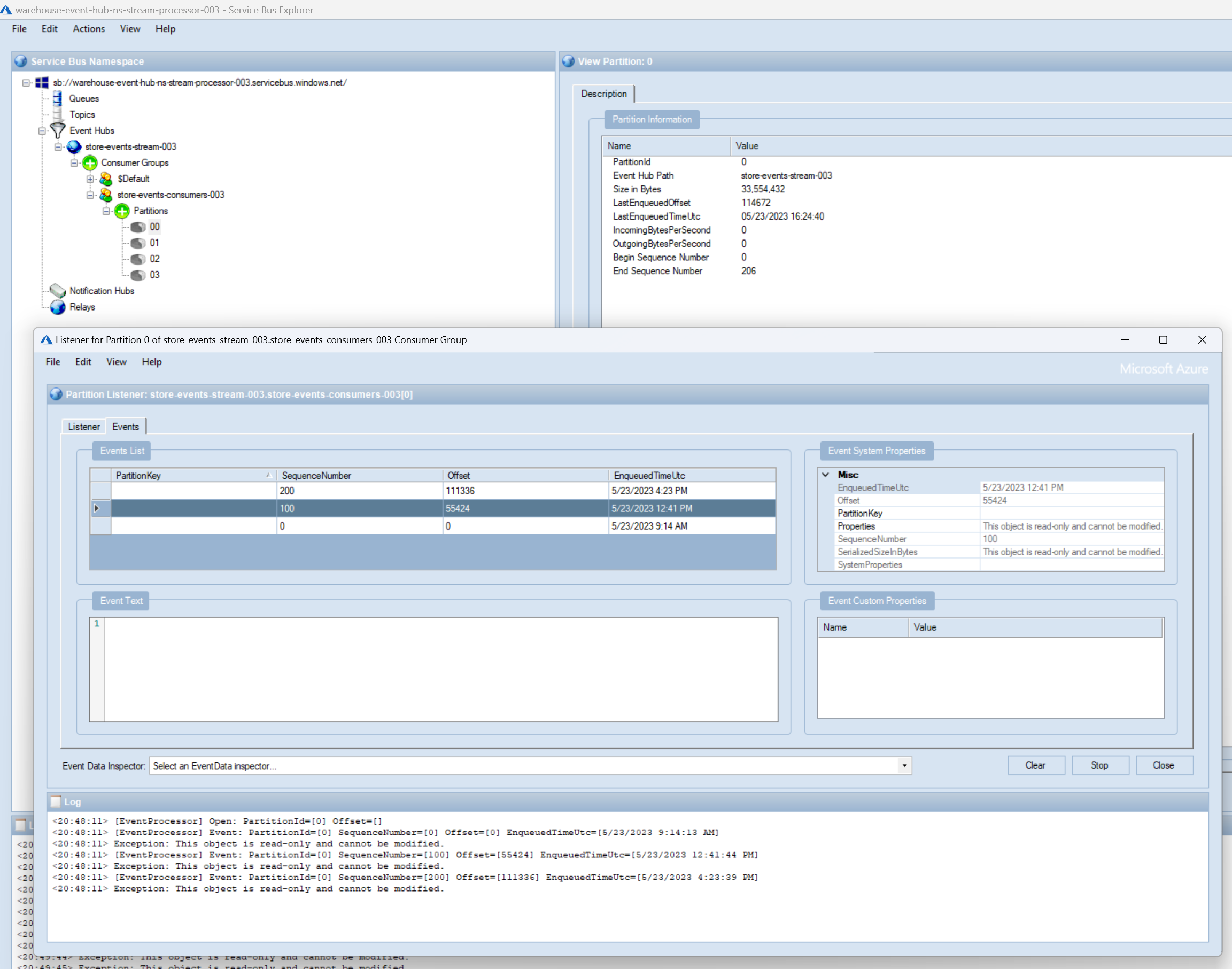

0or2, whilesale_eventsare stored in blob prefixes labeled as either1or3. This configuration is a result of setting up the event hub to utilize 4 partitions, with 2 partitions allocated for each event type.You can also use the Service Bus Hub Explorer Tool to view the events in the event hub.
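To check which event type a captured blob should contain, one could derive it from the partition segment of the blob name, as in the sketch below. It assumes the default Event Hubs Capture naming layout, `{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}`; the demo's Bicep template may configure a different format, and the helper name and example paths are hypothetical.

```python
# Parity mapping described in this README: even partitions hold inventory
# events, odd partitions hold sale events.
EVENT_TYPE_BY_PARITY = {0: "inventory_event", 1: "sale_event"}


def event_type_for_blob(blob_name: str) -> str:
    """Infer the expected event type from the partition id in a capture blob name."""
    parts = blob_name.split("/")
    # Assumption: the partition id is the third path segment (default layout).
    if len(parts) < 3 or not parts[2].isdigit():
        raise ValueError(f"unexpected capture blob name: {blob_name!r}")
    partition_id = int(parts[2])
    return EVENT_TYPE_BY_PARITY[partition_id % 2]
```

For instance, a blob under partition `3` would map to `sale_event` and one under partition `0` to `inventory_event`.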


In this demonstration, we showcase a streamlined process for event streaming ingestion with Azure Event Hub and stream processing with Azure Functions. This allows for optimized event processing and enables targeted handling of events based on specific criteria, facilitating seamless downstream processing with the use of consumer groups or partition_id filters or event properties.

If you want to destroy all the resources created by the stack, execute the command below to delete the stack, or delete it from the console instead. Clean up the following:

- Resources created during Deploying The Solution
- Any other custom resources you have created for this demo

Delete from the resource group, following any on-screen prompt:

    az group delete --name Miztiik_Enterprises_xxx --yes

This is not an exhaustive list; please carry out any other steps that may be applicable to your needs.

This repository aims to show how to use Bicep to new developers, Solution Architects & Ops Engineers in Azure.

Thank you for your interest in contributing to our project. Whether it is a bug report, new feature, correction, or additional documentation or solutions, we greatly value feedback and contributions from our community. Start here

- Azure Docs - Event Hub

- Azure Docs - Event Hub - Streaming Event Capture

- Azure Docs - Event Hub Python Samples

- Azure Docs - Event Hub Explorer Tool

- Azure Docs - Event Hub Partitions

- Azure Docs - Managed Identity

- Azure Docs - Managed Identity Caching

- GitHub Issue - Default Credential Troubleshooting

Level: 200