This script lets you easily import various AWS log types into an Elasticsearch cluster running locally on your computer in a Docker container.

- ELB access logs

- ALB access logs

- VPC flow logs

- Route53 query logs

- Apache access logs

- CloudTrail audit logs

- CloudFront access logs

- S3 access logs

- Others to come!

The script configures everything that is needed in the ELK stack:

- Elasticsearch:

  - indices

  - mappings

  - ingest pipelines

- Kibana:

  - index-patterns

  - field formatting for index-pattern fields

  - importing visualizations and dashboards

  - custom link directly to the newly created dashboard

- Install Docker for Windows or Docker for Mac

- Clone this git repository:

  `git clone https://github.com/mike-mosher/aws-la.git && cd aws-la`

- Install requirements:

  `pip install -r ./requirements.txt`

- Bring the Docker environment up:

  `docker-compose up -d`

- Verify that the containers are running:

  `docker ps`

- Verify that Elasticsearch is running (the URL is quoted so that shells such as zsh do not treat `?` as a wildcard):

  `curl -XGET 'localhost:9200/_cluster/health?pretty'`
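Elasticsearch can take a little while to start after the containers come up, so a single `curl` may fail at first. The following is a minimal sketch, not part of this repository, that polls the same health endpoint until the cluster reports a usable status. The function names, retry count, and delay are illustrative assumptions, and it treats `yellow` as usable on the assumption that a single local node cannot assign replica shards.

```python
import json
import time
import urllib.request

# Assumed from the curl command above; change it if your port mapping differs.
HEALTH_URL = "http://localhost:9200/_cluster/health"


def is_ready(health):
    """Return True when the cluster health document reports a usable status."""
    # Assumption: "yellow" is acceptable for a single local node.
    return health.get("status") in ("green", "yellow")


def wait_for_elasticsearch(url=HEALTH_URL, attempts=30, delay=2.0):
    """Poll the health endpoint until it is ready or the attempts run out."""
    for _ in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                if is_ready(json.load(resp)):
                    return True
        except (OSError, ValueError):
            # Connection refused or an unparsable response while starting up.
            pass
        time.sleep(delay)
    return False
```

Calling `wait_for_elasticsearch()` before starting an import returns `True` once the cluster responds, or `False` after roughly a minute with the defaults shown.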

- To run the script, specify the log type and the directory containing the logs. For example, you could run the following command to import ELB access logs:

  `python importLogs.py --logtype elb --logdir ~/logs/elblogs/`

- Valid log types are listed by running the script with the `--help` argument. Currently, the valid log types are the following:

  - `elb`: ELB access logs

  - `alb`: ALB access logs

  - `vpc`: VPC flow logs

  - `r53`: Route53 query logs

  - `apache`: Apache access log (`access_log`)

  - `apache_archives`: Apache access logs (gzip-compressed by logrotate)

- Browse to the link provided in the output by using `cmd + double-click`, or browse directly to the default Kibana page: http://localhost:5601

- You can import multiple log types into the same ELK cluster. Just run the command again with the new log type and log directory:

  `python importLogs.py --logtype vpc --logdir ~/logs/vpc-flowlogs/`

- When done, you can shut down the containers:

  `docker-compose down -v`
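To illustrate how the `--logtype` and `--logdir` arguments described above fit together, here is a hypothetical sketch of a command-line parser that accepts only the log types listed in this README. It is not the actual code in `importLogs.py`; the `build_parser` helper and the `LOG_TYPES` list are assumptions made for the example.

```python
import argparse

# Log types as listed in this README; the real script may accept others.
LOG_TYPES = ["elb", "alb", "vpc", "r53", "apache", "apache_archives"]


def build_parser():
    """Build a parser that rejects any log type not in LOG_TYPES."""
    parser = argparse.ArgumentParser(
        description="Import AWS logs into a local Elasticsearch cluster")
    parser.add_argument("--logtype", required=True, choices=LOG_TYPES,
                        help="type of log to import")
    parser.add_argument("--logdir", required=True,
                        help="directory containing the log files")
    return parser


# Parse the same arguments as the VPC flow log example above.
args = build_parser().parse_args(
    ["--logtype", "vpc", "--logdir", "~/logs/vpc-flowlogs/"])
print(args.logtype, args.logdir)
```

With `choices` set, `argparse` prints the accepted values and exits when an unknown log type is passed, which is also what makes a `--help` listing possible.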

-

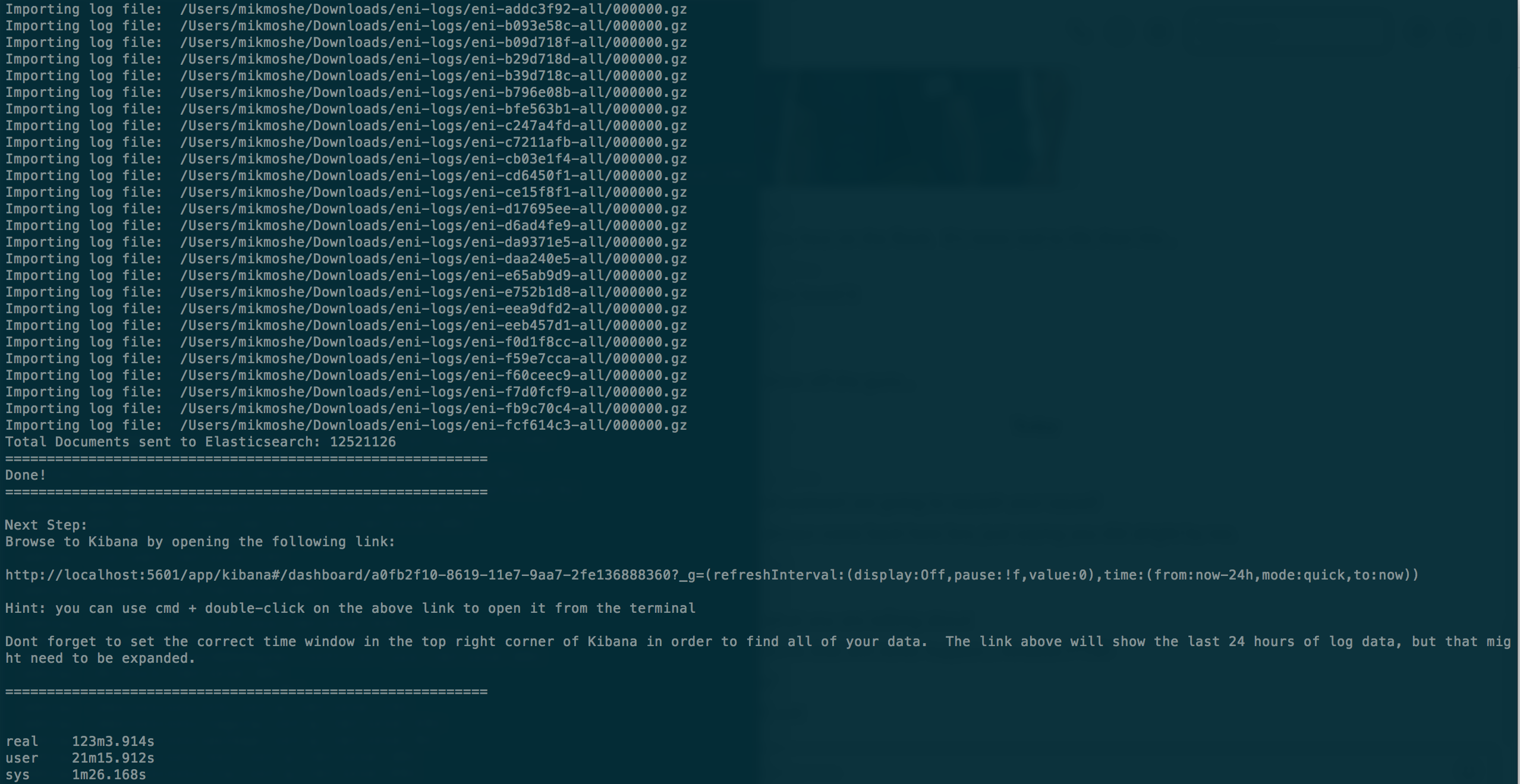

- As you can see, I was able to import 12.5 million VPC flowlogs in around 2 hours

-

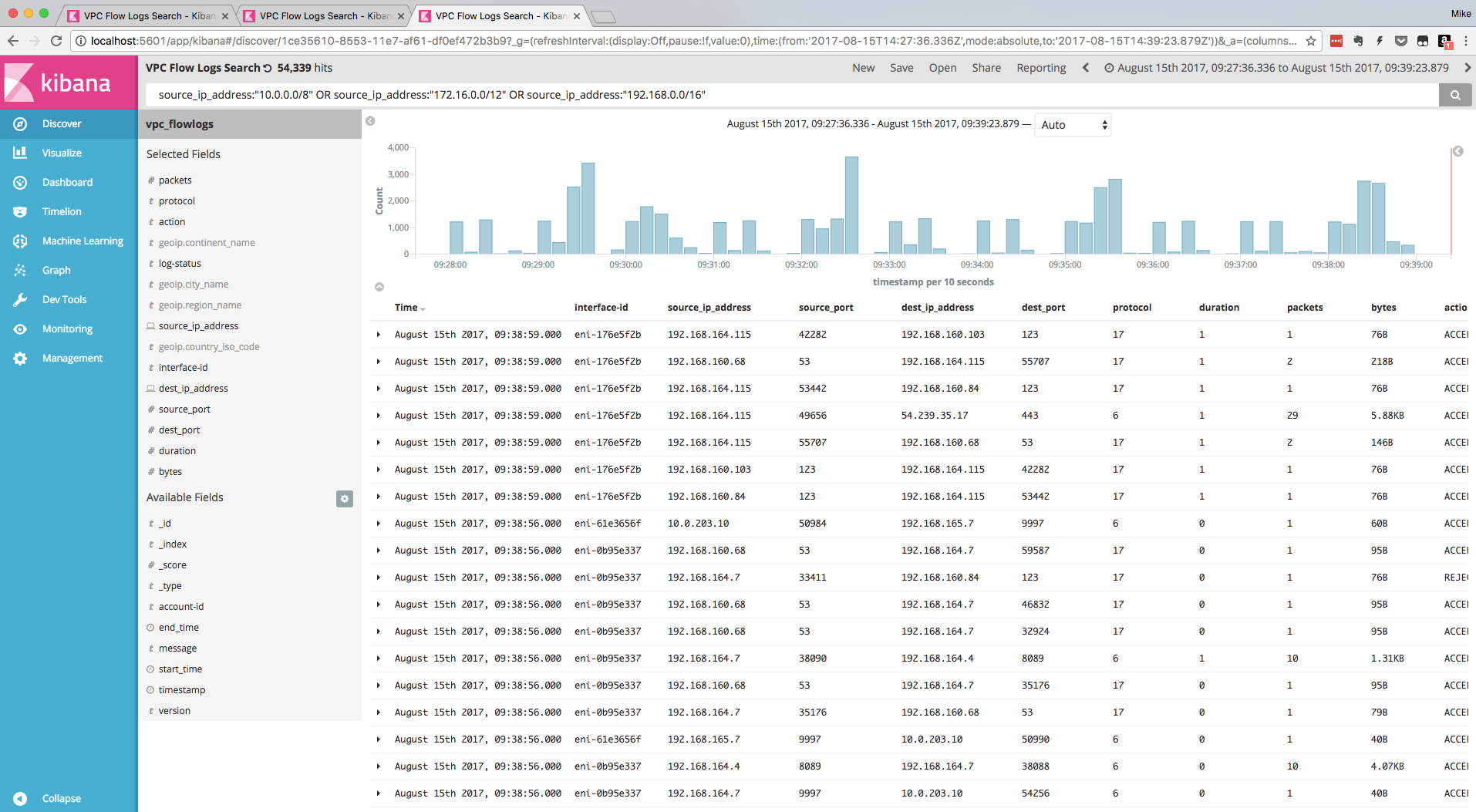

Searching for traffic initiated by RFC1918 (private) IP addresses:

- Browse to Discover tab, and enter the following query in the query bar:

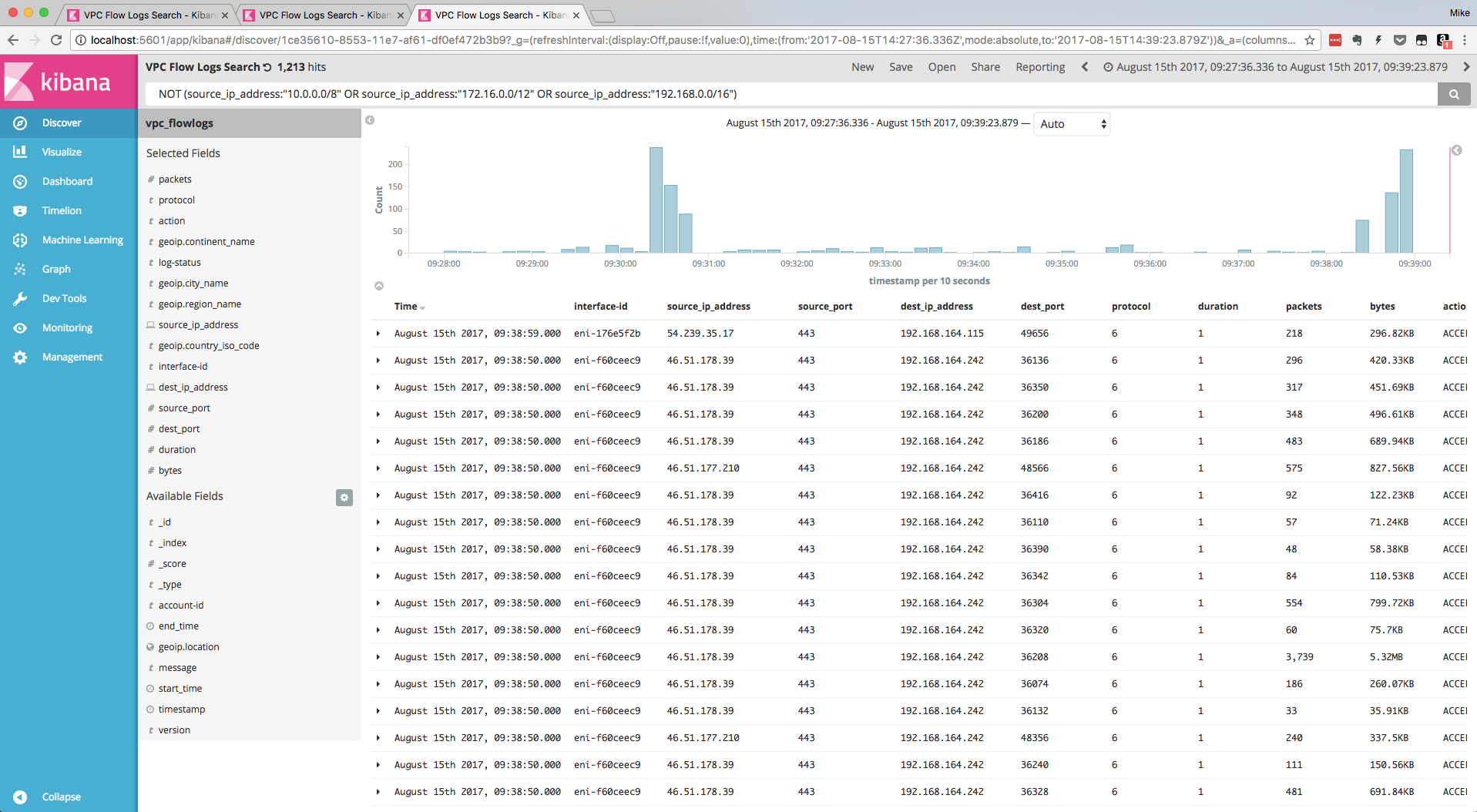

source_ip_address:"10.0.0.0/8" OR source_ip_address:"172.16.0.0/12" OR source_ip_address:"192.168.0.0/16"- Alternately, you can search for all traffic initiated by Public IP addresses in the logs:

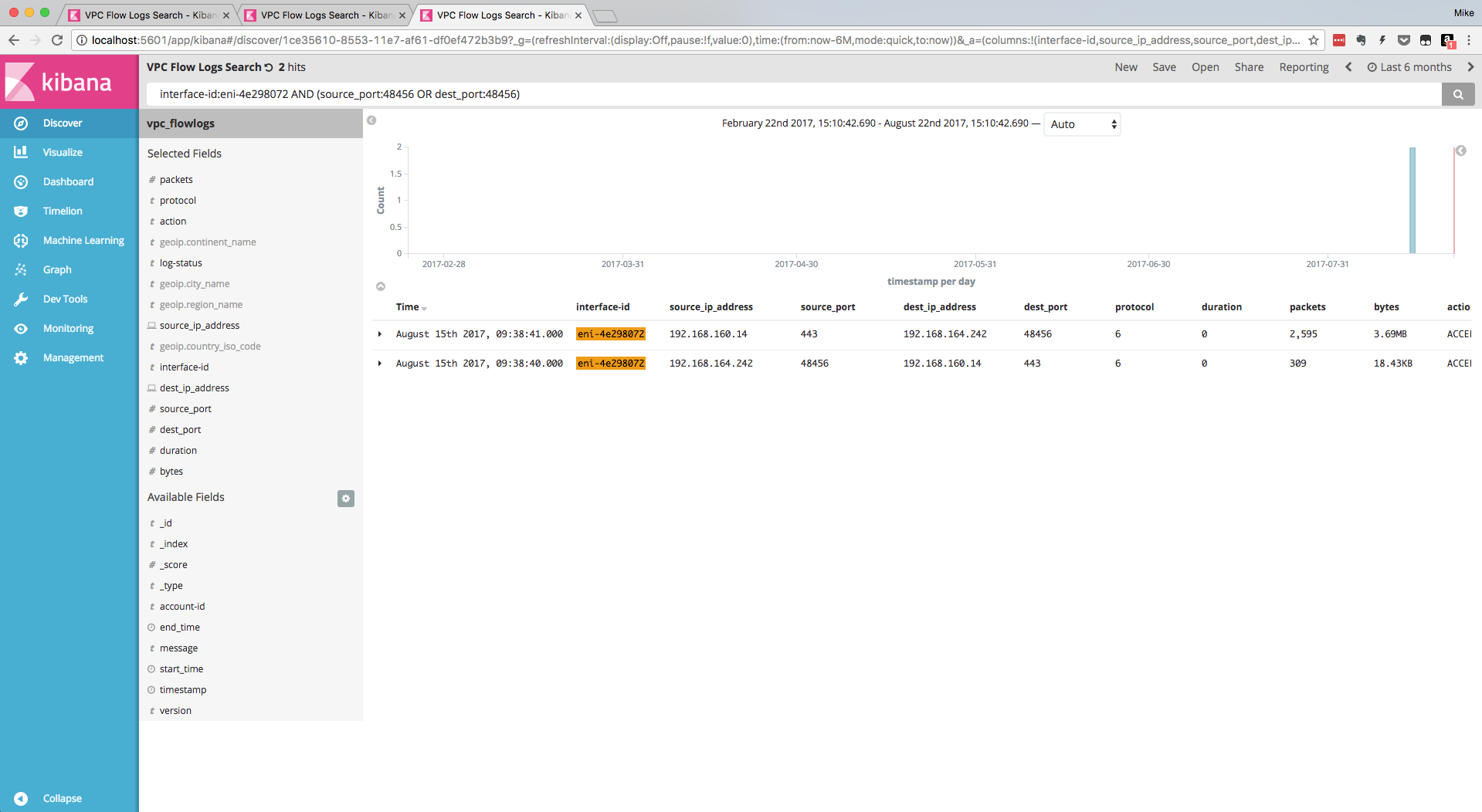

NOT (source_ip_address:"10.0.0.0/8" OR source_ip_address:"172.16.0.0/12" OR source_ip_address:"192.168.0.0/16")- Search for a specific flow to/from a specific ENI:

interface-id:<eni-name> AND (source_port:<port> OR dest_port:<port>)- Note: VPC Flow Logs split a flow into two log entries, so the above search will find both sides of the flow and show packets / bytes for each
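To make the RFC1918 queries above concrete, the sketch below shows the same membership test in Python using the standard `ipaddress` module. It only illustrates which addresses the three CIDR ranges cover; it is not how Elasticsearch evaluates the query, and the `is_rfc1918` helper is a name invented for this example.

```python
import ipaddress

# The three private ranges used in the Kibana queries above.
RFC1918_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def is_rfc1918(address):
    """Return True if the address falls inside one of the RFC1918 ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in RFC1918_NETWORKS)


# Split a few sample source addresses into private and public groups.
samples = ["10.1.2.3", "172.31.255.255", "172.32.0.1", "192.168.1.1", "8.8.8.8"]
private = [a for a in samples if is_rfc1918(a)]
public = [a for a in samples if not is_rfc1918(a)]
print("private:", private)
print("public:", public)
```

Note that `172.32.0.1` lands in the public group: the `/12` range ends at `172.31.255.255`, which is easy to misjudge when reading the query by eye.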