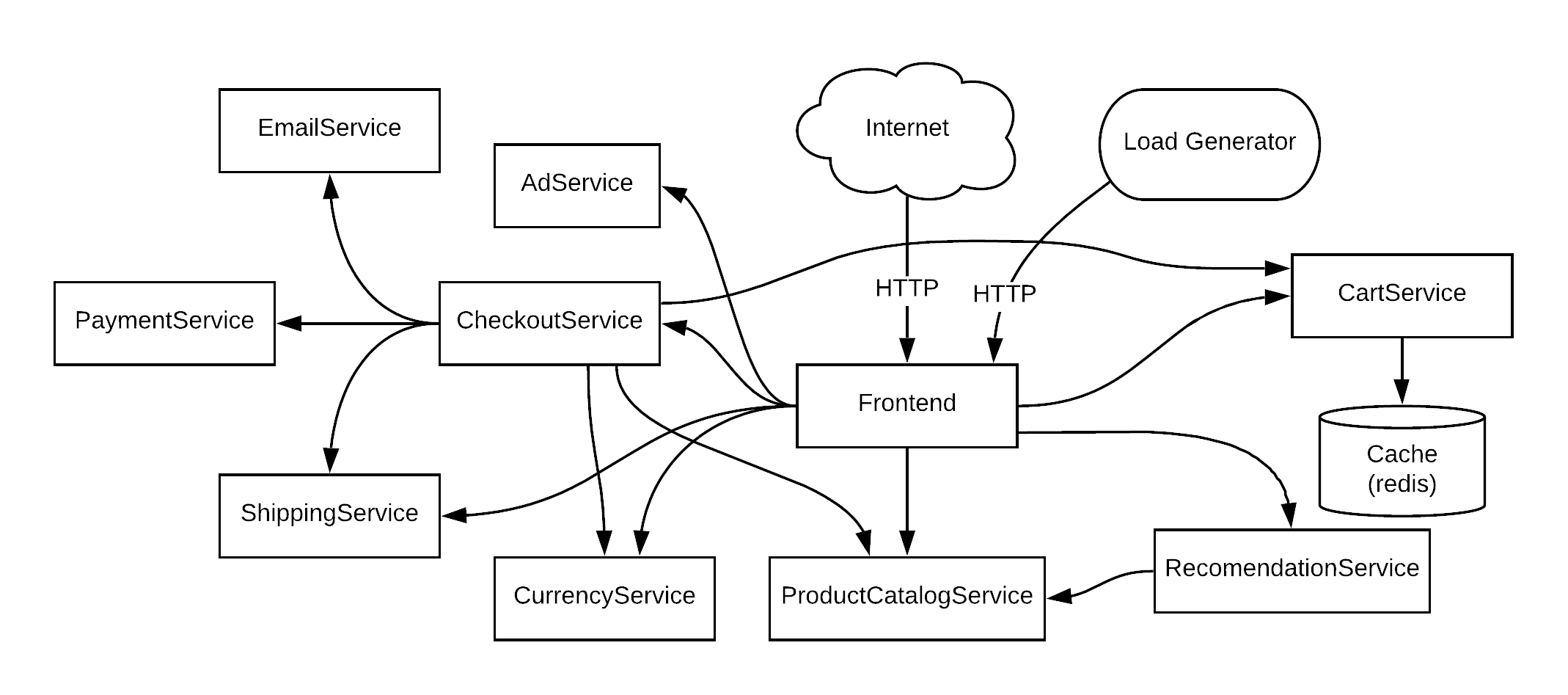

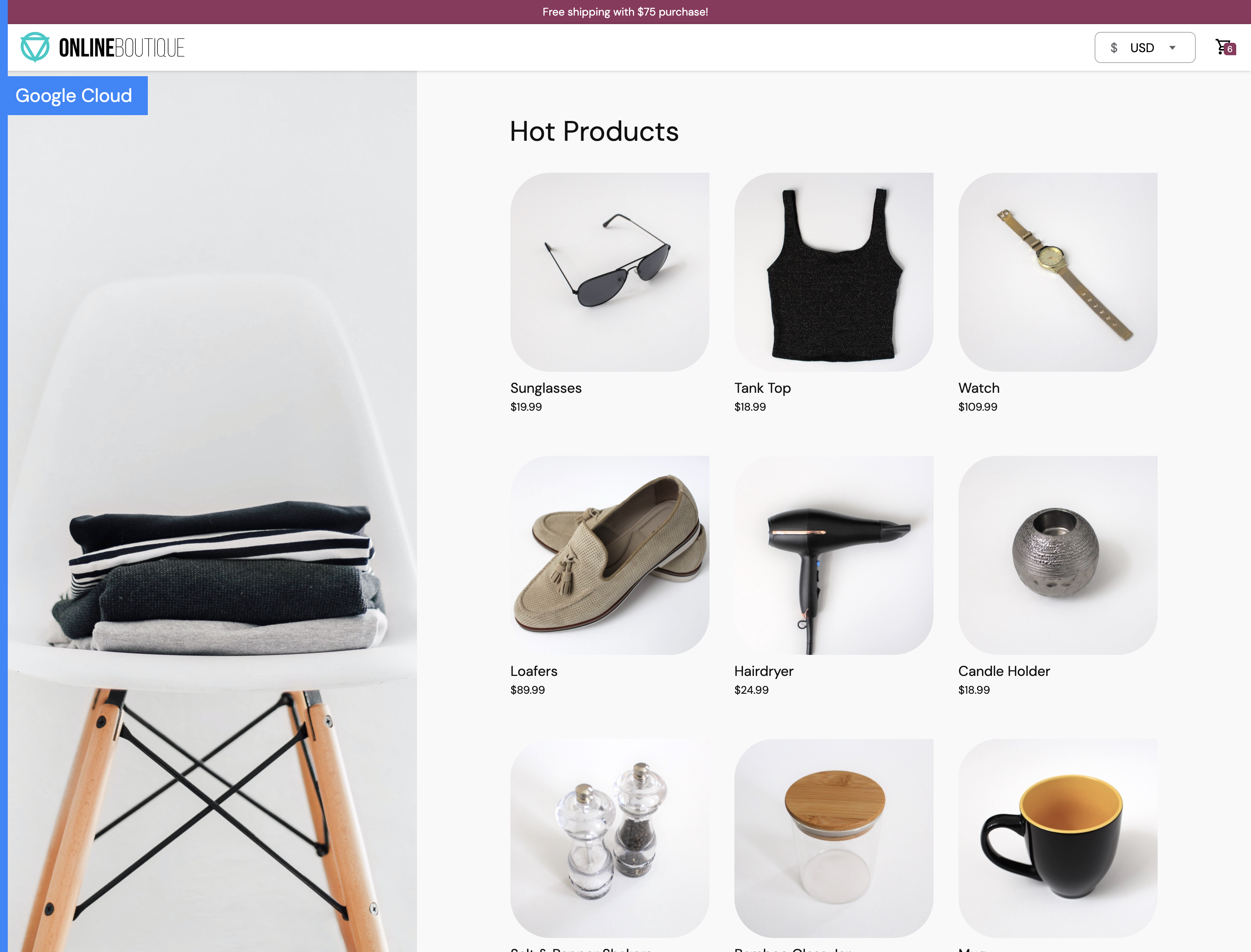

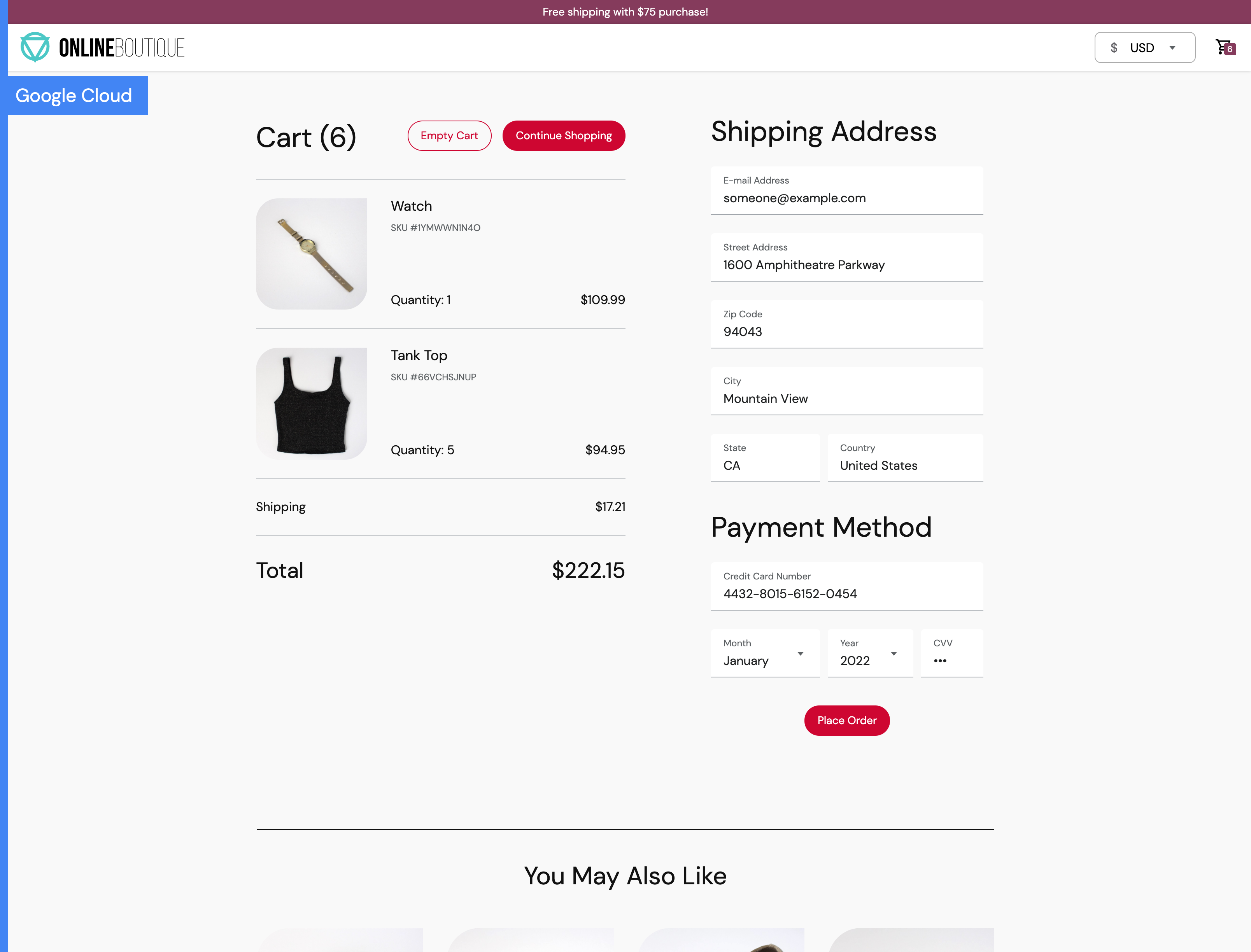

This repository explains how to deploy various CNCF projects to properly observe your Kubernetes cluster. It is based on the popular demo platform provided by Google: the Online Boutique.

Online Boutique is a cloud-native microservices demo application. It consists of a 10-tier microservices application: a web-based e-commerce app where users can browse items, add them to the cart, and purchase them. The Google HipsterShop is a microservice architecture using several languages:

- Go

- Python

- Nodejs

- C#

- Java

| Home Page | Checkout Screen |
|---|---|

The following tools need to be installed on your machine:

- jq

- kubectl

- git

- gcloud (if you are using GKE)

- Helm

First of all, build the demo image:

```shell
make build
```

Then, run the demo:

```shell
make run
```

You will first need a Kubernetes cluster with at least 2 nodes. You can either deploy on Minikube or K3s, or follow the instructions below to create a GKE cluster:

```shell
PROJECT_ID="<your-project-id>"

gcloud services enable container.googleapis.com --project ${PROJECT_ID}

gcloud services enable monitoring.googleapis.com \
  cloudtrace.googleapis.com \
  clouddebugger.googleapis.com \
  cloudprofiler.googleapis.com \
  --project ${PROJECT_ID}

ZONE=us-central1-b

gcloud container clusters create onlineboutique \
  --project=${PROJECT_ID} --zone=${ZONE} \
  --machine-type=e2-standard-2 --num-nodes=4
```
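`gcloud container clusters create` normally configures `kubectl` for the new cluster already. The following is a minimal sketch for confirming the cluster is usable before installing anything; it assumes the cluster name and the `PROJECT_ID`/`ZONE` variables above, and the helper names and the default threshold of 2 nodes are hypothetical, not part of this repository.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of the repository) to check the cluster.

get_cluster_credentials() {
  # Only needed from another machine or shell: writes a kubeconfig entry
  # for the cluster and makes it the current context.
  gcloud container clusters get-credentials onlineboutique \
    --project="${PROJECT_ID}" --zone="${ZONE}"
}

count_ready_nodes() {
  # Node lines look like "<name> <status> <roles> <age> <version>";
  # count only those whose STATUS column is exactly "Ready".
  kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

check_min_nodes() {
  # Assumed default: at least 2 Ready nodes, as stated above.
  local min="${1:-2}" ready
  ready="$(count_ready_nodes)"
  if [ "$ready" -lt "$min" ]; then
    echo "only ${ready} Ready node(s), need at least ${min}" >&2
    return 1
  fi
  echo "${ready} Ready node(s)"
}
```

For example, `check_min_nodes 2` prints the number of Ready nodes and returns non-zero if there are fewer than two.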

```shell
git clone https://github.com/observe-k8s/Observe-k8s-demo
cd Observe-k8s-demo
```

```shell
helm upgrade --install ingress-nginx ingress-nginx \
  --repo https://kubernetes.github.io/ingress-nginx \
  --namespace ingress-nginx --create-namespace
```

This command installs the NGINX ingress controller on the nodes that have the `observability` label.

Since we use an Ingress controller to route the traffic, we need the public IP address of the ingress. With this IP, we can update the ingress definitions for:

- hipstershop

- grafana

- K6

```shell
IP=$(kubectl get svc ingress-nginx-controller -n ingress-nginx -ojson | jq -j '.status.loadBalancer.ingress[].ip')
```
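On a freshly created cluster the cloud load balancer can take a minute or two to get an external IP, and the command above then returns an empty string. A minimal sketch of a polling alternative follows; the helper name, retry count, and delay are assumptions, and it reads `.ip`, which GKE populates (some providers, such as AWS, publish a `.hostname` instead).

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll until the ingress-nginx controller Service has
# an external IP. Retry count and delay (seconds) are assumed defaults.

wait_for_ingress_ip() {
  local retries="${1:-30}" delay="${2:-10}" ip="" i=0
  while [ "$i" -lt "$retries" ]; do
    # jsonpath prints nothing while the LoadBalancer is still <pending>
    ip="$(kubectl get svc ingress-nginx-controller -n ingress-nginx \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null || true)"
    if [ -n "$ip" ]; then
      printf '%s\n' "$ip"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "no external IP after ${retries} attempts" >&2
  return 1
}
```

Usage: `IP=$(wait_for_ingress_ip)` sets the same `IP` variable as the one-shot command, but only once an address exists.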

Then update the ingress definitions in the following files:

```shell
sed -i "s,IP_TO_REPLACE,$IP," kubernetes-manifests/k8s-manifest.yaml
sed -i "s,IP_TO_REPLACE,$IP," grafana/ingress.yaml
```
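`sed` exits successfully even when it replaces nothing, so a misspelled placeholder or an empty variable goes unnoticed until a manifest is applied. A small, hypothetical `check_placeholders` helper (not part of the repository) can flag files that still contain a placeholder; the regular expression is an assumption meant to cover the placeholder names used in this guide, including the misspelled `PROMETHEUS_SVC_TOREPALCE`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print any remaining placeholder lines (with line
# numbers) and return non-zero if at least one file still has one.

check_placeholders() {
  local status=0 f
  for f in "$@"; do
    # Matches IP_TO_REPLACE, *_TOREPLACE and *_TOREPALCE
    if grep -En '_TO_?RE(PLACE|PALCE)' "$f"; then
      echo "unreplaced placeholder(s) in $f" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example: `check_placeholders kubernetes-manifests/k8s-manifest.yaml grafana/ingress.yaml`.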

```shell
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update
# The Grafana sidecar settings belong to the chart's "grafana" subchart
helm install prometheus prometheus-community/kube-prometheus-stack \
  --set grafana.sidecar.datasources.enabled=true \
  --set grafana.sidecar.datasources.label=grafana_datasource \
  --set grafana.sidecar.datasources.labelValue="1" \
  --set grafana.sidecar.dashboards.enabled=true
```

To measure the impact of our experiments on user traffic, we will use the load-testing tool K6. K6 has a Prometheus integration that writes metrics to the Prometheus server through remote write. This integration requires enabling the remote-write receiver feature in Prometheus (`remote-write-receiver`).

To enable this feature, we need to edit the `Prometheus` custom resource, which holds all of Prometheus's settings.

To get the name of the `Prometheus` object created by the Helm chart, run the following command:

```shell
kubectl get Prometheus
```

Here is the expected output:

```
NAME                                    VERSION   REPLICAS   AGE
prometheus-kube-prometheus-prometheus   v2.32.1   1          22h
```

We need to add an extra property to the object's `spec`:

```yaml
enableFeatures:
- remote-write-receiver
```

To update the object, run:

```shell
kubectl edit Prometheus prometheus-kube-prometheus-prometheus
```
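If you prefer a non-interactive step (for example in a script), a JSON merge patch can set the same field. This is a sketch assuming the object name shown above; the helper name is hypothetical. Custom resources do not support strategic merge patches, hence `--type merge`, and a merge patch replaces the whole `enableFeatures` list, so any flags already present there would be overwritten.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set spec.enableFeatures on the Prometheus custom
# resource without opening an editor. Replaces the entire list.

enable_remote_write_receiver() {
  local name="${1:-prometheus-kube-prometheus-prometheus}"
  kubectl patch prometheus "$name" --type merge \
    -p '{"spec":{"enableFeatures":["remote-write-receiver"]}}'
}
```

After the patch, the Prometheus Operator should reconcile the change and restart the Prometheus pod with the feature enabled.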

After the update, your Prometheus object should look like this:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  annotations:
    meta.helm.sh/release-name: prometheus
    meta.helm.sh/release-namespace: default
  generation: 2
  labels:
    app: kube-prometheus-stack-prometheus
    app.kubernetes.io/instance: prometheus
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/part-of: kube-prometheus-stack
    app.kubernetes.io/version: 30.0.1
    chart: kube-prometheus-stack-30.0.1
    heritage: Helm
    release: prometheus
  name: prometheus-kube-prometheus-prometheus
  namespace: default
spec:
  alerting:
    alertmanagers:
    - apiVersion: v2
      name: prometheus-kube-prometheus-alertmanager
      namespace: default
      pathPrefix: /
      port: http-web
  enableAdminAPI: false
  enableFeatures:
  - remote-write-receiver
  externalUrl: http://prometheus-kube-prometheus-prometheus.default:9090
  image: quay.io/prometheus/prometheus:v2.32.1
  listenLocal: false
  logFormat: logfmt
  logLevel: info
  paused: false
  podMonitorNamespaceSelector: {}
  podMonitorSelector:
    matchLabels:
      release: prometheus
  portName: http-web
  probeNamespaceSelector: {}
  probeSelector:
    matchLabels:
      release: prometheus
  replicas: 1
  retention: 10d
  routePrefix: /
  ruleNamespaceSelector: {}
  ruleSelector:
    matchLabels:
      release: prometheus
  securityContext:
    fsGroup: 2000
    runAsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-kube-prometheus-prometheus
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector:
    matchLabels:
      release: prometheus
  shards: 1
  version: v2.32.1
```

```shell
kubectl apply -f prometheus/PrometheusRule.yaml
kubectl create secret generic addtional-scrape-configs --from-file=prometheus/additionnalscrapeconfig.yaml
kubectl apply -f prometheus/Prometheus.yaml
```

```shell
PROMETHEUS_SERVER=$(kubectl get svc -l app=kube-prometheus-stack-prometheus -o jsonpath="{.items[0].metadata.name}")
GRAFANA_SERVICE=$(kubectl get svc -l app.kubernetes.io/name=grafana -o jsonpath="{.items[0].metadata.name}")
ALERT_MANAGER_SVC=$(kubectl get svc -l app=kube-prometheus-stack-alertmanager -o jsonpath="{.items[0].metadata.name}")
```
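Each of these lookups silently yields an empty variable if no Service matches the label (for example, if the Helm release has a different name), and the later `sed` commands would then write empty service names into the manifests. A minimal sketch of a stricter lookup, using a hypothetical `svc_by_label` helper that keeps the same `items[0]` behaviour as the commands above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: return the first Service matching a label selector,
# or fail with a message instead of returning an empty string.

svc_by_label() {
  local selector="$1" namespace="${2:-default}" name
  name="$(kubectl get svc -l "$selector" -n "$namespace" \
    -o jsonpath='{.items[0].metadata.name}' 2>/dev/null || true)"
  if [ -z "$name" ]; then
    echo "no Service matching '$selector' in namespace '$namespace'" >&2
    return 1
  fi
  printf '%s\n' "$name"
}
```

For example: `PROMETHEUS_SERVER=$(svc_by_label app=kube-prometheus-stack-prometheus) || exit 1`.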

```shell
kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.6.1/cert-manager.yaml
kubectl get svc -n cert-manager
```

After a few minutes, you should see:

```
NAME                   TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
cert-manager           ClusterIP   10.99.253.6     <none>        9402/TCP   42h
cert-manager-webhook   ClusterIP   10.99.253.123   <none>        443/TCP    42h
```
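The OpenTelemetry operator relies on cert-manager for its webhook certificates, so installing it before cert-manager is ready can fail. Instead of waiting "a few minutes" by eye, `kubectl wait` can block until every Deployment in a namespace is Available. The helper name and the 300s default timeout below are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until all Deployments in a namespace report
# the Available condition, or until the timeout expires.

wait_for_namespace() {
  local namespace="$1" timeout="${2:-300s}"
  kubectl wait --for=condition=Available deployment --all \
    -n "$namespace" --timeout="$timeout"
}
```

For example, run `wait_for_namespace cert-manager` before applying the operator manifest. The same helper can wait for the operator itself, assuming it is installed in the `opentelemetry-operator-system` namespace.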

```shell
kubectl apply -f https://github.com/open-telemetry/opentelemetry-operator/releases/latest/download/opentelemetry-operator.yaml
```

```shell
helm repo add grafana https://grafana.github.io/helm-charts
helm repo update

kubectl create ns tempo
helm upgrade --install tempo grafana/tempo --namespace tempo

TEMPO_SERICE_NAME=$(kubectl get svc -l app.kubernetes.io/instance=tempo -n tempo -o jsonpath="{.items[0].metadata.name}")
sed -i "s,TEMPO_SERVICE_TOREPLACE,$TEMPO_SERICE_NAME," kubernetes-manifests/openTelemetry-manifest.yaml
sed -i "s,PROM_SERVICE_TOREPLACE,$PROMETHEUS_SERVER," kubernetes-manifests/openTelemetry-manifest.yaml

CLUSTERID=$(kubectl get namespace kube-system -o jsonpath='{.metadata.uid}')
sed -i "s,CLUSTER_ID_TOREPLACE,$CLUSTERID," kubernetes-manifests/openTelemetry-manifest.yaml
```
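The `kube-system` namespace UID is used above as a stable cluster identifier: it is set when the cluster is created and does not change afterwards. Kubernetes UIDs are UUID strings, so a sketch like the following (hypothetical helper, not part of the repository) can refuse to write an empty or unexpected value into the collector manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the kube-system namespace UID and check that it
# looks like a lowercase UUID before using it as the cluster ID.

get_cluster_id() {
  local uid
  uid="$(kubectl get namespace kube-system -o jsonpath='{.metadata.uid}' 2>/dev/null || true)"
  if ! printf '%s' "$uid" | grep -Eq '^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$'; then
    echo "unexpected kube-system UID: '$uid'" >&2
    return 1
  fi
  printf '%s\n' "$uid"
}
```

For example: `CLUSTERID=$(get_cluster_id) || exit 1`.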

```shell
helm install fluent-operator --create-namespace -n kubesphere-logging-system https://github.com/fluent/fluent-operator/releases/download/v1.0.0/fluent-operator.tgz
```

```shell
kubectl create ns loki
helm upgrade --install loki grafana/loki --namespace loki
kubectl wait pod -n loki -l app=loki --for=condition=Ready --timeout=2m

LOKI_SERVICE=$(kubectl get svc -l app=loki -n loki -o jsonpath="{.items[0].metadata.name}")
sed -i "s,LOKI_SERVICE_TOREPLACE,$LOKI_SERVICE," fluent/ClusterOutput_loki.yaml

kubectl apply -f fluent/fluentbit_deployment.yaml -n kubesphere-logging-system
kubectl apply -f fluent/ClusterOutput_loki.yaml -n kubesphere-logging-system
```

```shell
kubectl create namespace kubecost
helm repo add kubecost https://kubecost.github.io/cost-analyzer/
helm install kubecost kubecost/cost-analyzer --namespace kubecost \
  --set kubecostToken="aGVucmlrLnJleGVkQGR5bmF0cmFjZS5jb20=xm343yadf98" \
  --set prometheus.kube-state-metrics.disabled=true \
  --set prometheus.nodeExporter.enabled=false \
  --set ingress.enabled=true \
  --set ingress.hosts[0]="kubecost.$IP.nip.io" \
  --set global.grafana.enabled=false \
  --set global.grafana.fqdn="http://$GRAFANA_SERVICE.default.svc" \
  --set prometheusRule.enabled=true \
  --set global.prometheus.fqdn="http://$PROMETHEUS_SERVER.default.svc:9090" \
  --set global.prometheus.enabled=false \
  --set serviceMonitor.enabled=true

sed -i "s,IP_TO_REPLACE,$IP," kubecost/kubecost_ingress.yaml
kubectl apply -f kubecost/kubecost_ingress.yaml -n kubecost

sed -i "s,ALERT_MANAGER_TOREPLACE,$ALERT_MANAGER_SVC," kubecost/kubecost_cm.yaml
sed -i "s,PROMETHEUS_SVC_TOREPALCE,$PROMETHEUS_SERVER," kubecost/kubecost_cm.yaml
sed -i "s,GRAFANA_SERICE_TOREPLACE,$GRAFANA_SERVICE," kubecost/kubecost_nginx_cm.yaml
kubectl apply -n kubecost -f kubecost/kubecost_cm.yaml
kubectl apply -n kubecost -f kubecost/kubecost_nginx_cm.yaml
kubectl delete pod -n kubecost -l app=cost-analyzer
```

```shell
echo "adding the various datasource in Grafana"
sed -i "s,LOKI_TO_REPLACE,$LOKI_SERVICE," grafana/prometheus-datasource.yaml
sed -i "s,TEMPO_TO_REPLACE,$TEMPO_SERICE_NAME," grafana/prometheus-datasource.yaml
kubectl apply -f grafana/prometheus-datasource.yaml

kubectl apply -f kubernetes-manifests/openTelemetry-manifest.yaml
```

```shell
kubectl create ns hipster-shop
sed -i "s,PROMETHEUS_SVC_TOREPALCE,$PROMETHEUS_SERVER," kubernetes-manifests/k8s-manifest.yaml
kubectl apply -f kubernetes-manifests/k8s-manifest.yaml -n hipster-shop
```
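To tell when the Online Boutique has settled, a sketch like the following lists the pods whose phase is not yet `Running`, using a standard `status.phase` field selector. The helper name is hypothetical, and note that `Running` does not guarantee the containers are Ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print pods that are not in the Running phase and
# return non-zero while any remain.

pending_pods() {
  local namespace="${1:-hipster-shop}" pods
  # kubectl prints "No resources found" on stderr when nothing matches
  pods="$(kubectl get pods -n "$namespace" \
    --field-selector=status.phase!=Running --no-headers 2>/dev/null || true)"
  if [ -n "$pods" ]; then
    printf '%s\n' "$pods"
    return 1
  fi
  echo "all pods in ${namespace} are Running"
}
```

For example: `until pending_pods >/dev/null; do sleep 10; done`.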

```shell
gcloud container clusters delete onlineboutique \
  --project=${PROJECT_ID} --zone=${ZONE}
```

Online Boutique is composed of 11 microservices written in different languages that talk to each other over gRPC. See the Development Principles doc for more information.

Protocol Buffers descriptions can be found in the `./pb` directory.

| Service | Language | Description |
|---|---|---|
| frontend | Go | Exposes an HTTP server to serve the website. Does not require signup/login and generates session IDs for all users automatically. |
| cartservice | C# | Stores the items in the user's shopping cart in Redis and retrieves it. |
| productcatalogservice | Go | Provides the list of products from a JSON file and ability to search products and get individual products. |
| currencyservice | Node.js | Converts one money amount to another currency. Uses real values fetched from European Central Bank. It's the highest QPS service. |
| paymentservice | Node.js | Charges the given credit card info (mock) with the given amount and returns a transaction ID. |
| shippingservice | Go | Gives shipping cost estimates based on the shopping cart. Ships items to the given address (mock). |
| emailservice | Python | Sends users an order confirmation email (mock). |
| checkoutservice | Go | Retrieves user cart, prepares order and orchestrates the payment, shipping and the email notification. |
| recommendationservice | Python | Recommends other products based on what's given in the cart. |
| adservice | Java | Provides text ads based on given context words. |
| loadgenerator | JS / K6 | Continuously sends requests imitating realistic user shopping flows to the frontend. |