Apache Airflow pipeline designed in accordance with the Sparkify dataset requirements.

This pipeline extracts data from S3 and loads it into AWS Redshift.

Index:

- Files and Requirements

- Schema

- How to Run

- udac_example_dag.py: Main DAG file

- Operators: Folder with custom Apache Airflow Python Operators

- create_tables.sql: SQL statements to create the staging and Star Schema tables.

- sql_qieries.py: Class with modularized SQL queries

For instructions on how to create and start an AWS Redshift cluster, please follow this GitHub repository: Datawharehouse with Redshift

- python3

- AWS Redshift

- Environment running Apache Airflow

- airflow

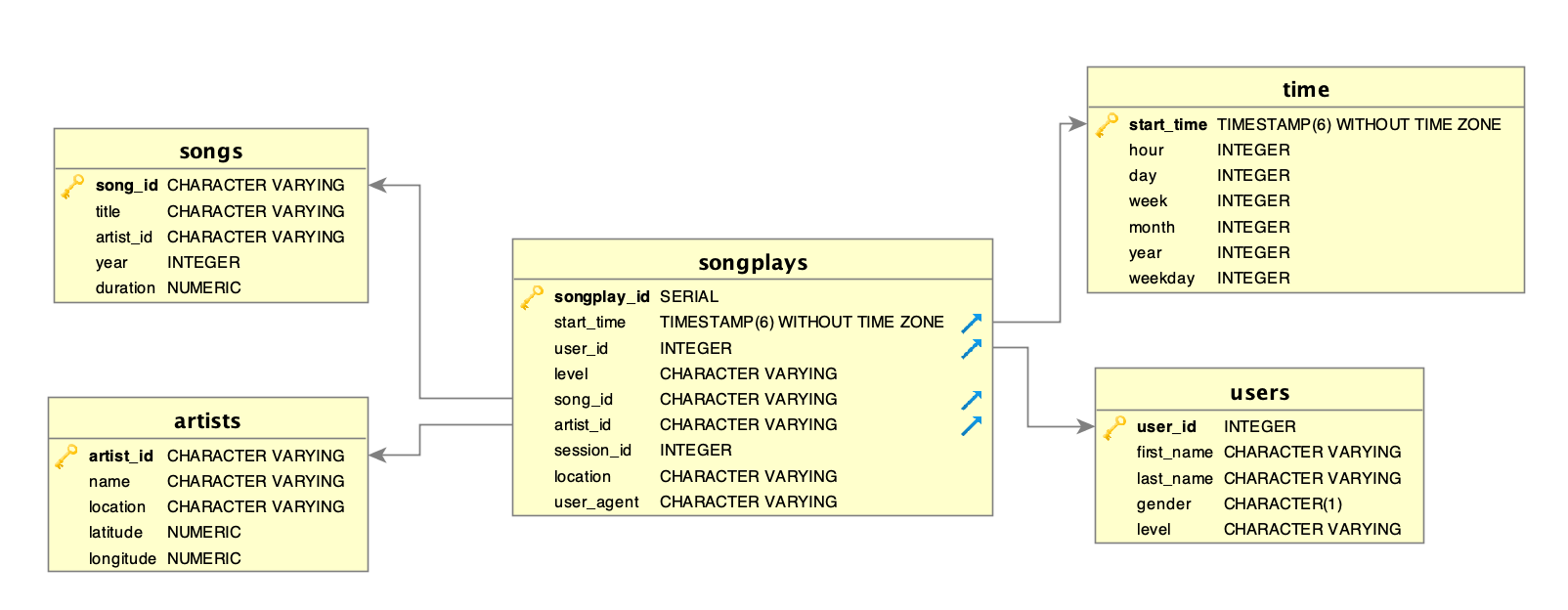

The star schema is the simplest style of data mart schema and is the approach most widely used to develop data warehouses and dimensional data marts. The star schema consists of one or more fact tables referencing any number of dimension tables. The star schema is an important special case of the snowflake schema, and is more effective for handling simpler queries.

Source: Wikipedia

- songplays - records in log data associated with song plays i.e. records with page NextSong.

- songplay_id, start_time, user_id, level, song_id, artist_id, session_id, location, user_agent.

- users - users in the app.

- user_id, first_name, last_name, gender, level

- songs - songs in music database.

- song_id, title, artist_id, year, duration

- artists - artists in music database

- artist_id, name, location, latitude, longitude.

- time - timestamps of records in songplays broken down into specific units.

- start_time, hour, day, week, month, year, weekday.
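As a rough illustration of how the songplays filter and the time dimension relate, the sketch below keeps only events whose page is NextSong and breaks each timestamp into the columns of the time table. It assumes each log event is a dict with a `page` field and a `ts` field in epoch milliseconds; the `ts` field name, the sample events, and the `time_row` helper are assumptions for illustration and are not taken from this repository's SQL.

```python
from datetime import datetime, timezone

# Hypothetical log events; the "ts" field (epoch milliseconds) is an assumption.
events = [
    {"page": "Home", "ts": 1541105830796},
    {"page": "NextSong", "ts": 1541106106796},
]


def time_row(ts_ms):
    """Break an epoch-millisecond timestamp into the time table columns."""
    start_time = datetime.fromtimestamp(ts_ms / 1000, tz=timezone.utc)
    return {
        "start_time": start_time,
        "hour": start_time.hour,
        "day": start_time.day,
        "week": start_time.isocalendar()[1],  # ISO week number
        "month": start_time.month,
        "year": start_time.year,
        "weekday": start_time.weekday(),  # Python convention: Monday = 0
    }


# Only song plays (page == "NextSong") feed the songplays and time tables.
next_song_events = [e for e in events if e.get("page") == "NextSong"]
time_rows = [time_row(e["ts"]) for e in next_song_events]

for row in time_rows:
    print(row)
```

Note that the weekday numbering here follows Python's convention; a SQL engine may number weekdays differently, so the values in the actual table can differ.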

a) Download the repository to a machine that has Apache Airflow installed.

b) Once you have created your Redshift Cluster, get the following parameters:

USER:

- IAM ARN ROLE

CLUSTER:

- Host

- DB Name

- DB User

- DB Password

- DB Port

You should also have at hand your AWS user details with programmatic access.

c) To start the Apache Airflow server UI, run the following command: /opt/airflow/start.sh

d) Create the Variables that will be used by the Hooks.

e) Turn the DAG ON and it should start executing according to the schedule.
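For steps b) and d), one possible way to hand the cluster parameters to Airflow is a connection URI, which Airflow can read from an environment variable named `AIRFLOW_CONN_<CONN_ID>`. The sketch below only builds such a URI with the standard library. The connection ID `redshift`, the helper name `redshift_conn_uri`, and every value shown are hypothetical placeholders; whether this repository's hooks expect that ID, or expect Airflow Variables instead, is not confirmed here.

```python
from urllib.parse import quote


def redshift_conn_uri(host, db_name, db_user, db_password, db_port=5439):
    """Build a Postgres-style URI from the cluster parameters in step b).

    User, password, and database name are percent-encoded so that special
    characters such as "@" or "/" do not break the URI.
    """
    return (
        f"postgres://{quote(db_user, safe='')}:{quote(db_password, safe='')}"
        f"@{host}:{db_port}/{quote(db_name, safe='')}"
    )


# Placeholder values only; replace them with your own cluster details.
uri = redshift_conn_uri(
    host="example-cluster.abc123.us-west-2.redshift.amazonaws.com",
    db_name="dev",
    db_user="awsuser",
    db_password="p@ss/word",
)

# "redshift" is an assumed connection ID, not one confirmed by this project.
print(f"export AIRFLOW_CONN_REDSHIFT='{uri}'")
```

The same values can instead be entered by hand in the Airflow UI; the URI form is mainly convenient when the environment is scripted.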

IMPORTANT: Make sure to delete your Redshift cluster and IAM client at the end, as leaving them in place incurs costs.

Helpful reference: https://github.com/shravan-kuchkula/