This repository contains the codebase for applying vision transformers to surface data. It is the official PyTorch implementation of Surface Vision Transformers: Attention-Based Modelling applied to Cortical Analysis, presented at the MIDL 2022 conference.

Here, the Surface Vision Transformer (SiT) is applied to cortical data for phenotype prediction.

V.1.1 - 12.02.24

Major codebase update - 12.02.24

- adding masked patch pretraining code to the codebase

- can be run simply with: `python pretrain.py ../config/SiT/pretraining/mpp.yml`

V.1.0 - 18.07.22

Major codebase update - 18.07.22

- birth age and scan age prediction tasks

- simplifying training script

- adding birth age prediction script

- simplifying preprocessing script

- single config file for tasks (scan age / birth age) and data configurations (template / native)

- adding mesh indices to extract non-overlapping triangular patches from a cortical mesh ico 6 sphere representation

V.0.2

Update - 25.05.22

- testing file and config

- installation guidelines

- data access

V.0.1

Initial commits - 12.10.21

- training script

- README

- config file for training

Connectome Workbench is free software for visualising neuroimaging data and can be used to visualise cortical metrics on surfaces. Downloads and instructions here.

For PyTorch and dependencies installation with conda, please follow instructions in install.md.

Coming soon

For docker support, please follow instructions in docker.md

Data used in this project comes from the dHCP dataset. Instructions for processing MRI scans and extracting cortical metrics can be found in S. Dahan et al 2021 and the references cited therein.

To simplify reproducibility of the work, the data has already been processed and can be accessed by following the guidelines below.

Cortical surface metrics already processed as in S. Dahan et al 2021 and A. Fawaz et al 2021 are available upon request.

How to access the processed data?

To access the data please:

- Sign in here

- Sign the dHCP open access agreement

- Forward the confirmation email to slcn.challenge@gmail.com

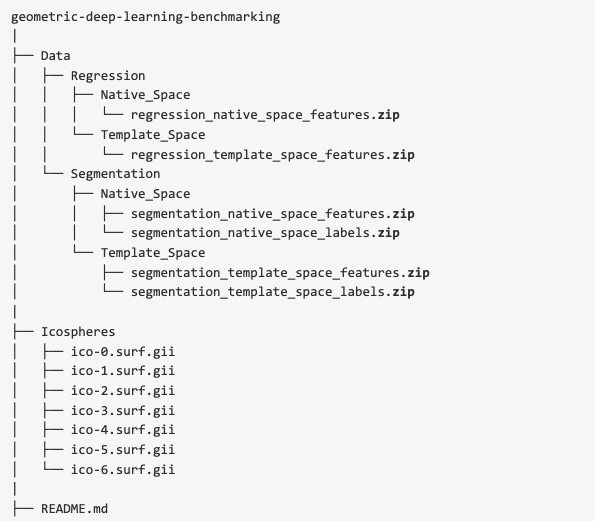

G-Node GIN repository

Once the confirmation has been sent, you will have access to the G-Node GIN repository containing the data already processed.

The data used for this project is in the zip files `regression_native_space_features.zip` and `regression_template_space_features.zip`. You also need to use the `ico-6.surf.gii` spherical mesh.

Training and validation sets are available for the task of birth-age and scan-age prediction, in template and native configuration.

However, the test set is not currently publicly available, as it is used as the testing set in the SLCN challenge on surface learning, held alongside the MLCN workshop at MICCAI 2022.

Once the data is accessible, further preparation steps are required to get right and left metrics files in the same orientation, before extracting the sequences of patches.

- Download zip files containing the cortical features: `regression_template_space_features.zip` and `regression_native_space_features.zip`. Unzip the files. Data is in the format `{uid}_{hemi}.shape.gii`.

- Download the `ico-6.surf.gii` spherical mesh from the G-Node GIN repository. This icosphere is by default set to a `CORTEX_RIGHT` structure in workbench.

- Rename the `ico-6.surf.gii` file as `ico-6.R.surf.gii`.

- Create a new sphere by symmetrising the right sphere using workbench. In bash:

wb_command -surface-flip-lr ico-6.R.surf.gii ico-6.L.surf.gii

- Then, set the structure of the new icosphere to `CORTEX_LEFT`. In bash:

wb_command -set-structure ico-6.L.surf.gii CORTEX_LEFT

- Use the new left sphere to resample all left metric files in the template and native data folder. In bash:

cd regression_template_space_features

for i in *L*; do wb_command -metric-resample ${i} ../ico-6.R.surf.gii ../ico-6.L.surf.gii BARYCENTRIC ${i}; done

and

cd regression_native_space_features

for i in *L*; do wb_command -metric-resample ${i} ../ico-6.R.surf.gii ../ico-6.L.surf.gii BARYCENTRIC ${i}; done

- Set the structure of the right metric files to CORTEX_LEFT, in both template and native data folder. In bash:

cd regression_template_space_features

for i in *R*; do wb_command -set-structure ${i} CORTEX_LEFT; done

and

cd regression_native_space_features

for i in *R*; do wb_command -set-structure ${i} CORTEX_LEFT; done

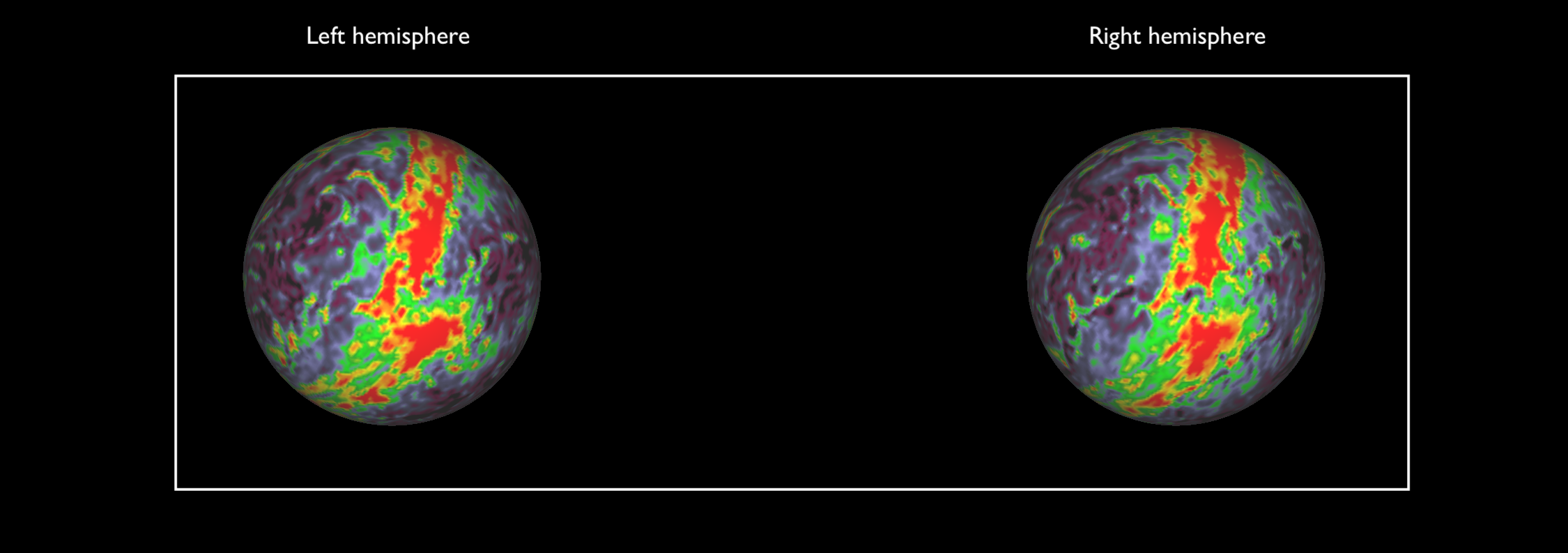

Example of left and right myelin maps after resampling

Once symmetrised, both left and right hemispheres have the same orientation when visualised on a left hemisphere template.
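The resampling and structure-setting loops above can also be driven from Python. The following is a minimal sketch and not part of the repository: the helper names (`split_hemisphere`, `build_commands`, `run_folder`) are hypothetical, it assumes every metric file follows the `{uid}_{hemi}.shape.gii` pattern with `hemi` equal to `L` or `R`, and it only assembles the same `wb_command` calls shown above so they can be inspected before being run.

```python
import subprocess
from pathlib import Path

SUFFIX = ".shape.gii"


def split_hemisphere(filename):
    """Split '{uid}_{hemi}.shape.gii' into (uid, hemi); return None if it does not match."""
    if not filename.endswith(SUFFIX):
        return None
    stem = filename[: -len(SUFFIX)]
    uid, sep, hemi = stem.rpartition("_")
    if not sep or not uid or hemi not in ("L", "R"):
        return None
    return uid, hemi


def build_commands(filenames, right_sphere="../ico-6.R.surf.gii",
                   left_sphere="../ico-6.L.surf.gii"):
    """Return one wb_command argument list per metric file (same calls as the bash loops)."""
    commands = []
    for name in sorted(filenames):
        parsed = split_hemisphere(name)
        if parsed is None:
            continue  # skip anything that is not a metric file
        _, hemi = parsed
        if hemi == "L":
            # Left metrics: resample from the right sphere to the flipped left sphere, in place.
            commands.append(["wb_command", "-metric-resample", name,
                             right_sphere, left_sphere, "BARYCENTRIC", name])
        else:
            # Right metrics: only the structure label is changed.
            commands.append(["wb_command", "-set-structure", name, "CORTEX_LEFT"])
    return commands


def run_folder(folder):
    """Run the commands inside one data folder (requires wb_command on the PATH)."""
    folder = Path(folder)
    names = [p.name for p in folder.iterdir() if p.is_file()]
    for cmd in build_commands(names):
        subprocess.run(cmd, cwd=folder, check=True)
```

Calling `run_folder("regression_template_space_features")` and then `run_folder("regression_native_space_features")` from the directory holding the two spheres would mirror the bash loops. Matching on the trailing `_L` / `_R` rather than a `*L*` glob is a design choice of this sketch, intended to avoid picking up files whose identifier happens to contain that letter.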

- Once this step is done, the preprocessing script can be used to prepare the training and validation numpy array files, per task (birth-age, scan-age) and data configuration (template, native).

In the YAML file config/preprocessing/hparams.yml, change the path to data, set the parameters and run the preprocessing.py script in ./tools:

cd tools

python preprocessing.py ../config/preprocessing/hparams.yml
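As a rough illustration of what extracting sequences of patches involves, the sketch below gathers per-vertex values into one feature vector per patch using a table of vertex indices. It does not reproduce `preprocessing.py`: the function name, the toy mesh size and the index table are made up for illustration, and the channel-by-channel ordering is an assumption. The real indices are the mesh indices added to the repository in V.1.0.

```python
def extract_patches(vertex_values, patch_indices):
    """Gather per-vertex values into a sequence of patches.

    vertex_values: one row per vertex, each row holding one value per
                   channel (e.g. myelin).
    patch_indices: list of patches, each a list of vertex ids.
    Returns one flat feature vector per patch, ordered channel by channel.
    """
    num_channels = len(vertex_values[0])
    sequence = []
    for patch in patch_indices:
        flat = []
        for c in range(num_channels):
            flat.extend(vertex_values[v][c] for v in patch)
        sequence.append(flat)
    return sequence


# Toy example: 6 vertices, 2 channels, 2 patches of 3 vertices each.
values = [[0.0, 10.0], [1.0, 11.0], [2.0, 12.0],
          [3.0, 13.0], [4.0, 14.0], [5.0, 15.0]]
patches = [[0, 1, 2], [3, 4, 5]]
sequence = extract_patches(values, patches)
print(len(sequence), len(sequence[0]))  # 2 patches, 6 features each
```

With array libraries the same gather is typically a single indexing operation; plain lists are used here only to keep the example dependency-free.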

For training a SiT model, use the following command:

python train.py ../config/SiT/training/hparams.yml

All hyperparameters for training and model design are set in the YAML file passed to the training command, config/SiT/training/hparams.yml, including:

- Transformer architecture

- Training strategy: from scratch, ImageNet or SSL weights

- Optimisation strategy

- Patching configuration

- Logging
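The layout of the YAML file is defined by the repository's config files and is not reproduced here. As a hedged sketch, a small check like the one below could catch a missing section before a long training run. The section names are hypothetical placeholders mirroring the list above, not the actual keys, and `config` stands in for the dictionary obtained by parsing the YAML file.

```python
# Hypothetical section names, one per category listed above; the real keys
# are whatever the repository's hparams.yml defines.
EXPECTED_SECTIONS = ("transformer", "training", "optimisation", "patching", "logging")


def missing_sections(config, expected=EXPECTED_SECTIONS):
    """Return the expected top-level sections absent from a parsed config dict."""
    return [name for name in expected if name not in config]


# `config` stands in for the dict obtained by parsing the YAML file.
config = {"transformer": {}, "training": {}, "patching": {}}
print(missing_sections(config))  # ['optimisation', 'logging']
```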

For testing a SiT model, please put the path of the SiT weights in /testing/hparams.yml and use the following command:

python test.py ../config/SiT/testing/hparams.yml

Coming soon

This codebase uses the vision transformer implementation from lucidrains/vit-pytorch and the pre-trained ViT models from the timm library.

Please cite these works if you find them useful:

Surface Vision Transformers: Attention-Based Modelling applied to Cortical Analysis

@InProceedings{pmlr-v172-dahan22a,

title = {Surface Vision Transformers: Attention-Based Modelling applied to Cortical Analysis},

author = {Dahan, Simon and Fawaz, Abdulah and Williams, Logan Z. J. and Yang, Chunhui and Coalson, Timothy S. and Glasser, Matthew F. and Edwards, A. David and Rueckert, Daniel and Robinson, Emma C.},

booktitle = {Proceedings of The 5th International Conference on Medical Imaging with Deep Learning},

pages = {282--303},

year = {2022},

editor = {Konukoglu, Ender and Menze, Bjoern and Venkataraman, Archana and Baumgartner, Christian and Dou, Qi and Albarqouni, Shadi},

volume = {172},

series = {Proceedings of Machine Learning Research},

month = {06--08 Jul},

publisher = {PMLR},

pdf = {https://proceedings.mlr.press/v172/dahan22a/dahan22a.pdf},

url = {https://proceedings.mlr.press/v172/dahan22a.html},

}