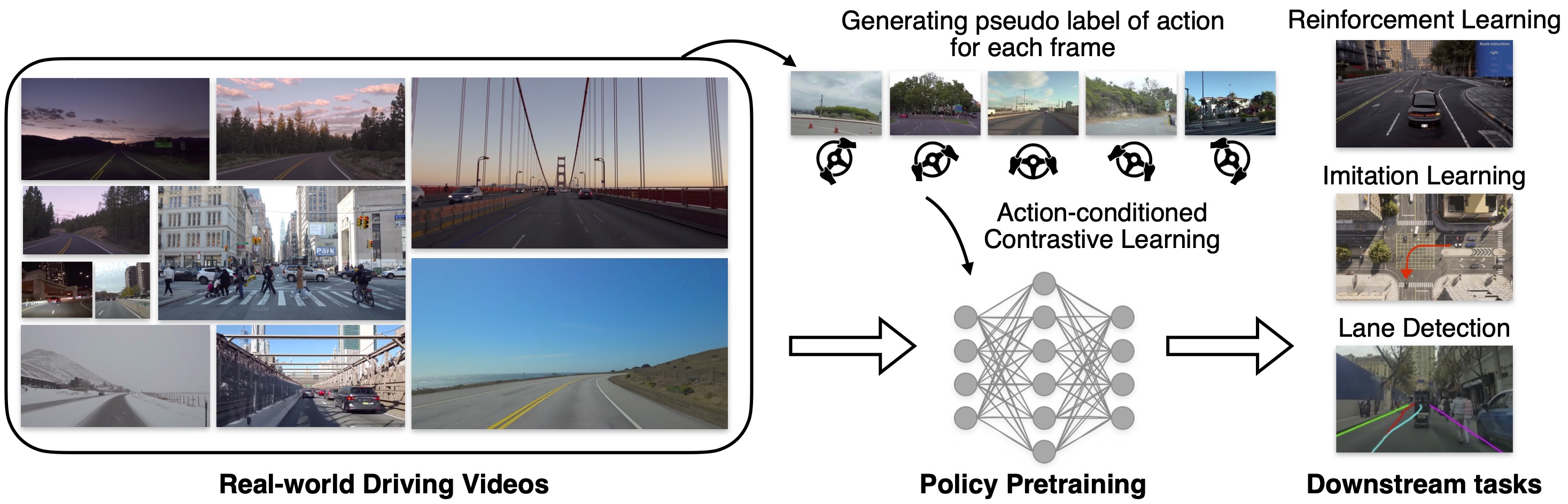

Learning to Drive by Watching YouTube videos: Action-Conditioned Contrastive Policy Pretraining (ECCV22)

Webpage | Code | Paper | YouTube Driving Dataset | Pretrained ResNet34

Our codebase is based on MoCo. A simple PyTorch environment will be enough (see MoCo's installation instructions).

We collect driving videos from YouTube. Here we provide the 🔗 video list we used. You can also download the frames directly via 🔗 this OneDrive link and run:

    cat sega* > frames.zip

to get the zip file.
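On systems without `cat`, the same reassembly can be sketched in Python. This is a minimal sketch, not part of the released code: the helper names `join_parts` and `check_zip` are hypothetical, and it assumes the downloaded parts sort into the correct order by file name, as they do for the shell glob `sega*`.

```python
import glob
import shutil
import zipfile


def join_parts(pattern: str, out_path: str) -> list:
    """Concatenate all files matching `pattern`, in sorted name order, into `out_path`."""
    # Exclude the output file itself in case it also matches the pattern.
    parts = sorted(p for p in glob.glob(pattern) if p != out_path)
    if not parts:
        raise FileNotFoundError(f"no files match {pattern!r}")
    with open(out_path, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                # Stream each part so large archives never sit fully in memory.
                shutil.copyfileobj(src, out)
    return parts


def check_zip(path: str) -> bool:
    """Return True if `path` is a readable zip whose members all pass their CRC check."""
    if not zipfile.is_zipfile(path):
        return False
    with zipfile.ZipFile(path) as zf:
        return zf.testzip() is None
```

Calling `join_parts("sega*", "frames.zip")` in the download directory and then `check_zip("frames.zip")` gives a quick integrity check before unzipping.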

For training ACO, you should also download label.pt and meta.txt, and put them under {aco_path}/code and {your_dataset_directory}/ respectively.
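Before launching training, it can help to confirm that both files are where the paragraph above says they should be. The following is a small sketch under that assumption only; `missing_training_files` is a hypothetical helper, and the two arguments stand in for `{aco_path}` and `{your_dataset_directory}`.

```python
from pathlib import Path


def missing_training_files(aco_path: str, dataset_dir: str) -> list:
    """Return the expected file paths that do not exist yet (empty list means all good)."""
    expected = [
        Path(aco_path) / "code" / "label.pt",   # goes under {aco_path}/code
        Path(dataset_dir) / "meta.txt",         # goes under {your_dataset_directory}/
    ]
    return [str(p) for p in expected if not p.is_file()]
```

An empty return value means both files were found; otherwise the list names what still needs to be downloaded or moved.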

We provide main_label_moco.py for training. To perform ACO training of a ResNet-34 model on an 8-GPU machine, run:

    python main_label_moco.py -a resnet34 --mlp -j 16 --lr 0.003 \
      --batch-size 256 --moco-k 40960 --dist-url 'tcp://localhost:10001' \
      --multiprocessing-distributed --world-size 1 --rank 0 {your_dataset_directory}

Some important arguments:

- `--aug_cf`: whether to use cropping and flipping augmentations in pre-training. In ACO, we do not use these two augmentations by default.
- `--thres`: action similarity threshold.
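To give an intuition for what an action similarity threshold does, here is a minimal sketch of how frames could be grouped into positive pairs by their action labels. It is an illustration only, not the logic of main_label_moco.py: it assumes each frame carries one scalar pseudo-action label, that similarity is the absolute difference between labels, and that a pair counts as positive when that difference is below the threshold. The function name and the sample values are hypothetical.

```python
from typing import List


def action_positive_mask(actions: List[float], thres: float) -> List[List[bool]]:
    """mask[i][j] is True when frames i and j have action labels closer than `thres`."""
    n = len(actions)
    return [
        # A frame is never paired with itself here; only distinct frames are compared.
        [i != j and abs(actions[i] - actions[j]) < thres for j in range(n)]
        for i in range(n)
    ]


# Hypothetical pseudo-labels (for example, normalized steering values) for four frames.
actions = [0.00, 0.03, 0.40, 0.42]
mask = action_positive_mask(actions, thres=0.05)
```

With these sample values, frames 0 and 1 form a positive pair, as do frames 2 and 3, while frames 0 and 2 do not. A larger threshold yields more positive pairs with looser agreement in actions, and a smaller one yields fewer but tighter pairs.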

We also provide a 🔗 pretrained ResNet34 checkpoint. After downloading, you can load this checkpoint via:

    import torch
    from torchvision.models import resnet34

    net = resnet34()
    # strict=False lets loading proceed when checkpoint and model keys do not match exactly
    net.load_state_dict(torch.load('ACO_resnet34.ckpt'), strict=False)

@article{zhang2022learning,

title={Learning to Drive by Watching YouTube videos: Action-Conditioned Contrastive Policy Pretraining},

author={Zhang, Qihang and Peng, Zhenghao and Zhou, Bolei},

journal={European Conference on Computer Vision (ECCV)},

year={2022}

}