- 🔥 09/2022: The extension to this work (ALADIN: Distilling Fine-grained Alignment Scores for Efficient Image-Text Matching and Retrieval) has been published in the proceedings of CBMI 2022. Check out the code and paper!

Code for the cross-modal visual-linguistic retrieval method from "Fine-grained Visual Textual Alignment for Cross-modal Retrieval using Transformer Encoders", accepted for publication in ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) [Pre-print PDF].

This work extends our previous approach, TERN, accepted at ICPR 2020.

This repo is built on top of VSE++ and TERN.

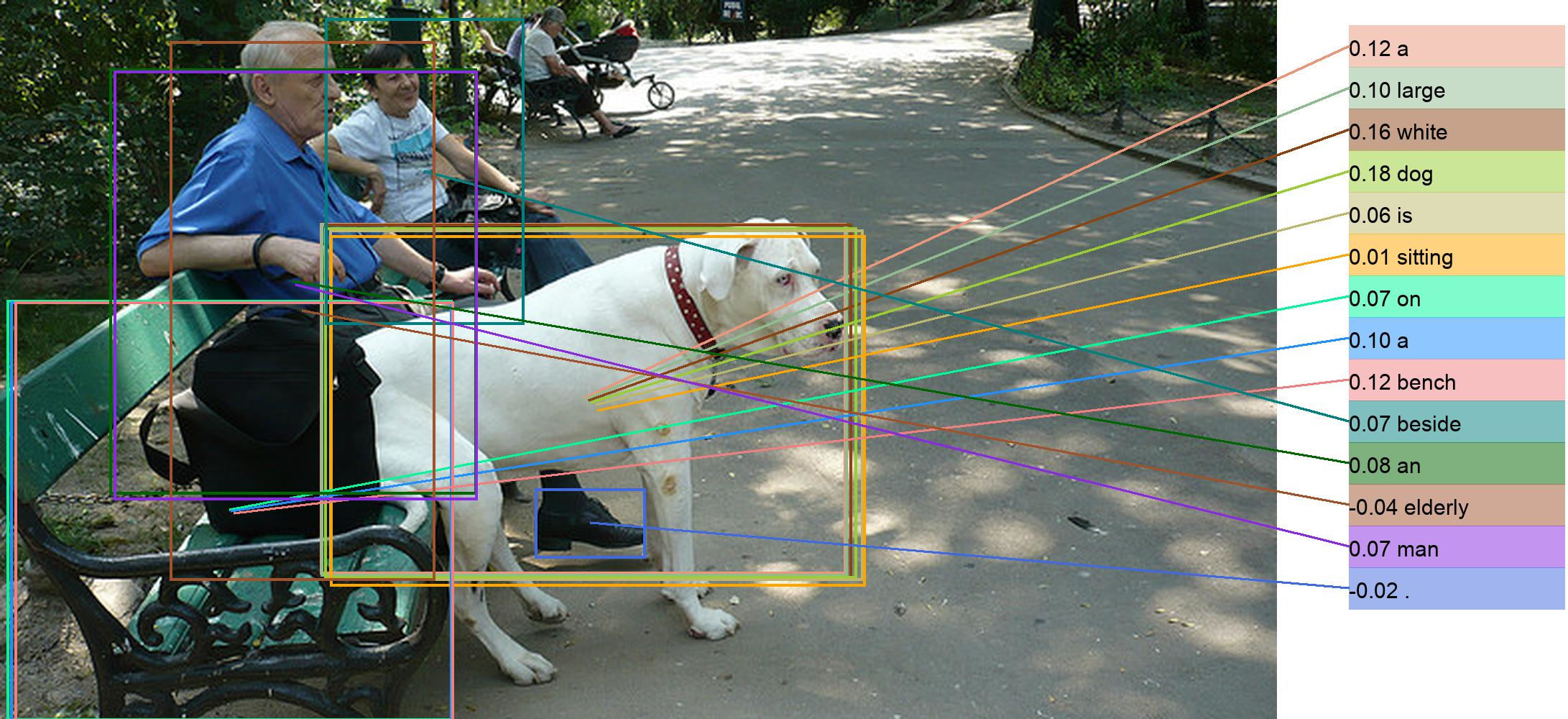

Fine-grained Alignment for Precise Matching

- Clone the repo and move into it:

```bash
git clone https://github.com/mesnico/TERAN
cd TERAN
```

- Set up the Python environment using conda:

```bash
conda env create --file environment.yml
conda activate teran
export PYTHONPATH=.
```

Data and pretrained models can be downloaded from this OneDrive link (see the steps below to understand which files you need):

- Download the data folder, which contains the annotations, the splits by Karpathy et al., and the precomputed ROUGE-L and SPICE relevances for both the COCO and Flickr30K datasets. Extract it:

```bash
tar -xvf data.tgz
```

- Download the bottom-up features for both COCO and Flickr30K. We used the code by Anderson et al. to extract them.

The following commands extract them under data/coco/ and data/f30k/. If you prefer another location, be sure to adjust the configuration file accordingly.

```bash
# for MS-COCO
tar -xvf features_36_coco.tgz -C data/coco

# for Flickr30k
tar -xvf features_36_f30k.tgz -C data/f30k
```
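After extraction, a quick check that the feature folders landed where the default configuration expects them can save a failed run. The sketch below is hypothetical: missing_dirs is not part of the repository, and while data/coco/features_36 is the default path named in this README, data/f30k/features_36 is only assumed by analogy.

```python
from pathlib import Path

# Assumed default locations. Only data/coco/features_36 is named in the
# README; data/f30k/features_36 is inferred by analogy and may differ.
EXPECTED_DIRS = [
    "data/coco/features_36",
    "data/f30k/features_36",
]


def missing_dirs(root=".", expected=EXPECTED_DIRS):
    """Return the expected directories that do not exist under `root`."""
    root = Path(root)
    return [d for d in expected if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing (adjust the configuration file if you used another location):")
        for d in missing:
            print("  " + d)
    else:
        print("All expected feature folders found.")
```

Run it from the repository root; any folder it reports is one whose path you will need to change in the configuration file.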

Extract our pre-trained TERAN models:

```bash
tar -xvf TERAN_pretrained_models.tgz
```
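To see which values can replace [model] in the evaluation commands, the extracted checkpoints can be listed. This is a minimal sketch assuming the archive extracts into ./pretrained_models (the folder the evaluation commands point to); list_pretrained_models is a hypothetical helper, not part of the repository.

```python
from pathlib import Path


def list_pretrained_models(model_dir="pretrained_models"):
    """Return the sorted names of the .pth files in `model_dir`.

    Returns an empty list if the folder does not exist.
    """
    return sorted(p.name for p in Path(model_dir).glob("*.pth"))


if __name__ == "__main__":
    for name in list_pretrained_models():
        # each name is a candidate for pretrained_models/[model].pth
        print(name)
```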

Then, issue the following commands to evaluate a given model on the 1k (5-fold cross-validation) or 5k test sets:

```bash
python3 test.py pretrained_models/[model].pth --size 1k
python3 test.py pretrained_models/[model].pth --size 5k
```
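To make the difference between the two settings concrete, the sketch below shows the Recall@K protocol commonly used in VSE++-style evaluation, on which this repo builds. It is not the repository's test.py. It assumes a similarity matrix with one row per image and 5 captions per image, caption j describing image j // 5; the 5k result uses the full matrix, while the 1k result averages over 5 consecutive folds of 1000 images. The helper names are hypothetical, and the code favors readability over speed (pure Python is far too slow for a real 5000 x 25000 matrix, where a vectorized argsort would be used).

```python
def recall_at_k(sims, ks=(1, 5, 10), caps_per_image=5):
    """Image-to-text and text-to-image Recall@K (in %) for a similarity matrix.

    sims[i][j] is the score between image i and caption j; caption j is
    assumed to describe image j // caps_per_image.
    """
    n_img, n_cap = len(sims), len(sims[0])
    i2t_ranks = []
    for i in range(n_img):
        order = sorted(range(n_cap), key=lambda j: -sims[i][j])
        # rank of the best-placed ground-truth caption for image i
        i2t_ranks.append(min(r for r, j in enumerate(order) if j // caps_per_image == i))
    t2i_ranks = []
    for j in range(n_cap):
        order = sorted(range(n_img), key=lambda i: -sims[i][j])
        # rank of the single ground-truth image for caption j
        t2i_ranks.append(order.index(j // caps_per_image))
    i2t = {k: 100.0 * sum(r < k for r in i2t_ranks) / n_img for k in ks}
    t2i = {k: 100.0 * sum(r < k for r in t2i_ranks) / n_cap for k in ks}
    return i2t, t2i


def five_fold_1k(sims, fold_size=1000, caps_per_image=5, ks=(1, 5, 10)):
    """Average Recall@K over consecutive folds of `fold_size` images.

    Each fold keeps only its own images and their captions; any leftover
    images beyond the last full fold are ignored.
    """
    n_folds = len(sims) // fold_size
    avg_i2t = {k: 0.0 for k in ks}
    avg_t2i = {k: 0.0 for k in ks}
    for f in range(n_folds):
        rows = sims[f * fold_size:(f + 1) * fold_size]
        c0, c1 = f * fold_size * caps_per_image, (f + 1) * fold_size * caps_per_image
        i2t, t2i = recall_at_k([row[c0:c1] for row in rows], ks, caps_per_image)
        for k in ks:
            avg_i2t[k] += i2t[k] / n_folds
            avg_t2i[k] += t2i[k] / n_folds
    return avg_i2t, avg_t2i
```

Because each 1k fold offers fewer distractors than the full 5k pool, 1k recalls are usually higher than 5k recalls for the same model.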

Note that if you changed some default paths (e.g., the features are in a folder other than data/coco/features_36), you need to use the --config option and provide the corresponding YAML configuration file containing the correct paths.
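One way to produce such a configuration file without knowing its exact keys is to rewrite every string value that starts with the old path prefix. This is a hypothetical sketch, not a repository tool: it assumes the configs are plain YAML readable by PyYAML's safe_load, and note that safe_dump drops comments and may reorder keys. A plain prefix match also rewrites values like "data/coco_other", so pick the prefix carefully.

```python
def relocate_paths(node, old_prefix, new_prefix):
    """Recursively replace `old_prefix` at the start of every string value."""
    if isinstance(node, dict):
        return {k: relocate_paths(v, old_prefix, new_prefix) for k, v in node.items()}
    if isinstance(node, list):
        return [relocate_paths(v, old_prefix, new_prefix) for v in node]
    if isinstance(node, str) and node.startswith(old_prefix):
        return new_prefix + node[len(old_prefix):]
    return node


def rewrite_config(src, dst, old_prefix, new_prefix):
    """Load a YAML config, relocate its paths, and save it to `dst`."""
    import yaml  # PyYAML, assumed to be installed in the environment

    with open(src) as f:
        cfg = yaml.safe_load(f)
    with open(dst, "w") as f:
        yaml.safe_dump(relocate_paths(cfg, old_prefix, new_prefix), f)
```

For example, rewrite_config applied to configs/[config].yaml with old prefix data/coco and your own folder as the new prefix yields a file you can pass to test.py through --config.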

To train the model with a given TERAN configuration, issue the following command:

```bash
python3 train.py --config configs/[config].yaml --logger_name runs/teran
```

The output files of this training session (TensorBoard logs and checkpoints) are stored in runs/teran.
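To pick up the most recent checkpoint from a run folder, a small helper can scan it by modification time. This is a sketch under an assumption: the "*.pth*" file pattern is a guess, so check the actual file names written to your run directory and adjust it; latest_checkpoint is a hypothetical helper, not part of the repository.

```python
from pathlib import Path


def latest_checkpoint(run_dir="runs/teran", pattern="*.pth*"):
    """Return the most recently modified file matching `pattern`, or None.

    The search is recursive; the default pattern is an assumption about
    how checkpoints are named.
    """
    candidates = [p for p in Path(run_dir).rglob(pattern) if p.is_file()]
    return max(candidates, key=lambda p: p.stat().st_mtime, default=None)
```

The TensorBoard logs in the same folder can be inspected with tensorboard --logdir runs/teran, assuming TensorBoard is installed in the environment.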

WIP

If you found this code useful, please cite the following paper:

```bibtex
@article{messina2021fine,
  title={Fine-grained visual textual alignment for cross-modal retrieval using transformer encoders},
  author={Messina, Nicola and Amato, Giuseppe and Esuli, Andrea and Falchi, Fabrizio and Gennaro, Claudio and Marchand-Maillet, St{\'e}phane},
  journal={ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM)},
  volume={17},
  number={4},
  pages={1--23},
  year={2021},
  publisher={ACM New York, NY}
}
```