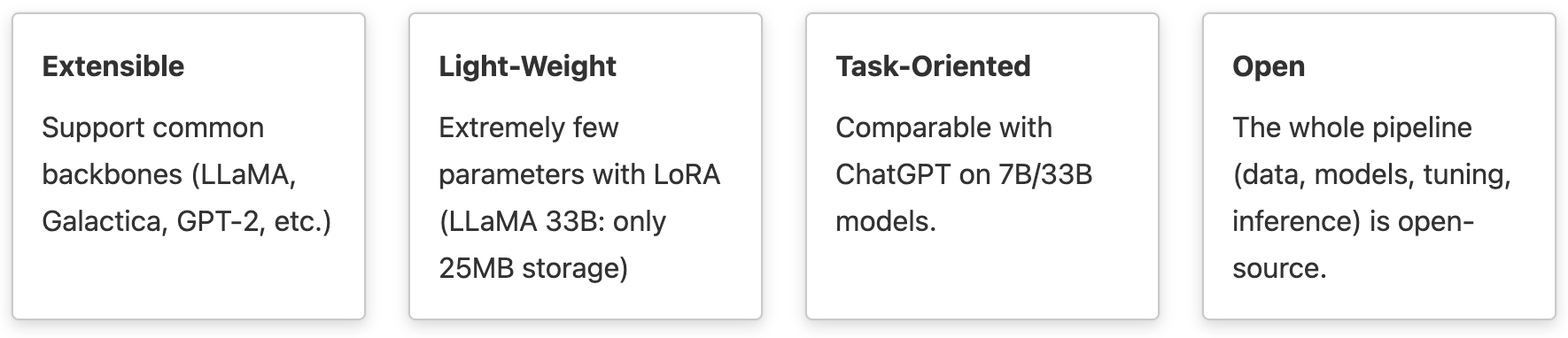

An extensible, convenient, and efficient toolbox for finetuning large machine learning models, designed to be user-friendly, speedy and reliable, and accessible to the entire community.

| PubMedQA (ID) | MedQA-USMLE (OOD) | MedMCQA (ID) | Average | |

|---|---|---|---|---|

| Human (pass) | 60.0 | 50.0 | ||

| Human (expert) | 78.0 | 87.0 | 90.0 | 85.0 |

| InstructGPT 175B | 73.2 | 46.0 | 44.0 | 54.4 |

| ChatGPT | 63.9 | 57.0 | 44.7 | 55.2 |

| LLaMA 7B | 5.2 | 27.1 | 24.3 | 18.9 |

| LLaMA 33B | 1.8 | 43.4 | 30.3 | 25.2 |

| Task-tuned LLaMA 7B (Full) | 75.1 | 44.5 | 49.9 | 56.5 |

| Task-tuned LLaMA 33B (LoRA) | 74.0 | 51.3 | 50.2 | 58.5 |

The LLaMA 33B (LoRA) performance is achieved with only ~16h finetuning on the training split of PubMedQA and MedMCQA with a single 8 * A100 server. For more performance, including instruction tuning results, please refer to our Documentation.

| Pipelines | Status |

|---|---|

| Task Tuning | ✅ Supported |

| Instruction Tuning | ✅ Supported |

| Parameter-Efficient Tuning | ✅ Supported |

| Large Model Inference | ✅ Supported |

| Reinforced Tuning | 🚧 Developing |

Seamlessly supported the models in 🤗 huggingface.

| Models | Status | Models | Status | |

|---|---|---|---|---|

| GPT2-large | ✅ Tested | Galactica-6.7B | 🚧 Untested | |

| GPT2-xl | ✅ Tested | Galactica-30B | 🚧 Untested | |

| GPT-Neo-1.3B | 🚧 Untested | LLaMA-7B | ✅ Tested ⭐ | |

| GPT-Neo-2.7B | 🚧 Untested | LLaMA-13B | ✅ Tested ⭐ | |

| GPT-Neox-20B | 🚧 Untested | LLaMA-33B | ✅ Tested ⭐ | |

| Galactica-1.3B | ✅ Tested | LLaMA-65B | 🚧 Untested |

git clone https://github.com/OptimalScale/LMFlow.git

cd LMFlow

conda create -n lmflow python=3.9 -y

conda activate lmflow

conda install mpi4py

pip install -e .You can easily download the example training dataset and test dataset by running

cd data

bash download.sh all

cd -If you cannot access Google Drive, you can download the data by BaiduNetDisk.

You can also use your own dataset by simply convert to the following format:

{

"type": "text2text",

"instances": [

{

"input": "Question: The Transformer architecture [START_REF]",

"output": "N/A"

},

...

]

}{

"type": "text_only",

"instances": [

{

"text": "Defintion: In this task, we ask you to write an answer to a question that involves events that may be stationary (not changing over time) or transient (changing over time). For example, the sentence \"he was born in the U.S.\" contains a stationary event since it will last forever; however, \"he is hungry\" contains a transient event since it will remain true for a short period of time. Note that a lot of the questions could have more than one correct answer. We only need a single most-likely answer. Please try to keep your \"answer\" as simple as possible. Concise and simple \"answer\" is preferred over those complex and verbose ones. \n Input: Question: Sentence: It's hail crackled across the comm, and Tara spun to retake her seat at the helm. \nQuestion: Will the hail storm ever end? \n Output: NA \n\n"

},

...

]

}You can run scripts/run_finetune.sh to finetune a GPT-2 base model

./scripts/run_finetune.shIf you would like to provide arguments for deepspeed to reflect your machine settings, you may pass the corresponding deepspeed arguments to the script. For example,

./scripts/run_finetune.sh "--num_gpus=8 --master_port 10001"To enable LoRA finetuning, you may refer to

./scripts/run_finetune_with_lora.shwhich can be run in similar manner.

For detailed configurations, one may modify these scripts directly. These

scripts actually just call python script examples/finetune.py, which can

be run in following manner,

deepspeed ${deepspeed_args} \

examples/finetune.py \

--deepspeed configs/ds_config_zero3.json \

--bf16 \

--run_name finetune_with_lora \

--model_name_or_path facebook/galactica-1.3b \

--num_train_epochs 0.01 \

--learning_rate 2e-5 \

--dataset_path ${dataset_path} \

--per_device_train_batch_size 1 \

--per_device_eval_batch_size 1 \

--validation_split_percentage 0 \

--logging_steps 20 \

--block_size 512 \

--do_train \

--output_dir output_models/finetune \

--overwrite_output_dir \

--ddp_timeout 72000 \

--save_steps 5000 \

--dataloader_num_workers 1Here we set number of epochs --num_train_epochs to 0.01 so that the

finetuning process can be finished quickly. If you wish to obtain a model with

better performance, feel free to adjust those hyperparameters. You may run

python examples/finetune.py -hto view all possible finetuning arguments. The finetuned model checkpoint will

be saved in the argument specified by --output_dir, which is

output_models/finetune in the above example.

One can directly run inference with an existing huggingface model, e.g. to run GPT2 large, one may execute

./scripts/run_inference.shor run the corresponding python script

CUDA_VISIBLE_DEVICES=0 \

deepspeed examples/inference.py \

--answer_type medmcqa \

--model_name_or_path gpt2-large \

--test_file data/MedQA-USMLE/validation/valid_1273.json \

--deepspeed examples/ds_config.json \To load the finetuned model, specify --model_name_or_path with the saved

model checkpoint directory path.

For LoRA finetuned models, one may refer to

./scripts/run_inference_with_lora.shThose scripts invoke the examples examples/*.py built based on our APIs. For

more API-related examples, one may refer to the methods in the unittest

tests.

-

First, you need to get the access of LLaMA model from facebookresearch/llama. Download the official checkpoints and save them into

${llama-path}. -

Second, convert the official checkpoints

${llama-path}to HuggingFace supported checkpoints${llama-hf-path}by runningpython ./scripts/convert_llama_weights_to_hf.py --input_dir ${llama-path} --model_size 7B --output_dir ${llama-hf-path}/llama-7b-hf -

Then you are good to go by setting the checkpoint path to

${llama-hf-path}/llama-7b-hf. Enjoy it! -

(optional) Now you have the original llama-7b-hf pretrained model. With

cd output_models && ./download.sh all && cd -You can obtain the model difference finetuned by ours. By a way similar to ./scripts/run_inference_with_lora.sh,

CUDA_VISIBLE_DEVICES=0 \

deepspeed examples/inference.py \

--answer_type text \

--model_name_or_path ${llama-hf-path}/llama-7b-hf \

--lora_model_path output_models/${llama-model-diff-path} \

--test_file data/alpaca/test/test_252.json \

--deepspeed examples/ds_config.jsonYou can now inference with the finetuned llama model.

You can config the deepspeed under configs. Details can be referred at DeepSpeed Configuration

You can run following script to download our medical model checkpoints :

cd output_models

bash download.sh medical_ckpt

cd -You can also directly download our model via google drive link : medical_ckpt.tar.gz

Similarly, you can run following script to download our instruction model checkpoints :

cd output_models

bash download.sh instruction_ckpt

cd -You can also directly download our model via google drive link : instruction_ckpt.tar.gz

After downloading the model checkpoints. You can replace the --lora_model_path with output_models/instruction_ckpt/llama7b-lora (example for llama-7b for instruction) and replace --model_name_or_path with your converted llama model inside LMFlow/scripts/run_inference_with_lora.sh and run this shell script to reproduce the result.

Then you can check the model performance at our Doc.

Please refer to our Documentation for more API reference and experimental results.

@misc{lmflow,

author = {Shizhe Diao and Rui Pan and Hanze Dong and KaShun Shum and Jipeng Zhang and Wei Xiong and Tong Zhang},

title = {LMFlow: An Extensible Toolkit for Finetuning and Inference of Large Foundation Models},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://optimalscale.github.io/LMFlow/}},

}

This package aims to provide a streamlined and user-friendly pipeline for large model tuning. Its functionalities serve as a reference and are intended for use by the user. However, it is important to note that the responsibility for the preparation of the data and pretrained models lies solely with the user. This package does not guarantee the accuracy, completeness, applicability, or legality of the components from the user's preparation. Users must be aware of and assume all risks and liabilities associated with the preparation of the models and data, and obtain legal, commercial, and technical advice before utilizing this package. The pipeline shall not be held responsible for any direct, indirect, special, incidental, or consequential damages resulting from the user's improper preparation of the data and pretrained models.

It is also crucial to highlight that the results generated by the model are based on probabilistic models and not directly related to this pipeline. The accuracy, reliability, applicability, and legality of the results are not guaranteed by this pipeline. Therefore, users must also be aware of the risks and liabilities associated with the results and seek legal, commercial, and technical advice before relying on the model-generated outcomes. This pipeline shall not be accountable for any direct, indirect, special, incidental, or consequential damages resulting from the user's reliance on the model-generated results.

If you need any help, please submit a Github issue.