BrabeNetz is a supervised neural network written in C++, aiming to be as fast as possible. It multithreads effectively on the CPU where needed, is heavily performance-optimized, and is well documented inline. System Technology (BRAH) TGM 2017/18

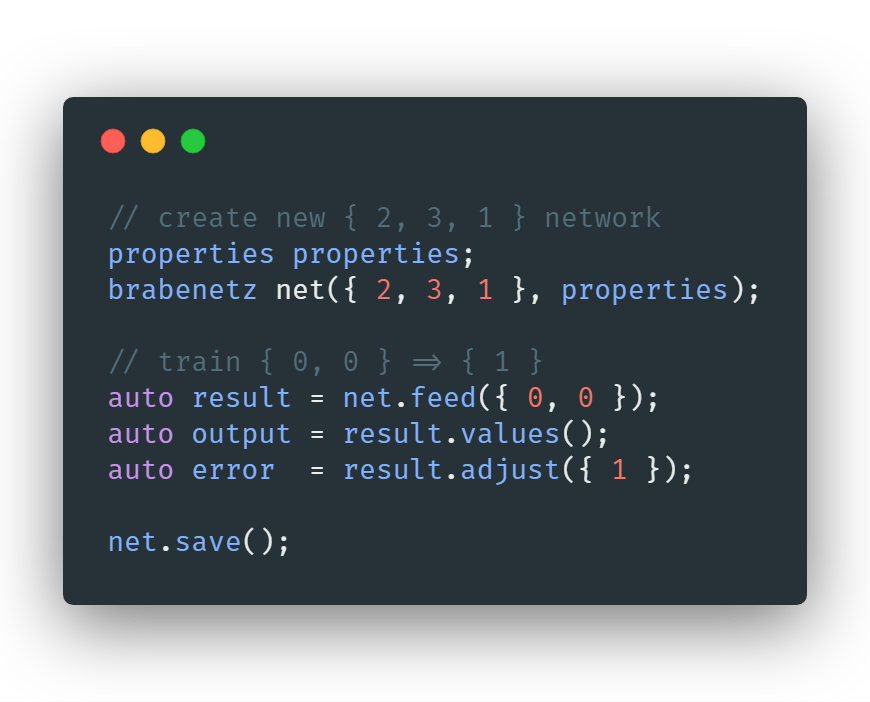

`PM> Install-Package BrabeNetz`

I've written two examples of using BrabeNetz in the Trainer class: training XOR ({0,0}=0, {0,1}=1, ...) and recognizing handwritten digits.

In my XOR example, I'm using a {2,3,1} topology (2 input, 3 hidden and 1 output neurons), but BrabeNetz scales up to whatever your hardware can handle. The digit recognizer uses a {784,500,100,10} network trained on handwritten digits from the MNIST database.
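To illustrate what training the {2,3,1} XOR topology involves, here is a minimal standalone sketch of a 2-3-1 network with sigmoid activations and per-neuron biases, trained by plain backpropagation. It does not use BrabeNetz: the names `xor_net` and `train_xor`, the [-1, 1] initial weight range, the learning rate and the squared-error measure are assumptions chosen for illustration, not the library's internals.

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Hypothetical 2-3-1 sigmoid network, for illustration only (not BrabeNetz code).
struct xor_net {
    double w_ih[2][3];  // input -> hidden weights
    double b_h[3];      // hidden biases
    double w_ho[3];     // hidden -> output weights
    double b_o;         // output bias
    double hidden[3];   // last hidden activations, kept for backpropagation

    explicit xor_net(unsigned seed) {
        std::mt19937 rng(seed);
        // Scale the raw engine output to [-1, 1] so runs are reproducible
        // regardless of the standard library's distribution implementation.
        auto rnd = [&rng] {
            return 2.0 * static_cast<double>(rng()) / static_cast<double>(std::mt19937::max()) - 1.0;
        };
        for (auto& row : w_ih) for (double& w : row) w = rnd();
        for (double& b : b_h) b = rnd();
        for (double& w : w_ho) w = rnd();
        b_o = rnd();
    }

    static double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

    // Forward propagation: returns the activation of the single output neuron.
    double feed(const double in[2]) {
        double sum = b_o;
        for (int j = 0; j < 3; ++j) {
            hidden[j] = sigmoid(in[0] * w_ih[0][j] + in[1] * w_ih[1][j] + b_h[j]);
            sum += hidden[j] * w_ho[j];
        }
        return sigmoid(sum);
    }

    // One backpropagation step for a single sample; returns 0.5 * (target - output)^2
    // measured before the update.
    double adjust(const double in[2], double target, double learn_rate) {
        double out = feed(in);
        double err = target - out;
        double delta_o = err * out * (1.0 - out);  // sigmoid'(z) = y * (1 - y)
        for (int j = 0; j < 3; ++j) {
            // Compute the hidden delta before w_ho[j] is changed.
            double delta_h = delta_o * w_ho[j] * hidden[j] * (1.0 - hidden[j]);
            w_ho[j] += learn_rate * delta_o * hidden[j];
            w_ih[0][j] += learn_rate * delta_h * in[0];
            w_ih[1][j] += learn_rate * delta_h * in[1];
            b_h[j] += learn_rate * delta_h;
        }
        b_o += learn_rate * delta_o;
        return 0.5 * err * err;
    }
};

// XOR truth table: inputs and expected outputs.
const double kInputs[4][2] = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
const double kTargets[4] = {0, 1, 1, 0};

// Runs `epochs` passes over the four samples; returns the summed error of the last pass.
double train_xor(xor_net& net, int epochs, double learn_rate) {
    double total = 0.0;
    for (int e = 0; e < epochs; ++e) {
        total = 0.0;
        for (int s = 0; s < 4; ++s) total += net.adjust(kInputs[s], kTargets[s], learn_rate);
    }
    return total;
}
```

For example, `xor_net net(42u); train_xor(net, 20000, 0.5);` should drive the summed error well below its starting value. With only three hidden neurons, an unlucky initialization can occasionally settle in a local minimum instead of solving XOR exactly.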

Be sure to read the network description, and check out my digit recognizer written in Qt (using a BrabeNetz network trained on the MNIST dataset).

- Build: Release x64 | Windows 10 64-bit
- CPU: Intel Core i7-6700K @ 4.0 GHz (4 cores / 8 threads)
- RAM: HyperX Fury DDR4 32 GB CL14 2400 MHz
- SSD: Samsung 850 EVO 540 MB/s
- Commit: 53328c3

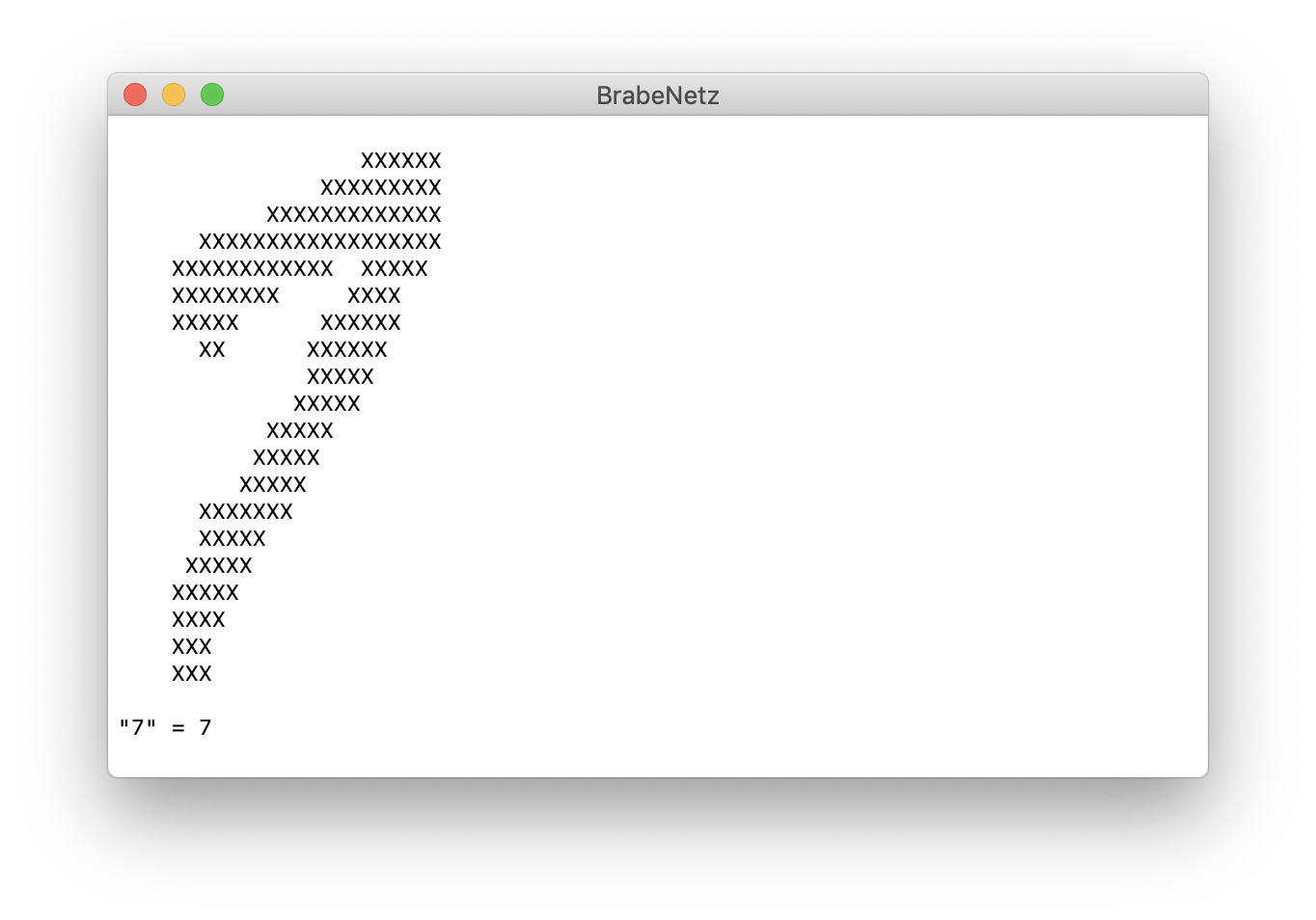

Actual prediction of the digit recognizer network on macOS Mojave

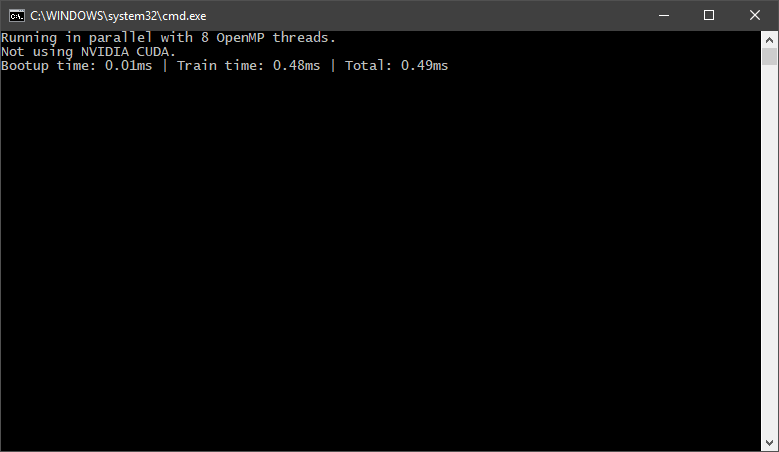

Training XOR 1000 times takes just 0.49 ms

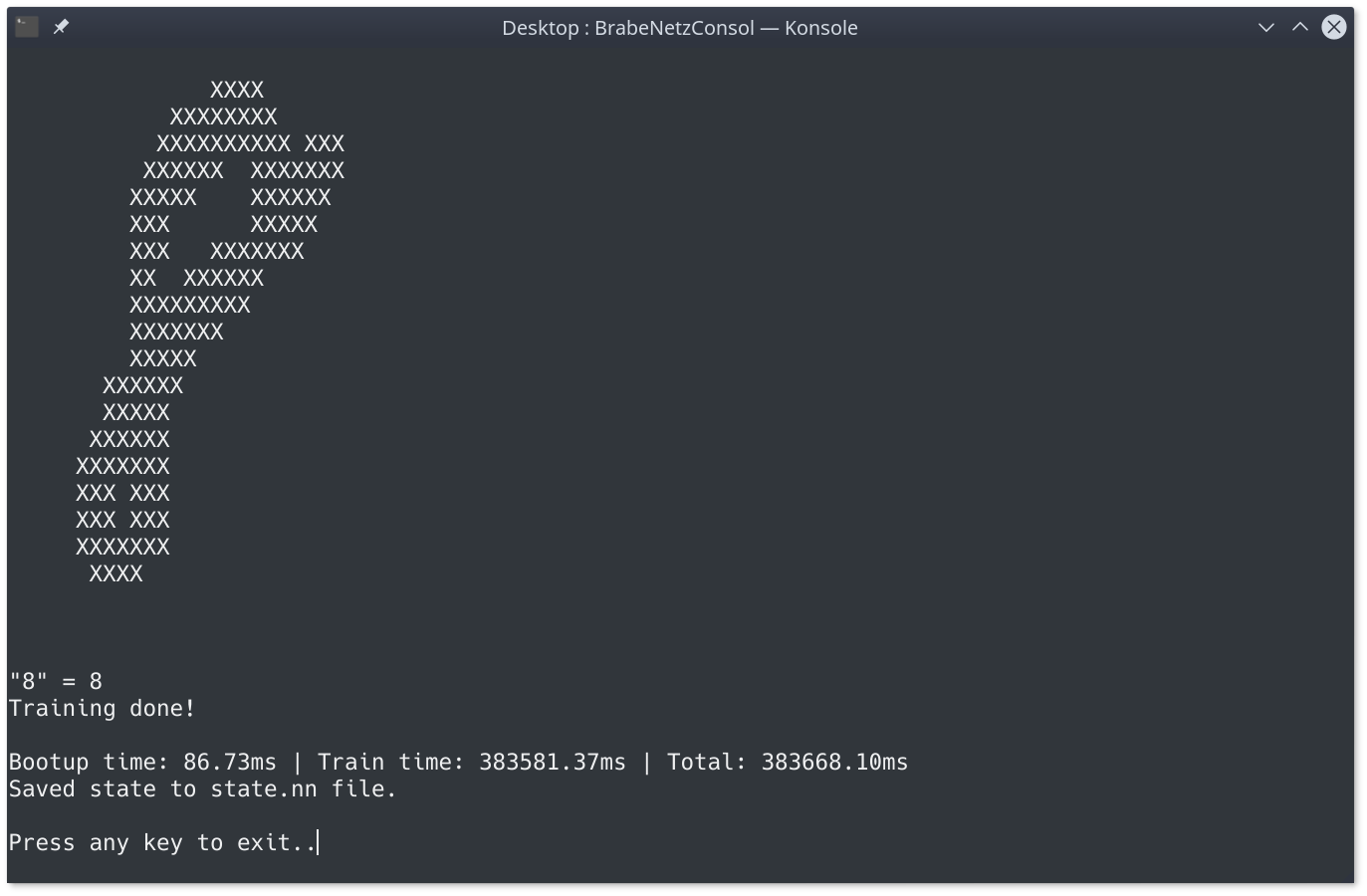

Actual prediction of the digit recognizer network on Debian Linux

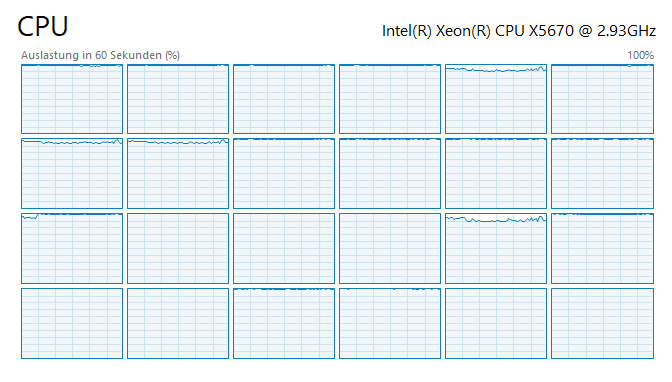

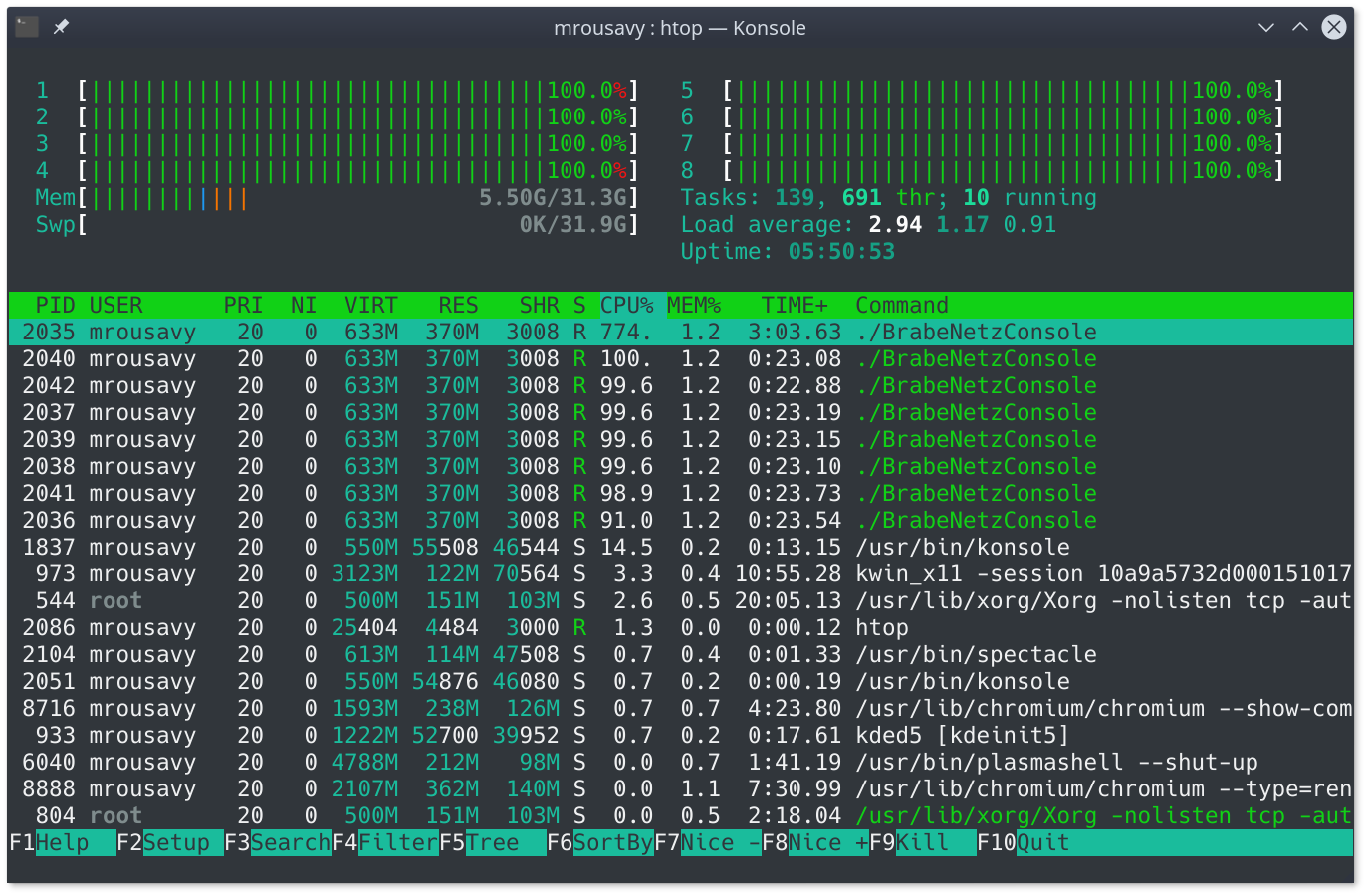

Effectively using all available cores (24/24, 100% workload)

Task resource viewer (htop) on Linux (Debian 9, Linux 4.9.62, KDE Plasma)

- Optimized algorithms using raw arrays instead of `std::vector`, and more
- Smart multithreading with OpenMP wherever the thread-spawn overhead is worth the performance gain
- Scalability (neuron count, layer count), limited only by hardware
- Easy to use (inputs, outputs)
- Randomly generated initial weights and biases
- Easy binary save/load with `network::save(string)`/`network::load(string)` (`state.nn` file)
- Sigmoid squashing function
- Biases for each neuron
- `network_topology` helper objects for loading/saving state and inspecting the network
- `brabenetz` wrapper class for an easy-to-use interface
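The raw-array, OpenMP and sigmoid/bias points above come together in a single layer's forward pass. The following is a hypothetical sketch, not BrabeNetz's code: `forward_layer`, the row-major weight layout and the 10000-connection threshold are assumptions. It shows how an OpenMP `if` clause can keep small layers serial, so the thread-spawn overhead is only paid when a layer is large enough to benefit.

```cpp
#include <cassert>
#include <cmath>

// Sigmoid squashing function: maps any real input into (0, 1).
inline double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Forward-propagates one fully connected layer using raw arrays.
//   in:      n_in activations of the previous layer
//   weights: n_in * n_out weights, row-major (weights[i * n_out + j] connects input i to output j)
//   biases:  n_out biases, one per neuron
//   out:     receives the n_out resulting activations
// Output neurons are independent, so the outer loop can be split across threads.
// The pragma only takes effect when compiled with OpenMP (e.g. -fopenmp);
// otherwise it is ignored and the loop runs serially.
void forward_layer(const double* in, int n_in, const double* weights,
                   const double* biases, double* out, int n_out) {
    // Hypothetical threshold: only go parallel for layers with many connections.
#pragma omp parallel for if (static_cast<long long>(n_in) * n_out > 10000)
    for (int j = 0; j < n_out; ++j) {
        double sum = biases[j];
        for (int i = 0; i < n_in; ++i) sum += in[i] * weights[i * n_out + j];
        out[j] = sigmoid(sum);
    }
}
```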

Build & link library

Choose your interface

- `brabenetz.h`: [Recommended] A wrapper for the raw `network.h` interface, but with error handling and modern C++ interface styling such as `std::vector`s, `std::exception`s, etc.
- `network.h`: The raw `network` with C-style arrays and no bounds/error checking. Only use this if performance is critical.
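The trade-off between the two interfaces can be sketched with a toy example. `raw_sum` and `checked_layer` are hypothetical names, not part of BrabeNetz; the point is only the pattern of a thin wrapper that validates `std::vector` input and throws a `std::exception` subtype before delegating to an unchecked C-style routine.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical raw, C-style routine: trusts the caller completely.
// Passing a count larger than the array is undefined behavior.
double raw_sum(const double* input, int count) {
    double sum = 0.0;
    for (int i = 0; i < count; ++i) sum += input[i];
    return sum;
}

// Hypothetical checked wrapper: accepts a std::vector, validates its size
// and reports misuse with an exception instead of reading out of bounds.
class checked_layer {
public:
    explicit checked_layer(std::size_t inputs) : inputs_(inputs) {}

    double feed(const std::vector<double>& input) const {
        if (input.size() != inputs_)
            throw std::invalid_argument("expected " + std::to_string(inputs_) +
                                        " inputs, got " + std::to_string(input.size()));
        return raw_sum(input.data(), static_cast<int>(input.size()));
    }

private:
    std::size_t inputs_;
};
```

The check costs one comparison per call, which is negligible for most uses; the raw path only pays off in tight loops where the caller already guarantees correct sizes.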

Constructors

- `(initializer_list<int>, properties)`: Construct a new neural network with the given layer sizes (e.g. `{ 2, 3, 4, 1 }`) and randomize all initial weights and biases
- `(network_topology&, properties)`: Construct a new neural network with the given network topology and import its existing weights and biases
- `(string, properties)`: Construct a new neural network and load its state from the file specified in `properties.state_file`
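As a rough illustration of what the `(initializer_list<int>, properties)` constructor has to set up, the sketch below allocates one weight per connection between adjacent layers and one bias per non-input neuron, then randomizes them. `tiny_network`, its `seed` parameter and the [-1, 1] range are assumptions for illustration, and the `properties` argument is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <initializer_list>
#include <random>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical stand-in for building a network from a size list such as { 2, 3, 4, 1 }.
class tiny_network {
public:
    tiny_network(std::initializer_list<int> layer_sizes, unsigned seed = 0)
        : sizes_(layer_sizes) {
        if (sizes_.size() < 2) throw std::invalid_argument("need at least 2 layers");
        for (int s : sizes_)
            if (s <= 0) throw std::invalid_argument("layer sizes must be positive");

        std::mt19937 rng(seed);
        std::uniform_real_distribution<double> dist(-1.0, 1.0);
        for (std::size_t l = 0; l + 1 < sizes_.size(); ++l) {
            // One weight per connection from layer l to layer l + 1 ...
            std::vector<double> w(static_cast<std::size_t>(sizes_[l]) * sizes_[l + 1]);
            // ... and one bias per neuron in layer l + 1.
            std::vector<double> b(static_cast<std::size_t>(sizes_[l + 1]));
            for (double& x : w) x = dist(rng);
            for (double& x : b) x = dist(rng);
            weights_.push_back(std::move(w));
            biases_.push_back(std::move(b));
        }
    }

    std::size_t weight_count() const {
        std::size_t n = 0;
        for (const auto& w : weights_) n += w.size();
        return n;
    }

    std::size_t bias_count() const {
        std::size_t n = 0;
        for (const auto& b : biases_) n += b.size();
        return n;
    }

private:
    std::vector<int> sizes_;
    std::vector<std::vector<double>> weights_;  // weights_[l]: layer l -> layer l + 1
    std::vector<std::vector<double>> biases_;   // biases_[l]: neurons of layer l + 1
};
```

For `{ 2, 3, 4, 1 }` this yields 2×3 + 3×4 + 4×1 = 22 weights and 3 + 4 + 1 = 8 biases.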

Functions

- `network_result brabenetz::feed(std::vector<double>& input_values)`: Feed input values to the network and forward-propagate through all neurons to estimate an output. Use the returned `network_result` structure to access the result of the forward propagation, e.g. `.values` for the output values.
- `double network_result::adjust(std::vector<double>& expected_output)`: Backpropagate through the whole network to correct the neurons responsible for the error, and return the total network error.
- `void brabenetz::save(string path)`: Save the network's state to disk by serializing its weights.
- `void brabenetz::set_learnrate(double value)`: Set the network's learning rate. It is generally recommended to use one divided by the training count, so the learning rate decreases the more often you train.
- `network_topology& brabenetz::build_topology()`: Build and set the network topology object from the network's current state (useful for visualizing the network or similar).
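`save` is described as serializing the weights to disk. A minimal round-trip of that idea might look like the sketch below. It assumes a made-up layout of a 64-bit count followed by raw `double`s; this is not the actual `state.nn` format, and `save_weights`/`load_weights` are hypothetical helpers.

```cpp
#include <cassert>
#include <cstdint>
#include <fstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical layout: [uint64 count][count raw doubles].
// Raw bytes are fast to write but tie the file to the machine's
// endianness and floating-point representation.
void save_weights(const std::string& path, const std::vector<double>& weights) {
    std::ofstream out(path, std::ios::binary);
    if (!out) throw std::runtime_error("cannot open " + path + " for writing");
    std::uint64_t count = weights.size();
    out.write(reinterpret_cast<const char*>(&count), sizeof count);
    out.write(reinterpret_cast<const char*>(weights.data()),
              static_cast<std::streamsize>(count * sizeof(double)));
    if (!out) throw std::runtime_error("failed to write " + path);
}

std::vector<double> load_weights(const std::string& path) {
    std::ifstream in(path, std::ios::binary);
    if (!in) throw std::runtime_error("cannot open " + path + " for reading");
    std::uint64_t count = 0;
    in.read(reinterpret_cast<char*>(&count), sizeof count);
    if (!in) throw std::runtime_error("failed to read header of " + path);
    std::vector<double> weights(static_cast<std::size_t>(count));
    in.read(reinterpret_cast<char*>(weights.data()),
            static_cast<std::streamsize>(count * sizeof(double)));
    if (!in) throw std::runtime_error("failed to read weights from " + path);
    return weights;
}
```

Calling `save_weights("weights.bin", w)` and then `load_weights("weights.bin")` should return a vector identical to `w`.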

Usage examples can be found here, and here

Thanks for using BrabeNetz!