schemacrawler-additional-command-lints-as-csv

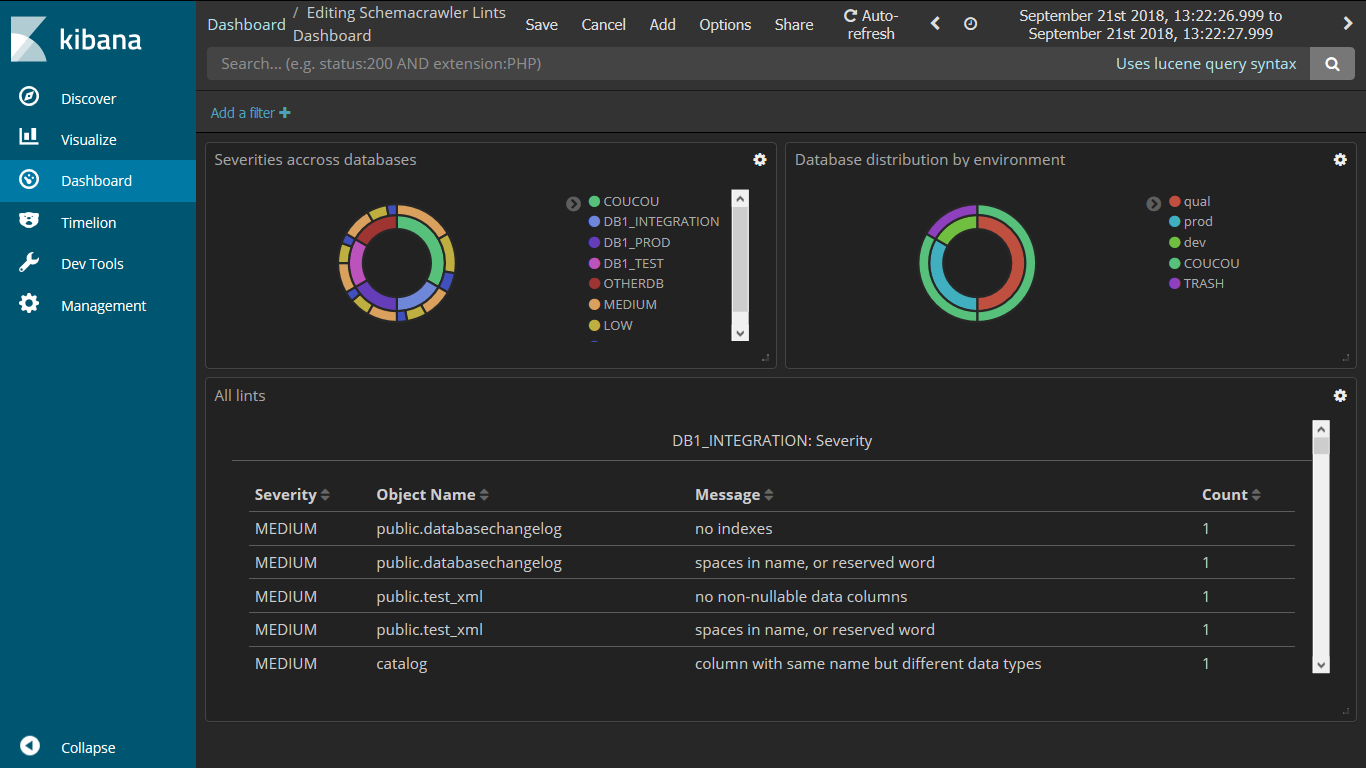

An additional command for SchemaCrawler that dumps lints as CSV files, with some additional fields.

See the first LinkedIn article for more details, or check the Pinterest album for more samples.

For people interested in GDPR, take a look at this other LinkedIn article.

Usage

This additional SchemaCrawler command produces CSV files that can be used for advanced reporting on:

- database lints

- table sizes (number of rows and columns), so you can report on them and monitor them over time

Because CSV is a very common data format, these files can feed intelligence and reporting in numerous technologies. I'll focus on Elasticsearch reporting, but it would also be very efficient to produce analytics with any other reporting tool, like Jupyter Notebook or R.

Install steps

Build the jar :

mvn clean package

Then copy the jar to $SCHEMACRAWLER_HOME/lib, and the installation is complete.

To get help, simply run :

schemacrawler -help -c=csv

This jar adds the following command, with the following options:

-c=csv -dbid=666 -dbenv=hell

- -c=csv: tells SchemaCrawler to dump the lints as CSV

- -dbid: optional parameter if you want to attach an identifier to a given database

- -dbenv: optional parameter if you want to tag a database with an environment (typically prod, dev, test, ...)
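
If you launch SchemaCrawler from a script, the options above can be assembled programmatically. The following is only a minimal sketch: the helper name csv_command_args is hypothetical, and it builds only the arguments documented here. The usual SchemaCrawler connection options are not covered and would have to be added by you.

```python
from typing import List, Optional


def csv_command_args(dbid: Optional[str] = None,
                     dbenv: Optional[str] = None) -> List[str]:
    """Build the argument list for the csv command (hypothetical helper)."""
    # -c=csv selects the command; it is always required.
    args = ["-c=csv"]
    # -dbid and -dbenv are optional, so they are added only when provided.
    if dbid is not None:
        args.append(f"-dbid={dbid}")
    if dbenv is not None:
        args.append(f"-dbenv={dbenv}")
    return args


print(" ".join(csv_command_args(dbid="666", dbenv="hell")))
# prints: -c=csv -dbid=666 -dbenv=hell
```

The returned list can be appended to the rest of your own command line, for example before passing it to subprocess.run.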

For each run, you then get the following CSV files in your working directory:

- schemacrawler-lints-<UUID>.csv: this file contains the lint outputs

- schemacrawler-tables-<UUID>.csv: this file contains data reporting the number of rows and columns of each table, with schema, tableName, ...

- schemacrawler-columns-<UUID>.csv: this file contains data about tables and columns
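
Because the UUID changes on every run, a script that consumes these files has to locate them by pattern. Here is a minimal sketch of one way to pick up the most recent file of each kind. It assumes the files are comma-separated, UTF-8 encoded, and start with a header row; none of this is stated above, so check it against your own output. The function name load_latest_reports is hypothetical.

```python
import csv
import glob
import os
from typing import Dict, List

# File name prefixes taken from the list above; the UUID part differs per run.
PREFIXES = ("lints", "tables", "columns")


def load_latest_reports(directory: str = ".") -> Dict[str, List[dict]]:
    """Load the most recently modified CSV of each kind found in directory."""
    reports: Dict[str, List[dict]] = {}
    for prefix in PREFIXES:
        pattern = os.path.join(directory, f"schemacrawler-{prefix}-*.csv")
        matches = glob.glob(pattern)
        if not matches:
            continue  # this kind of file was not produced; skip it
        latest = max(matches, key=os.path.getmtime)
        # Assumption: the first row is a header, so DictReader can use it.
        with open(latest, newline="", encoding="utf-8") as handle:
            reports[prefix] = list(csv.DictReader(handle))
    return reports


if __name__ == "__main__":
    for kind, rows in load_latest_reports().items():
        columns = list(rows[0].keys()) if rows else []
        print(f"{kind}: {len(rows)} rows, columns: {columns}")
```

Run from the directory where SchemaCrawler wrote its output, this prints the row count and column names of each file, which is a quick way to discover the available fields before building a report.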

To load these files into Elasticsearch, you need dedicated Logstash configuration files. Three sample configuration files are provided:

- for lints, check logstash-lints.conf

- for table data, check logstash-tables.conf

- for column data, check logstash-columns.conf

For each, you have to customize index names and input.file.path according to your needs.

Contribute

You can contribute code, but also your own dashboards. To do so, just open a PR that:

- adds an image to the img directory

- adds the screenshot to the dedicated SCREENSHOTS.md file, or a link to a video: any cool demo is welcome

You can also ask to contribute to the dedicated Pinterest album (file an issue on GitHub for that).

Contribute ideas

If you have ideas for dashboards and are convinced they are interesting, but don't know how to create them, please file an issue on the project explaining what you'd like to produce. A hand-made drawing can also be a very good start!

Details and samples

See the LinkedIn article for more details, or visit the dedicated Pinterest album.