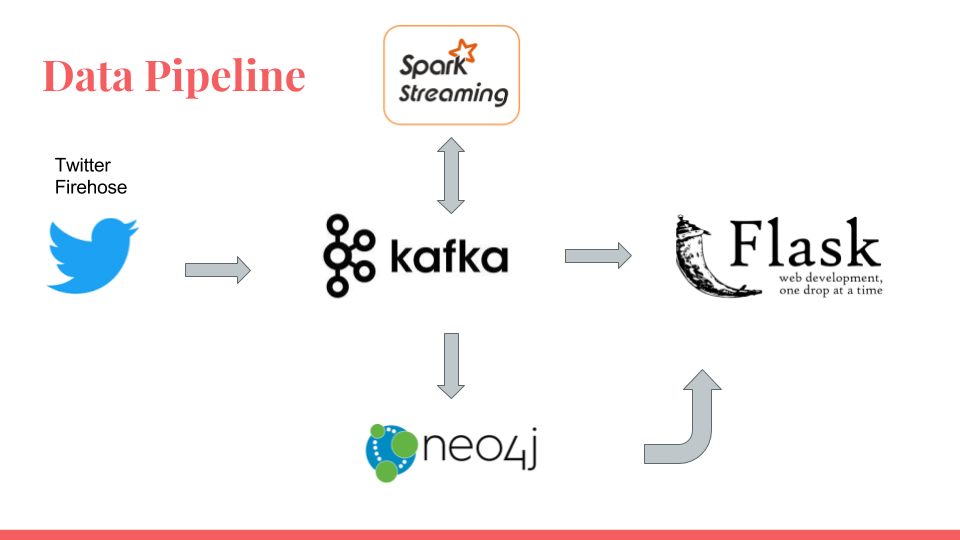

TopicMatch is a distributed data pipeline for topic clustering on streaming text data, built as a fellowship project during the Summer 2017 Insight Data Engineering program. Every cluster runs open source software and is hosted on AWS.

- Pegasus (https://github.com/InsightDataScience/pegasus)

- Apache Kafka (kafka-python - http://kafka-python.readthedocs.io/en/master/install.html)

- Spark Streaming (PySpark - https://spark.apache.org/docs/latest/streaming-programming-guide.html)

- Neo4j (https://neo4j.com/download/)

- Flask (http://flask.pocoo.org/)

- The Twitter data producers read from a static bank of ~3 million tweet JSON objects saved in S3.

- The producers funnel data into Kafka at roughly 10,000 JSON objects per second.

- The Kafka cluster has 3 nodes, which distribute ingestion and control throughput to keep the data production volume constant.

- Spark Streaming, running on 1 master and 3 workers, consumes from the Kafka cluster and pre-processes and aggregates the tweets.

- Spark Streaming output is sent back to Kafka, which acts as the central broker. From there, batched Neo4j Cypher queries consume it for graph database storage, while overall tweet counts and trending hashtags go straight from Kafka to Flask for dashboard visualization.

- A Neo4j search looks up a node and returns it together with its top 5 edges.
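
The trending-hashtag aggregation mentioned in the steps above can be illustrated with a minimal sketch in plain Python. This is not the project's Spark Streaming job: it only shows the kind of per-batch counting such a job might perform. It assumes each message is a tweet JSON string in the standard Twitter layout, where hashtags sit under `entities.hashtags[].text`. The function names `extract_hashtags` and `top_hashtags` are hypothetical and chosen for illustration.

```python
import json
from collections import Counter


def extract_hashtags(raw_tweet):
    """Return the lowercased hashtags of one tweet JSON string.

    Assumes the standard Twitter layout (entities -> hashtags -> text).
    Unparseable or unexpected input yields an empty list.
    """
    try:
        tweet = json.loads(raw_tweet)
    except ValueError:
        return []
    if not isinstance(tweet, dict):
        return []
    entities = tweet.get("entities") or {}
    return [
        tag["text"].lower()
        for tag in entities.get("hashtags", [])
        if isinstance(tag, dict) and "text" in tag
    ]


def top_hashtags(raw_tweets, n=5):
    """Count hashtags across one batch of tweets and return the n most common."""
    counts = Counter()
    for raw in raw_tweets:
        counts.update(extract_hashtags(raw))
    return counts.most_common(n)


if __name__ == "__main__":
    # A hypothetical micro-batch of three messages, one of them malformed.
    batch = [
        json.dumps({"entities": {"hashtags": [{"text": "Kafka"}, {"text": "Spark"}]}}),
        json.dumps({"entities": {"hashtags": [{"text": "kafka"}]}}),
        "not valid json",
    ]
    print(top_hashtags(batch, n=2))
```

In a streaming setting the same counting would typically run once per micro-batch or window, with the resulting list published to a Kafka topic for the dashboard to read.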

Slide Deck: https://tinyurl.com/y9n92cy7