A javascript library and a React.js GUI that allows to create and test Genetic Algorithms experiments with focus on hyperparametric optimization for solving the most common problems present in the combinatorial optimization literature.

This project was developed under the context of the final work for the posgraduate course "Advanced Techniques for Evolutionary Computation" by Dr. Ignacio Ponzoni at DCIC (UNS).

I hope the reader find this project helpful. I have not much knowledge in software architecture or design patterns, but my intention was to make readable code and a useful tool for those who are teaching or learning about Genetic Algorithms or Numerical Optimization Techniques.

This library was written in javascript. This allows you to share the code through any medium and because its easy for someone to get access to an internet browser, almost every user will be able to run this code, on almost any smart device. Consider this as the only and most important advantage of this software, against other tools as Python or Matlab.

Lets solve the Subset Sum Problem using this library. Here I'm using Node.js, but with a few adjustments, you can make it work on the browser too.

import Ga from 'optimization/ga/index.mjs';

import SubsetSum from 'optimization/fitness/subsetsum.mjs';

// We're using the following numeric set of 20 elements

const set = [-96, -91, -87, -84, -82, -75, -71, -27, 12, 30, 46, 53, 73, 79, 80, 88, 90, 94, 94, 95];

const target = 0;

// We create the already implemented fitness model

const f = new SubsetSum(set, target);

// And the GA optimizer attached to this fitness model, configuring the mutation probability as 5%.

const ga = new Ga(f, {mut_prob: 0.05});

// Then, we run 100 generations

for(let gen = 0; gen < 100; gen++)

ga.evolve();

// Solution is in the first chromosome, as the population list is always sorted from best to worst

const solution = ga.population[0];

// Finally, we ṕrint results

process.stdout.write("Best subset: "+f.decode(solution.genotype)+"\n");

process.stdout.write("Objective value: "+solution.objective+"\n");And the output will be something like:

Best subset: -87,-82,-75,30,46,80,88

Objective value: S = 0, N = 7

Try the latest version here or use this application locally running the following commands (Node.js already installed is required):

$ git clone https://github.com/matiasmicheletto/dna-solver.git

$ cd dna-solver

$ npm install

$ npm run build

$ npm run startIf you need to use just the optimization module via scripting (without GUI), checkout the examples folder and run the scripts installing the optimization package only (and cli-progress for this example, but not necessary), using the following commands:

$ git clone https://github.com/matiasmicheletto/dna-solver.git

$ cd dna-solver

$ npm install cli-progress ./optimization

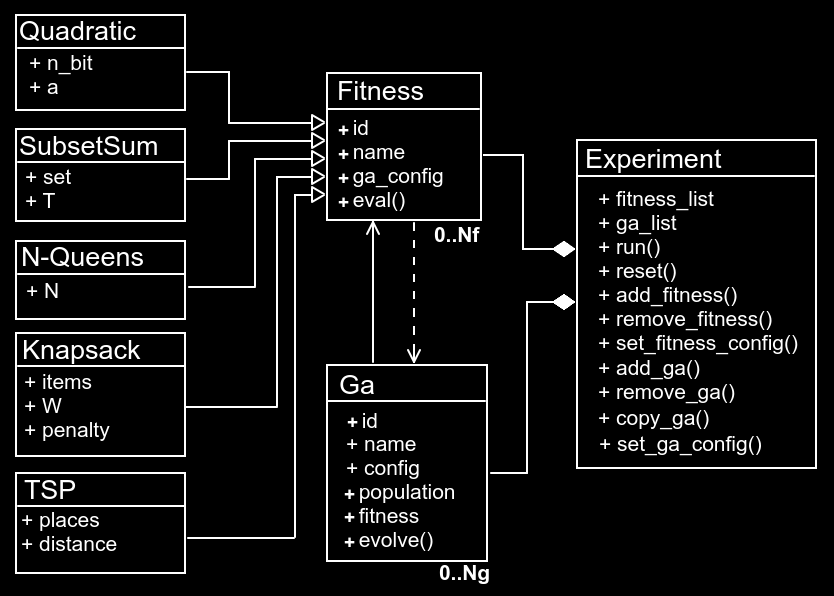

$ node examples/tsp/example_tsp_selection.mjsThis library provides a class to model any objective function with an interface to be optimized using Genetic Algorithms. Five class examples are provided to show how to extend this class in order to model common combinatorial optimization problems. The Ga class implements a Genetic Algorithm optimizer with many configuration options (see next section). Finally, the Experiment class allows to create and run different experiments to test the behaviour of GA optimizers when configuring different hyperparameters.

To create a new Fitness model, extend the prototype class, for example:

export default class MyNewFitness extends Fitness {

constructor(param1 = 1, param2 = 3) {

// First we need to call the constructor of the parent class,

// and pass the attributes or parameters:

super({

_param1: param1,

_param2: param2,

_name:"My new fitness model"

});

// Then we can use this._param1 or this._param2 as we need.

}

objective(x) {

// This example just implements a simple linear function:

return x * this._param1 + this._param2;

}

decode(g) {

// Suppose we're using 16 bit BCD to decimal conversion.

return parseInt(g.join("").slice(-16), 2);

}

objective_str(g) {

// This function shows the result of evaluating the objective function

// as a human-readable string.

return "F("+g+")="+this.objective(this.decode(g));

}

eval(g) {

// This is the fitness function. This function should return a numeric scalar

// value that represents the solution's quality.

return this.objective(this.decode(g));

}

rand_encoded() {

// As we're using binary strings, then the random solution generator will

// return a random binary array with 16 bit length length:

return new Array(16).fill(0).map(() => Math.round(Math.random()));

}

get ga_config() {

// Lets say you don't want the user to know how to properly configure

// the GA method to use your fitness model, so we can facilitate a

// default configuration:

return {

pop_size: 50

mut_prob: 0.01,

cross_prob: 0.1,

selection: selection.TOURNAMENT, // Remember to import "selection" from "ga"

mutation: mutation.BITFLIP, // Remember to import "mutation" from "ga"

tourn_k: 4 // As we're using tournament, we set the tournament size to 4

};

}

}And thats it, now we can make our first experiment to see how does this behave (spoiler: will behave pretty bad, as its just a linear function):

import Experiment from 'optimization/experiment/index.mjs';

import MyNewFitness from 'mynewfitness.mjs'

const experiment = new Experiment(); // Create the experiment manager

const f_id = experiment.add_fitness(MyNewFitness, [2, 8]); // Add our fitness with some parameters

experiment.add_ga(f_id); // Attach an optimizer to our fitness

// Run the experiment!

experiment.run({

rounds:100,

iters:25,

progressCallback:p => process.stdout.write("Progress = "+p+"% \n")

});

// Ptint results:

process.stdout.write(experiment.getPlainResults());The following table shows the configuration parameters and default values used by the "Ga" class module to implement GA optimization.

| Parameter | Type | Default value | Description |

|---|---|---|---|

pop_size |

Integer number greater than 4 | 20 |

Population size, number of chromosomes |

elitism |

Integer number between 0 and pop_size |

2 |

Number of elite individuals. Elite individuals are force-preserved through generations |

selection |

ROULETTE, RANK or TOURNAMENT |

ROULETTE |

Selection operator enumerator |

crossover |

SINGLE, DOUBLE, CYCLE or PMX |

SINGLE |

Crossover operator enumerator |

mutation |

BITFLIP, SWAP or RAND |

BITFLIP |

Mutation operator enumerator |

cross_prob |

Float number between 0 and 1 | 0.5 |

Crossover probability (probability that a pair of selected individuals to be crossovered) |

mut_prob |

Float number between 0 and 1 | 0.1 |

Mutation probability (probability of an gen to change). Usually 1/(bitstring length) |

rank_r |

Float number between 0 and 2/(pop_size*(pop_size-1)) |

0.002 |

Ranking parameter (In case of ranking based selection). High r increases selective pressure |

tourn_k |

Integer number between 2 and pop_size |

3 |

K parameter for tournament selection method. Usually between 2 and 5 |

best_fsw_factor |

Float number between 0 and 1 | 0.2 |

Window size for getting evolution slope value proportional to generation number |

param_control_enabled |

Boolean | false |

Enable or disable the automatic parameter control |

controlled_param |

CROSS_PROB, MUT_PROB, RANK_R or TOURN_K |

CROSS_PROB |

The controlled hyperparameter |

param_control_factor |

Number | 0.01 |

The incremental factor of the controller parameter |

controller_var |

GENERATION, POP_S2, EVOL_SLOPE or POP_AVG |

GENERATION |

The controller variable (static control by default) |

The last four parameters are used in automatic parameter control. There are two operation modes, static or adaptive. For static control, then GENERATION should be used as controller variable, then the controlled parameter will increment its value in factor (positive or negative) units on every generation until it reaches its maximum or minimum value. Otherwise, in the case of adaptive control, the controlled parameter will increase or decrease its value in factor*value units, where value is the numeric value of the controller variable, which can be EVOL_SLOPE (evolution slope), POP_S2 (population variance) or POP_AVG (population average fitness).

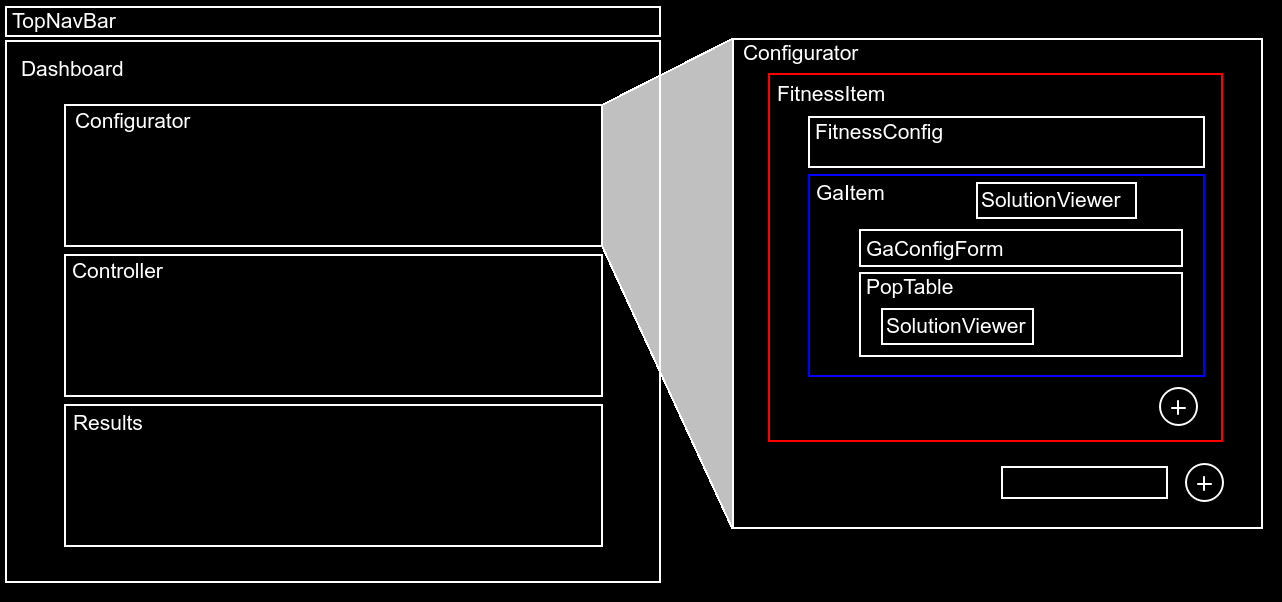

A React.js and Bootstrap GUI allows to build experiments graphically. There are two components that depend on the Fitness models, "FitnessConfig" and "SolutionViewer". If not appropiate components are provided to configurate the model, then the FitnessConfig section will be displayed as a blank or empty space, and the SolutionViewer will show the solution vectors as dash-separated-element strings. Some ReactJS knowledge is required to code and include the components for a new Fitness model, but the ones provided will be helpful to understand the idea.

Author: Matías Micheletto - matias.micheletto@uns.edu.ar

ICIC - CONICET - UNS

License: GPL-3.0

Module development (100%).

- Fitness function module (100%).

- Parabola.

- Subset sum problem.

- N-Queens problem.

- TSP Problem.

- Optimizer module (100%).

- Fitness model configuratinon.

- Population size and elitism configuration.

- Configuration of selection operators.

- Configuration of crossover operators.

- Configuration of mutation operators.

- Termination criteria (fixed to generations number).

- Parameter control (static and adaptive).

- Experiment manager module (100%).

- Fitness modules lists management.

- Optimizers list management.

- Optimizers duplication.

- Fitness and optimizers configuration.

- Experiment execution.

- Result summarization.

- Command line optimization example scripts (100%).

- Example 1: Simple TSP. Experiment configuration.

- Example 2: NQueens. Multiple fitness experiment.

- Example 3: Complex TSP. Parameter tunning.

- Export results as plain text file (100%).

- Generate NodeJS module (100%).

GUI development (100%)

- Graphical experiment configuration (100%).

- Add and remove fitness models.

- Add and remove optimizers.

- Graphical fitness model configuration (100%).

- Problem description.

- Parameter configuration.

- Graphical optimizer configuration (100%).

- Static parameters configuration.

- Name edition.

- Adaptive/static parameter configuration.

- Graphical experiment control (100%).

- Run and reset buttons.

- Iterations and rounds configuration.

- Timeout configuration.

- Graphical experiment output (100%).

- Experiment results summary.

- Solution evolution history.

- Optimizers comparative bar plot.

- Solution candidate visualization (100%).

- Quadratic function plot.

- Chess board for N-Queens.

- TSP destinations map.