This project is part of the Udacity Data Scientist Nanodegree. I analyzed disaster data from Figure Eight and built an ML pipeline that classifies messages so that they can be sent to the appropriate disaster relief agency. A Flask web app provides the UI for entering a message and displays the classification results (categories). Plotly is used for the data visualization.

Step 1. First I worked in 2 notebooks: ETL Pipeline Preparation.ipynb & ML Pipeline Preparation.ipynb.

Step 2. Then I completed the 2 scripts process_data.py and train_classifier.py with the code from the notebooks.

Step 3. For the web app, I used PyCharm as the IDE and Virtualenv to create a virtual environment (with all the required dependencies) for the project. The main work for the web app was to build a UI for the ML model and the data visualisation, which was written in Python with the help of Plotly in run.py.

The following libraries were required for the project:

- Python 3.5+

- Data processing & ML: pandas, numpy, scikit-learn & sqlalchemy

- Natural Language Processing: NLTK

- Model saving and loading: Pickle

- Data visualization: Matplotlib & seaborn

- Web app: Flask & Plotly

There are 3 main components in this repo:

- ETL Pipeline Python script

  `process_data.py` provides the data pipeline:

  - Loads the `messages` and `categories` datasets from 2 csv files
  - Merges the 2 dataframes
  - Cleans the data
  - Stores the data in a SQLite database called `disaster_response.db`

  Note: The code in the `ETL Pipeline Preparation.ipynb` notebook is similar to the `process_data.py` script.
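The ETL steps listed above can be sketched roughly as follows. This is a minimal illustration, not the actual contents of `process_data.py`: the `id` join key, the `label-0;label-1` category encoding, the table name `messages`, the sample rows, and the function names are all assumptions made for the example. It also uses a standard-library `sqlite3` connection in place of sqlalchemy so that it runs without a database file.

```python
import sqlite3

import pandas as pd


def merge_and_clean(messages: pd.DataFrame, categories: pd.DataFrame) -> pd.DataFrame:
    """Merge the two frames and expand the category string into 0/1 columns."""
    # Assumption: both frames share an "id" column to join on.
    df = messages.merge(categories, on="id")

    # Assumption: categories are encoded like "related-1;request-0".
    cats = df["categories"].str.split(";", expand=True)
    cats.columns = [value.split("-")[0] for value in cats.iloc[0]]
    for column in cats.columns:
        # Keep only the trailing digit and store it as an integer.
        cats[column] = cats[column].str[-1].astype(int)

    df = pd.concat([df.drop(columns="categories"), cats], axis=1)
    return df.drop_duplicates()


def save_to_sqlite(df: pd.DataFrame, connection: sqlite3.Connection,
                   table: str = "messages") -> None:
    """Write the cleaned frame to a SQLite table, replacing any old copy."""
    df.to_sql(table, connection, index=False, if_exists="replace")


# Tiny in-memory stand-ins for the two csv files (made-up rows).
messages = pd.DataFrame({
    "id": [1, 2, 2],
    "message": ["need water", "road blocked", "road blocked"],
})
categories = pd.DataFrame({
    "id": [1, 2],
    "categories": ["related-1;request-1", "related-1;request-0"],
})

cleaned = merge_and_clean(messages, categories)

# An in-memory database keeps the sketch free of side effects.
connection = sqlite3.connect(":memory:")
save_to_sqlite(cleaned, connection)
stored = pd.read_sql("SELECT * FROM messages", connection)
connection.close()
```

Keeping the cleaning step separate from the storage step makes the cleaning logic easy to try out in a notebook before moving it into a script.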

- ML Pipeline Python script

  `train_classifier.py` provides the ML pipeline:

  - Loads data from the SQLite database
  - Splits the dataset into training and test sets
  - Builds a text processing and machine learning pipeline
  - Trains and tunes a model using GridSearchCV
  - Outputs results on the test set
  - Exports the final model as a pickle file: `classifier.pkl`

  Note: The code in the `ML Pipeline Preparation.ipynb` notebook is similar to the `train_classifier.py` script.
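The split, pipeline, grid search and pickle steps above might look something like the sketch below. The source only states that a text processing and machine learning pipeline is tuned with GridSearchCV, so the specific choices here (`TfidfVectorizer`, `MultiOutputClassifier` wrapping a `RandomForestClassifier`, the parameter grid, and the toy messages and labels) are assumptions for illustration. Loading from SQLite and NLTK tokenization are left out to keep it short.

```python
import pickle

import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.multioutput import MultiOutputClassifier
from sklearn.pipeline import Pipeline

# Made-up messages with two made-up binary categories: [water, medical].
texts = [
    "we need water", "no clean water here", "water supply is gone",
    "please send a doctor", "many people are injured", "need medical help",
    "water and a doctor needed", "injured and thirsty, send water",
    "the weather is fine", "thank you all", "road is open again", "hello",
]
labels = np.array([
    [1, 0], [1, 0], [1, 0],
    [0, 1], [0, 1], [0, 1],
    [1, 1], [1, 1],
    [0, 0], [0, 0], [0, 0], [0, 0],
])

# Split the dataset into training and test sets.
X_train, X_test, y_train, y_test = train_test_split(
    texts, labels, test_size=0.25, random_state=0
)

# Text processing followed by one classifier per output category.
pipeline = Pipeline([
    ("tfidf", TfidfVectorizer()),
    ("clf", MultiOutputClassifier(RandomForestClassifier(random_state=0))),
])

# Hypothetical grid: a real search would usually cover more parameters.
param_grid = {"clf__estimator__n_estimators": [10, 20]}
search = GridSearchCV(pipeline, param_grid, cv=2)
search.fit(X_train, y_train)

# Report a result on the held-out test set (exact-match accuracy).
print("test score:", search.score(X_test, y_test))

# Round-trip through pickle in memory; a script would instead call
# pickle.dump(model, file) on a file opened in binary write mode.
restored = pickle.loads(pickle.dumps(search.best_estimator_))
predictions = restored.predict(X_test)
```

Putting the vectorizer inside the pipeline means the grid search refits it on each training fold, so no vocabulary leaks from the validation folds.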

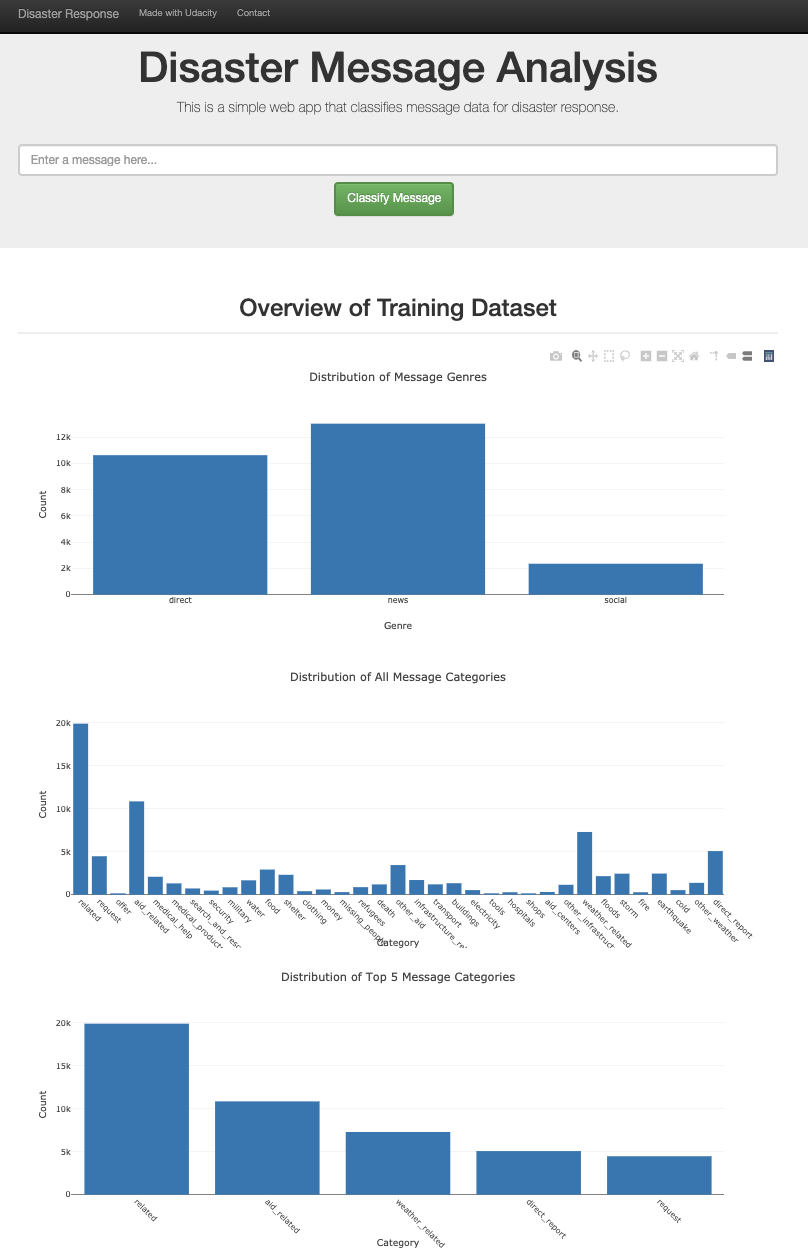

- Flask Web App

  The web app provides the following functionality:

  - Data visualization of the messages
  - Allows the user to enter a message
  - Outputs the categories of the message, classified by the ML model
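As a rough sketch of how a Flask app can expose both pieces of functionality, the example below returns a classification for a submitted message and builds a chart description for Plotly. It is not the code in `run.py`. The `/classify` route, the `query` parameter, the keyword-based `classify` stand-in for the pickled model, and the category names are all hypothetical. It also returns JSON rather than rendering an HTML page, and describes the chart as a plain dictionary in Plotly's figure layout rather than using the Plotly Python package.

```python
import json

from flask import Flask, jsonify, request

app = Flask(__name__)

# Hypothetical stand-in for the trained model loaded from a pickle file.
CATEGORY_KEYWORDS = {
    "water": ["water", "thirsty"],
    "medical": ["doctor", "injured"],
}


def classify(message: str) -> dict:
    """Return a 0/1 flag per category based on simple keyword matching."""
    words = message.lower().split()
    return {
        category: int(any(keyword in words for keyword in keywords))
        for category, keywords in CATEGORY_KEYWORDS.items()
    }


def build_graphs(counts: dict) -> str:
    """Serialize a bar chart description that Plotly.js could render."""
    graphs = [{
        "data": [{"type": "bar", "x": list(counts), "y": list(counts.values())}],
        "layout": {"title": "Messages per category"},
    }]
    return json.dumps(graphs)


@app.route("/classify")
def classify_route():
    # Read the user's message from the query string and classify it.
    query = request.args.get("query", "")
    return jsonify(query=query, categories=classify(query))


# Exercise the route without starting a server.
client = app.test_client()
response = client.get("/classify", query_string={"query": "we need water"})
result = response.get_json()
graph_json = build_graphs({"water": 3, "medical": 1})
```

Flask's test client is convenient for checking a route like this without binding to a port.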

- Run the following commands in the project's root directory to set up your database and model.

  - To run the ETL pipeline that cleans the data and stores it in the database:

    `python data/process_data.py data/disaster_messages.csv data/disaster_categories.csv data/DisasterResponse.db`

  - To run the ML pipeline that trains the classifier and saves it:

    `python models/train_classifier.py data/DisasterResponse.db models/classifier.pkl`

- Run the following command in the app's directory to run your web app.

  `python run.py`

- Go to http://0.0.0.0:3001/ to view the web app.

Here is what the web app UI looks like: