- changes to cached_conv to reduce the latency of causal models by one block

- log KLD measured in bits/second in TensorBoard

- scale beta (regularization parameter) appropriately with block size

- add several data augmentation options:

  - RandomEQ (randomized parametric EQ)

  - RandomDelay (randomized comb delay)

  - RandomGain (randomize gain without peaking over 1)

  - RandomSpeed (random resampling to different speeds)

  - RandomDistort (random drive + dry mix tanh waveshaping)

- add random cropping option to the spectral loss

- reduce default training window slightly (turns random cropping on by default)

- option not to freeze encoder once warmed up

- transfer learning: option to initialize just weights from another checkpoint

- export: option to specify exact number of latent dimensions (instead of fidelity)
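The augmentation options above are only named here, not specified. As a rough illustration, the following is a minimal pure-Python sketch of three of them, assuming mono float samples in [-1, 1]. The function names, parameter ranges, and the choice of a feedforward comb filter for RandomDelay are assumptions for illustration, not the fork's actual implementation.

```python
import math
import random


def random_gain(x, rng, max_cut_db=12.0):
    """Hypothetical RandomGain: random gain whose output peak never exceeds 1."""
    peak = max((abs(s) for s in x), default=0.0)
    if peak == 0.0:
        return list(x)  # silence: nothing to scale
    ceiling = 1.0 / peak  # largest gain that keeps |y| <= 1
    # Draw a level between `max_cut_db` below the ceiling and the ceiling itself.
    gain = ceiling * 10.0 ** (rng.uniform(-max_cut_db, 0.0) / 20.0)
    return [s * gain for s in x]


def random_delay(x, rng, max_delay=512, max_mix=0.5):
    """Hypothetical RandomDelay: feedforward comb filter y[n] = x[n] + g * x[n - d]."""
    d = rng.randint(1, max_delay)  # delay in samples
    g = rng.uniform(0.0, max_mix)  # level of the delayed copy
    return [s + g * (x[n - d] if n >= d else 0.0) for n, s in enumerate(x)]


def random_distort(x, rng, max_drive=10.0):
    """Hypothetical RandomDistort: tanh waveshaping with random drive and dry/wet mix."""
    drive = rng.uniform(1.0, max_drive)
    wet = rng.uniform(0.0, 1.0)
    # For |x| <= 1 the output stays within [-1, 1], since it is a convex mix
    # of the dry sample and a tanh value.
    return [(1.0 - wet) * s + wet * math.tanh(drive * s) for s in x]


if __name__ == "__main__":
    rng = random.Random(0)
    signal = [0.5 * math.sin(2 * math.pi * 220 * n / 48000) for n in range(4800)]
    for augment in (random_gain, random_delay, random_distort):
        out = augment(signal, rng)
        print(augment.__name__, "peak:", round(max(abs(v) for v in out), 3))
```

Since a comb delay can push the peak above 1, a gain stage like `random_gain` would naturally come last if these were chained.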

Clone the git repo and run:

```bash
RAVE_VERSION=2.3.0b CACHED_CONV_VERSION=2.6.0b pip install -e RAVE
```

See https://huggingface.co/Intelligent-Instruments-Lab/rave-models for pretrained checkpoints.

To use transfer learning, add 3 flags: `--transfer_ckpt /path/to/checkpoint/ --config /path/to/checkpoint/config.gin --config transfer`. Make sure to use all 3. `--transfer_ckpt` and the first `--config` will generally point to the same directory (minus the `config.gin` part).

For example:

```bash
rave train --gpu XXX --config XXX/rave-models/checkpoints/organ_archive_b512_r48000/config.gin --config transfer --config mid_beta --transfer_ckpt XXX/rave-models/checkpoints/organ_archive_b512_r48000 --db_path XXX --name XXX
```

This would do transfer learning from the low-latency (512-sample block) organ model. You can also add more configs; in the above example, `--config mid_beta` resets the regularization strength (the pretrained model used a low beta value). You could also adjust the sample rate or make other non-architectural changes. Make sure to add these after the first `--config` with the checkpoint path.
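The ordering rule for the `--config` flags is easy to get wrong when scripting runs. A minimal sketch, assuming you launch training from Python: the hypothetical helper `transfer_train_args` below only assembles the flags shown in the example above (`--gpu`, `--config`, `--transfer_ckpt`, `--db_path`, `--name`) in the required order; the paths and run name in the demo are placeholders.

```python
import shlex


def transfer_train_args(ckpt_dir, db_path, name, extra_configs=(), gpu=None):
    """Hypothetical helper: assemble `rave train` arguments for transfer learning.

    The checkpoint's own config.gin comes first, then `transfer`,
    then any extra (non-architectural) configs such as `mid_beta`.
    """
    ckpt_dir = ckpt_dir.rstrip("/")
    args = ["rave", "train"]
    if gpu is not None:
        args += ["--gpu", str(gpu)]
    args += ["--config", f"{ckpt_dir}/config.gin"]  # 1. architecture from the checkpoint
    args += ["--config", "transfer"]  # 2. enable weight transfer
    for cfg in extra_configs:  # 3. overrides, always after the checkpoint config
        args += ["--config", cfg]
    args += ["--transfer_ckpt", ckpt_dir, "--db_path", db_path, "--name", name]
    return args


if __name__ == "__main__":
    cmd = transfer_train_args(
        "rave-models/checkpoints/organ_archive_b512_r48000/",
        db_path="/dataset/path",  # placeholder
        name="organ_transfer",  # placeholder run name
        extra_configs=["mid_beta"],
        gpu=0,
    )
    print(shlex.join(cmd))  # or launch it with subprocess.run(cmd, check=True)
```

Building an argument list rather than a single string avoids shell quoting issues when paths contain spaces.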

Official implementation of _RAVE: A variational autoencoder for fast and high-quality neural audio synthesis_ by Antoine Caillon and Philippe Esling.

If you use RAVE as part of a music performance or installation, be sure to cite either this repository or the article!

If you want to share, discuss, or ask things about RAVE, you can do so in our Discord server!

The original implementation of the RAVE model can be restored using

```bash
git checkout v1
```

Install RAVE using

```bash
pip install acids-rave
```

You will need ffmpeg on your computer. You can install it locally inside your virtual environment using

```bash
conda install ffmpeg
```

A Colab notebook to train RAVEv2 is now available thanks to hexorcismos!

Training a RAVE model usually involves 3 separate steps, namely dataset preparation, training and export.

You can now prepare a dataset using two methods: regular and lazy. Lazy preprocessing allows RAVE to be trained directly on the raw files (e.g. mp3, ogg), without converting them first. Warning: lazy dataset loading will increase your CPU load by a large margin during training, especially on Windows. It can, however, be useful when training on a large audio corpus that would not fit on a hard drive when uncompressed. In any case, prepare your dataset using

```bash
rave preprocess --input_path /audio/folder --output_path /dataset/path
# or, for lazy preprocessing:
rave preprocess --input_path /audio/folder --output_path /dataset/path --lazy
```

RAVEv2 has many different configurations. The improved version of v1 is called v2, and can be trained with

```bash
rave train --config v2 --db_path /dataset/path --name give_a_name
```

We also provide a discrete configuration, similar to SoundStream or EnCodec:

```bash
rave train --config discrete ...
```

By default, RAVE is built with non-causal convolutions. If you want to make the model causal (hence lowering the overall latency of the model), you can use the causal mode:

```bash
rave train --config discrete --config causal ...
```

Many other configuration files are available in `rave/configs` and can be combined. Here is a list of all the available configurations:

| Type | Name | Description |
|---|---|---|
| Architecture | v1 | Original continuous model |
| | v2 | Improved continuous model (faster, higher quality) |
| | v3 | v2 with Snake activation, descript discriminator and Adaptive Instance Normalization for real style transfer |
| | discrete | Discrete model (similar to SoundStream or EnCodec) |
| | onnx | Noiseless v1 configuration for onnx usage |
| | raspberry | Lightweight configuration compatible with realtime RaspberryPi 4 inference |
| Regularization (v2 only) | default | Variational Auto Encoder objective (ELBO) |
| | wasserstein | Wasserstein Auto Encoder objective (MMD) |
| | spherical | Spherical Auto Encoder objective |
| Discriminator | spectral_discriminator | Use the MultiScale discriminator from EnCodec |
| Others | causal | Use causal convolutions |
| | noise | Enable noise synthesizer V2 |

Once trained, export your model to a torchscript file using

```bash
rave export --run /path/to/your/run
# or, for a streaming (realtime) model:
rave export --run /path/to/your/run --streaming
```

Setting the `--streaming` flag will enable cached convolutions, making the model compatible with realtime processing. If you forget to use the streaming mode and try to load the model in Max, you will hear clicking artifacts.
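One way to see why cached convolutions matter for realtime use is a toy causal FIR filter processed block by block. The sketch below is a generic illustration, not the cached_conv library's API (RAVE's real layers are multi-channel and learned); `causal_conv`, `CachedCausalConv` and `stream` are hypothetical names. Without a cache, each block starts from zeros, so the output is wrong right at every block edge; with a cache of the last `len(kernel) - 1` inputs, streaming reproduces the offline output exactly.

```python
import math


def causal_conv(x, kernel):
    """Reference causal FIR convolution over a whole signal (zeros before n = 0)."""
    return [
        sum(kernel[k] * x[n - k] for k in range(len(kernel)) if n - k >= 0)
        for n in range(len(x))
    ]


class CachedCausalConv:
    """Streaming version: keeps the last len(kernel) - 1 inputs between blocks."""

    def __init__(self, kernel):
        self.kernel = list(kernel)
        self.cache = [0.0] * (len(self.kernel) - 1)

    def __call__(self, block):
        k = len(self.kernel)
        padded = self.cache + list(block)  # previous context + new samples
        out = [
            sum(self.kernel[j] * padded[i + k - 1 - j] for j in range(k))
            for i in range(len(block))
        ]
        if k > 1:
            self.cache = padded[-(k - 1):]  # context for the next block
        return out


def stream(x, block_size, process):
    """Feed x to `process` block by block and concatenate the outputs."""
    y = []
    for start in range(0, len(x), block_size):
        y += process(x[start:start + block_size])
    return y


if __name__ == "__main__":
    x = [math.sin(0.1 * n) for n in range(64)]
    kernel = [0.5, 0.3, 0.2]
    full = causal_conv(x, kernel)
    cached = stream(x, 16, CachedCausalConv(kernel))
    naive = stream(x, 16, lambda b: causal_conv(b, kernel))  # no cache between blocks
    print(max(abs(a - b) for a, b in zip(full, cached)))  # matches the offline output
    print(max(abs(a - b) for a, b in zip(full, naive)))  # errors at block boundaries
```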

This section presents how RAVE can be loaded inside nn~ in order to be used live with Max/MSP or PureData.

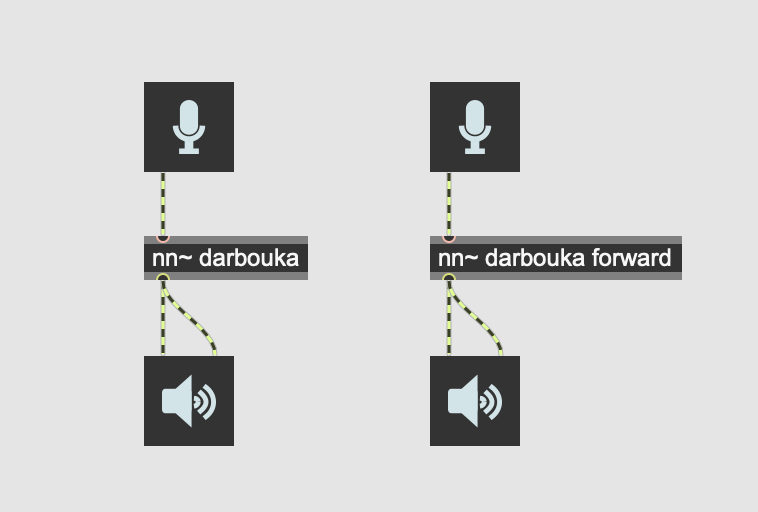

A pretrained RAVE model named `darbouka.gin` available on your computer can be loaded inside `nn~` using the following syntax, where the default method is set to `forward` (i.e. encode then decode).

This does the same thing as the following patch, but slightly faster.

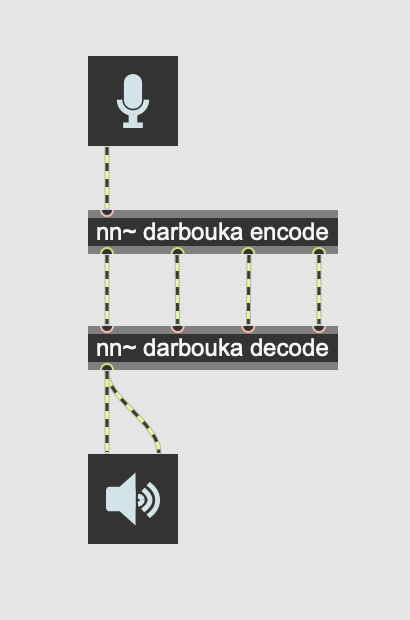

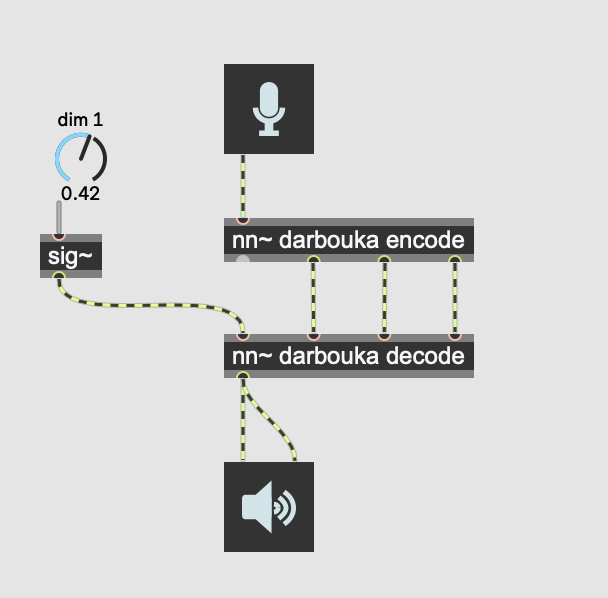

Having explicit access to the latent representation yielded by RAVE allows us to interact with it using Max/MSP or PureData signal processing tools:

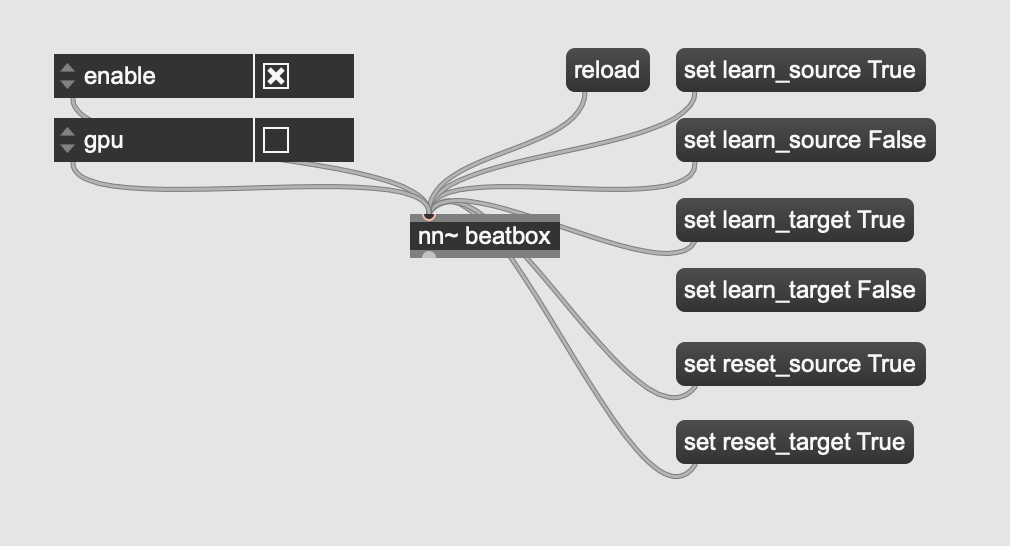

By default, RAVE can be used as a style transfer tool, owing to the large compression ratio of the model. We recently added a technique inspired by StyleGAN that includes Adaptive Instance Normalization in the reconstruction process, allowing users to define source and target styles directly inside Max/MSP or PureData, using the attribute system of nn~.
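For reference, the standard Adaptive Instance Normalization operation rescales each channel of the content features so that its mean and standard deviation match those of the style features: AdaIN(x, y) = σ(y) · (x − μ(x)) / σ(x) + μ(y). The sketch below applies this formula per channel to plain lists; it is a generic illustration only, and how RAVE v3 gathers the source and target statistics from its latent sequence and exposes them through nn~ attributes is not shown and may differ. The names `adain` and `adain_channels` are hypothetical.

```python
import statistics


def adain(content, style, eps=1e-5):
    """AdaIN for one channel: sigma(y) * (x - mu(x)) / sigma(x) + mu(y)."""
    mu_c, sigma_c = statistics.fmean(content), statistics.pstdev(content)
    mu_s, sigma_s = statistics.fmean(style), statistics.pstdev(style)
    # eps avoids division by zero when the content channel is constant.
    return [sigma_s * (c - mu_c) / (sigma_c + eps) + mu_s for c in content]


def adain_channels(content, style, eps=1e-5):
    """Apply AdaIN independently to each channel (one list of time frames per channel)."""
    return [adain(c, s, eps) for c, s in zip(content, style)]


if __name__ == "__main__":
    source = [[0.1, 0.4, -0.2, 0.3], [1.0, 1.2, 0.8, 1.1]]  # toy features, 2 channels
    target = [[2.0, -1.0, 0.5, 1.5], [0.0, 0.1, -0.1, 0.05]]
    for ch in adain_channels(source, target):
        print(round(statistics.fmean(ch), 3), round(statistics.pstdev(ch), 3))
```

Each output channel keeps the temporal shape of the source but takes on the target's per-channel mean and spread, which is what makes it usable as a style control.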

Other attributes, such as `enable` or `gpu`, can enable/disable computation or use the GPU to speed things up (still experimental).

Several pretrained streaming models are available here. We'll keep the list updated with new models.

If you have questions, want to share your experience with RAVE, or want to share musical pieces made with the model, you can use the Discussion tab!

Demonstration of what you can do with RAVE and the nn~ external for Max/MSP!

Using nn~ for PureData, RAVE can be used in realtime on embedded platforms!

This work is led at IRCAM and has been funded by the following projects:

- ANR MakiMono

- ACTOR

- DAFNE+ N° 101061548