This project fully implements the paper "3D Bounding Box Estimation Using Deep Learning and Geometry", building on previous work in [image-to-3d-bbox](https://github.com/experiencor/image-to-3d-bbox).

Requirements:

- Python 3.6
- TensorFlow 1.12.0

-

No prior knowledge of the object location is needed. Instead of reducing configuration numbers to 64, the location of each object is solved analytically based on local orientation and 2D location.

-

Add soft constraints to improve the stability of 3D bounding box at certain locations.

-

MobileNetV2 backend is used to significantly reduce parameter numbers and make the model Fully Convolutional.

-

The orientation loss is changed to the correct form.

-

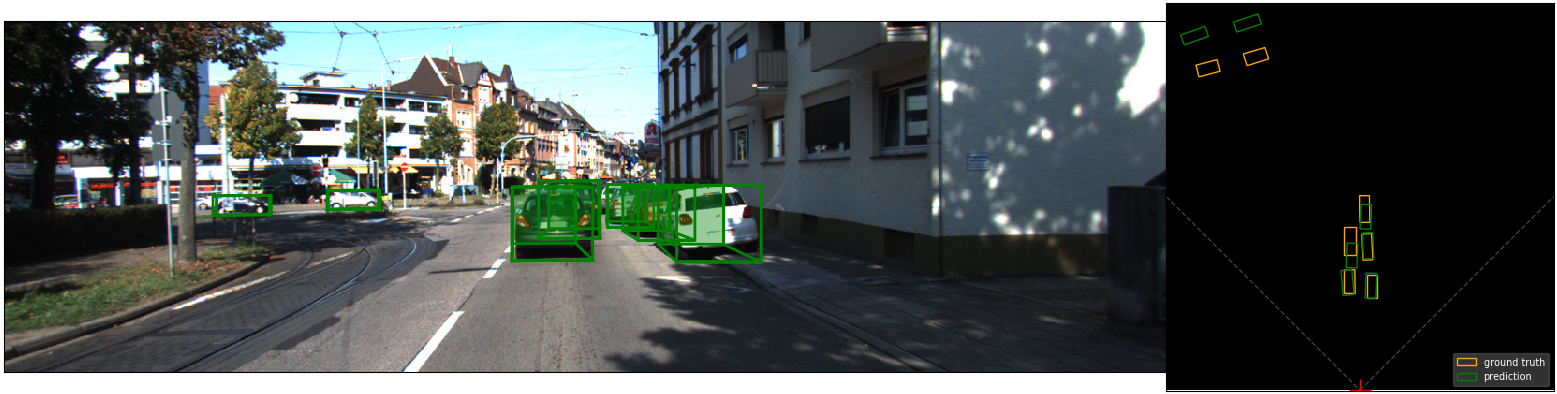

Bird-eye view visualization is added.

MobileNetV2 with ground-truth 2D bounding boxes.

Video: https://www.youtube.com/watch?v=IIReDnbLQAE
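The analytical location solve mentioned in the feature list can be illustrated as a small linear least-squares problem. The sketch below is a minimal NumPy illustration, not the repository's code. It assumes KITTI camera conventions (box origin at the bottom-face center, y pointing down, yaw about the y axis), a 3×3 intrinsic matrix `K`, and that the assignment of box corners to the four 2D-box sides (`corner_ids`) is already known; how the repository derives that assignment from the local orientation is not shown. All function names are hypothetical. Each side gives one equation that is linear in the translation `T`, because requiring `u = xmin` for a projected point `K(RX + T)` is equivalent to `(K[0] - xmin * K[2]) · (RX + T) = 0`. The global yaw is approximated as the local orientation `alpha` plus the angle of the viewing ray through the 2D box center column.

```python
import numpy as np


def rotation_y(ry):
    """Rotation about the camera y axis (KITTI convention)."""
    c, s = np.cos(ry), np.sin(ry)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def box_corners(dims):
    """Eight corners, shape (3, 8), of a box with dims (h, w, l), origin at the bottom-face center."""
    h, w, l = dims
    xs = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * l / 2.0
    ys = np.array([0, 0, 0, 0, -1, -1, -1, -1]) * h  # y points down, so the top face is at -h
    zs = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * w / 2.0
    return np.stack([xs, ys, zs])


def global_yaw(alpha, u_center, K):
    """Approximate global yaw: local orientation plus the ray angle through the box center column."""
    theta_ray = np.arctan2(u_center - K[0, 2], K[0, 0])
    return alpha + theta_ray


def project(K, points):
    """Project (3, N) camera-frame points to (2, N) pixel coordinates."""
    p = K @ points
    return p[:2] / p[2]


def solve_location(K, dims, ry, box2d, corner_ids):
    """Least-squares translation T such that the chosen corners project onto the 2D box sides.

    box2d = (xmin, ymin, xmax, ymax); corner_ids[i] is the corner assumed to touch side i.
    """
    corners = rotation_y(ry) @ box_corners(dims)  # rotated, not yet translated
    sides = [(0, box2d[0]), (1, box2d[1]), (0, box2d[2]), (1, box2d[3])]  # (row of K, bound)
    A, b = [], []
    for (row, bound), idx in zip(sides, corner_ids):
        # projected coordinate == bound  <=>  coeff . (X + T) == 0
        coeff = K[row] - bound * K[2]
        A.append(coeff)
        b.append(-coeff @ corners[:, idx])
    # Four equations, three unknowns: solve in the least-squares sense.
    T, _, _, _ = np.linalg.lstsq(np.array(A), np.array(b), rcond=None)
    return T


if __name__ == "__main__":
    # Synthetic round trip with hypothetical KITTI-like intrinsics and a known pose.
    K = np.array([[721.5, 0.0, 609.6], [0.0, 721.5, 172.9], [0.0, 0.0, 1.0]])
    dims, ry, T_true = (1.5, 1.6, 3.9), 0.3, np.array([2.0, 1.6, 15.0])
    uv = project(K, rotation_y(ry) @ box_corners(dims) + T_true[:, None])
    box2d = (uv[0].min(), uv[1].min(), uv[0].max(), uv[1].max())
    corner_ids = [uv[0].argmin(), uv[1].argmin(), uv[0].argmax(), uv[1].argmax()]
    print(solve_location(K, dims, ry, box2d, corner_ids))  # approximately [2.0, 1.6, 15.0]
```

Because the four rows of `A` have rank 3 whenever `xmin != xmax`, a consistent corner assignment recovers `T` exactly up to floating-point error; with a wrong assignment the residual grows, which is one way candidate assignments could be compared.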

First prepare your KITTI dataset in the following format:

    kitti_dateset/
    ├── 2011_09_26
    │   └── 2011_09_26_drive_0084_sync
    │       ├── box_3d                  <- predicted data
    │       ├── calib_02
    │       ├── calib_cam_to_cam.txt
    │       ├── calib_velo_to_cam.txt
    │       ├── image_02
    │       ├── label_02
    │       └── tracklet_labels.xml
    │
    └── training
        ├── box_3d                      <- predicted data
        ├── calib
        ├── image_2
        └── label_2
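The `label_2` files in this layout use the standard KITTI object label format: one object per line with 15 whitespace-separated fields (type, truncation, occlusion, alpha, 2D box, dimensions h/w/l, location x/y/z, rotation_y), plus a 16th `score` field in result files. Assuming the predicted `box_3d` files follow the same text format (not confirmed here), a small reader could look like the sketch below. The class and function names are hypothetical, and it uses only the standard library so it also runs on Python 3.6.

```python
from typing import List, NamedTuple, Optional, Tuple


class KittiObject(NamedTuple):
    cls: str                                 # object type, e.g. "Car"
    truncated: float                         # 0 (not truncated) .. 1 (truncated)
    occluded: int                            # 0 visible, 1 partly, 2 largely occluded, 3 unknown
    alpha: float                             # observation (local) angle in [-pi, pi]
    bbox: Tuple[float, float, float, float]  # left, top, right, bottom in pixels
    dims: Tuple[float, float, float]         # height, width, length in metres
    location: Tuple[float, float, float]     # bottom-face center (x, y, z), camera coords, metres
    rotation_y: float                        # yaw around the camera y axis in [-pi, pi]
    score: Optional[float] = None            # only present in result files


def parse_label_line(line: str) -> KittiObject:
    """Parse one line of a KITTI object label (15 fields) or result (16 fields) file."""
    f = line.split()
    if len(f) not in (15, 16):
        raise ValueError("expected 15 or 16 fields, got {}".format(len(f)))
    nums = [float(v) for v in f[1:]]
    return KittiObject(
        cls=f[0],
        truncated=nums[0],
        occluded=int(nums[1]),
        alpha=nums[2],
        bbox=tuple(nums[3:7]),
        dims=tuple(nums[7:10]),
        location=tuple(nums[10:13]),
        rotation_y=nums[13],
        score=nums[14] if len(nums) == 15 else None,
    )


def read_label_file(path: str) -> List[KittiObject]:
    """Parse every non-empty line of a label file."""
    with open(path) as fh:
        return [parse_label_line(line) for line in fh if line.strip()]
```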

To train:

- Specify parameters in `config.py`.
- Run `train.py` to train the model: `python3 train.py`

To predict:

- Change the directory in `read_dir.py` to your prediction folder.
- Run `prediction.py` to predict 3D bounding boxes. Use `-d` to set your dataset directory, `-a` to specify the dataset type (train/val split or raw), and `-w` to specify the trained weights.
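The feature list notes that the orientation loss was changed to the correct form. The repository's exact formulation is not reproduced here; as a reference point, the sketch below implements the cosine localization term of the MultiBin loss as stated in the original paper, `L_loc = -(1/n) * sum_i cos(theta* - c_i - dtheta_i)`, where the sum runs over the `n` bins whose range covers the ground-truth angle `theta*`, `c_i` is the center of bin `i`, and `dtheta_i` is the predicted residual. It is written in NumPy for readability (a TensorFlow version would use the equivalent tensor ops), and the function names are hypothetical.

```python
import numpy as np


def angle_to_bins(theta, bin_centers):
    """Ground-truth (cos, sin) of the residual theta - c_i for every bin, shape (B, 2)."""
    residual = theta - np.asarray(bin_centers)
    return np.stack([np.cos(residual), np.sin(residual)], axis=-1)


def orientation_loss(pred_cs, gt_cs, bin_mask, eps=1e-8):
    """Cosine orientation loss averaged over the batch.

    pred_cs:  (N, B, 2) raw predicted (cos, sin) of dtheta_i; L2-normalized here.
    gt_cs:    (N, B, 2) ground-truth (cos, sin) of theta* - c_i.
    bin_mask: (N, B) 1 for bins covering theta*, else 0.
    """
    pred = pred_cs / (np.linalg.norm(pred_cs, axis=-1, keepdims=True) + eps)
    # cos(a - b) = cos(a)cos(b) + sin(a)sin(b), i.e. a dot product of (cos, sin) pairs
    cos_diff = np.sum(pred * gt_cs, axis=-1)
    n_covering = np.maximum(bin_mask.sum(axis=1), 1.0)  # guard against empty masks
    per_sample = -np.sum(cos_diff * bin_mask, axis=1) / n_covering
    return float(per_sample.mean())


if __name__ == "__main__":
    gt = angle_to_bins(0.3, [0.0, np.pi])[None]  # one sample, two bins
    mask = np.array([[1.0, 0.0]])                 # only the first bin covers 0.3 rad
    print(orientation_loss(gt, gt, mask))         # approximately -1.0 for a perfect prediction
```

The loss ranges from -1 (predicted residual matches exactly) to +1 (opposite direction), and normalizing the predicted pair keeps it on the unit circle so the dot product is a true cosine.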

To visualize 3D bounding boxes:

- Run `visualization3Dbox.py`. Use `-s` to choose whether to save the figures or display the plot, and `-p` to specify your output image folder.
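A bird's-eye view drawing essentially needs each box's ground-plane footprint in camera (x, z) coordinates. The sketch below is an assumption about how such a footprint can be computed from a KITTI label (location, dimensions h/w/l, rotation_y), using the same rotation about the y axis as the 3D corners; it is not taken from `visualization3Dbox.py`. The pixel mapping in `to_bev_pixels` uses hypothetical display settings (resolution and ranges).

```python
import numpy as np


def bev_footprint(location, dims, ry):
    """Four ground-plane corners (x, z) of a KITTI box, shape (4, 2), in camera coordinates.

    location: (x, y, z) bottom-face center; dims: (h, w, l); ry: yaw around the camera y axis.
    """
    _, w, l = dims
    xs = np.array([l, l, -l, -l]) / 2.0
    zs = np.array([w, -w, -w, w]) / 2.0
    c, s = np.cos(ry), np.sin(ry)
    # Rotation about y applied to (x, z): x' = c*x + s*z, z' = -s*x + c*z
    x_rot = c * xs + s * zs + location[0]
    z_rot = -s * xs + c * zs + location[2]
    return np.stack([x_rot, z_rot], axis=1)


def to_bev_pixels(points_xz, resolution=0.1, x_min=-40.0, z_max=70.0):
    """Map (x, z) metres to (col, row) pixels of a top-down image.

    resolution (metres per pixel), x_min and z_max are hypothetical display settings.
    Forward (z) points up in the image, so rows grow as z decreases.
    """
    cols = (points_xz[:, 0] - x_min) / resolution
    rows = (z_max - points_xz[:, 1]) / resolution
    return np.stack([cols, rows], axis=1)
```

The four corners come out in traversal order, so they can be passed directly to a polygon-drawing routine.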

In the table, SC stands for soft constraint.

| Backbone | Parameters / model size | Inference time (s/img, CPU / GPU) | Metric | Easy (w/o SC) | Moderate (w/o SC) | Hard (w/o SC) | Easy (w/ SC) | Moderate (w/ SC) | Hard (w/ SC) |
|---|---|---|---|---|---|---|---|---|---|
| VGG | 40.4 M / 323 MB | 2.041 / 0.081 | AP2D | 100 | 100 | 100 | 100 | 100 | 100 |
| | | | AOS | 99.98 | 99.82 | 99.57 | 99.98 | 99.82 | 99.57 |
| | | | APBV | 26.42 | 28.15 | 27.74 | 32.89 | 29.40 | 33.46 |
| | | | AP3D | 20.53 | 22.17 | 25.71 | 27.04 | 27.62 | 27.06 |
| MobileNetV2 | 2.2 M / 19 MB | 0.410 / 0.113 | AP2D | 100 | 100 | 100 | 100 | 100 | 100 |
| | | | AOS | 99.78 | 99.23 | 98.18 | 99.78 | 99.23 | 98.18 |
| | | | APBV | 11.04 | 8.99 | 10.51 | 11.62 | 8.90 | 10.42 |
| | | | AP3D | 7.98 | 7.95 | 9.32 | 10.42 | 7.99 | 9.32 |

Hardware used for the inference times: CPU: Intel Core i5 (7th generation); GPU: NVIDIA TITAN X.
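The Easy, Moderate and Hard columns are the KITTI object benchmark difficulty levels, defined by minimum 2D box height, maximum occlusion level and maximum truncation: 40 px / fully visible / 15% (Easy), 25 px / partly occluded / 30% (Moderate), and 25 px / difficult to see / 50% (Hard). The levels are nested, so an Easy object also counts toward Moderate and Hard. The hypothetical helper below encodes those thresholds; the strict height comparison is an assumption about boundary handling.

```python
# (level, min 2D box height in px, max occlusion level, max truncation)
KITTI_DIFFICULTY = (
    ("Easy", 40.0, 0, 0.15),
    ("Moderate", 25.0, 1, 0.30),
    ("Hard", 25.0, 2, 0.50),
)


def levels_for(box_height, occluded, truncated):
    """Return every difficulty level an object counts toward (levels are nested).

    Heights are compared with a strict '>' here; this boundary choice is an assumption.
    """
    return [
        name
        for name, min_h, max_occ, max_trunc in KITTI_DIFFICULTY
        if box_height > min_h and occluded <= max_occ and truncated <= max_trunc
    ]
```

For example, a partly occluded car whose 2D box is 30 px tall is evaluated only in the Moderate and Hard columns.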