cloudquery transforms your cloud infrastructure into queryable SQL or Graphs for easy monitoring, governance, and security.

- What is cloudquery and why use it?

- Supported providers (Actively expanding)

- Installing cloudquery

- Quick Start

- Providers Authentication

- Common example queries

- Running cloudquery on AWS (Lambda, Terraform)

- Resources

- License

- Contribution

cloudquery pulls, normalizes, exposes, and monitors your cloud infrastructure and SaaS apps as a SQL database. This abstracts the various scattered APIs, enabling you to define security, governance, cost, and compliance policies with SQL.

- cloudquery can be easily extended to more resources and SaaS providers (open an Issue).

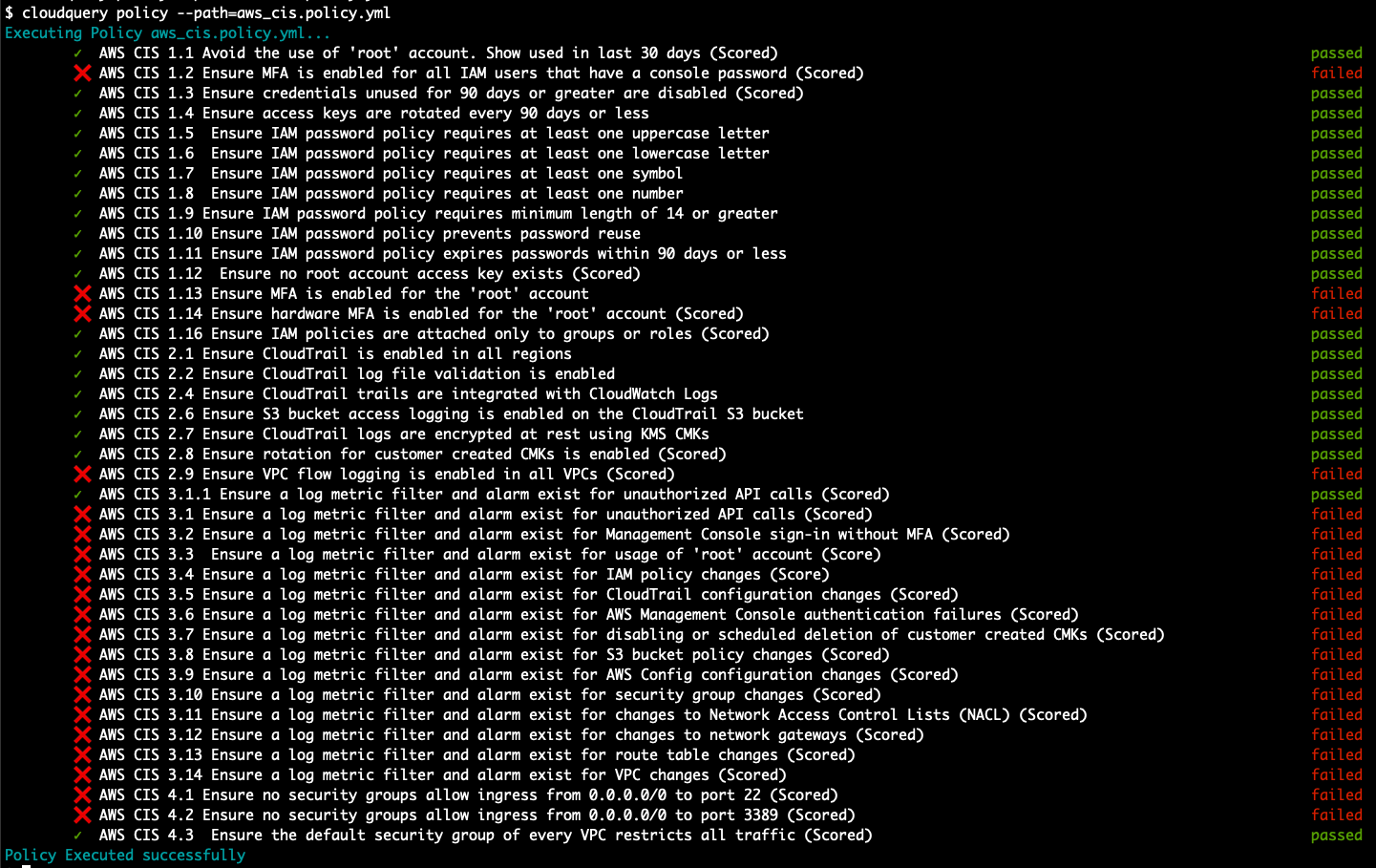

- cloudquery comes with built-in policy packs such as AWS CIS (more are coming!).

Think about cloudquery as a compliance-as-code tool inspired by tools like osquery and terraform, cool right?

Currently cloudquery supports multiple providers, including AWS, Azure, GCP, and Okta.

Check out https://hub.cloudquery.io for more details about the providers.

If you want us to add a new provider or resource please open an Issue.

- You can download the precompiled binary from releases, or using the CLI:

```shell
export OS=Darwin # Possible values: Linux,Windows,Darwin
curl -L https://github.com/cloudquery/cloudquery/releases/latest/download/cloudquery_${OS}_x86_64 -o cloudquery
chmod a+x cloudquery
./cloudquery --help

# If you want to download a specific version and not the latest use the following endpoint
export VERSION=v0.13.6 # specify a version, refer to releases https://github.com/cloudquery/cloudquery/releases
curl -L https://github.com/cloudquery/cloudquery/releases/download/${VERSION}/cloudquery_${OS}_x86_64 -o cloudquery
```

- You can use homebrew on macOS to install cloudquery:

```shell
brew install cloudquery/tap/cloudquery
# After initial install you can upgrade the version via:
brew upgrade cloudquery
```

- You can pull the Docker image:

```shell
docker pull ghcr.io/cloudquery/cloudquery:latest
# If you want to download a specific version and not the latest use the following format (refer to releases for tags)
docker pull ghcr.io/cloudquery/cloudquery:0.13.6
docker pull ghcr.io/cloudquery/cloudquery:0.13
```

- You can build cloudquery from source:

```shell
git clone https://github.com/cloudquery/cloudquery.git
cd cloudquery
# Make sure you have installed go and its required dependencies
go build .
./cloudquery # --help to see all options
```

- First generate a `config.yml` file that describes which resources you want cloudquery to pull, normalize, and transform into the specified SQL database, by running the following command:

```shell
cloudquery init aws # choose one or more from [aws azure gcp okta]
# cloudquery init gcp azure # This will generate a config containing gcp and azure providers
# cloudquery init --help # Show all possible auto-generated configs and flags
```

- Once your `config.yml` is generated, run the following command to fetch the resources from the provider:

```shell
# you can spawn a local postgresql with docker
# docker run -p 5432:5432 -e POSTGRES_PASSWORD=pass -d postgres
cloudquery fetch --dsn "host=localhost user=postgres password=pass dbname=postgres port=5432"
# cloudquery fetch --help # Show all possible fetch flags
```

- Log in to the Postgres database using:

```shell
psql -h localhost -p 5432 -U postgres -d postgres
postgres=# \dt
                                   List of relations
 Schema |                           Name                            | Type  |  Owner
--------+-----------------------------------------------------------+-------+----------
 public | aws_autoscaling_launch_configuration_block_device_mapping | table | postgres
 public | aws_autoscaling_launch_configurations                     | table | postgres
```

- List AWS EC2 images:

```sql
SELECT * FROM aws_ec2_images;
```

- Find all public facing AWS load balancers:

```sql
SELECT * FROM aws_elbv2_load_balancers WHERE scheme = 'internet-facing';
```

cloudquery comes with ready-to-use compliance policy packs (curated lists of queries for checking compliance), which you can use as-is or modify to fit your use case.

- Currently, cloudquery supports the AWS CIS policy pack (it is under active development, so it doesn't cover the whole spec yet).

- To run the AWS CIS pack, enter the following command (make sure you have fetched all the resources beforehand with the `fetch` command):

```shell
./cloudquery policy --path=<PATH_TO_POLICY_FILE> --output=<PATH_TO_OUTPUT_POLICY_RESULT> --dsn "host=localhost user=postgres password=pass dbname=postgres port=5432"
```

- You can also create your own policy file, for example:

```yaml
views:
  - name: "my_custom_view"
    query: >
      CREATE VIEW my_custom_view AS ...

queries:
  - name: "Find thing that violates policy"
    query: >
      SELECT account_id, arn FROM ...
```

The `policy` command uses the policy file path `./policy.yml` by default, but this can be overridden via the `--path` flag or the `CQ_POLICY_PATH` environment variable.
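As a rough sketch of the environment variable override described above, the following writes a small custom policy file and points cloudquery at it through `CQ_POLICY_PATH`. The scratch directory, the file names `lb-policy.yml` and `policy-result`, and the guard around the final command are illustrative assumptions. The query is the load balancer example from earlier in this README, and the flags are the ones shown in the AWS CIS command.

```shell
# Create a scratch directory for the example (hypothetical location).
POLICY_DIR="$(mktemp -d)"

# Point the policy command at a custom file instead of ./policy.yml.
export CQ_POLICY_PATH="${POLICY_DIR}/lb-policy.yml"

# Write a one-query policy file using the layout shown above.
cat > "${CQ_POLICY_PATH}" <<'EOF'
queries:
  - name: "Find public facing AWS load balancers"
    query: >
      SELECT * FROM aws_elbv2_load_balancers WHERE scheme = 'internet-facing';
EOF

# Run the policy only when the cloudquery binary is installed.
# Assumes the resources were already fetched into this database.
if command -v cloudquery >/dev/null 2>&1; then
  cloudquery policy \
    --output="${POLICY_DIR}/policy-result" \
    --dsn "host=localhost user=postgres password=pass dbname=postgres port=5432"
fi
```

Passing `--path` on the command line is the other override mentioned above; this sketch sets only the environment variable so the two do not compete.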

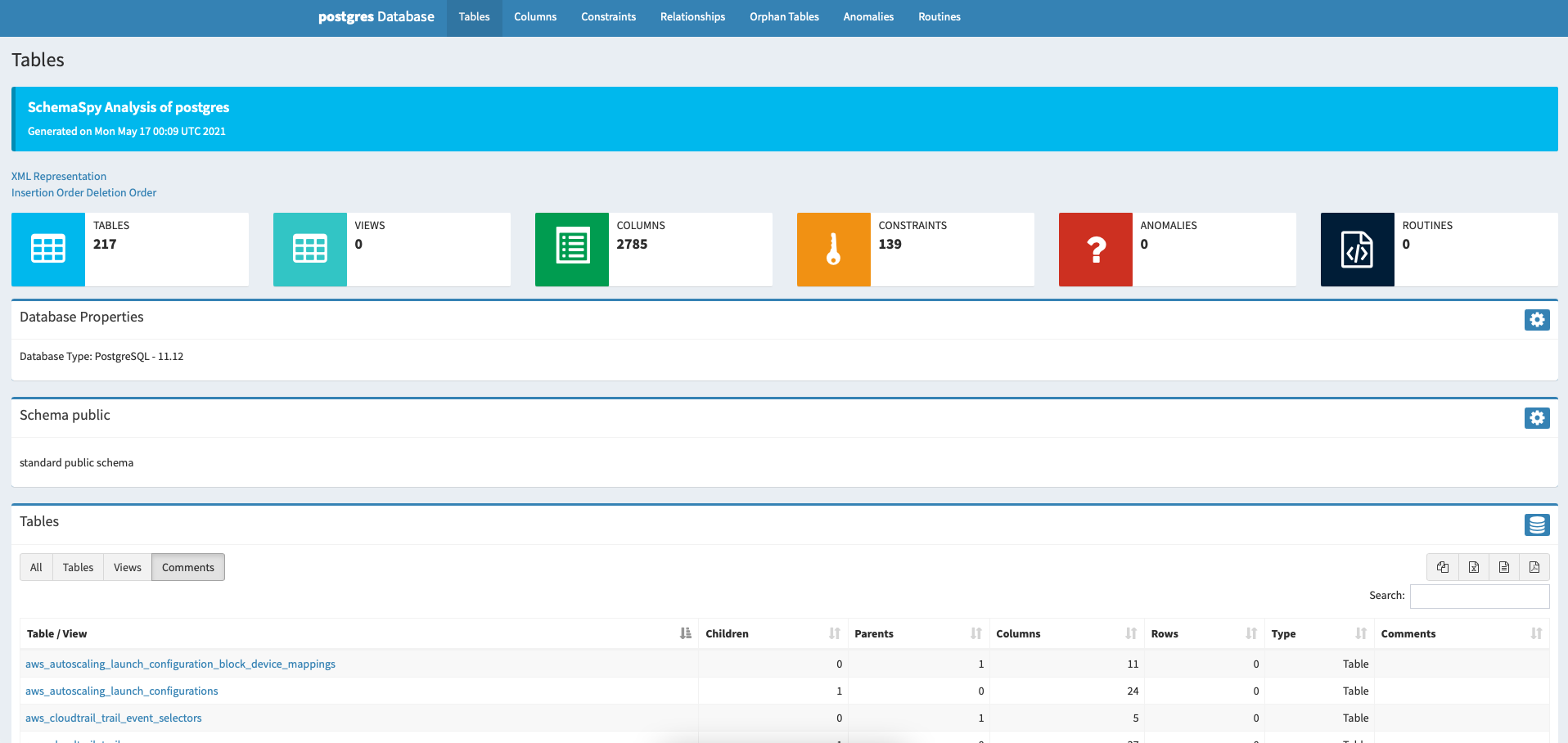

- Full documentation, resources, and SQL schema definitions are available here

- You should be authenticated with an AWS account with the correct permissions, using one of the following options (see full documentation):
  - You can specify the AWS access key via the `AWS_ACCESS_KEY_ID` environment variable and the secret key via the `AWS_SECRET_ACCESS_KEY` environment variable.
  - Also, you can use the awscli configured credentials from `~/.aws/credentials`, created via `aws configure`.
  - You can use the `AWS_PROFILE` environment variable to specify the AWS profile you want cloudquery to use when you have multiple profiles.

- Multi-account AWS support is available by using an account which can AssumeRole to other accounts.

- In your `config.hcl` you need to specify `role_arns` if you want to query multiple accounts, in the following way:

```hcl
accounts "<YOUR ACCOUNT ID>" {
  // Optional. Role ARN we want to assume when accessing this account
  role_arn = "<YOUR_ROLE_ARN>"
}
```

- Refer to https://github.com/cloudquery/cq-provider-aws for more details
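Before running a fetch, it can help to check which of the credential options listed above is actually present. The helper below is a minimal sketch: the function name `aws_credential_source` and its output strings are hypothetical, and the order it checks (environment variables first, then the shared credentials file) is a choice made for this example, not a statement about how cloudquery resolves credentials.

```shell
# Hypothetical helper: report which AWS credential source is available.
# Prints "environment", "shared-credentials-file" (optionally with the
# selected profile), or "none". Returns non-zero when nothing is found.
aws_credential_source() {
  if [ -n "${AWS_ACCESS_KEY_ID:-}" ] && [ -n "${AWS_SECRET_ACCESS_KEY:-}" ]; then
    echo "environment"
  elif [ -f "${HOME}/.aws/credentials" ]; then
    if [ -n "${AWS_PROFILE:-}" ]; then
      echo "shared-credentials-file profile=${AWS_PROFILE}"
    else
      echo "shared-credentials-file"
    fi
  else
    echo "none"
    return 1
  fi
}
```

For example, `aws_credential_source || echo "configure AWS credentials first" >&2` prints a reminder when neither option is set up.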

You should set the following environment variables: `AZURE_CLIENT_ID`, `AZURE_CLIENT_SECRET`, and `AZURE_TENANT_ID`, which you can generate via `az ad sp create-for-rbac --sdk-auth`. See full details at environment based authentication for sdk.

- Refer to https://github.com/cloudquery/cq-provider-azure for more details

You should be authenticated with a GCP account that has the correct permissions for the data you want to pull. You should set `GOOGLE_APPLICATION_CREDENTIALS` to point to your downloaded credential file.

- Refer to https://github.com/cloudquery/cq-provider-gcp for more details

You need to set the `OKTA_TOKEN` environment variable.

- Refer to https://github.com/cloudquery/cq-provider-okta for more details
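The Azure, GCP, and Okta providers above each rely on environment variables. The following is a small sketch of a pre-flight check that lists which of those variables are still unset. The function name `missing_provider_env` is hypothetical; the variable names are the ones given in the provider notes above.

```shell
# Hypothetical helper: print the required environment variables that are
# unset or empty for a provider. Returns 0 when nothing is missing,
# 1 when something is missing, and 2 for an unknown provider.
missing_provider_env() {
  case "$1" in
    azure) required="AZURE_CLIENT_ID AZURE_CLIENT_SECRET AZURE_TENANT_ID" ;;
    gcp)   required="GOOGLE_APPLICATION_CREDENTIALS" ;;
    okta)  required="OKTA_TOKEN" ;;
    *)     echo "unknown provider: $1" >&2; return 2 ;;
  esac

  missing=""
  for name in $required; do
    # Indirect lookup; names come only from the fixed lists above.
    eval "value=\${$name:-}"
    [ -n "$value" ] || missing="${missing}${missing:+ }${name}"
  done

  [ -z "$missing" ] && return 0
  echo "$missing"
  return 1
}
```

A call such as `missing_provider_env azure` prints the names that still need to be exported, and prints nothing when all three are set.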

You can leverage the cloudquery provider SDK, which enables building providers to query any service or custom in-house solutions with SQL

- Refer to https://github.com/cloudquery/cq-provider-sdk for more details

Below are some example queries for basic tasks such as finding insecure buckets, identifying publicly exposed load balancers, and spotting misconfigurations.

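```sql
-- GCP storage buckets that are publicly readable (role granted to allUsers)
SELECT gcp_storage_buckets.name
FROM gcp_storage_buckets
JOIN gcp_storage_bucket_policy_bindings ON gcp_storage_bucket_policy_bindings.bucket_id = gcp_storage_buckets.id
JOIN gcp_storage_bucket_policy_binding_members ON gcp_storage_bucket_policy_binding_members.bucket_policy_binding_id = gcp_storage_bucket_policy_bindings.id
WHERE gcp_storage_bucket_policy_binding_members.name = 'allUsers' AND gcp_storage_bucket_policy_bindings.role = 'roles/storage.objectViewer';

-- Public facing AWS load balancers
SELECT * FROM aws_elbv2_load_balancers WHERE scheme = 'internet-facing';

-- AWS RDS clusters without storage encryption
-- (compares against a boolean, as Postgres does not accept "= 0" here)
SELECT * FROM aws_rds_clusters WHERE storage_encrypted = false;

-- AWS S3 buckets that have no encryption rule
-- (LEFT JOIN keeps buckets with no matching rule; the WHERE keeps only those)
SELECT aws_s3_buckets.*
FROM aws_s3_buckets
LEFT JOIN aws_s3_bucket_encryption_rules ON aws_s3_buckets.id = aws_s3_bucket_encryption_rules.bucket_id
WHERE aws_s3_bucket_encryption_rules.bucket_id IS NULL;
```

More examples and information are available here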
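To run queries like the ones above outside an interactive session, a thin wrapper around `psql` may be enough. This is a sketch under assumptions: the function name `cq_query` is hypothetical, the connection defaults mirror the local Postgres used in the quick start, and `--csv` requires psql 12 or newer.

```shell
# Hypothetical helper: run one SQL statement against the database that
# cloudquery fetched into and print the result as CSV.
# Connection settings fall back to the quick start defaults.
cq_query() {
  psql --host "${PGHOST:-localhost}" \
       --port "${PGPORT:-5432}" \
       --username "${PGUSER:-postgres}" \
       --dbname "${PGDATABASE:-postgres}" \
       --csv \
       --command "$1"
}
```

For instance, `cq_query "SELECT * FROM aws_elbv2_load_balancers WHERE scheme = 'internet-facing';" > public_load_balancers.csv` saves the result to a file. The password can be supplied through the standard `PGPASSWORD` environment variable or a `~/.pgpass` file.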

You can use the Makefile to build, deploy, and destroy the entire terraform infrastructure. The default execution configuration file can be found at: ./deploy/aws/terraform/tasks/us-east-1

- You can define more tasks by adding cloudwatch periodic events

- By default, cloudquery is executed once a day with the default configuration.

```hcl
resource "aws_cloudwatch_event_rule" "scan_schedule" {
  name                = "Cloudquery-us-east-1-scan"
  description         = "Run cloudquery everyday on us-east-1 resources"
  schedule_expression = "rate(1 day)"
}

resource "aws_cloudwatch_event_target" "sns" {
  rule  = aws_cloudwatch_event_rule.scan_schedule.name
  arn   = aws_lambda_function.cloudquery.arn
  input = file("tasks/us-east-1/input.json")
}
```

- Make sure you have installed terraform, refer to https://learn.hashicorp.com/tutorials/terraform/install-cli

- Build the cloudquery binary from source:

```shell
make build
```

- Deploy the cloudquery infrastructure to AWS using terraform:

```shell
make apply
```

You can also use `init`, `plan`, and `destroy` to perform different operations with terraform; refer to the Terraform docs for more information.

- cloudquery homepage: https://cloudquery.io

- Releases: https://github.com/cloudquery/cloudquery/releases

- Documentation: https://docs.cloudquery.io

- Schema explorer (schemaspy): https://schema.cloudquery.io/

- Database Configuration: https://docs.cloudquery.io/database-configuration

By contributing to cloudquery you agree that your contributions will be licensed as defined in the LICENSE file.

Feel free to open a pull request for small fixes and changes. For bigger changes and new providers, please open an issue first to avoid duplicate work and to discuss the change.