Generative Adversarial Networks (GANs) have been fairly successful in image generation tasks. GANs produce sharp, detailed images but are difficult to train because training is a competitive game between two networks, the discriminator (D) and the generator (G). Each training step changes the nature of the optimization problem, so training may fall into one of many local minima and fail.
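
For reference, the competitive game can be written as the standard minimax objective from the original GAN formulation. This is the textbook form, not necessarily the exact loss used in this repo; $p_{\text{data}}$ is the distribution of real images and $p_z$ is the noise prior fed to the generator.

```math
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

Because D and G are updated in alternation, each update to one network changes the loss surface seen by the other, which is why the optimization target keeps moving.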

To stabilize training, various architectures and mechanisms have been suggested, one of the earliest being the Deep Convolutional GAN (DCGAN). DCGAN was the result of exhaustive empirical experiments tuning various hyperparameters and architectures. DCGANs are easy to set up and train. They don't offer the best output quality but are fairly adaptable to different types of image generation tasks.

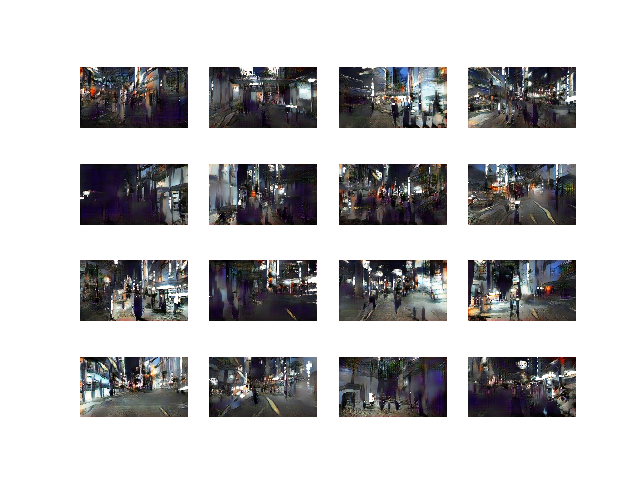

In this repo, I've attempted to generate individual frames from a city-walk video (video credit: Rambalac). The problem is interesting to me as a way to automatically generate city-walk videos. This DCGAN model generates 160x320 images, which is a non-standard size as far as conventional GAN implementations are concerned.
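
To see why a 160x320 output is reachable with DCGAN-style upsampling, here is a small sketch of the size arithmetic. It assumes 160 is the height and 320 the width, a 5x10 starting feature map, and transposed convolutions with kernel 4, stride 2, padding 1. All of these are illustrative assumptions, and the helper names `conv_transpose_out` and `upsample_path` are hypothetical; the actual layers in this repo may differ.

```python
def conv_transpose_out(size: int, kernel: int = 4, stride: int = 2, padding: int = 1) -> int:
    """Output length of a transposed convolution along one axis
    (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def upsample_path(start_hw, num_layers):
    """List the (height, width) of the feature map after each upsampling layer."""
    shapes = [tuple(start_hw)]
    for _ in range(num_layers):
        h, w = shapes[-1]
        shapes.append((conv_transpose_out(h), conv_transpose_out(w)))
    return shapes


# Assumed 5x10 seed map; each layer doubles both dimensions.
print(upsample_path((5, 10), 5))
# [(5, 10), (10, 20), (20, 40), (40, 80), (80, 160), (160, 320)]
```

With these settings each layer exactly doubles the spatial size, so five layers take a 5x10 map to 160x320. A rectangular seed map is what makes the non-square output possible.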

This implementation of DCGAN can be found here with slight architectural change.

First, the video is split into individual frames using OpenCV; frame skipping is used to keep the frame count around 10k. The frames are then fed to the GAN, which is trained for 500 epochs. Due to the stochastic nature of the problem, training may not run for the full 500 epochs and may fail after 100 epochs. The model starts generating natural-looking images after 60-70 epochs. The inference scripts can then be used to generate sample plots and frames from the saved models.
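
The frame-splitting step could look roughly like the sketch below. It is not the repo's actual script: the function names `compute_frame_step` and `extract_frames`, the output file naming, and the resize to 320 wide by 160 high are assumptions made for illustration.

```python
import os


def compute_frame_step(total_frames: int, target_count: int = 10_000) -> int:
    """Return N such that keeping every N-th frame yields at most target_count frames."""
    if total_frames <= 0 or target_count <= 0:
        raise ValueError("total_frames and target_count must be positive")
    # Ceiling division; a step of 1 keeps every frame.
    return max(1, -(-total_frames // target_count))


def extract_frames(video_path: str, out_dir: str, target_count: int = 10_000) -> int:
    """Save evenly spaced frames as PNG files and return how many were saved."""
    import cv2  # imported here so compute_frame_step works without OpenCV installed

    os.makedirs(out_dir, exist_ok=True)
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise IOError(f"could not open {video_path}")

    total = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    # Some containers report 0 frames; fall back to keeping every frame.
    step = compute_frame_step(total, target_count) if total > 0 else 1

    index = saved = 0
    while True:
        ok, frame = cap.read()
        if not ok:
            break
        if index % step == 0:
            # cv2.resize takes (width, height); 320x160 is an assumed orientation.
            frame = cv2.resize(frame, (320, 160))
            cv2.imwrite(os.path.join(out_dir, f"frame_{saved:05d}.png"), frame)
            saved += 1
        index += 1

    cap.release()
    return saved
```

For example, a video with 25,000 frames and a target of 10,000 gives a step of 3, so about 8,334 frames are kept.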

For training:

`python train_unconditional_gan.py`

or

`python train_unconditional_gan_big.py` (a slight change in the training procedure: it does not use shuffled images from the video)

For testing:

`python inference_unconditional_gan.py`

2. Use of better optimization (loss) functions