This project is based on this material.

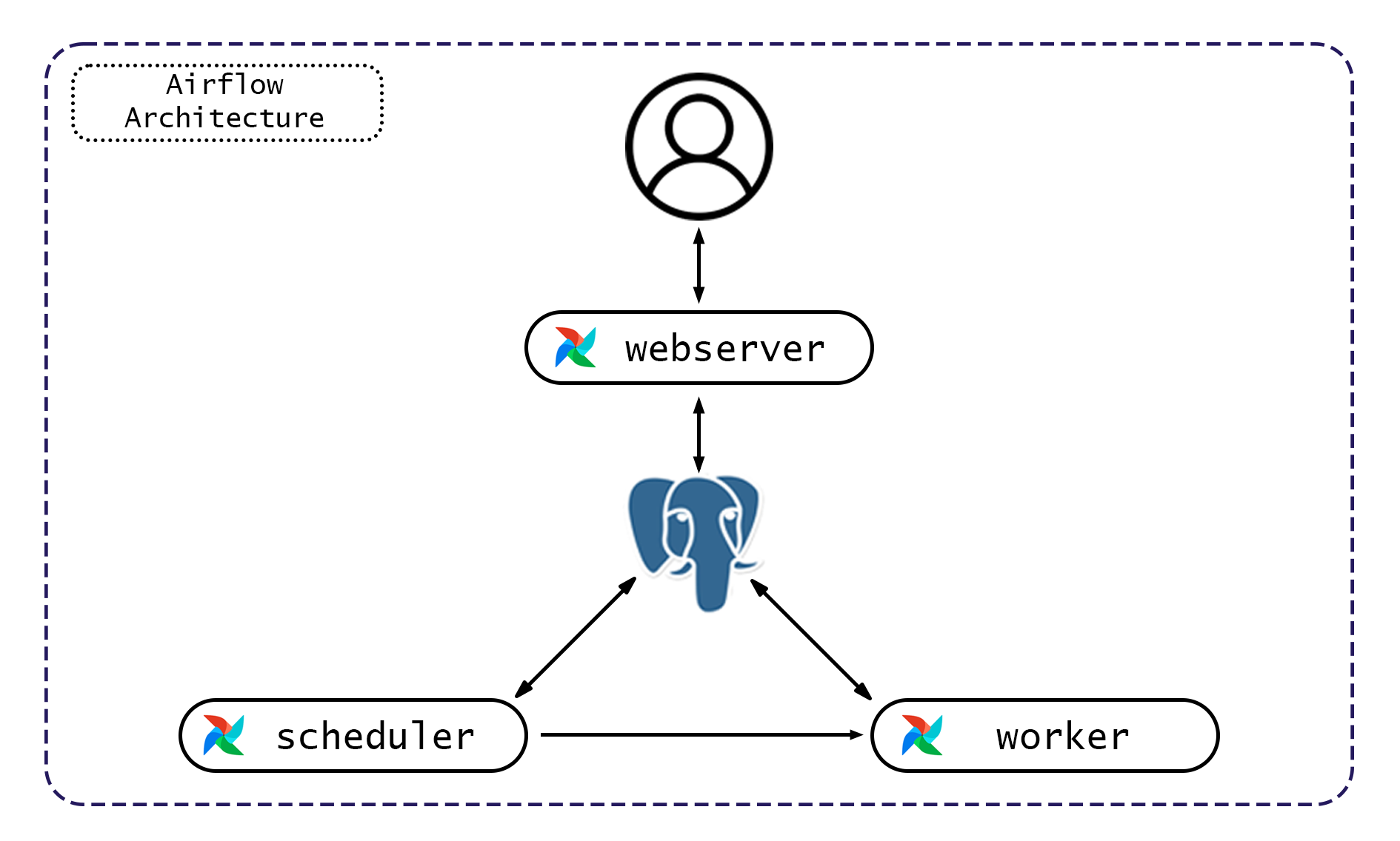

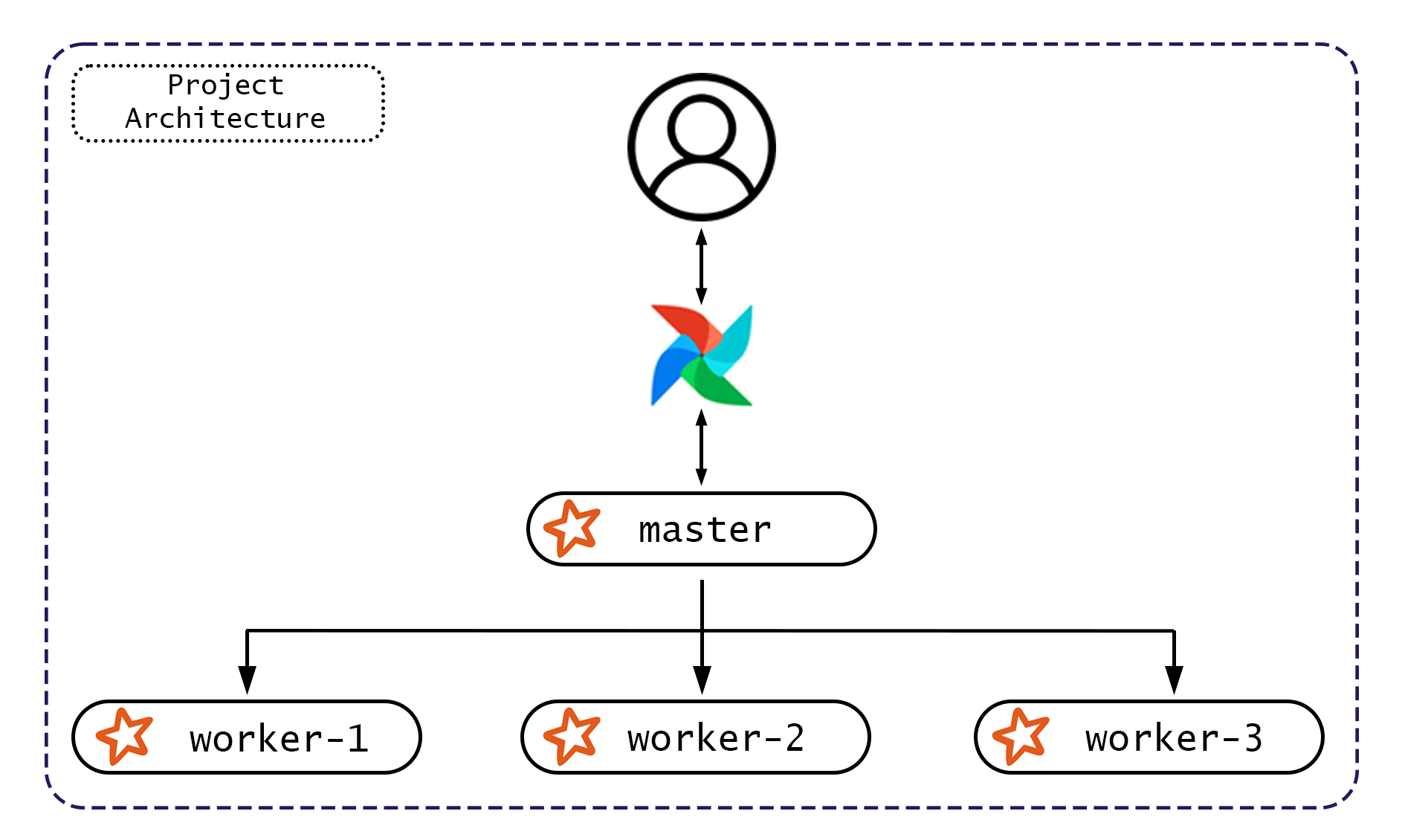

It provides Apache Airflow configured with LocalExecutor and an Apache Spark cluster in standalone mode.

Versions: Apache Airflow 2.3 with Postgres 13, and Apache Spark 3.1.2 with Hadoop 3.2.

| Application | Description |
|---|---|
| Postgres | Postgres database for Airflow metadata |
| Airflow-init | The initialization service |
| Airflow-worker | The worker that executes the tasks given by the scheduler |
| Airflow-scheduler | The scheduler monitors all tasks and DAGs |
| Airflow-webserver | The webserver. Available at http://localhost:8080 |

| Application | Description |
|---|---|
| Spark | Spark Master node. Available at http://localhost:8181 |
| Spark-worker-1 | Spark Worker node with 4 cores and 4 GB of memory (can be configured manually in docker-compose.yml) |
| Spark-worker-2 | Spark Worker node with 4 cores and 4 GB of memory (can be configured manually in docker-compose.yml) |
| Spark-worker-3 | Spark Worker node with 4 cores and 4 GB of memory (can be configured manually in docker-compose.yml) |

- Install Docker and Docker Compose.
- Clone the project: `git clone https://github.com/mbvyn/AirflowSpark.git`
- Inside `AirflowSpark/docker/`, start the containers: `docker compose up -d`
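After the containers start, it can take a while before both web interfaces respond. The following is a minimal sketch for polling them from Python. The two URLs are the ones listed in the tables above; the helper names, the retry count, and the delay are illustrative assumptions and not part of this project.

```python
import time
import urllib.error
import urllib.request

# URLs taken from the service tables in this README.
SERVICES = {
    "airflow-webserver": "http://localhost:8080",
    "spark-master": "http://localhost:8181",
}


def http_probe(url, timeout=3.0):
    """Return True if the URL answers with any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar.
        return False


def wait_for_services(services, probe=http_probe, attempts=30, delay=2.0):
    """Poll every service until all respond or the attempts run out.

    Returns the set of service names that never responded.
    """
    pending = dict(services)
    for attempt in range(attempts):
        # Keep only the services that still do not answer.
        pending = {name: url for name, url in pending.items() if not probe(url)}
        if not pending:
            break
        if attempt < attempts - 1:
            time.sleep(delay)
    return set(pending)
```

Calling `wait_for_services(SERVICES)` returns an empty set once both interfaces answer, or the names of the services that stayed unreachable. The `probe` parameter is injectable so the loop can be exercised without a running stack.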

The example is a simple Spark job written in Scala.

The JAR file is already built and located in `docker/Volume/spark/app`, but you can also check the Spark Application.

The datasets for this example are in `docker/Volume/spark/resources` and can also be downloaded from www.kaggle.com (link1, link2, link3).

-

Go to Airflow Web UI.

-

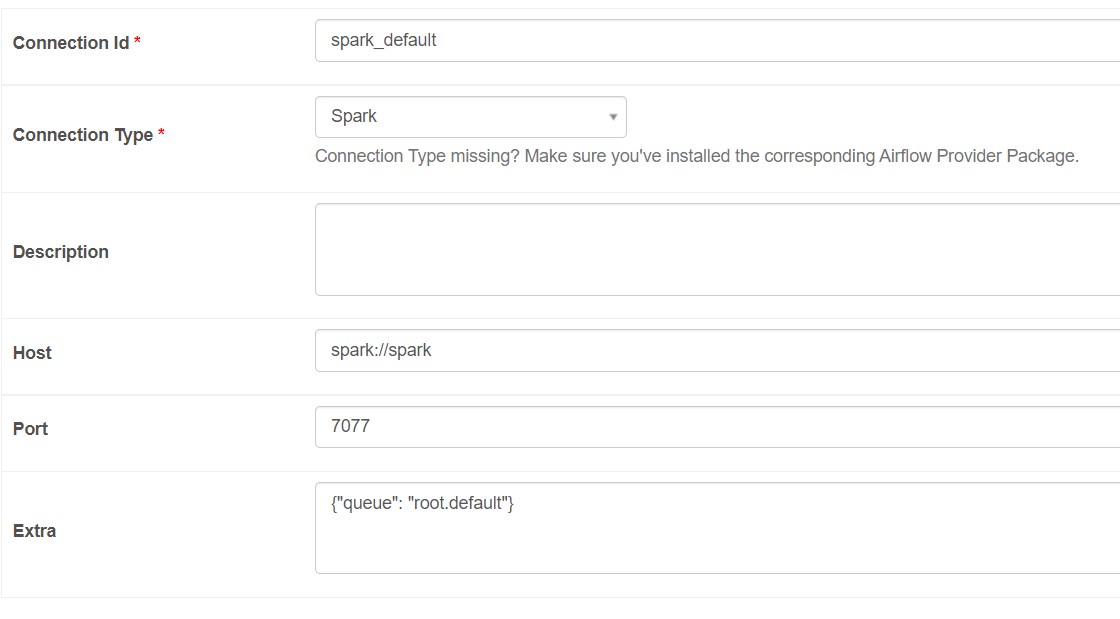

Configure Spark Connection.

2.1 Go to Connection

2.2 Add a new record like in the image below

-

Import variables.

3.1 Go to Variables

3.2 Import variables from

docker/variables.json -

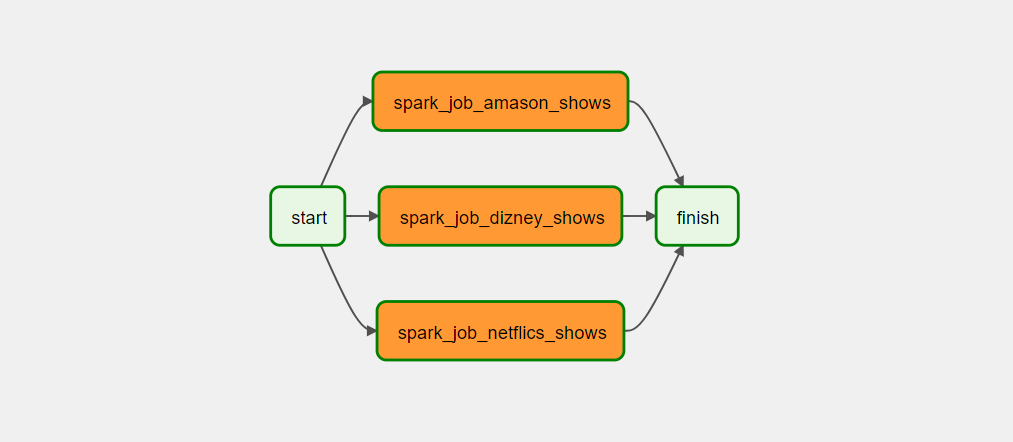

Run simple_dag

-

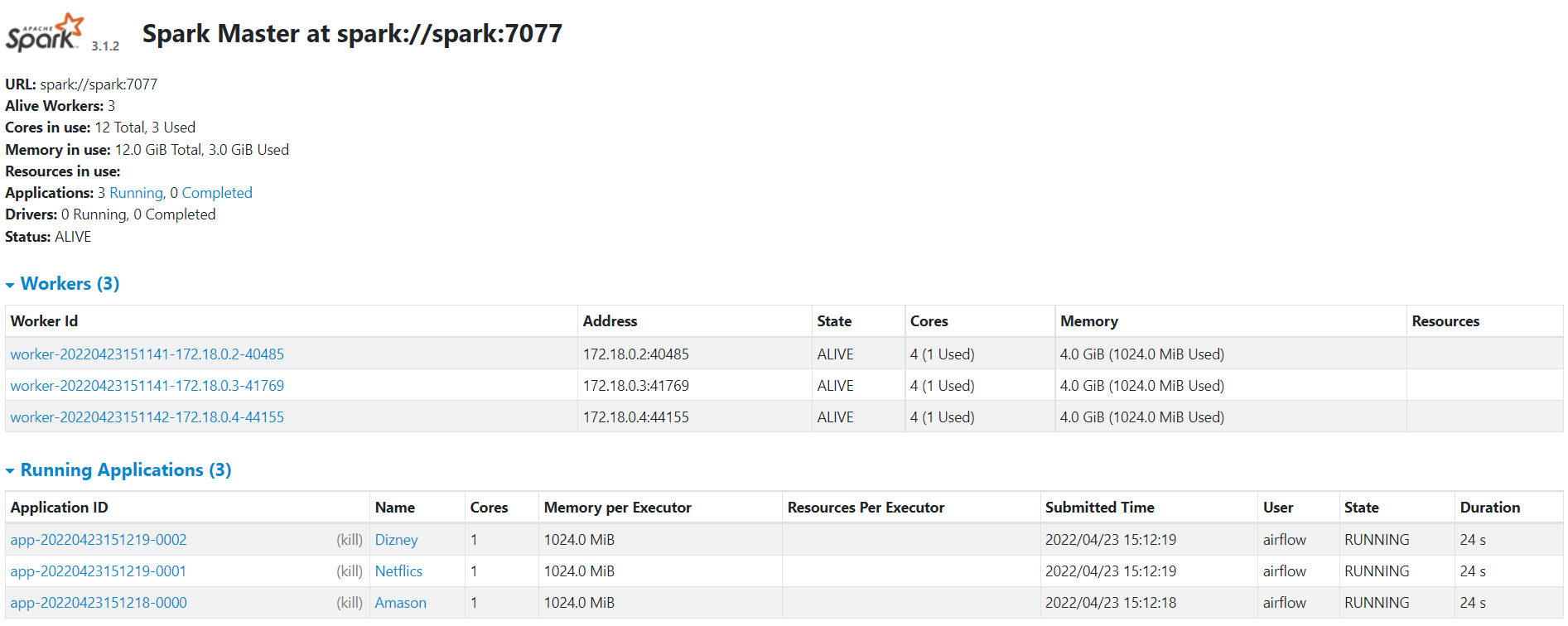

You can go to Spark UI and see that spark has taken the jobs
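The same check can be scripted. Spark's standalone master UI is generally understood to serve a JSON summary at `/json/` next to the HTML page. Treat that path and the field names used here (`workers`, `state`, `cores`, `activeapps`, `name`) as assumptions to verify against your Spark version; the helper names are illustrative.

```python
import json
import urllib.request

# Spark master UI address from the table above; the /json/ path is an assumption.
SPARK_MASTER_JSON = "http://localhost:8181/json/"


def fetch_master_state(url=SPARK_MASTER_JSON, timeout=5.0):
    """Download and decode the master's JSON summary."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def summarize(state):
    """Reduce the master state to alive workers, their cores, and running apps."""
    workers = state.get("workers", [])
    alive = [w for w in workers if w.get("state") == "ALIVE"]
    return {
        "alive_workers": len(alive),
        "total_cores": sum(w.get("cores", 0) for w in alive),
        "active_apps": [app.get("name") for app in state.get("activeapps", [])],
    }
```

With the three 4-core workers listed above, `summarize(fetch_master_state())` would be expected to report 3 alive workers and 12 cores while the cluster is healthy, and the submitted job should appear under `active_apps` while it runs.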

- In the Graph view you will see something like this:

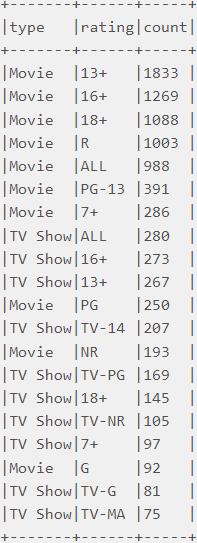

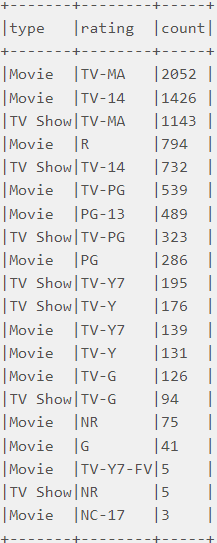

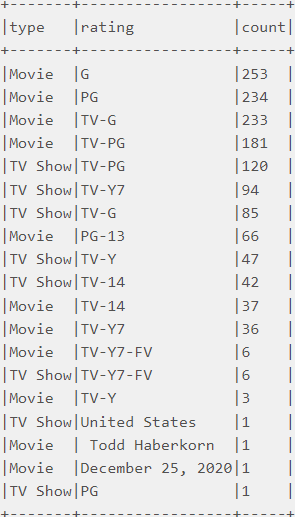

- In the logs you will find the output of the jobs.
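The manual run described above can also be started from a script through Airflow's stable REST API, which exposes `POST /api/v1/dags/{dag_id}/dagRuns`. The sketch below only builds and sends that request. It assumes that the basic-auth API backend is enabled and that the login is `airflow` / `airflow`; neither is stated in this README, so adjust both to your setup. The function names are illustrative.

```python
import base64
import json
import urllib.request

AIRFLOW_URL = "http://localhost:8080"  # webserver address from the table above


def build_trigger_request(dag_id, username, password, conf=None, base_url=AIRFLOW_URL):
    """Build the POST request that asks Airflow to start a new DAG run."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    body = json.dumps({"conf": conf or {}}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/api/v1/dags/{dag_id}/dagRuns",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
    )


def trigger_dag(dag_id, username, password, conf=None):
    """Send the request and return the decoded JSON description of the run."""
    request = build_trigger_request(dag_id, username, password, conf)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)
```

For example, `trigger_dag("simple_dag", "airflow", "airflow")` (credentials assumed) should return the new run's metadata. If the DAG is still paused, the run is typically created but stays queued until the DAG is unpaused in the UI.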