This folder contains Karpathy's implementation of a mini version of GPT. You can run it to train a character-level language model on your laptop to generate Shakespearean (well, kind of 🙈) text. He made a very nice tutorial that walks through the code almost line by line. You can watch it here. If you are completely new to language modelling, this video may help you understand the basics.

You can find many more details about the code in Karpathy's original repo. The code in this folder has been adapted to contain only the minimal code needed to run.

If you don't know what LoRA is, you can watch this YouTube video here, or read the LoRA paper [1] first.

- Toy problem: I wrote a notebook to show how to fine-tune a reeeeaaaal simple binary classification model with LoRA, see here.

- The real deal: of course, some amazing people already implemented LoRA as a library. Here's the notebook on how to fine-tune LLaMA 2 with the LoRA library.
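To get a feel for why LoRA is cheap, here is a minimal sketch in plain Python (it is not taken from either notebook). It assumes a single frozen weight matrix of shape `d x k` whose update is factored as `B @ A` with rank `r`, as in the LoRA paper. The sizes 4096 and 8 and the helper name `lora_param_counts` are illustrative assumptions.

```python
def lora_param_counts(d: int, k: int, r: int) -> tuple:
    """Return (full, lora) trainable parameter counts for one d x k weight matrix."""
    full = d * k        # fine-tuning the weight matrix W directly
    lora = r * (d + k)  # B is d x r and A is r x k; W itself stays frozen
    return full, lora


# Illustrative sizes only (assumed, not read from any model config)
full, lora = lora_param_counts(d=4096, k=4096, r=8)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

The ratio for a whole model depends on which layers get adapters and on the chosen rank, so treat this as a per-matrix estimate only.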

As discussed in the LoRA for LLMs notebook, we only need to train about 12% of the original parameter count by applying this low rank representation. However, we still have to load the entire model, as the low rank weight matrix is added to the original weights. The smallest Llama 2 model, with 7 billion parameters, requires 28 GB of GPU memory just to store the parameters (4 bytes per parameter in 32-bit floating point), making it impossible to train on lower-end GPUs such as T4 or V100.
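The memory figure can be checked with some quick arithmetic. The sketch below counts the weights only and assumes 1 GB = 1e9 bytes. It ignores gradients, optimizer state, activations, and any extra constants a quantization scheme stores. `weight_memory_gb` is a hypothetical helper name.

```python
# Bytes needed to store one parameter at each precision
BYTES_PER_PARAM = {"float32": 4.0, "float16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


# A 7-billion-parameter model at each precision
for dtype in BYTES_PER_PARAM:
    print(f"{dtype:>8}: {weight_memory_gb(7e9, dtype):5.1f} GB")
```

Under these assumptions, the 4-bit weights take one eighth of the float32 footprint, which is what brings a 7B model within reach of a 16 GB card.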

Therefore, (...drum rolls...) QLoRA [2] was proposed. QLoRA loads the 4-bit quantized weights of a pretrained model and then applies LoRA to fine-tune it. If you are interested in the technical details, you can read the paper or watch this video here.

With the LoRA library (check the notebook), it is very easy to adopt QLoRA. All you need to do is set one extra argument when loading the model:

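```python
import torch
from transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained(
    "meta-llama/Llama-2-7b-chat-hf",
    device_map="auto",          # place layers on the available devices automatically
    torch_dtype=torch.float16,  # half precision for the parts that are not quantized
    use_auth_token=True,        # use your Hugging Face login; the Llama 2 repo is gated
    load_in_4bit=True,  # <------ *here*
    # load_in_8bit=True,
)
```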

Unfortunately, quantization loses information, so this is a tradeoff between memory and accuracy. If needed, there's also an 8-bit option.
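To make the information loss concrete, here is a toy sketch in plain Python. It uses simple absmax rounding to signed integers, which is an assumption made for illustration and is not the NF4 data type that QLoRA actually uses. The weights are made up, and `quantize_absmax`, `dequantize`, and `max_error` are hypothetical helper names.

```python
def quantize_absmax(values, bits):
    """Map floats to signed integers in [-(2**(bits-1) - 1), 2**(bits-1) - 1].

    Assumes at least one value is non-zero.
    """
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in values) / qmax
    return [round(v / scale) for v in values], scale


def dequantize(quantized, scale):
    """Map the integers back to approximate floats."""
    return [q * scale for q in quantized]


weights = [0.12, -0.53, 0.98, -0.07, 0.31, -0.99]  # made-up example weights


def max_error(bits):
    """Largest absolute difference after a quantize/dequantize round trip."""
    quantized, scale = quantize_absmax(weights, bits)
    restored = dequantize(quantized, scale)
    return max(abs(a - b) for a, b in zip(weights, restored))


for bits in (8, 4):
    print(f"{bits}-bit max reconstruction error: {max_error(bits):.4f}")
```

With fewer bits there are fewer representable levels, so the round-trip error grows. That is the memory-versus-accuracy tradeoff in miniature.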

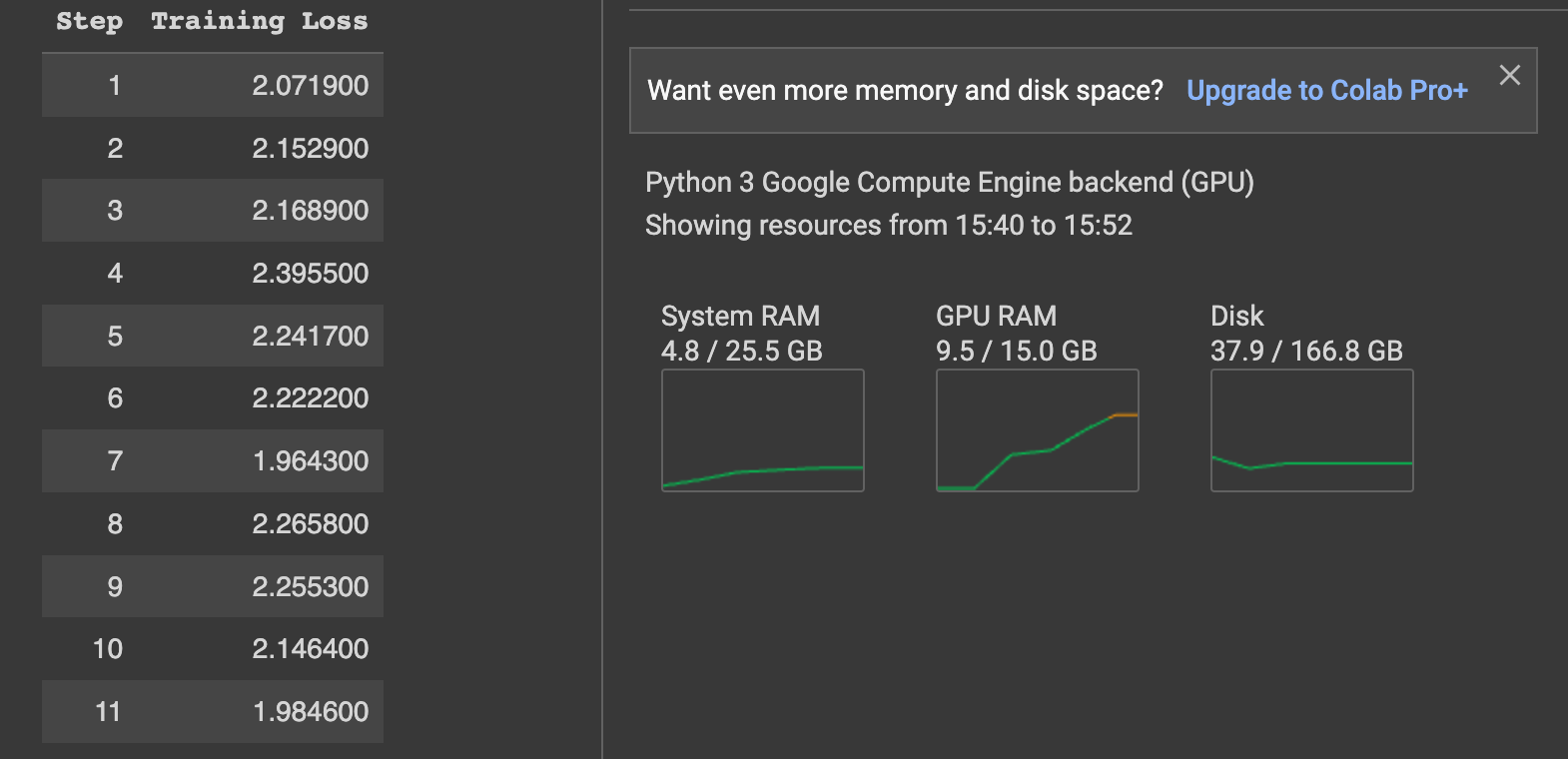

By choosing to load the entire pre-trained model in 4-bit, we can fine-tune a 7-billion-parameter model on a single T4 GPU. Check out the RAM usage during training:

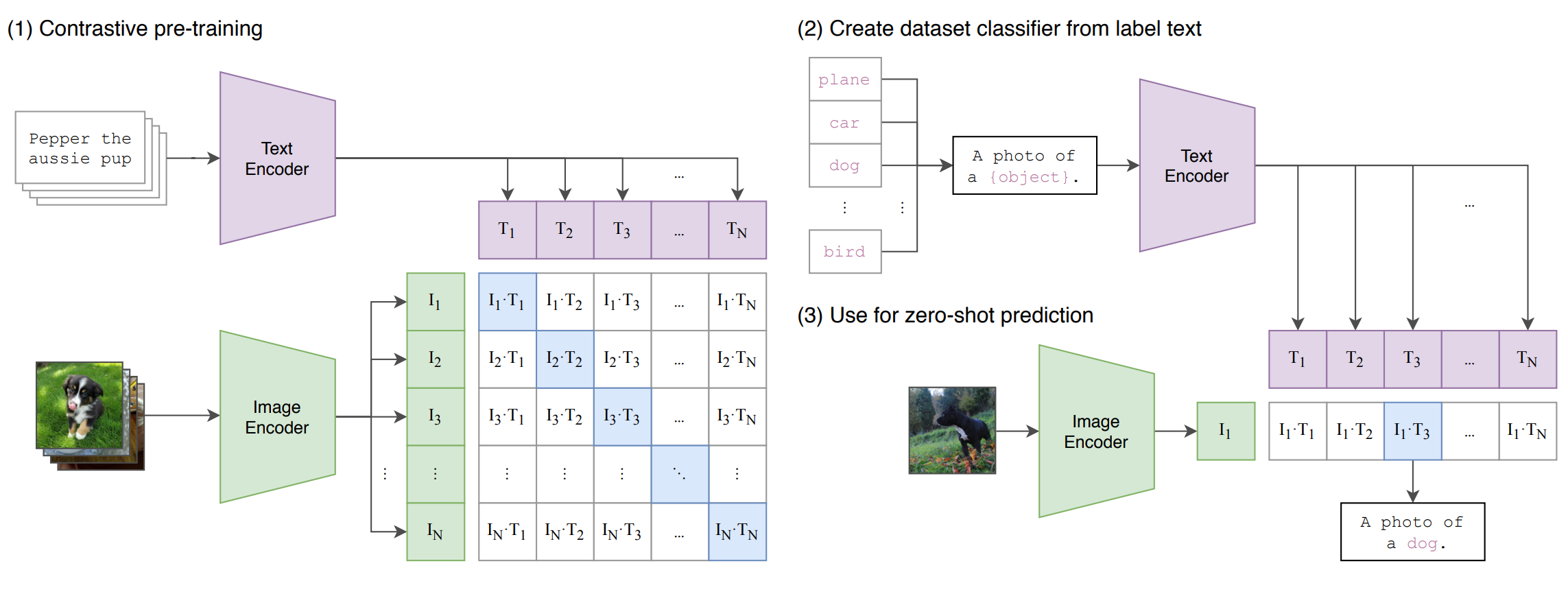

Conceptually, CLIP is very simple. The figure in the CLIP paper [3] says it all.

For this visual-language application, step (1) in the figure needs a few components:

- data: images with text describing them

- a visual encoder to extract image features

- a language encoder to extract text features

- learn by maximising the similarity between the paired image and text features indicated by the blue squares in the matrix in the figure (contrastive learning)
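The last item can be sketched in plain Python as a symmetric cross-entropy over the image-text similarity matrix. This is a minimal sketch, not the notebook's code. The 2-D features are made up, the temperature of 0.07 is an assumed fixed value (CLIP learns it during training), and `clip_loss` is a hypothetical helper name.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits, target):
    """Cross-entropy of one row of logits against the index of the correct class."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum_exp - logits[target]


def clip_loss(image_feats, text_feats, temperature=0.07):
    imgs = [normalize(v) for v in image_feats]
    txts = [normalize(v) for v in text_feats]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, divided by temperature
    logits = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts] for i in imgs]
    # Image -> text: row i should pick column i (the diagonal of the matrix)
    loss_i = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text -> image: column j should pick row j
    loss_t = sum(cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (loss_i + loss_t) / 2


# Made-up 2-D features for three image-text pairs
images = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
matched = [[0.9, 0.1], [0.1, 0.9], [-0.9, 0.1]]
shuffled = [matched[1], matched[2], matched[0]]  # same texts, wrong pairing

print("matched pairs: ", clip_loss(images, matched))
print("shuffled pairs:", clip_loss(images, shuffled))
```

The loss is low when each image is most similar to its own text and high when the pairing is wrong, so minimising it pushes the diagonal of the matrix up and the off-diagonal entries down.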

I wrote a (very) simple example in this notebook which implements and explains the contrastive learning objective, and describes the components in steps (2) and (3). However, I used the same style of text labels for training and testing, so there is no zero-shot here.

GLIDE [4] is a text-to-image diffusion model with CLIP as the guidance. If you aren't familiar with diffusion models, you can watch this video for a quick explanation of the concept. If you want more technical details, you can start with these papers: diffusion generative model [5], DDPM [6], DDIM [7], and a variational perspective of diffusion models [8].

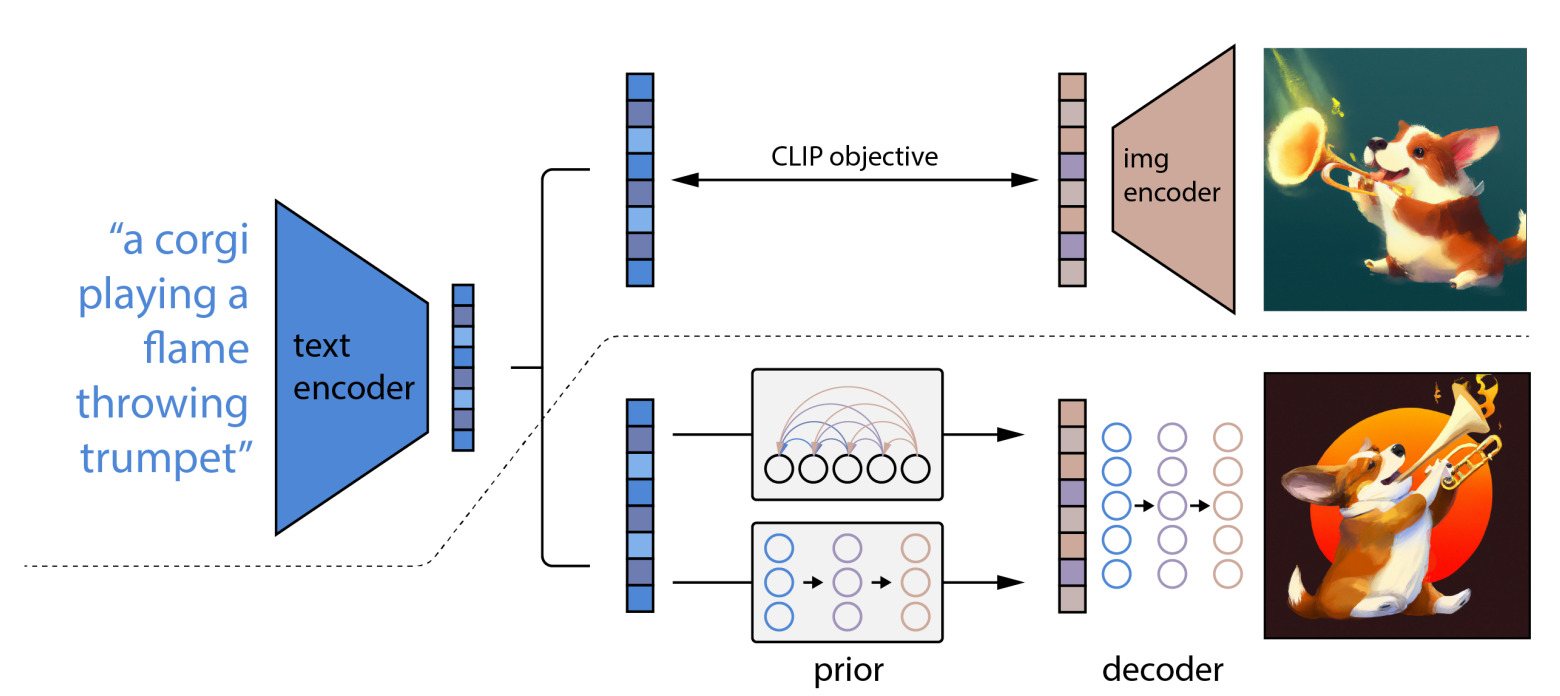

DALL·E 2 is another conceptually simple model that produces amazing results.

The first half of the model is a pre-trained CLIP (frozen once trained), i.e., the part above the dashed line in the figure in the DALL·E 2 paper [9], see below.

In CLIP, we have trained two encoders to extract features from image and text inputs.

Footnotes

1. Hu, E.J., Shen, Y., Wallis, P., Allen-Zhu, Z., Li, Y., Wang, S., Wang, L. and Chen, W., 2021. LoRA: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685.
2. Dettmers, T., Pagnoni, A., Holtzman, A. and Zettlemoyer, L., 2023. QLoRA: Efficient finetuning of quantized LLMs. arXiv preprint arXiv:2305.14314.
3. Radford, A., Kim, J.W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., Sastry, G., Askell, A., Mishkin, P., Clark, J. and Krueger, G., 2021. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning (pp. 8748-8763). PMLR.
4. Nichol, A., Dhariwal, P., Ramesh, A., Shyam, P., Mishkin, P., McGrew, B., Sutskever, I. and Chen, M., 2021. GLIDE: Towards photorealistic image generation and editing with text-guided diffusion models. arXiv preprint arXiv:2112.10741.
5. Sohl-Dickstein, J., Weiss, E., Maheswaranathan, N. and Ganguli, S., 2015. Deep unsupervised learning using nonequilibrium thermodynamics. In International Conference on Machine Learning (pp. 2256-2265). PMLR.
6. Ho, J., Jain, A. and Abbeel, P., 2020. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33, pp.6840-6851.
7. Song, J., Meng, C. and Ermon, S., 2020. Denoising diffusion implicit models. arXiv preprint arXiv:2010.02502.
8. Kingma, D., Salimans, T., Poole, B. and Ho, J., 2021. Variational diffusion models. Advances in Neural Information Processing Systems, 34, pp.21696-21707.
9. Ramesh, A., Dhariwal, P., Nichol, A., Chu, C. and Chen, M., 2022. Hierarchical text-conditional image generation with CLIP latents. arXiv preprint arXiv:2204.06125.