This repository contains the code for building the Temporally-linked Multi-input Attention (TMA) model, which was presented in the work Egocentric Video Description based on Temporally-Linked Sequences, submitted to the Journal of Visual Communication and Image Representation. With this module, you can replicate our experiments and easily deploy new models. TMA is built on our fork of the Keras framework (version 1.2) and has been tested with the Theano backend.

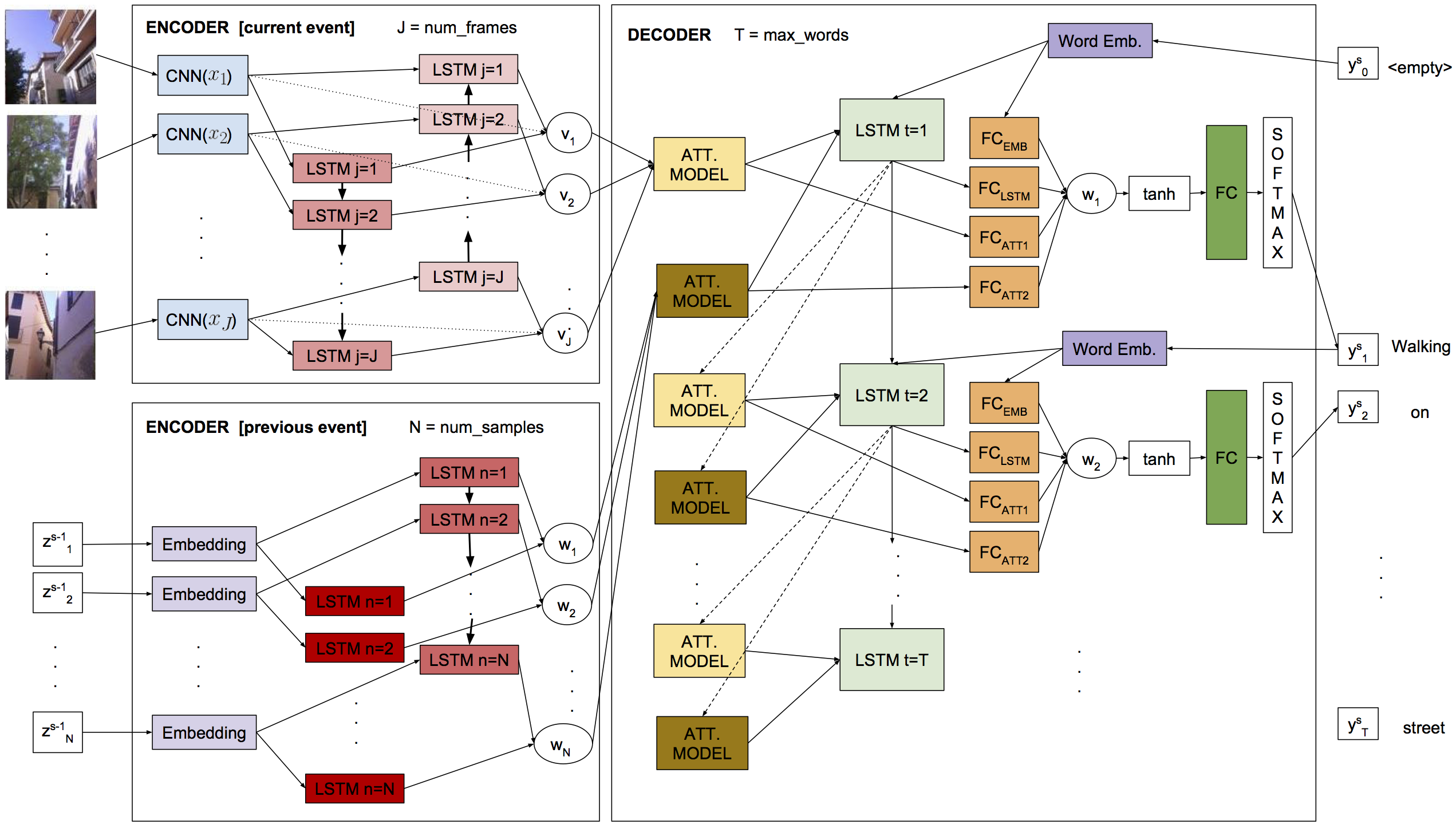

- Temporally-linked mechanism for learning using information from previous events.

- Multi-input Attention LSTM model over any of the input multimodal sequences.

- Peeked decoder LSTM: the previously generated word is an input of the current LSTM timestep.

- MLPs for initializing the LSTM hidden and memory state.

- Beam search decoding.
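To illustrate the last feature, here is a minimal, generic beam search sketch in plain Python. It is not the decoder shipped in this repository: `beam_search`, `step_fn`, and the toy word table are hypothetical names invented for this example, and the real model would supply next-word probabilities from the LSTM decoder instead of a lookup table.

```python
import math


def beam_search(step_fn, start_token, end_token, beam_size=3, max_len=10):
    """Generic beam search (illustrative sketch, not the repository's code).

    step_fn(prefix) must return a dict {token: probability} for the next word.
    Returns the best (sequence, log_probability) pair found.
    """
    beams = [([start_token], 0.0)]  # live hypotheses: (sequence, log-prob)
    finished = []
    for _ in range(max_len):
        candidates = []
        for seq, score in beams:
            for token, prob in step_fn(seq).items():
                if prob > 0.0:
                    candidates.append((seq + [token], score + math.log(prob)))
        if not candidates:
            break
        # Keep only the beam_size highest-scoring expansions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_size]:
            if seq[-1] == end_token:
                finished.append((seq, score))
            else:
                beams.append((seq, score))
        if not beams:
            break
    finished.extend(beams)  # unfinished hypotheses serve as fallbacks
    return max(finished, key=lambda c: c[1])


# Hypothetical toy "model": next-word probabilities depend on the last word only.
TOY_TABLE = {
    "<s>": {"a": 0.6, "the": 0.4},
    "a": {"man": 0.5, "woman": 0.5},
    "the": {"man": 0.9, "woman": 0.1},
    "man": {"</s>": 1.0},
    "woman": {"</s>": 1.0},
}


def toy_step(prefix):
    return TOY_TABLE[prefix[-1]]


best_seq, best_score = beam_search(toy_step, "<s>", "</s>", beam_size=2)
greedy_seq, _ = beam_search(toy_step, "<s>", "</s>", beam_size=1)
print(" ".join(best_seq))
print(" ".join(greedy_seq))
```

With a beam of size 1 the search is greedy and commits to the locally most probable first word, while a beam of size 2 keeps the alternative alive long enough to find a more probable full sentence. This sketch compares raw log-probabilities; practical decoders often add length normalization, which is omitted here.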

TMA requires our fork of Keras (version 1.2) and the Multimodal Keras Wrapper.

Assuming you have a dataset and features extracted from the video frames:

- Set the paths to Keras and Multimodal Keras Wrapper in train.sh.

- Prepare data:

  python data_engine/subsample_frames_features.py

  python data_engine/generate_features_lists.py

  python data_engine/generate_descriptions_lists.py

  See data_engine/README.md for detailed information.

- Prepare the inputs/outputs of your model in data_engine/prepare_data.py.

- Set a model configuration in config.py.

- Train:

  python main.py

The EDUB-SegDesc dataset was used to evaluate this model. It was acquired with the Narrative Clip wearable camera, which takes a picture every 30 seconds (2 fpm). It comprises 55 days of recordings from 9 people, with a total of 48,717 images divided into 1,339 events (or image sequences) and 3,991 captions.
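As a quick sanity check on these figures, the short Python 3 sketch below derives per-event averages from the totals quoted above. The variable names are ours, and the duration estimate assumes uninterrupted capture at 2 fpm within each event, which the dataset description does not guarantee.

```python
# Totals quoted in the dataset description.
n_images, n_events, n_captions = 48717, 1339, 3991
frames_per_minute = 2  # one picture every 30 seconds

images_per_event = n_images / n_events
captions_per_event = n_captions / n_events
# Assumption: frames inside an event are captured without gaps.
minutes_per_event = images_per_event / frames_per_minute

print(f"{images_per_event:.1f} images per event")
print(f"{captions_per_event:.2f} captions per event")
print(f"{minutes_per_event:.1f} minutes per event (approximate)")
```

On average, each event therefore contains roughly 36 images and about 3 captions.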

If you use this code for any purpose, please cite the following paper:

Marc Bolaños, Álvaro Peris, Francisco Casacuberta, Sergi Soler and Petia Radeva.

Egocentric Video Description based on Temporally-Linked Sequences

In Special Issue on Egocentric Vision and Lifelogging Tools.

Journal of Visual Communication and Image Representation (VCIR), (SUBMITTED).

Joint collaboration between the Computer Vision at the University of Barcelona (CVUB) group at Universitat de Barcelona-CVC and the PRHLT Research Center at Universitat Politècnica de València.

Marc Bolaños (web page): marc.bolanos@ub.edu

Álvaro Peris (web page): lvapeab@prhlt.upv.es