This is the PyTorch [1] implementation of our paper Federated Learning from Only Unlabeled Data with Class-Conditional-Sharing Clients by Nan Lu, Zhao Wang, Xiaoxiao Li, Gang Niu, Qi Dou, and Masashi Sugiyama.

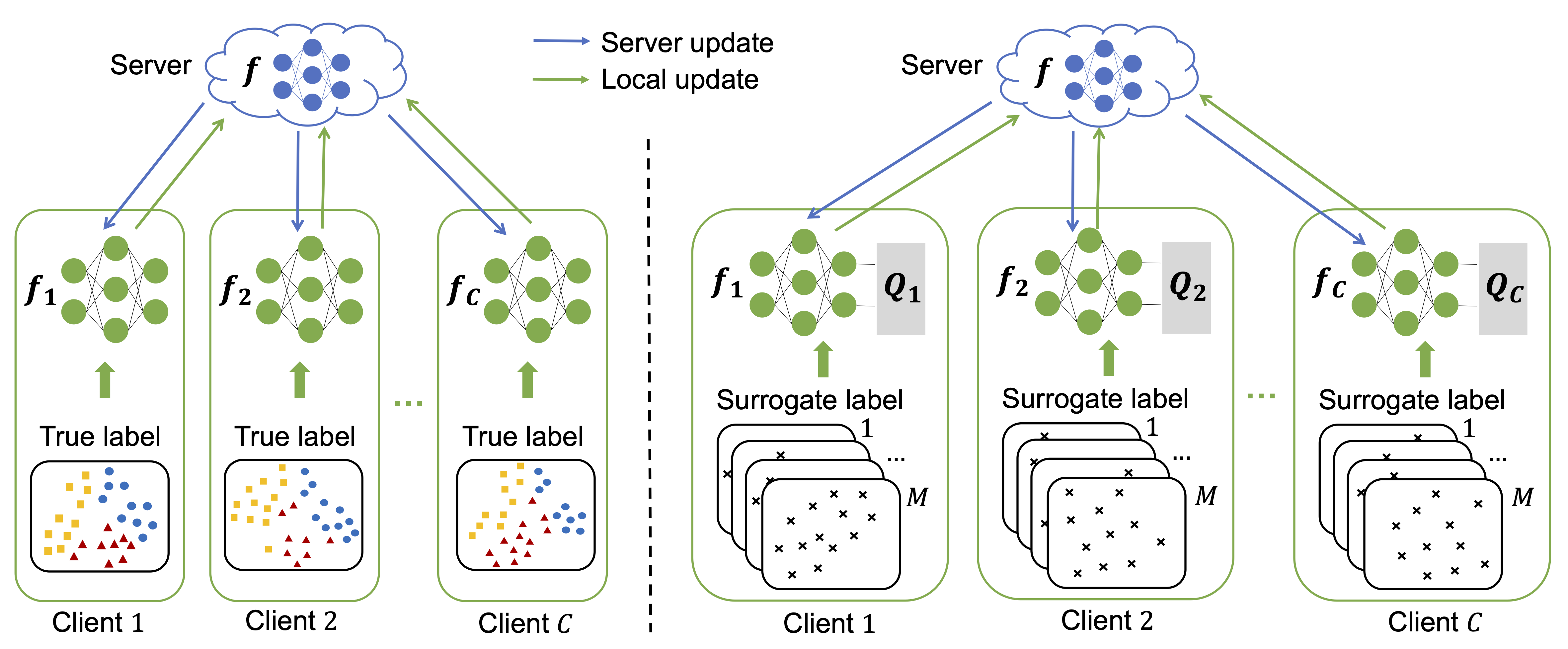

Supervised federated learning (FL) enables multiple clients to share the trained model without sharing their labeled data. However, potential clients might even be reluctant to label their own data, which could limit the applicability of FL in practice. In this paper, we show the possibility of unsupervised FL whose model is still a classifier for predicting class labels, if the class-prior probabilities are shifted while the class-conditional distributions are shared among the unlabeled data owned by the clients. We propose federation of unsupervised learning (FedUL), where the unlabeled data are transformed into surrogate labeled data for each of the clients, a modified model is trained by supervised FL, and the wanted model is recovered from the modified model. FedUL is a very general solution to unsupervised FL: it is compatible with many supervised FL methods, and the recovery of the wanted model can be theoretically guaranteed as if the data have been labeled. Experiments on benchmark and real-world datasets demonstrate the effectiveness of FedUL.
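The abstract summarizes FedUL in three steps. The framework-free sketch below illustrates the first step for a single client and shows how the modified model relates to the wanted model. It rests on several assumptions. The client holds M unlabeled sets whose class priors `pi[m][k]` are known. The surrogate label of a sample is simply the index `m` of the set it came from. The modified model is the wanted classifier followed by a fixed transition from p(y=k|x) to p(set=m|x). All function names are hypothetical, and the code in `federated/` may be organized differently.

```python
from typing import Callable, List, Sequence, Tuple


def make_surrogate_labels(unlabeled_sets: Sequence[Sequence]) -> Tuple[list, List[int]]:
    """Pool a client's M unlabeled sets; each sample's surrogate label is its set index."""
    samples, surrogate = [], []
    for m, dataset in enumerate(unlabeled_sets):
        samples.extend(dataset)
        surrogate.extend([m] * len(dataset))
    return samples, surrogate


def surrogate_posterior(eta: Sequence[float], set_priors: Sequence[Sequence[float]],
                        set_sizes: Sequence[int], class_priors: Sequence[float]) -> List[float]:
    """Map a class posterior p(y=k|x) to the surrogate posterior p(set=m|x).

    eta[k]           : p(y=k|x) from the wanted classifier
    set_priors[m][k] : assumed-known class prior of class k inside set m
    set_sizes[m]     : number of samples in set m, giving rho_m = n_m / n
    class_priors[k]  : marginal class prior pi_k of the distribution behind eta
    """
    n = sum(set_sizes)
    scores = []
    for m, priors in enumerate(set_priors):
        rho_m = set_sizes[m] / n
        # p_m(x) / p(x) = sum_k pi_{m,k} * p(y=k|x) / pi_k; the p(x) factor cancels below
        scores.append(rho_m * sum(p * e / c for p, e, c in zip(priors, eta, class_priors)))
    total = sum(scores)
    return [s / total for s in scores]


def modified_model(classifier: Callable, set_priors, set_sizes, class_priors) -> Callable:
    """Wrap a classifier x -> p(y|x) so that it predicts surrogate labels instead."""
    return lambda x: surrogate_posterior(classifier(x), set_priors, set_sizes, class_priors)


if __name__ == "__main__":
    # Two classes, two equally sized sets with shifted class priors (toy numbers).
    print(surrogate_posterior([1.0, 0.0], [[0.8, 0.2], [0.2, 0.8]], [50, 50], [0.5, 0.5]))
```

Under these assumptions, the modified model would be trained on the surrogate labels with an ordinary supervised loss inside a supervised FL method such as FedAvg. Discarding the transition afterwards leaves the wanted classifier. In practice the classifier is a neural network and the transition a differentiable layer on top of it. For the toy call above, the printed posterior is approximately `[0.8, 0.2]`.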

We suggest using Anaconda to set up the environment on Linux. If you have already installed Anaconda, you can skip this step.

```bash
wget https://repo.anaconda.com/archive/Anaconda3-2020.11-Linux-x86_64.sh && bash Anaconda3-2020.11-Linux-x86_64.sh
```

Then, install the required packages from the provided `environment.yaml`:

```bash
cd FedUL
conda env create -f environment.yaml
conda activate fedul
```

We employ two benchmark datasets (MNIST and CIFAR10) for experiments.
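Before running any experiment, it can help to confirm that the activated environment actually exposes the packages the scripts import. The snippet below is a minimal sketch. The package list (`torch`, `torchvision`, `numpy`) is an assumption based on this being a PyTorch project, so adjust it to match `environment.yaml`. The helper name `check_environment` is hypothetical.

```python
import importlib.util
import sys
from typing import Dict, Iterable

# Packages assumed to be provided by the fedul environment; adjust to environment.yaml.
EXPECTED = ("torch", "torchvision", "numpy")


def check_environment(packages: Iterable[str] = EXPECTED) -> Dict[str, bool]:
    """Map each top-level package name to whether it can be imported here."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    status = check_environment()
    for name, found in status.items():
        print(f"{name:<12} {'found' if found else 'MISSING'}")
    if status.get("torch"):
        import torch
        print("CUDA available:", torch.cuda.is_available())
```

Run it with the `fedul` environment active. A `MISSING` entry usually means the environment was not activated or was not created from `environment.yaml`.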

Due to size limitations, we only provide the pretrained weights of FedUL on MNIST and CIFAR10 trained with seed 0, for direct testing. The pretrained weights can be downloaded from Google Drive and should be placed in the `checkpoint` directory.

```bash
cd experiments

# MNIST IID 10 sets
python ../federated/fedul_mnist.py --setnum 10 --wdecay 1e-5 --seed 0 --test

# MNIST Non-IID 10 sets
python ../federated/fedul_mnist.py --setnum 10 --wdecay 1e-5 --seed 0 --test --noniid

# MNIST IID 20 sets
python ../federated/fedul_mnist.py --setnum 20 --wdecay 5e-6 --seed 0 --test

# MNIST Non-IID 20 sets
python ../federated/fedul_mnist.py --setnum 20 --wdecay 5e-6 --seed 0 --test --noniid

# MNIST IID 40 sets
python ../federated/fedul_mnist.py --setnum 40 --wdecay 2e-6 --seed 0 --test

# MNIST Non-IID 40 sets
python ../federated/fedul_mnist.py --setnum 40 --wdecay 2e-6 --seed 0 --test --noniid

# CIFAR10 IID 10 sets
python ../federated/fedul_cifar.py --setnum 10 --wdecay 2e-5 --seed 0 --test

# CIFAR10 Non-IID 10 sets
python ../federated/fedul_cifar.py --setnum 10 --wdecay 2e-5 --seed 0 --test --noniid

# CIFAR10 IID 20 sets
python ../federated/fedul_cifar.py --setnum 20 --wdecay 1e-5 --seed 0 --test

# CIFAR10 Non-IID 20 sets
python ../federated/fedul_cifar.py --setnum 20 --wdecay 1e-5 --seed 0 --test --noniid

# CIFAR10 IID 40 sets
python ../federated/fedul_cifar.py --setnum 40 --wdecay 4e-6 --seed 0 --test

# CIFAR10 Non-IID 40 sets
python ../federated/fedul_cifar.py --setnum 40 --wdecay 4e-6 --seed 0 --test --noniid
```

The training logs will be saved in the `logs` directory, and the best checkpoints will be saved in the `checkpoint` directory.
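The twelve test commands above follow a single pattern. Only the script (MNIST or CIFAR10), `--setnum`, `--wdecay`, and `--noniid` change. The sketch below encodes that grid, with the weight-decay values copied from the commands. By default it only prints the commands. Passing `dry_run=False` runs them one after another from the `experiments` directory, assuming the script is launched from the repository root. The names `build_command`, `all_test_commands`, and `run_all` are hypothetical. This is not a copy of the provided `iid.py` or `noniid.py`, whose internals may differ.

```python
import shlex
import subprocess
from typing import List

# Weight decay for each (dataset, number of sets), copied from the test commands above.
WDECAY = {
    ("mnist", 10): "1e-5", ("mnist", 20): "5e-6", ("mnist", 40): "2e-6",
    ("cifar", 10): "2e-5", ("cifar", 20): "1e-5", ("cifar", 40): "4e-6",
}


def build_command(dataset: str, setnum: int, noniid: bool = False,
                  seed: int = 0, test: bool = True) -> List[str]:
    """Return the argument list for one FedUL run (hypothetical helper)."""
    cmd = ["python", f"../federated/fedul_{dataset}.py",
           "--setnum", str(setnum),
           "--wdecay", WDECAY[(dataset, setnum)],
           "--seed", str(seed)]
    if test:
        cmd.append("--test")    # test a saved checkpoint
    if noniid:
        cmd.append("--noniid")  # use the Non-IID setting
    return cmd


def all_test_commands(seed: int = 0) -> List[List[str]]:
    """Every dataset x setnum x {IID, Non-IID} combination, in the order listed above."""
    return [build_command(ds, n, noniid=flag, seed=seed)
            for ds in ("mnist", "cifar")
            for n in (10, 20, 40)
            for flag in (False, True)]


def run_all(commands: List[List[str]], dry_run: bool = True) -> List[str]:
    """Print each command; also run it from ./experiments unless dry_run is True."""
    printed = []
    for cmd in commands:
        line = shlex.join(cmd)
        print(line)
        printed.append(line)
        if not dry_run:
            # check=True raises CalledProcessError and stops at the first failing run
            subprocess.run(cmd, cwd="experiments", check=True)
    return printed


if __name__ == "__main__":
    run_all(all_test_commands())
```

Keeping the hyperparameters in one table makes it harder for the IID and Non-IID runs of the same setting to drift apart. `shlex.join` requires Python 3.8 or later.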

Run all experiments on MNIST and CIFAR10:

```bash
cd experiments

# IID task
python iid.py

# Non-IID task
python noniid.py
```

Obtain the results in mean (std) format:

```bash
cd experiments
python get_result.py
```

If you want to run a specific experiment:

```bash
cd experiments

# FedUL MNIST IID with 10 sets
python ../federated/fedul_mnist.py --setnum 10 --wdecay 1e-5 --seed 0

# FedUL MNIST Non-IID with 10 sets
python ../federated/fedul_mnist.py --setnum 10 --wdecay 1e-5 --seed 0 --noniid

# FedUL CIFAR10 IID with 10 sets
python ../federated/fedul_cifar.py --setnum 10 --wdecay 2e-5 --seed 0

# FedUL CIFAR10 Non-IID with 10 sets
python ../federated/fedul_cifar.py --setnum 10 --wdecay 2e-5 --seed 0 --noniid

# FedPL MNIST IID with 10 sets
python ../federated/fedpl_mnist.py --setnum 10 --wdecay 1e-5 --seed 0

# FedPL MNIST Non-IID with 10 sets
python ../federated/fedpl_mnist.py --setnum 10 --wdecay 1e-5 --seed 0 --noniid

# FedPL CIFAR10 IID with 10 sets
python ../federated/fedpl_cifar.py --setnum 10 --wdecay 2e-5 --seed 0

# FedPL CIFAR10 Non-IID with 10 sets
python ../federated/fedpl_cifar.py --setnum 10 --wdecay 2e-5 --seed 0 --noniid
```

To test a specific model, simply add `--test` to the command:

```bash
cd experiments

# FedUL MNIST IID with 10 sets
python ../federated/fedul_mnist.py --setnum 10 --wdecay 1e-5 --seed 0 --test

# FedUL MNIST Non-IID with 10 sets
python ../federated/fedul_mnist.py --setnum 10 --wdecay 1e-5 --seed 0 --noniid --test

# FedUL CIFAR10 IID with 10 sets
python ../federated/fedul_cifar.py --setnum 10 --wdecay 2e-5 --seed 0 --test

# FedUL CIFAR10 Non-IID with 10 sets
python ../federated/fedul_cifar.py --setnum 10 --wdecay 2e-5 --seed 0 --noniid --test

# FedPL MNIST IID with 10 sets
python ../federated/fedpl_mnist.py --setnum 10 --wdecay 1e-5 --seed 0 --test

# FedPL MNIST Non-IID with 10 sets
python ../federated/fedpl_mnist.py --setnum 10 --wdecay 1e-5 --seed 0 --noniid --test

# FedPL CIFAR10 IID with 10 sets
python ../federated/fedpl_cifar.py --setnum 10 --wdecay 2e-5 --seed 0 --test

# FedPL CIFAR10 Non-IID with 10 sets
python ../federated/fedpl_cifar.py --setnum 10 --wdecay 2e-5 --seed 0 --noniid --test
```

If you find this code useful, please cite our paper in your research:

```bibtex
@inproceedings{
lu2022unsupervised,
title={Federated Learning from Only Unlabeled Data with Class-Conditional-Sharing Clients},
author={Nan Lu and Zhao Wang and Xiaoxiao Li and Gang Niu and Qi Dou and Masashi Sugiyama},
booktitle={International Conference on Learning Representations},
year={2022},
url={https://openreview.net/forum?id=WHA8009laxu}
}
```

For further questions, please feel free to contact Nan Lu or Zhao Wang.

[1] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga et al., “PyTorch: An imperative style, high-performance deep learning library,” in Advances in Neural Information Processing Systems, 2019, pp. 8026–8037.