This repository contains code, notebooks, and datasets used to build a machine learning pipeline for a high energy physics particle classifier using Apache Spark, ROOT, Parquet, TensorFlow, and Jupyter with Python notebooks.

- Article: "Machine Learning Pipelines with Modern Big Data Tools for High Energy Physics", Comput Softw Big Sci 4, 8 (2020).

- Related blog entries:

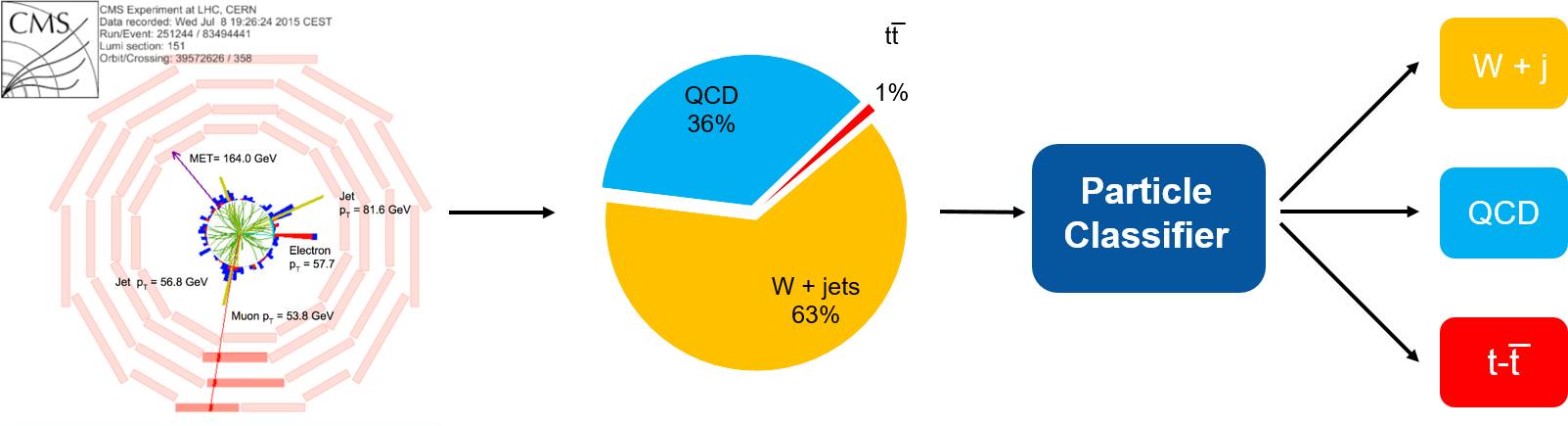

Event data flows collected from the particle detector (CMS experiment) contain different types of event topologies of interest.

A particle classifier built with neural networks can be used as an event filter, improving on the state of the art in accuracy.
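To make the event-filter idea concrete, here is a minimal sketch in plain Python, assuming the classifier outputs one raw score (logit) per event topology. The class names `qcd`, `ttbar`, and `wjets`, the choice of target class, and the 0.5 threshold are illustrative assumptions rather than the project's actual configuration. `softmax` and `select_events` are hypothetical helpers.

```python
import math

# Hypothetical topology labels, for illustration only.
CLASSES = ("qcd", "ttbar", "wjets")


def softmax(logits):
    """Convert raw classifier outputs (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_events(events, target="ttbar", threshold=0.5):
    """Keep only events whose predicted probability for `target` exceeds `threshold`."""
    idx = CLASSES.index(target)
    return [ev for ev in events if softmax(ev["logits"])[idx] > threshold]


# Toy events with made-up classifier outputs (not real detector data).
events = [
    {"id": 1, "logits": [2.0, 0.1, 0.3]},  # scores highest for "qcd"
    {"id": 2, "logits": [0.2, 3.0, 0.1]},  # scores highest for "ttbar"
    {"id": 3, "logits": [0.5, 0.6, 0.4]},  # ambiguous, low confidence
]

kept = select_events(events)
print([ev["id"] for ev in kept])  # [2]
```

Raising the threshold keeps fewer but purer events; in practice the cut would typically be chosen from the classifier's measured performance rather than fixed at 0.5.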

This work reproduces the findings of the paper "Topology classification with deep learning to improve real-time event selection at the LHC", re-implemented using tools from the Big Data ecosystem, notably Apache Spark and the TensorFlow/Keras APIs at scale.

- Authors and contacts: Matteo.Migliorini@cern.ch, Riccardo.Castellotti@cern.ch, Luca.Canali@cern.ch

- Original research article, raw data and neural network models by: T.Q. Nguyen et al., Comput Softw Big Sci (2019) 3: 12

- Acknowledgements: Marco Zanetti, Thong Nguyen, Maurizio Pierini, Viktor Khristenko, CERN openlab, members of the Hadoop and Spark service at CERN, CMS Bigdata project, Intel team for BigDL and Analytics Zoo consultancy: Jiao (Jennie) Wang and Sajan Govindan.

- Download datasets
- Data preparation using Apache Spark
- Model tuning
- Model training
  - HLF classifier with Keras, a simple model and small dataset
    - This is a simple classifier with a DNN
    - The notebooks also illustrate various methods for feeding Parquet data to TensorFlow: via memory, via Pandas, and using TFRecords and tf.data
  - Inclusive classifier, training of a complex model with large-scale data
    - This classifier uses an LSTM and is data-intensive
    - This shows a case where the training data cannot fit into memory
  - Methods for distributed training
  - Training using tree-based models run in parallel using Spark
    - Methods with Spark MLlib Random Forest, XGBoost, and LightGBM
- Saved models
  - HLF classifier with Keras, a simple model and small dataset
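When the training data cannot fit into memory, the usual approach is to stream it in mini-batches instead of loading the whole dataset at once. The sketch below shows the idea in plain Python, with a hypothetical `read_rows` generator standing in for a Parquet reader; in the notebooks this role is played by TensorFlow tooling such as tf.data, whose `Dataset.batch` performs a similar grouping. All helper names here are illustrative only.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def read_rows(num_rows: int) -> Iterator[dict]:
    """Hypothetical stand-in for a reader that yields one event at a time
    (e.g. from Parquet files); nothing is loaded into memory up front."""
    for i in range(num_rows):
        yield {"features": [float(i), float(i) * 0.5], "label": i % 3}


def batches(rows: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group a (possibly very long) stream of rows into fixed-size mini-batches.
    Only one batch is held in memory at a time; the last batch may be smaller."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    it = iter(rows)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def train_one_epoch(batch_stream: Iterable[List[dict]]) -> int:
    """Placeholder training loop: a real one would run one optimizer step per
    batch. Here it only counts how many events were consumed."""
    seen = 0
    for batch in batch_stream:
        seen += len(batch)  # a model update on `batch` would go here
    return seen


sizes = [len(b) for b in batches(read_rows(10), batch_size=4)]
print(sizes)  # [4, 4, 2]
```

Because `batches` pulls rows lazily from the stream, memory use is bounded by the batch size rather than by the dataset size.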

Note: see also the archived work in the branch `article_2020`.

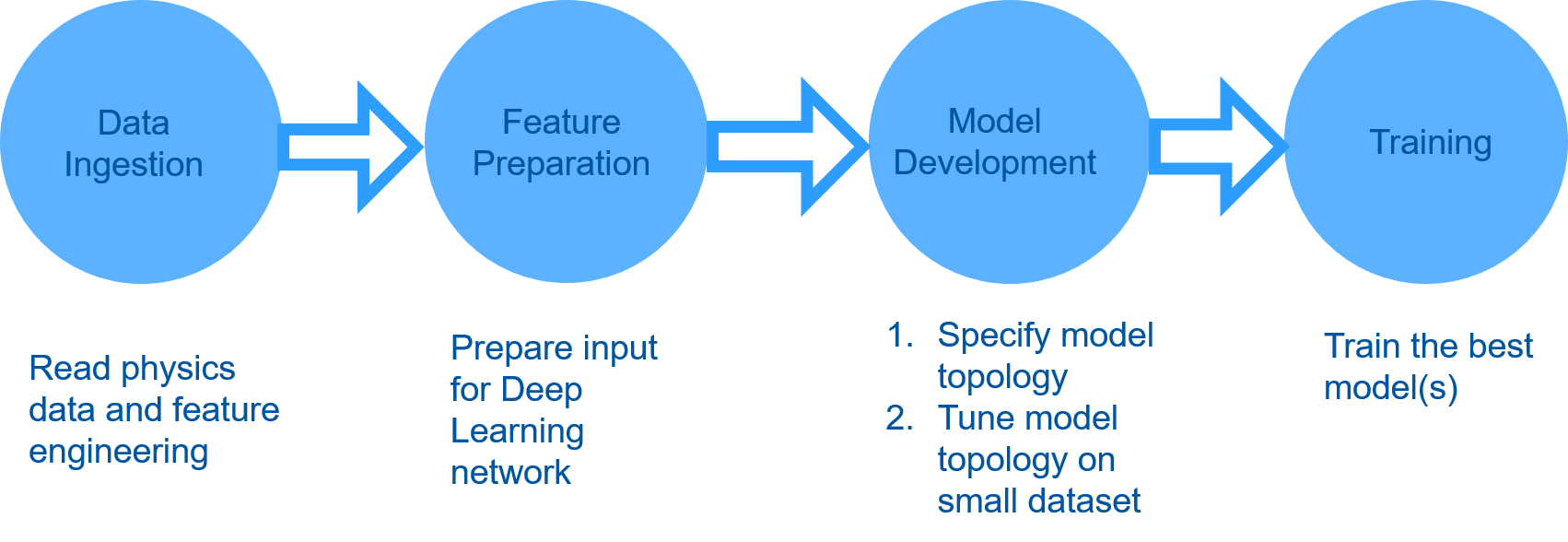

Data pipelines are of paramount importance to make machine learning projects successful, by integrating multiple components and APIs used for data processing across the entire data chain. A good data pipeline implementation can accelerate and improve the productivity of the work around the core machine learning tasks. The four steps of the pipeline we built are:

- Data Ingestion: where we read data in ROOT format from the CERN EOS storage system into a Spark DataFrame and save the results as a table stored in Apache Parquet files

- Feature Engineering and Event Selection: where the Parquet files containing all the event details processed during Data Ingestion are filtered, and datasets with new features are produced

- Parameter Tuning: where the best set of hyperparameters for each model architecture is found by performing a grid search

- Training: where the best models found in the previous step are trained on the entire dataset.
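The Parameter Tuning step can be sketched as an exhaustive grid search. In this minimal example the search space and the `toy_validation_loss` function are made-up stand-ins: a real run would train one model per combination and measure its validation loss. Only the search logic is meant to carry over.

```python
from itertools import product

# Hypothetical search space for illustration; the hyperparameters actually
# tuned in the project may differ.
PARAM_GRID = {
    "hidden_units": [20, 50],
    "learning_rate": [1e-3, 1e-2],
    "batch_size": [64, 128],
}


def toy_validation_loss(params):
    """Stand-in for 'train a model with these params and return its validation
    loss'. A made-up deterministic function so the example is reproducible."""
    return (abs(params["hidden_units"] - 50) / 50
            + abs(params["learning_rate"] - 1e-3) * 10
            + params["batch_size"] / 1000)


def grid_search(grid, evaluate):
    """Evaluate every combination in the grid; return (best_params, best_loss)."""
    keys = list(grid)
    best_params, best_loss = None, float("inf")
    for values in product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        loss = evaluate(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best, loss = grid_search(PARAM_GRID, toy_validation_loss)
print(best)  # {'hidden_units': 50, 'learning_rate': 0.001, 'batch_size': 64}
```

Because each combination is evaluated independently, grid search parallelizes naturally, for example by distributing the evaluations across Spark executors.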

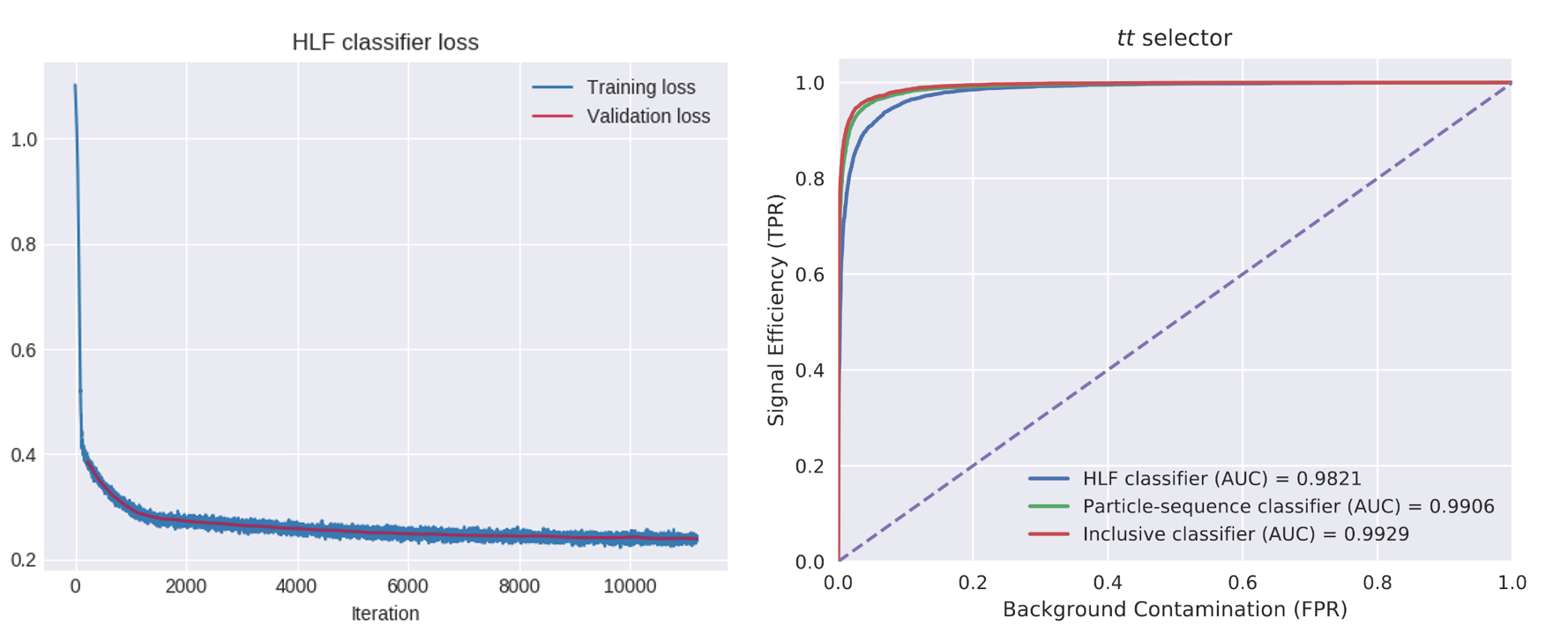

The results of the DL model(s) training are satisfactory and match the results of the original research paper.

- Article "Machine Learning Pipelines with Modern Big Data Tools for High Energy Physics", Comput Softw Big Sci 4, 8 (2020), and arXiv.org

- Blog post "Machine Learning Pipelines for High Energy Physics Using Apache Spark with BigDL and Analytics Zoo"

- Blog post "Distributed Deep Learning for Physics with TensorFlow and Kubernetes"

- Poster at the CERN openlab technical workshop 2019

- Presentation at Spark Summit SF 2019

- Presentation at Spark Summit EU 2019

- Presentation at CERN EP-IT Data science seminar