Code for our paper "A Causal View of Entity Bias in (Large) Language Models", published in Findings of EMNLP 2023.

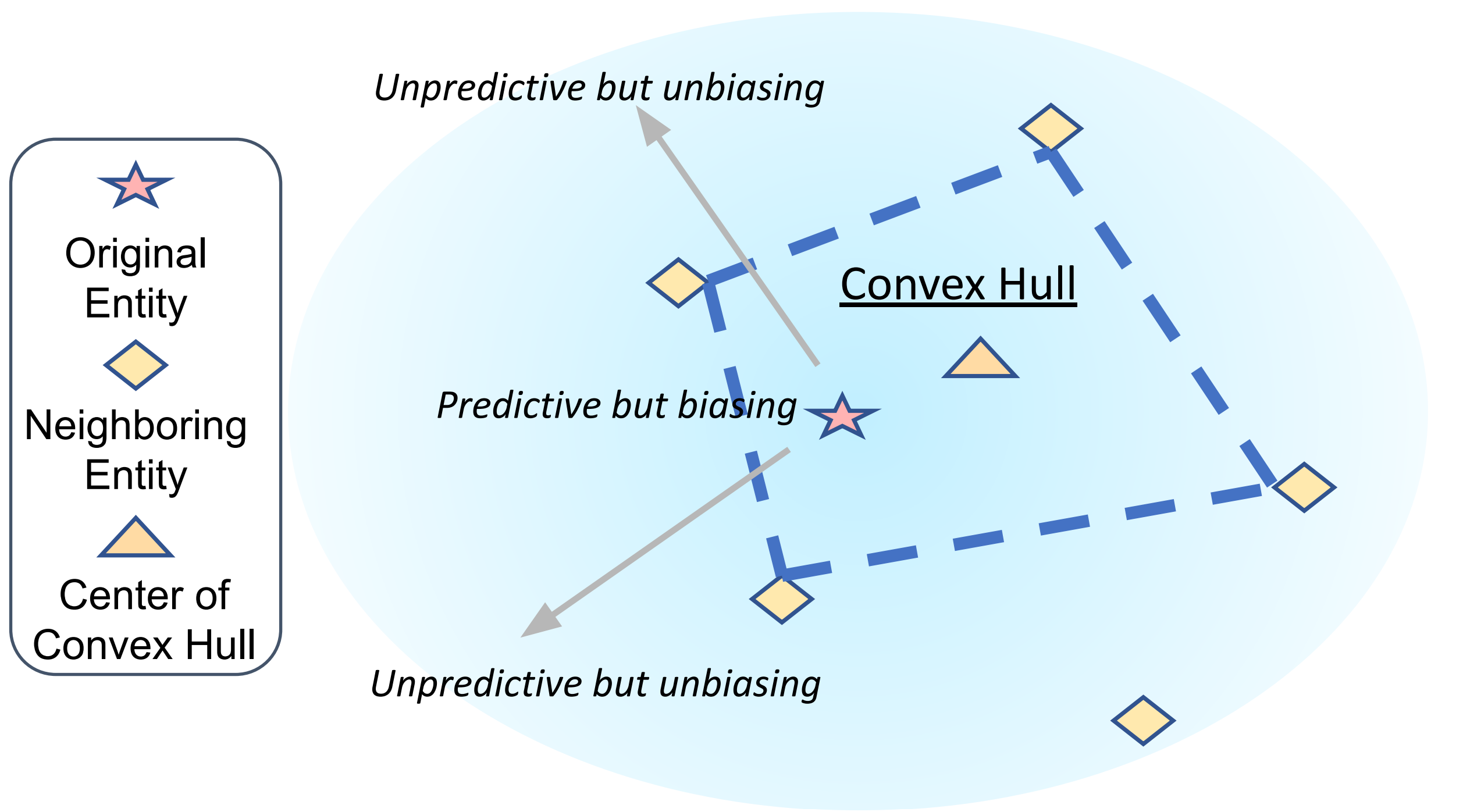

- We conduct a causal analysis of entity bias and its mitigation methods.

- We propose a training-time causal intervention for mitigating entity bias of white-box LLMs.

- We propose an in-context causal intervention for mitigating entity bias of black-box LLMs.

The TACRED dataset can be obtained from this link. The ENTRED dataset can be obtained from this link. The expected structure of files is:

    |-- data
    |   |-- tacred
    |   |   |-- train.json
    |   |   |-- dev.json
    |   |   |-- test.json
    |   |   |-- test_entred.json
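As a quick sanity check before training, the layout above can be verified with a few lines of Python. This is only a minimal sketch: the helper name `missing_data_files` and the default root `data` are assumptions made for illustration and are not part of this repository; only the four file names come from the listing above.

```python
from pathlib import Path

# File names taken from the expected layout above.
# The helper itself is hypothetical and not part of the repository.
EXPECTED_FILES = ("train.json", "dev.json", "test.json", "test_entred.json")


def missing_data_files(root="data"):
    """Return the expected file names that are absent under <root>/tacred."""
    tacred_dir = Path(root) / "tacred"
    return [name for name in EXPECTED_FILES if not (tacred_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_data_files()
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All expected data files are present.")
```

Running the script from the repository root reports any file that still needs to be downloaded or moved into `data/tacred`.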

Install the dependencies:

    pip install -r requirements.txt

To train and evaluate roberta-large with training-time causal intervention, run:

    bash run.sh

If you use our code in your work, please cite the following paper.

    @inproceedings{wang2023causal,
      title={A Causal View of Entity Bias in (Large) Language Models},
      author={Wang, Fei and Mo, Wenjie and Wang, Yiwei and Zhou, Wenxuan and Chen, Muhao},
      booktitle={Findings of the Association for Computational Linguistics: EMNLP 2023},
      year={2023}
    }

Our code is based on this repo of the following paper.

    @inproceedings{zhou2022improved,
      title={An Improved Baseline for Sentence-level Relation Extraction},
      author={Zhou, Wenxuan and Chen, Muhao},
      booktitle={Proceedings of the 2nd Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 12th International Joint Conference on Natural Language Processing (Volume 2: Short Papers)},
      year={2022}
    }