Todo:

- Instructions for training code

- Interactive interface

Done:

- Basic training code

- Environment

- Pretrained model checkpoint

- Sampling code

- Dataset preparation

Project page | arXiv (LowRes version) | ACM TOG Paper | BibTeX

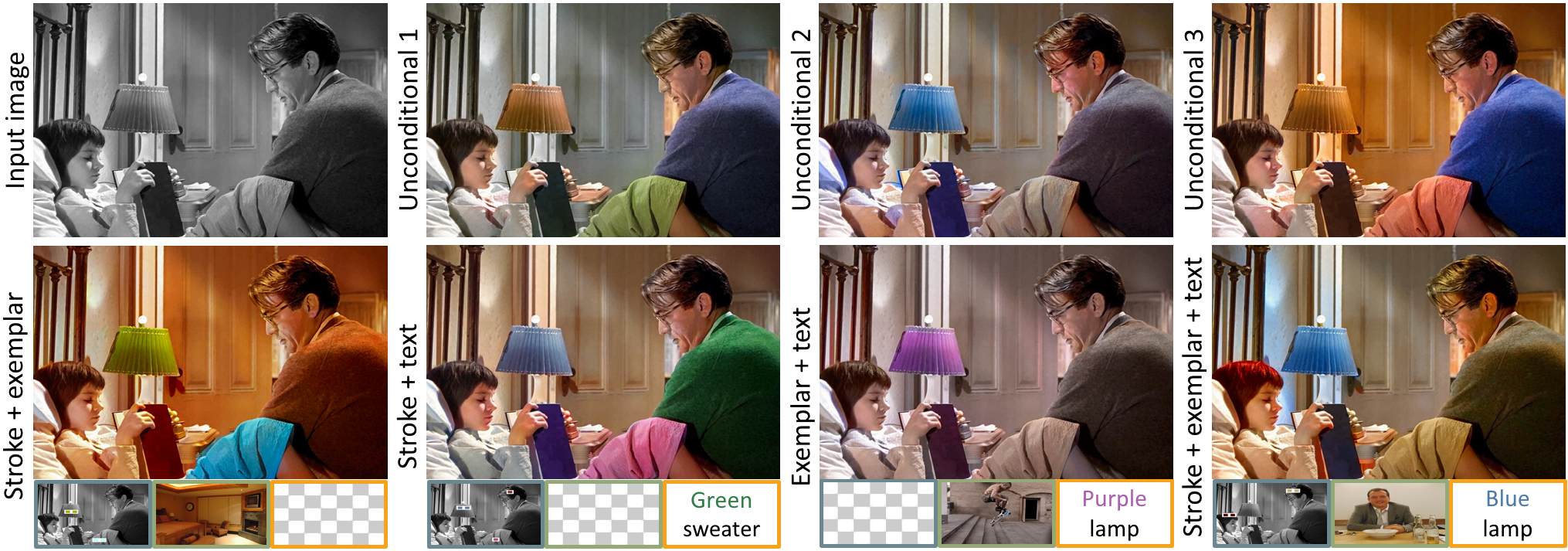

Zhitong Huang, Nanxuan Zhao, Jing Liao

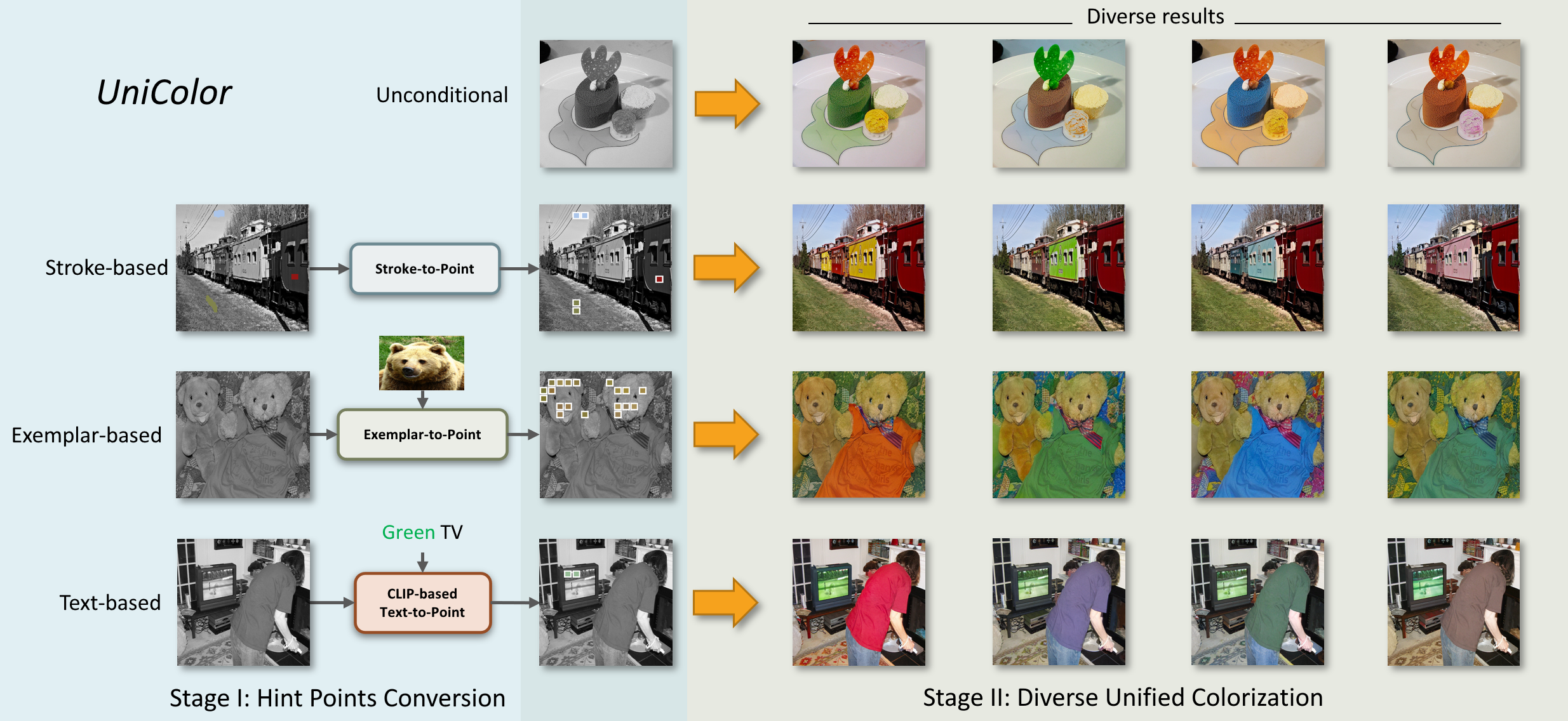

We propose UniColor, the first unified framework to support colorization in multiple modalities, including both unconditional and conditional ones, such as stroke, exemplar, text, and even a mix of them. Rather than learning a separate model for each type of condition, we introduce a two-stage colorization framework that incorporates various conditions into a single model. In the first stage, multi-modal conditions are converted into a common representation of hint points. In particular, we propose a novel CLIP-based method to convert text into hint points. In the second stage, we propose a Transformer-based network composed of Chroma-VQGAN and Hybrid-Transformer to generate diverse and high-quality colorization results conditioned on hint points. Both qualitative and quantitative comparisons demonstrate that our method outperforms state-of-the-art methods in every control modality and further enables multi-modal colorization that was not feasible before. Moreover, we design an interactive interface showing the effectiveness of our unified framework in practical usage, including automatic colorization, hybrid-control colorization, local recolorization, and iterative color editing.

Our framework consists of two stages. In the first stage, all conditions (stroke, exemplar, and text) are converted into a common representation: hint points. In the second stage, diverse results are generated automatically, either from scratch or conditioned on the hint points.
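To make the idea of a shared hint-point representation more concrete, here is a minimal sketch of how user strokes could be reduced to hint points in the first stage. It assumes, purely for illustration, that hint points live on a grid of 16 x 16-pixel cells and that each hint is a single RGB color; the function name stroke_to_hint_points and the cell size are hypothetical and not taken from the UniColor code.

```python
from typing import Dict, Iterable, List, Tuple

Color = Tuple[int, int, int]
Cell = Tuple[int, int]


def stroke_to_hint_points(
    strokes: Iterable[Tuple[List[Tuple[int, int]], Color]],
    cell_size: int = 16,  # hypothetical grid resolution, not taken from UniColor
) -> Dict[Cell, Color]:
    """Convert user strokes into hint points on a coarse grid.

    Each stroke is a list of (row, col) pixel coordinates plus one RGB color.
    Every grid cell touched by a stroke becomes a hint point carrying that color;
    where strokes overlap, the later stroke wins.
    """
    hints: Dict[Cell, Color] = {}
    for pixels, color in strokes:
        for row, col in pixels:
            hints[(row // cell_size, col // cell_size)] = color
    return hints


if __name__ == "__main__":
    red_stroke = ([(0, 0), (5, 20), (40, 40)], (255, 0, 0))
    blue_stroke = ([(40, 45)], (0, 0, 255))
    print(stroke_to_hint_points([red_stroke, blue_stroke]))
```

Letting later strokes overwrite earlier ones mirrors how iterative editing usually behaves, but it is only one possible policy; averaging overlapping colors would be another.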

To set up the Anaconda environment:

$ conda env create -f environment.yaml

$ conda activate unicolor
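After activating the environment, a quick check can confirm that key packages are importable. This is a sketch: the module names listed below (torch, pytorch_lightning, omegaconf, clip) are assumptions about what environment.yaml installs, inferred from the projects acknowledged in this README rather than read from the file, so adjust the tuple to match environment.yaml.

```python
import importlib.util
from typing import Iterable, List

# Assumed top-level modules; edit this tuple to match environment.yaml.
EXPECTED_MODULES = ("torch", "pytorch_lightning", "omegaconf", "clip")


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in the active environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(EXPECTED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All expected modules found.")
```

importlib.util.find_spec locates a top-level module without importing it, so the check stays fast even for heavy packages such as torch.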

Download the pretrained models (including both Chroma-VQGAN and Hybrid-Transformer) from Hugging Face:

- Trained model with ImageNet: put the file imagenet_step142124.ckpt under the folder framework/checkpoints/unicolor_imagenet.

- Trained model with MSCOCO: put the file mscoco_step259999.ckpt under the folder framework/checkpoints/unicolor_mscoco.

To use exemplar-based colorization, download the pretrained models from Deep-Exemplar-based-Video-Colorization, unzip the file and place the files into the corresponding folders:

- video_moredata_l1 under the sample/ImageMatch/checkpoints folder

- vgg19_conv.pth and vgg19_gray.pth under the sample/ImageMatch/data folder
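Before opening the sample notebook, a small script can report which of the downloaded files are still missing. It is a sketch that assumes it is run from the repository root; the relative paths follow the checkpoint and ImageMatch locations described above (each checkpoint in the folder matching its dataset), and the helper name missing_files is hypothetical.

```python
from pathlib import Path
from typing import Iterable, List

# Expected locations, relative to the repository root.
REQUIRED_PATHS = (
    "framework/checkpoints/unicolor_imagenet/imagenet_step142124.ckpt",
    "framework/checkpoints/unicolor_mscoco/mscoco_step259999.ckpt",
    "sample/ImageMatch/checkpoints/video_moredata_l1",  # unzipped folder
    "sample/ImageMatch/data/vgg19_conv.pth",
    "sample/ImageMatch/data/vgg19_gray.pth",
)


def missing_files(repo_root: str, relative_paths: Iterable[str] = REQUIRED_PATHS) -> List[str]:
    """Return the relative paths that do not exist (as a file or folder) under repo_root."""
    root = Path(repo_root)
    return [rel for rel in relative_paths if not (root / rel).exists()]


if __name__ == "__main__":
    # Run from the repository root; prints nothing when everything is in place.
    for rel in missing_files("."):
        print("missing:", rel)
```

If you downloaded only one of the two UniColor checkpoints, remove the other entry from REQUIRED_PATHS.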

In sample/sample.ipynb, we show how to use our framework to perform unconditional, stroke-based, exemplar-based, and text-based colorization.

Run framework/datasets/data_prepare.py to preprocess the datasets. This step generates the .yaml files used for training, as well as the superpixel images from which hint points are extracted during training.
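The README does not spell out how hint points are drawn from the superpixel images during training. One plausible scheme, sketched below under that assumption, is to pick a few random superpixels per image and turn each into a hint point placed at its centroid and carrying its mean color. The function sample_superpixel_hints and its inputs (a per-pixel superpixel label map and an RGB image, both as nested lists) are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Color = Tuple[int, int, int]
Hint = Tuple[int, int, Color]  # (row, col, mean color)


def sample_superpixel_hints(
    labels: Sequence[Sequence[int]],
    image: Sequence[Sequence[Color]],
    num_hints: int,
    seed: int = 0,
) -> List[Hint]:
    """Pick up to num_hints random superpixels and turn each into one hint point.

    labels[r][c] is the superpixel id of pixel (r, c); image[r][c] is its RGB color.
    Each hint is placed at the integer centroid of its superpixel and carries the
    superpixel's mean color.
    """
    # Group pixel coordinates by superpixel id.
    members: Dict[int, List[Tuple[int, int]]] = defaultdict(list)
    for r, row in enumerate(labels):
        for c, label in enumerate(row):
            members[label].append((r, c))

    rng = random.Random(seed)
    chosen = rng.sample(sorted(members), k=min(num_hints, len(members)))

    hints: List[Hint] = []
    for label in chosen:
        coords = members[label]
        n = len(coords)
        cy = sum(r for r, _ in coords) // n
        cx = sum(c for _, c in coords) // n
        red = sum(image[r][c][0] for r, c in coords) // n
        green = sum(image[r][c][1] for r, c in coords) // n
        blue = sum(image[r][c][2] for r, c in coords) // n
        hints.append((cy, cx, (red, green, blue)))
    return hints
```

Averaging over a superpixel gives a color that is representative of a coherent region rather than of a single noisy pixel. For a non-convex superpixel the centroid can fall outside it; a fuller implementation might snap the hint to the nearest member pixel.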

- For ImageNet, place the dataset in a folder with two sub-folders, train and val, and run:

python framework/datasets/data_prepare.py $your_imagenet_dir$ imagenet --num_process $num_of_process$

- For the COCO2017 dataset, place the dataset in a folder with three sub-folders, train2017, unlabeled2017, and val2017, and run:

python framework/datasets/data_prepare.py $your_coco2017_dir$ coco --num_process $num_of_process$
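A small wrapper can verify the folder layout described above before launching preprocessing. This sketch assumes it runs from the repository root; the helper names are hypothetical, and the only arguments it passes to data_prepare.py are the ones shown in the commands above (dataset directory, dataset name, and --num_process).

```python
import subprocess
import sys
from pathlib import Path
from typing import Dict, List, Tuple

# Sub-folders each dataset root must contain, as described in this README.
EXPECTED_SUBFOLDERS: Dict[str, Tuple[str, ...]] = {
    "imagenet": ("train", "val"),
    "coco": ("train2017", "unlabeled2017", "val2017"),
}


def missing_subfolders(dataset_dir: str, dataset: str) -> List[str]:
    """Return the expected sub-folders that are absent from dataset_dir."""
    root = Path(dataset_dir)
    return [name for name in EXPECTED_SUBFOLDERS[dataset] if not (root / name).is_dir()]


def prepare_command(dataset_dir: str, dataset: str, num_process: int) -> List[str]:
    """Build the argument list for framework/datasets/data_prepare.py."""
    return [
        sys.executable,
        "framework/datasets/data_prepare.py",
        dataset_dir,
        dataset,
        "--num_process",
        str(num_process),
    ]


def run_prepare(dataset_dir: str, dataset: str, num_process: int) -> None:
    """Check the layout first, then run preprocessing (raises if either step fails)."""
    missing = missing_subfolders(dataset_dir, dataset)
    if missing:
        raise FileNotFoundError(f"{dataset_dir} is missing sub-folders: {missing}")
    subprocess.run(prepare_command(dataset_dir, dataset, num_process), check=True)
```

For example, run_prepare("/data/coco2017", "coco", 8) checks for train2017, unlabeled2017, and val2017, then runs the same command as above with 8 processes. Using sys.executable ensures the script runs under the interpreter of the active conda environment.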

We thank the authors who made their code and pretrained models publicly available:

- Our code is built on "Taming Transformers for High-Resolution Image Synthesis".

- In exemplar-based colorization, we rely on code from "Deep Exemplar-based Video Colorization" to calculate the similarity between grayscale and reference images.

- In text-based colorization, we use "CLIP (Contrastive Language-Image Pre-Training)" to calculate text-image relevance.
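The README only states that code from Deep Exemplar-based Video Colorization computes the similarity between the grayscale and reference images. As a rough illustration of how such similarities could yield hint points, the sketch below matches each grayscale patch feature to its most similar reference patch by cosine similarity and keeps the reference color only when the match is confident. The feature vectors, the 0.8 threshold, and the function names are hypothetical; this is a generic nearest-neighbor match, not the correspondence network that project actually uses.

```python
import math
from typing import Dict, Sequence, Tuple

Color = Tuple[int, int, int]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def exemplar_hints(
    gray_feats: Sequence[Sequence[float]],
    ref_feats: Sequence[Sequence[float]],
    ref_colors: Sequence[Color],
    threshold: float = 0.8,  # hypothetical confidence cut-off
) -> Dict[int, Color]:
    """Map grayscale patch index -> color of its best-matching reference patch.

    Only matches whose cosine similarity reaches the threshold become hint points.
    """
    if not ref_feats:
        return {}
    hints: Dict[int, Color] = {}
    for i, g in enumerate(gray_feats):
        scores = [cosine(g, r) for r in ref_feats]
        best = max(range(len(scores)), key=scores.__getitem__)
        if scores[best] >= threshold:
            hints[i] = ref_colors[best]
    return hints
```

Dropping low-confidence matches matters because a wrong hint actively misleads the second stage, whereas a missing hint simply leaves that region to be colorized automatically.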
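CLIP scores text-image relevance as a cosine similarity between a text embedding and an image embedding. The sketch below shows one simple way such scores could pick out which image regions a phrase like "red car" refers to: softmax the scaled similarities over regions and keep the top-k. The embeddings are placeholder vectors standing in for CLIP encoder outputs, the scale of 100 follows the zero-shot example in the CLIP README, and the function names are hypothetical; this does not reproduce UniColor's own CLIP-based text-to-hint method.

```python
import math
from typing import List, Sequence


def _unit(v: Sequence[float]) -> List[float]:
    """Scale v to unit length (all-zero vectors stay all-zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else [0.0] * len(v)


def region_relevance(
    text_emb: Sequence[float],
    region_embs: Sequence[Sequence[float]],
    scale: float = 100.0,
) -> List[float]:
    """Softmax over scaled cosine similarities between one text embedding and each region."""
    if not region_embs:
        return []
    t = _unit(text_emb)
    logits = [scale * sum(a * b for a, b in zip(t, _unit(r))) for r in region_embs]
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def top_regions(probs: Sequence[float], k: int) -> List[int]:
    """Indices of the k most relevant regions, most relevant first."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
```

In a fuller pipeline, the selected regions would then receive the color named in the text as hint points.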

arXiv:

@misc{huang2022unicolor,
  author = {Huang, Zhitong and Zhao, Nanxuan and Liao, Jing},
  title = {UniColor: A Unified Framework for Multi-Modal Colorization with Transformer},
  year = {2022},
  eprint = {2209.11223},
  archivePrefix = {arXiv},
  primaryClass = {cs.CV}
}

ACM Transactions on Graphics:

@article{10.1145/3550454.3555471,
  author = {Huang, Zhitong and Zhao, Nanxuan and Liao, Jing},
  title = {UniColor: A Unified Framework for Multi-Modal Colorization with Transformer},
  year = {2022},
  issue_date = {December 2022},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {41},
  number = {6},
  issn = {0730-0301},
  url = {https://doi.org/10.1145/3550454.3555471},
  doi = {10.1145/3550454.3555471},
  journal = {ACM Trans. Graph.},
  month = {nov},
  articleno = {205},
  numpages = {16}
}