| title | date | layout | draft | path | category | tags | description | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

The Prosecutor's Fallacy |

2019-06-12T23:24:37.121Z |

post |

false |

/posts/the-prosecutors-fallacy/ |

Data Science |

|

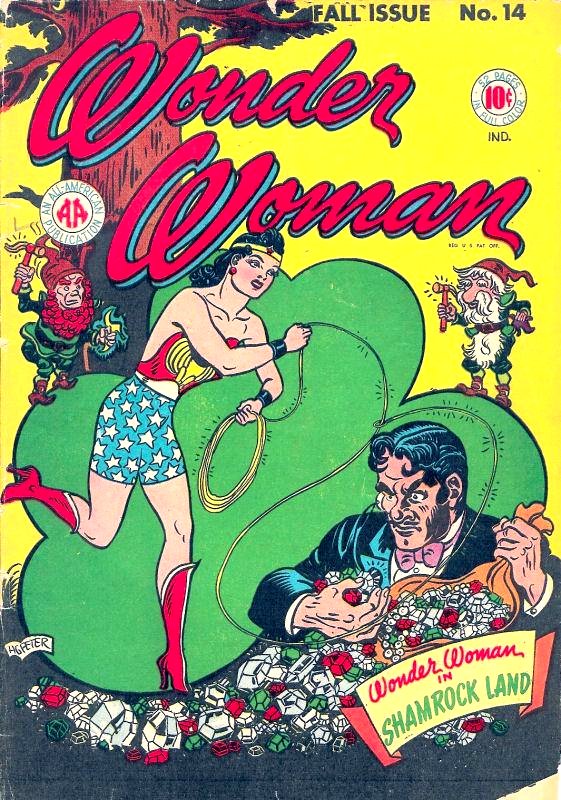

in which Thomas Bayes and Wonder Woman teach you about the law of total probability and the law in general |

The lasso of truth

Imagine you have been arrested for murder. You know that you are innocent, but physical evidence at the scene of the crime matches your description. The prosecutor argues that you are guilty because the odds of finding this evidence given that you are innocent are so small that the jury should discard the probability that you did not actually commit the crime.

But those numbers don't add up. The prosecutor has misapplied conditional probability and neglected the prior odds of you, the defendant, being guilty before they introduced the evidence.

The prosecutor's fallacy is a courtroom misapplication of Bayes' Theorem. Rather than asking for the probability that the defendant is innocent given all the evidence, the prosecution, judge, and jury make the mistake of asking for the probability that the evidence would occur if the defendant were innocent (a much smaller number):

- What we want to know in the name of justice:

P(defendant is guilty|all the evidence)

- What the prosecutor is usually actually demonstrating to the court:

P(all the evidence|defendant is innocent)
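To see how far apart these two quantities can be, here is a minimal sketch using the odds form of Bayes' Theorem (posterior odds = prior odds × likelihood ratio). Every number in it is hypothetical, chosen only for illustration: evidence that turns up for 1 in 10,000 innocent people, that the guilty party always leaves behind, and a pool of 100,000 people who could plausibly have committed the crime. The function name `posterior_guilt` is likewise my own.

```python
def posterior_guilt(p_evidence_given_guilty, p_evidence_given_innocent, prior_guilty):
    """Return P(guilty | evidence) using the odds form of Bayes' Theorem."""
    prior_odds = prior_guilty / (1 - prior_guilty)
    likelihood_ratio = p_evidence_given_guilty / p_evidence_given_innocent
    posterior_odds = prior_odds * likelihood_ratio
    # Convert odds back into a probability
    return posterior_odds / (1 + posterior_odds)


# Hypothetical numbers, for illustration only
p_e_given_innocent = 1 / 10_000  # the "tiny" number the prosecutor quotes
p_e_given_guilty = 1.0           # the culprit always leaves this evidence
prior_guilty = 1 / 100_000       # one culprit among 100,000 plausible suspects

p_guilty = posterior_guilt(p_e_given_guilty, p_e_given_innocent, prior_guilty)
print(f"P(evidence | innocent) = {p_e_given_innocent:.4%}")
print(f"P(guilty | evidence)   = {p_guilty:.2%}")
```

Even though the evidence would turn up for only 0.01% of innocent people, the probability of guilt given the evidence comes out at roughly 9%. Among 100,000 plausible suspects, about 10 innocent people would also match, alongside the one guilty party.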

To illustrate why this difference can spell life or death, imagine yourself the defendant again. You want to prove to the court that you're really telling the truth, so you agree to a polygraph test.

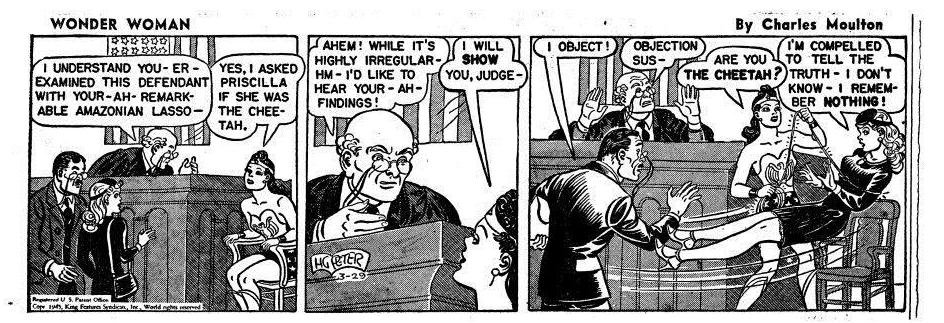

Coincidentally, the same man who invented the lie detector later created Wonder Woman and her lasso of truth.

What are the odds?

William Moulton Marston debuted his invention in the case of James Alphonso Frye, who was accused of murder in 1922.

Frye being polygraphed by Marston

For our simulation, we'll take rough midpoints of the accuracy figures reported for a more modern polygraph technique in this paper ("Accuracy estimates of the CQT range from 74% to 89% for guilty examinees, with 1% to 13% false-negatives, and 59% to 83% for innocent examinees, with a false-positive ratio varying from 10% to 23%...")

|  | Lying (0.15) | Not Lying (0.85) |
|---|---|---|
| Test (+) | 0.81 | 0.17 |
| Test (-) | 0.08 | 0.71 |

Examine these percentages for a moment. Given that this study found that the vast majority of people are honest most of the time, and that "big lies" are things like "not telling your partner who you have really been with," let's generously assume that 15% of people would lie about murder under a polygraph test, and 85% would tell the truth.

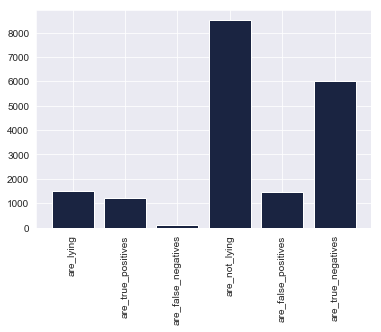

If we tested 10,000 people with this lie detector under these assumptions...

- 1,500 people out of 10,000 are lying:
  - 1,215 are true positives
  - 120 are false negatives
- 8,500 people out of 10,000 are not lying:
  - 1,445 are false positives
  - 6,035 are true negatives
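The counts above follow from multiplying each group's size by the rates in the table. Here is a minimal sketch that reproduces them; the variable names are my own, not taken from the original notebook. Note that within each group the two outcomes do not add back up to the group size (1,215 + 120 < 1,500), because each column of the table sums to less than 1. Presumably the remainder corresponds to inconclusive results.

```python
N = 10_000
P_LYING = 0.15  # assumed share of people who would lie

# Rates from the table: P(test result | lying status)
rates = {
    "lying": {"+": 0.81, "-": 0.08},
    "not_lying": {"+": 0.17, "-": 0.71},
}

lying = round(N * P_LYING)
not_lying = N - lying

# Expected number of people in each cell of the table
counts = {
    "lying": lying,
    "true_positives": round(lying * rates["lying"]["+"]),
    "false_negatives": round(lying * rates["lying"]["-"]),
    "not_lying": not_lying,
    "false_positives": round(not_lying * rates["not_lying"]["+"]),
    "true_negatives": round(not_lying * rates["not_lying"]["-"]),
}

for label, count in counts.items():
    print(f"{count} people out of {N} are {label}")
```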

The important distinctions to know before we apply Bayes' Theorem are these:

- The true positives are the people who lied and failed the polygraph (they were screened correctly)

- The false negatives are the people who lied and beat the polygraph (they were screened incorrectly)

- The false positives are the people who told the truth but failed the polygraph anyway

- The true negatives are the people who told the truth and passed the polygraph
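With those four groups defined, the question of how often a failed polygraph means a lie can already be answered by counting instead of by formula. Among everyone who failed, what fraction were actually lying? Here is a short sketch using the counts from the 10,000-person example:

```python
# Counts from the 10,000-person example
true_positives = 1215   # lied and failed the polygraph
false_positives = 1445  # told the truth and failed anyway

failed = true_positives + false_positives
share_lying = true_positives / failed
print(f"{failed} people failed the polygraph; {share_lying:.2%} of them were lying.")
```

This is the same 45.68% that Bayes' Theorem produces below. Counting "natural frequencies" like this is just the Law of Total Probability in disguise: the denominator is every way of testing positive.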

Got it? Good.

Now, if you, the defendant, got a positive lie detector test, what is the chance you were actually lying?

What the polygraph examiner really wants to know is not P(+|L), the test's true positive rate, but P(L|+), the probability that you were lying given that the test was positive. Bayes' Theorem tells us how P(+|L) relates to P(L|+):

$$P(L \mid +) = \frac{P(+ \mid L)\,P(L)}{P(+)}$$

P(+) is the total probability of testing positive, regardless of whether someone is lying. To compute it, we split testing positive into its two possible sources (liars who fail and truth-tellers who fail) and add them using the Law of Total Probability:

$$P(L \mid +) = \frac{P(+ \mid L)\,P(L)}{P(+ \mid L)\,P(L) + P(+ \mid L^c)\,P(L^c)}$$

That is to say, we need to know not only the probability of testing positive given that you are lying, but also the probability of testing positive given that you're not lying (the false positive rate). Weighting each by the share of people it applies to and adding the two terms gives the total probability of testing positive. That finally lets us determine the conditional probability that you are lying:
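Plugging the table's rates and the assumed 15% share of liars into that formula, the arithmetic works out as follows (rounded to four decimal places):

$$
\begin{aligned}
P(+) &= P(+ \mid L)\,P(L) + P(+ \mid L^c)\,P(L^c) = (0.81)(0.15) + (0.17)(0.85) = 0.1215 + 0.1445 = 0.266 \\
P(L \mid +) &= \frac{0.1215}{0.266} \approx 0.4568 \\
P(L^c \mid +) &= \frac{0.1445}{0.266} \approx 0.5432
\end{aligned}
$$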

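```python
def P_L_pos(pct_liars, pct_non_liars):
    P_pos_L = 0.81            # P(+|L): liars who test positive
    P_L = pct_liars           # P(L): prior share of liars
    P_pos_not_L = 0.17        # P(+|L^c): false positive rate
    P_not_L = pct_non_liars   # P(L^c): prior share of truth-tellers

    # Law of Total Probability: overall chance of testing positive
    P_pos = (P_pos_L * P_L) + (P_pos_not_L * P_not_L)

    # Bayes' Theorem
    P_L_pos = (P_pos_L * P_L) / P_pos
    print('The probability that you are actually lying, '
          f'given that you tested positive on the polygraph, is {round(P_L_pos * 100, 2)}%.')


P_L_pos(0.15, 0.85)
```

The probability that you are actually lying, given that you tested positive on the polygraph, is 45.68%.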

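```python
def false_pos(pct_liars, pct_non_liars):
    P_pos_L = 0.81            # P(+|L)
    P_L = pct_liars           # P(L)
    P_pos_not_L = 0.17        # P(+|L^c)
    P_not_L = pct_non_liars   # P(L^c)

    # Law of Total Probability: overall chance of testing positive
    P_pos = (P_pos_L * P_L) + (P_pos_not_L * P_not_L)

    # P(L^c|+): the chance you were telling the truth despite testing positive
    P_not_L_pos = (P_pos_not_L * P_not_L) / P_pos
    print(f'The probability of a false positive is {round(P_not_L_pos * 100, 2)}%.')


false_pos(0.15, 0.85)
```

The probability of a false positive is 54.32%.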

The probability that you're actually lying, given a positive test result, is only 45.68%: worse than a coin flip. Note how far that is from the test's accuracy rates (81% of liars test positive, and 71% of truth-tellers test negative). Meanwhile, if you test positive, the probability that you were in fact telling the truth is 54.32%. In other words, a positive result is slightly more likely than not to be accusing someone who is telling the truth. Not reassuring.
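The 45.68% figure depends heavily on the assumed 15% share of liars, which is exactly the prior that the prosecutor's fallacy ignores. Here is a small sketch that holds the polygraph's rates fixed and varies only that prior. The function name and the list of priors are illustrative and do not come from the original notebook.

```python
def p_lying_given_positive(prior_lying, tpr=0.81, fpr=0.17):
    """P(L|+) by Bayes' Theorem, with P(+) from the Law of Total Probability."""
    p_pos = tpr * prior_lying + fpr * (1 - prior_lying)
    return tpr * prior_lying / p_pos


for prior in (0.01, 0.05, 0.15, 0.50, 0.90):
    print(f"P(L) = {prior:>4.0%}  ->  P(L|+) = {p_lying_given_positive(prior):.2%}")
```

With the same test, P(L|+) drops below 5% when only 1% of examinees lie and rises above 80% when half of them do. The test's accuracy alone never tells you how much a positive result should be trusted.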

Marston was, in fact, a notorious fraud.

The Frye court ruled that the polygraph test could not be trusted as evidence. To this day, lie detector results are generally inadmissible in court because of their unreliability. But that does not stop the prosecutor's fallacy from creeping into court in other, more insidious ways.

This statistical reasoning error runs rampant in the criminal justice system and corrupts criminal cases that rely on everything from fingerprints to DNA evidence to cell tower data. What's worse, courts often reject the expert testimony of statisticians because "it's not rocket science" and is merely "common sense":

- In the Netherlands, a nurse named Lucia de Berk went to prison for life because she had been near "suspicious" deaths that a statistical expert calculated had less than a 1 in 342 million chance of being random. The calculation, tainted by the prosecutor's fallacy, was incorrect: the true figure was more like 1 in 50 (or even 1 in 5). What's more, many of the "incidents" were only marked suspicious after investigators knew that she had been close by.

- A British nurse, Ben Geen, was accused of inducing respiratory arrest for the "thrill" of reviving his patients, on the claim that respiratory arrest was too rare a phenomenon to occur by chance while Geen was nearby.

- Mothers in the U.K. have been prosecuted for murdering children who had in fact died of SIDS, after experts erroneously quoted the odds of two children in the same family dying of SIDS as 1 in 73 million.
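The 1 in 73 million figure is widely reported to have come from squaring an estimated 1 in 8,543 chance of a single SIDS death in a family like the defendant's. Here is a short sketch of that arithmetic, with the hidden assumption spelled out in the comments.

```python
single_sids = 1 / 8_543  # reported estimate of one SIDS death in such a family

# Squaring is only valid if the two deaths are independent events.
# Shared genetic or environmental factors are thought to make a second
# SIDS death in the same family more likely than this calculation implies.
two_sids_if_independent = single_sids ** 2
print(f"1 in {round(1 / two_sids_if_independent):,}")
```

Even if that tiny number were right, it would still be P(evidence | innocent). The court needed to compare it with how rare the alternative explanation, a double murder by a parent, is in the first place, which is the same mistake the polygraph example illustrates.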

The data in Ben Geen's case are available thanks to Freedom of Information requests, so I have briefly analyzed them.

```python
# Hospital data file from the expert in Ben Geen's exoneration case
# Data acquired through FOI requests
# Admissions: no. patients admitted to ED by month
# CardioED: no. patients admitted to CC from ED by month with cardio-respiratory arrest
# RespED: no. patients admitted to CC from ED by month with respiratory arrest
import pandas as pd

hdf = pd.read_csv('Hdf.csv')
hdf.head()
```

|  | Year | Month | CardioTot | CardioED | HypoTot | HypoED | RespTot | RespED | Admissions | AllCases | MonthNr | Hospital |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1999 | Nov | 4.0 | 3.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1189.0 | 4.0 | -1 | Oxford Radcliffe |
| 1 | 1999 | Dec | 8.0 | 5.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1378.0 | 8.0 | 0 | Oxford Radcliffe |
| 2 | 2000 | Jan | 7.0 | 2.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1185.0 | 8.0 | 1 | Oxford Radcliffe |
| 3 | 2000 | Feb | 5.0 | 3.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1139.0 | 6.0 | 2 | Oxford Radcliffe |
| 4 | 2000 | Mar | 7.0 | 2.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1215.0 | 7.0 | 3 | Oxford Radcliffe |

The most comparable hospitals to the one in which Geen worked are large hospitals that saw at least one case of respiratory arrest (although "0" in the data most likely means "missing data" and not that zero incidents occurred).

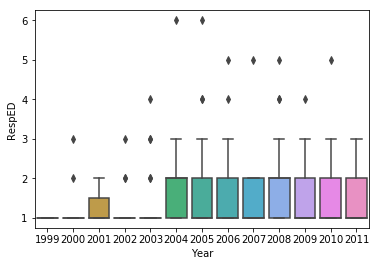

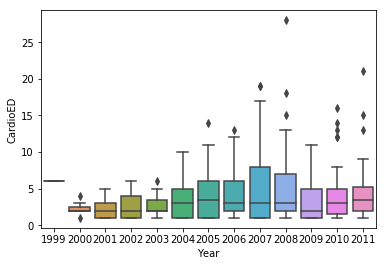

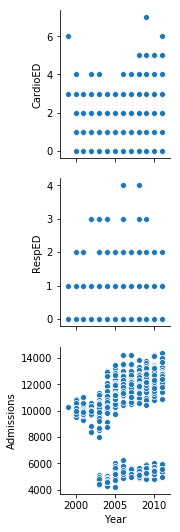

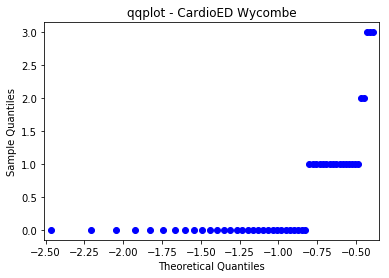

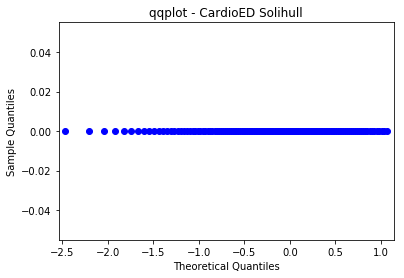

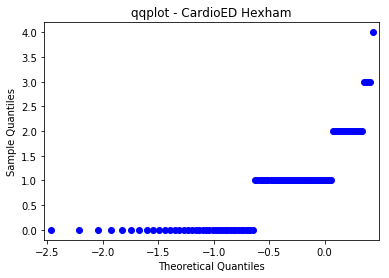

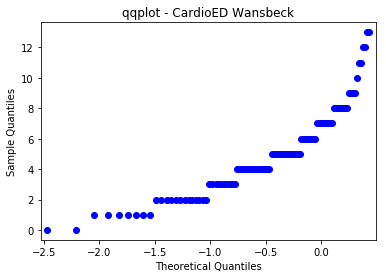

```python
import seaborn as sns

# `df` is not defined in the excerpt shown here; presumably a subset of `hdf`
ax = sns.boxplot(x='Year', y='CardioED', data=df)
g = sns.pairplot(df, x_vars=['Year'], y_vars=['CardioED', 'RespED', 'Admissions'])
```

The four hospitals that are comparable to the one where Geen worked are Hexham, Solihull, Wansbeck, and Wycombe. The data for Solihull (for both CardioED and RespED) are anomalous:

```python
import re

# Keep only the four hospitals most comparable to Geen's
regex = re.compile(r'(Hexham|Solihull|Wansbeck|Wycombe)')
compdf = hdf[hdf['Hospital'].str.match(regex, na=False)]

# Mean of each numeric column per hospital (the text column 'Month' is dropped first)
compdf.drop(columns='Month').pivot_table(index='Hospital').reset_index()
```

|  | Hospital | Admissions | AllCases | CardioED | CardioTot | HypoED | HypoTot | MonthNr | RespED | RespTot | Year |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Hexham | 382.520408 | 2.724490 | 0.887755 | 0.887755 | 1.673469 | 1.673469 | 71.5 | 0.163265 | 0.163265 | 2005.410959 |
| 1 | Solihull | 557.306818 | 0.301587 | 0.000000 | 0.261905 | 0.000000 | 0.039683 | 71.5 | 0.000000 | 0.000000 | 2005.410959 |
| 2 | Wansbeck | 1706.285714 | 16.969388 | 5.010204 | 5.010204 | 10.918367 | 10.918367 | 71.5 | 1.040816 | 1.040816 | 2005.410959 |
| 3 | Wycombe | NaN | 0.980392 | 0.568627 | 0.627451 | 0.039216 | 0.058824 | 71.5 | 0.235294 | 0.294118 | 2005.410959 |

import statsmodels.api as sm

from matplotlib import pyplot as plt

for hospital in compdf['Hospital'].unique():

data = compdf[(compdf['Hospital'] == hospital)].CardioED.values.flatten()

fig = sm.qqplot(data, line='s')

h = plt.title(f'qqplot - CardioED {hospital}')

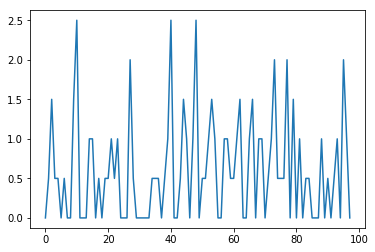

plt.show()After accounting for the discrepancies in the data, we can calculate that respiratory events without accompanying cardiac events happen, on average, roughly a little under 5 times as often as cardiac events (4.669 CardioED admissions on average per RespED admission).


| Year | Month | CardioTot | CardioED | HypoTot | HypoED | RespTot | RespED | Admissions | AllCases | MonthNr |
|---|---|---|---|---|---|---|---|---|---|---|
| 2003 | Apr | 3.5 | 3.5 | 2.0 | 2.0 | 0.0 | 0.0 | 739.0 | 5.5 | 40 |
|  | Aug | 1.5 | 1.5 | 2.0 | 2.0 | 0.5 | 0.5 | 792.5 | 4.0 | 44 |
|  | Dec | 3.0 | 3.0 | 5.0 | 5.0 | 1.5 | 1.5 | 865.5 | 9.5 | 48 |
|  | Jul | 1.0 | 1.0 | 4.5 | 4.5 | 0.5 | 0.5 | 843.5 | 6.0 | 43 |
|  | Jun | 2.0 | 2.0 | 5.5 | 5.5 | 0.5 | 0.5 | 819.0 | 8.0 | 42 |
|  | May | 1.5 | 1.5 | 2.5 | 2.5 | 0.0 | 0.0 | 793.0 | 4.0 | 41 |
|  | Nov | 1.5 | 1.5 | 3.0 | 3.0 | 0.5 | 0.5 | 771.5 | 5.0 | 47 |
|  | Oct | 1.0 | 1.0 | 2.5 | 2.5 | 0.0 | 0.0 | 813.5 | 3.5 | 46 |
|  | Sep | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 782.0 | 2.0 | 45 |
| 2004 | Apr | 4.0 | 4.0 | 4.0 | 4.0 | 1.5 | 1.5 | 828.5 | 9.5 | 52 |
|  | Aug | 3.0 | 3.0 | 3.5 | 3.5 | 2.5 | 2.5 | 837.5 | 9.0 | 56 |
|  | Dec | 3.0 | 3.0 | 3.5 | 3.5 | 0.0 | 0.0 | 972.0 | 6.5 | 60 |
|  | Feb | 1.5 | 1.5 | 3.5 | 3.5 | 0.0 | 0.0 | 757.0 | 5.0 | 50 |
|  | Jan | 1.0 | 1.0 | 3.5 | 3.5 | 0.0 | 0.0 | 846.0 | 4.5 | 49 |
|  | Jul | 2.0 | 2.0 | 4.0 | 4.0 | 1.0 | 1.0 | 864.0 | 7.0 | 55 |
|  | Jun | 4.0 | 4.0 | 4.0 | 4.0 | 1.0 | 1.0 | 885.5 | 9.0 | 54 |
|  | Mar | 4.0 | 4.0 | 3.5 | 3.5 | 0.0 | 0.0 | 855.5 | 7.5 | 51 |
|  | May | 2.0 | 2.0 | 5.0 | 5.0 | 0.5 | 0.5 | 863.0 | 7.5 | 53 |
|  | Nov | 3.5 | 3.5 | 2.5 | 2.5 | 0.0 | 0.0 | 823.5 | 6.0 | 59 |
|  | Oct | 5.0 | 5.0 | 0.5 | 0.5 | 0.5 | 0.5 | 871.0 | 6.0 | 58 |
|  | Sep | 2.0 | 2.0 | 3.5 | 3.5 | 0.5 | 0.5 | 853.5 | 6.0 | 57 |
| 2005 | Apr | 5.5 | 5.5 | 8.0 | 8.0 | 1.0 | 1.0 | 1015.5 | 14.5 | 64 |
|  | Aug | 4.0 | 4.0 | 5.5 | 5.5 | 0.5 | 0.5 | 1109.0 | 10.0 | 68 |
|  | Dec | 3.0 | 3.0 | 10.0 | 10.0 | 1.0 | 1.0 | 1080.5 | 14.0 | 72 |
|  | Feb | 1.0 | 1.0 | 3.5 | 3.5 | 0.0 | 0.0 | 893.0 | 4.5 | 62 |
|  | Jan | 2.5 | 2.5 | 3.5 | 3.5 | 0.0 | 0.0 | 972.5 | 6.0 | 61 |
|  | Jul | 3.5 | 3.5 | 5.0 | 5.0 | 0.0 | 0.0 | 1115.5 | 8.5 | 67 |
|  | Jun | 1.5 | 1.5 | 9.0 | 9.0 | 2.0 | 2.0 | 1133.0 | 12.5 | 66 |
|  | Mar | 2.0 | 2.0 | 3.0 | 3.0 | 0.5 | 0.5 | 990.0 | 5.5 | 63 |
|  | May | 2.0 | 2.0 | 7.0 | 7.0 | 0.0 | 0.0 | 1039.5 | 9.0 | 65 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 2008 | Sep | 3.5 | 3.5 | 2.5 | 2.5 | 1.0 | 1.0 | 1107.0 | 7.0 | 105 |
| 2009 | Apr | 1.5 | 1.5 | 7.5 | 7.5 | 1.0 | 1.0 | 1143.0 | 10.0 | 112 |
|  | Aug | 0.5 | 0.5 | 10.5 | 10.5 | 0.0 | 0.0 | 1083.5 | 11.0 | 116 |
|  | Dec | 5.0 | 5.0 | 6.5 | 6.5 | 0.5 | 0.5 | 1150.0 | 12.0 | 120 |
|  | Feb | 2.0 | 2.0 | 8.5 | 8.5 | 1.0 | 1.0 | 1087.0 | 11.5 | 110 |
|  | Jan | 4.5 | 4.5 | 7.0 | 7.0 | 2.0 | 2.0 | 1103.0 | 13.5 | 109 |
|  | Jul | 1.5 | 1.5 | 10.5 | 10.5 | 0.5 | 0.5 | 1153.0 | 12.5 | 115 |
|  | Jun | 3.5 | 3.5 | 8.0 | 8.0 | 0.5 | 0.5 | 1108.5 | 12.0 | 114 |
|  | Mar | 1.5 | 1.5 | 13.0 | 13.0 | 0.5 | 0.5 | 1175.5 | 15.0 | 111 |
|  | May | 2.5 | 2.5 | 6.0 | 6.0 | 2.0 | 2.0 | 1120.0 | 10.5 | 113 |
|  | Nov | 3.5 | 3.5 | 6.0 | 6.0 | 0.0 | 0.0 | 1067.5 | 9.5 | 119 |
|  | Oct | 6.0 | 6.0 | 8.0 | 8.0 | 1.5 | 1.5 | 1189.5 | 15.5 | 118 |
|  | Sep | 3.5 | 3.5 | 8.5 | 8.5 | 0.0 | 0.0 | 1177.5 | 12.0 | 117 |
| 2010 | Apr | 5.5 | 5.5 | 9.0 | 9.0 | 1.0 | 1.0 | 1158.5 | 15.5 | 124 |
|  | Aug | 2.0 | 2.0 | 9.5 | 9.5 | 0.0 | 0.0 | 1212.0 | 11.5 | 128 |
|  | Dec | 3.5 | 3.5 | 6.5 | 6.5 | 0.5 | 0.5 | 1251.5 | 10.5 | 132 |
|  | Feb | 2.5 | 2.5 | 8.5 | 8.5 | 0.5 | 0.5 | 1073.0 | 11.5 | 122 |
|  | Jan | 7.0 | 7.0 | 10.5 | 10.5 | 0.0 | 0.0 | 1074.0 | 17.5 | 121 |
|  | Jul | 5.0 | 5.0 | 9.5 | 9.5 | 0.0 | 0.0 | 1190.0 | 14.5 | 127 |
|  | Jun | 4.5 | 4.5 | 11.0 | 11.0 | 0.0 | 0.0 | 1213.0 | 15.5 | 126 |
|  | Mar | 2.5 | 2.5 | 8.0 | 8.0 | 1.0 | 1.0 | 1229.5 | 11.5 | 123 |
|  | May | 5.0 | 5.0 | 7.5 | 7.5 | 0.0 | 0.0 | 1203.5 | 12.5 | 125 |
|  | Nov | 6.5 | 6.5 | 7.0 | 7.0 | 0.5 | 0.5 | 1186.0 | 14.0 | 131 |
|  | Oct | 4.0 | 4.0 | 10.0 | 10.0 | 0.0 | 0.0 | 1267.5 | 14.0 | 130 |
|  | Sep | 1.0 | 1.0 | 5.5 | 5.5 | 0.5 | 0.5 | 1226.5 | 7.0 | 129 |
| 2011 | Apr | 3.5 | 3.5 | 14.5 | 14.5 | 1.0 | 1.0 | 1151.0 | 19.0 | 136 |
|  | Feb | 1.0 | 1.0 | 11.0 | 11.0 | 0.0 | 0.0 | 1136.0 | 12.0 | 134 |
|  | Jan | 3.0 | 3.0 | 9.5 | 9.5 | 2.0 | 2.0 | 1224.0 | 14.5 | 133 |
|  | Mar | 3.0 | 3.0 | 14.0 | 14.0 | 1.0 | 1.0 | 1246.5 | 18.0 | 135 |
|  | May | 7.0 | 7.0 | 12.5 | 12.5 | 0.0 | 0.0 | 1189.5 | 19.5 | 137 |

The average number of respiratory arrests per month unaccompanied by cardiac failure is approximately 1-2, with large fluctuations. That's not particularly rare, and certainly not rare enough to send a nurse to prison for life. (You can read more about the case and these data here and see my Jupyter notebook here.)
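To put "approximately 1-2 per month" in perspective, one can model monthly counts of respiratory arrests as Poisson-distributed. That modelling choice is my assumption, not something the original analysis states, and the rate of 1 per month is simply the low end of the range above. Under those assumptions, here is a sketch of how often a month with one or more arrests would occur by chance alone:

```python
import math


def poisson_pmf(k, rate):
    """P(X = k) for a Poisson random variable with mean `rate`."""
    return rate ** k * math.exp(-rate) / math.factorial(k)


def p_at_least(k, rate):
    """P(X >= k), computed as 1 - P(X <= k - 1)."""
    return 1 - sum(poisson_pmf(i, rate) for i in range(k))


RATE = 1.0  # assumed mean respiratory arrests per month (low end of "1-2")
for k in (1, 2, 3):
    print(f"P(at least {k} in a month) = {p_at_least(k, RATE):.1%}")
```

At that rate, about 63% of months would see at least one such arrest. Even a month with three or more would happen by chance in roughly 8% of months, or about once a year, with no one to blame.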

Common sense, it would seem, is hardly common: a problem that the judicial system should take much more seriously than it does.