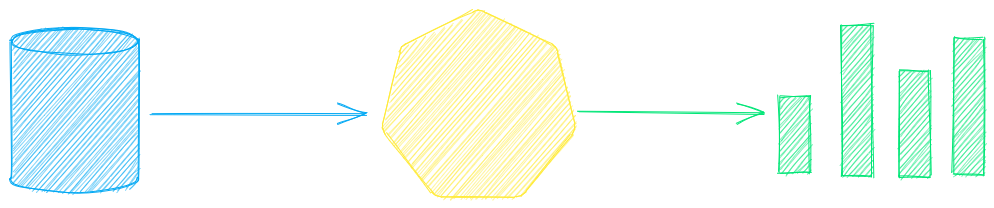

txtai is an open-source platform for semantic search and workflows powered by language models.

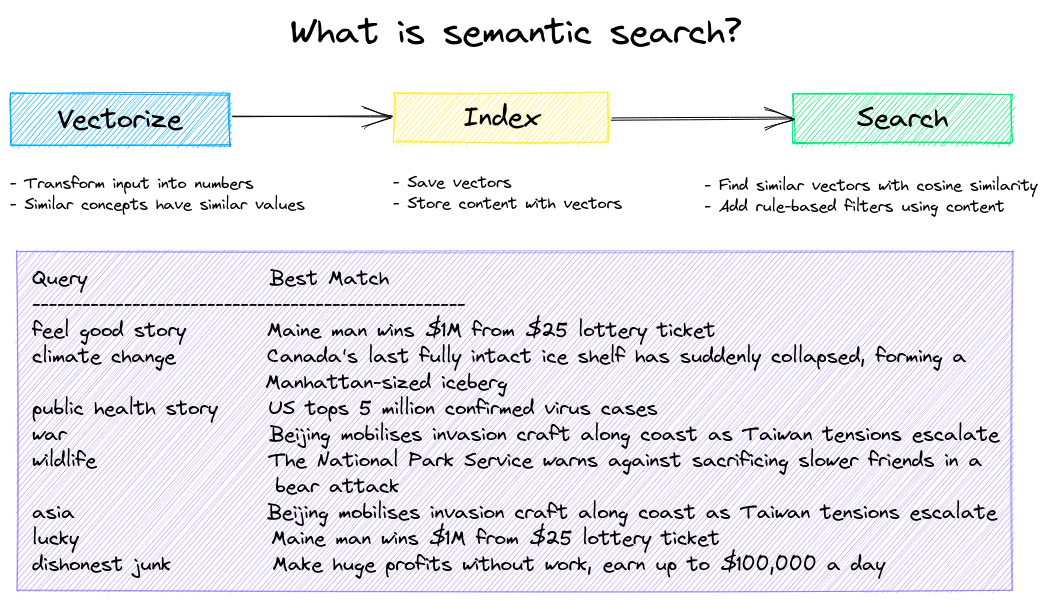

Traditional search systems use keywords to find data. Semantic search has an understanding of natural language and identifies results that have the same meaning, not necessarily the same keywords.
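To make the contrast concrete, here is a minimal sketch in plain Python. The three-dimensional vectors are hand-written stand-ins for what an embedding model would produce. They are assumptions for illustration only, not output from txtai or any real model, and the helper names (`keyword_search`, `semantic_search`) are hypothetical.

```python
import math

# Hypothetical, hand-written "embeddings". A real system would compute these
# with a language model; the numbers here only illustrate the idea.
VECTORS = {
    "Correct": [0.9, 0.1, 0.0],
    "Not what we hoped": [-0.7, 0.2, 0.1],
    "positive": [0.8, 0.2, 0.1],
}

def keyword_search(query, documents):
    """Return documents sharing at least one lowercase token with the query."""
    terms = set(query.lower().split())
    return [doc for doc in documents if terms & set(doc.lower().split())]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def semantic_search(query, documents):
    """Rank documents by cosine similarity to the query vector, best first."""
    scored = [(doc, cosine(VECTORS[query], VECTORS[doc])) for doc in documents]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

documents = ["Correct", "Not what we hoped"]
keyword_hits = keyword_search("positive", documents)    # no shared words
semantic_hits = semantic_search("positive", documents)  # ranked by meaning
```

The keyword search finds nothing because neither document contains the word "positive", while the vector comparison still ranks "Correct" first.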

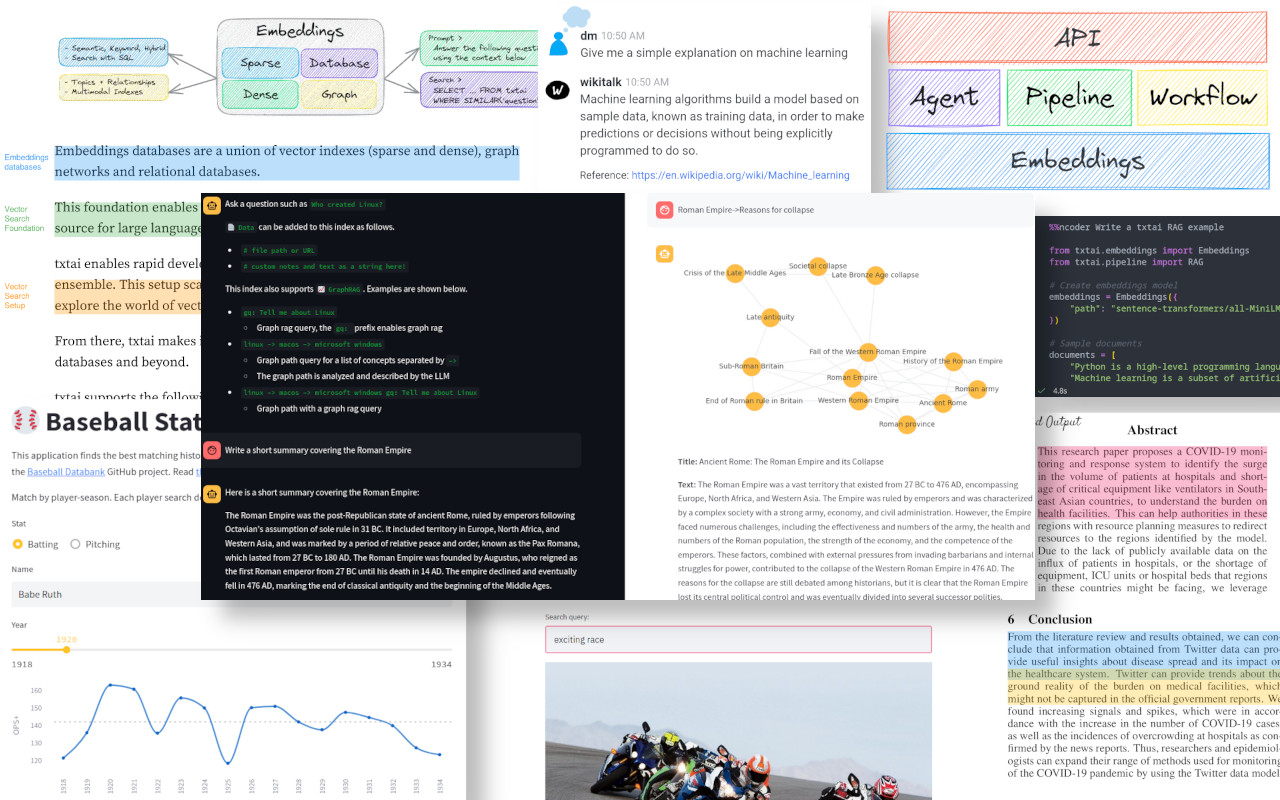

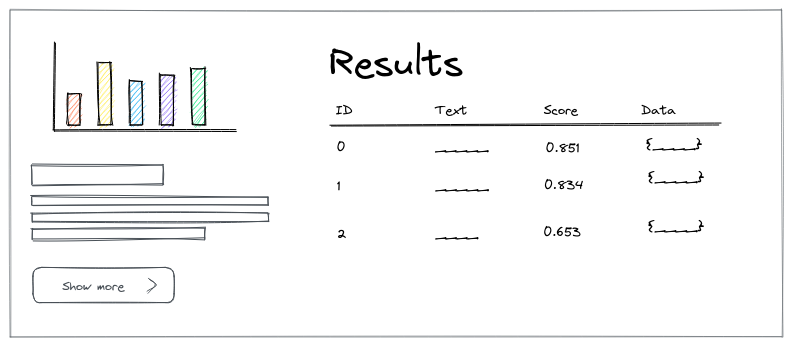

txtai builds embeddings databases, which are a union of vector indexes and relational databases. This enables vector search with SQL. Embeddings databases can stand on their own and/or serve as a powerful knowledge source for large language model (LLM) prompts.
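The idea of pairing a vector index with a relational database can be sketched with the standard library alone. This is a toy illustration under stated assumptions, not txtai's implementation: the vectors are hand-written, the `docs` table and the `similarity` SQL function are hypothetical names, and a dictionary stands in for a real vector index.

```python
import math
import sqlite3

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Relational side: row ids, text and any other filterable columns
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE docs (id INTEGER PRIMARY KEY, text TEXT, lang TEXT)")
rows = [(0, "Correct", "en"), (1, "Not what we hoped", "en"), (2, "Richtig", "de")]
db.executemany("INSERT INTO docs VALUES (?, ?, ?)", rows)

# Vector side: hypothetical hand-written vectors keyed by the same row ids
index = {0: [0.9, 0.1, 0.0], 1: [-0.7, 0.2, 0.1], 2: [0.85, 0.15, 0.0]}
query_vector = [0.8, 0.2, 0.1]  # stand-in for the embedding of "positive"

# Expose the vector score to SQL so one statement can both rank and filter
db.create_function("similarity", 1, lambda rowid: cosine(index[rowid], query_vector))

results = db.execute(
    "SELECT id, text, similarity(id) AS score FROM docs "
    "WHERE lang = 'en' ORDER BY score DESC LIMIT 1"
).fetchall()
```

Here the `WHERE` clause filters on an ordinary column while the ordering comes from vector similarity, which is the combination the paragraph above describes.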

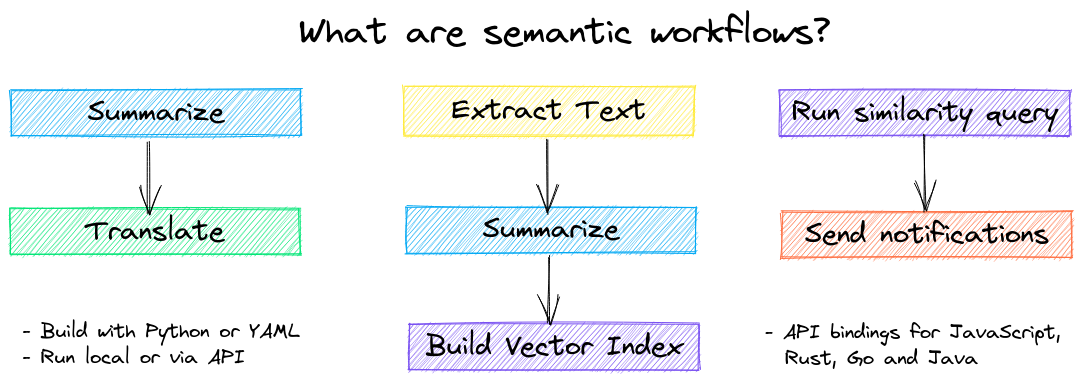

Semantic workflows connect language models together to build intelligent applications.

Integrate conversational search, retrieval augmented generation (RAG), LLM chains, automatic summarization, transcription, translation and more.
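As a rough mental model, a workflow can be thought of as a list of callables applied in order to a batch of inputs. The sketch below is plain Python and does not use txtai's workflow classes; `run_workflow`, `summarize` and `uppercase` are hypothetical stand-ins for model-backed steps.

```python
def run_workflow(tasks, elements):
    """Pass the whole batch through each task in order."""
    for task in tasks:
        elements = task(elements)
    return elements

# Hypothetical stand-ins for model-backed pipelines
def summarize(texts):
    # Keep only the first sentence of each text
    return [text.split(".")[0].strip() + "." for text in texts]

def uppercase(texts):
    # Placeholder for a second model step such as translation
    return [text.upper() for text in texts]

output = run_workflow(
    [summarize, uppercase],
    ["txtai builds embeddings databases. It also runs workflows."],
)
```

Each step only needs to accept and return a list, so steps can be reordered or swapped without changing the runner.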

Summary of txtai features:

- 🔎 Vector search with SQL, object storage, topic modeling, graph analysis, multiple vector index backends (Faiss, Annoy, Hnswlib) and support for external vector databases

- 📄 Create embeddings for text, documents, audio, images and video

- 💡 Pipelines powered by language models that run LLM prompts, question-answering, labeling, transcription, translation, summarization and more

- ↪️️ Workflows to join pipelines together and aggregate business logic. txtai processes can be simple microservices or multi-model workflows.

- ⚙️ Build with Python or YAML. API bindings available for JavaScript, Java, Rust and Go.

- ☁️ Cloud-native architecture that scales out with container orchestration systems (e.g. Kubernetes)

txtai is built with Python 3.8+, Hugging Face Transformers, Sentence Transformers and FastAPI.

The following applications are powered by txtai.

| Application | Description |
|---|---|
| txtchat | Conversational search and workflows for all |
| paperai | Semantic search and workflows for medical/scientific papers |
| codequestion | Semantic search for developers |
| tldrstory | Semantic search for headlines and story text |

In addition to this list, there are also many other open-source projects, published research and closed proprietary/commercial projects that have built on txtai in production.

New vector databases, LLM frameworks and everything in between are sprouting up daily. Why build with txtai?

```python
# Get started in a couple lines
from txtai.embeddings import Embeddings

embeddings = Embeddings()
embeddings.index(["Correct", "Not what we hoped"])
embeddings.search("positive", 1)
#[(0, 0.29862046241760254)]
```

- Built-in API makes it easy to develop applications using your programming language of choice

```yaml
# app.yml
embeddings:
    path: sentence-transformers/all-MiniLM-L6-v2
```

```bash
CONFIG=app.yml uvicorn "txtai.api:app"
curl -X GET "http://localhost:8000/search?query=positive"
```

- Run local - no need to ship data off to disparate remote services

- Work with micromodels all the way up to large language models (LLMs)

- Low footprint - install additional dependencies and scale up when needed

- Learn by example - notebooks cover all available functionality
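The curl request from the list above could also be issued from Python. The following is a minimal sketch using only the standard library; it assumes the API is running locally on port 8000 as in the curl example and that the response body is JSON. The helper names `search_url` and `search` are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

def search_url(base, query):
    """Build the search URL, percent-encoding the query string."""
    return f"{base}/search?{urlencode({'query': query})}"

def search(base, query):
    """Issue the GET request and decode the response (assumed to be JSON)."""
    with urlopen(search_url(base, query)) as response:
        return json.loads(response.read().decode("utf-8"))

# Building the URL needs no running server
url = search_url("http://localhost:8000", "positive")

# With the API started as shown above, a call would look like:
# results = search("http://localhost:8000", "positive")
```

Encoding the query with `urlencode` keeps multi-word or punctuated queries valid in the URL.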

The easiest way to install is via pip and PyPI:

```
pip install txtai
```

Python 3.8+ is supported. Using a Python virtual environment is recommended.

See the detailed install instructions for more information covering optional dependencies, environment specific prerequisites, installing from source, conda support and how to run with containers.

An abbreviated list of example notebooks and applications that give an overview of txtai is shown below. See the documentation for the full set of examples.

Build semantic/similarity/vector/neural search applications.

| Notebook | Description |
|---|---|
| Introducing txtai | Overview of the functionality provided by txtai |
| Build an Embeddings index with Hugging Face Datasets | Index and search Hugging Face Datasets |
| Add semantic search to Elasticsearch | Add semantic search to existing search systems |
| Semantic Graphs | Explore topics, data connectivity and run network analysis |
| Embeddings in the Cloud | Load and use an embeddings index from the Hugging Face Hub |
| Customize your own embeddings database | Ways to combine vector indexes with relational databases |

Prompt-driven search, retrieval augmented generation (RAG), pipelines and workflows that interface with large language models (LLMs).

| Notebook | Description |
|---|---|
| Prompt-driven search with LLMs | Embeddings-guided and prompt-driven search with large language models (LLMs) |
| Prompt templates and task chains | Build model prompts and connect tasks together with workflows |

Transform data with language model backed pipelines.

| Notebook | Description |
|---|---|
| Extractive QA with txtai | Introduction to extractive question-answering with txtai |
| Apply labels with zero shot classification | Use zero shot learning for labeling, classification and topic modeling |
| Building abstractive text summaries | Run abstractive text summarization |
| Extract text from documents | Extract text from PDF, Office, HTML and more |
| Text to speech generation | Generate speech from text |
| Transcribe audio to text | Convert audio files to text |
| Translate text between languages | Streamline machine translation and language detection |
| Generate image captions and detect objects | Captions and object detection for images |

Efficiently process data at scale.

| Notebook | Description |
|---|---|
| Run pipeline workflows | Simple yet powerful constructs to efficiently process data |
| Workflow Scheduling | Schedule workflows with cron expressions |
| Push notifications with workflows | Generate and push notifications with workflows |

Train NLP models.

| Notebook | Description |
|---|---|
| Train a text labeler | Build text sequence classification models |
| Train a QA model | Build and fine-tune question-answering models |
| Train a language model from scratch | Build new language models |

A series of example applications built with txtai. Links to hosted versions on Hugging Face Spaces are also provided.

| Application | Description | |
|---|---|---|
| Basic similarity search | Basic similarity search example. Data from the original txtai demo. | 🤗 |
| Baseball stats | Match historical baseball player stats using vector search. | 🤗 |
| Book search | Book similarity search application. Index book descriptions and query using natural language statements. | Local run only |
| Image search | Image similarity search application. Index a directory of images and run searches to identify images similar to the input query. | 🤗 |
| Summarize an article | Summarize an article. Workflow that extracts text from a webpage and builds a summary. | 🤗 |
| Wiki search | Wikipedia search application. Queries Wikipedia API and summarizes the top result. | 🤗 |
| Workflow builder | Build and execute txtai workflows. Connect summarization, text extraction, transcription, translation and similarity search pipelines together to run unified workflows. | 🤗 |

See the table below for the current recommended models. These models all allow commercial use and offer a blend of speed and performance.

Models can be loaded from either a Hugging Face Hub path or a local directory. Model paths are optional; defaults are loaded when none is specified. For tasks with no recommended model, txtai uses the default models shown in the Hugging Face Tasks guide.

See the following links to learn more.

Full documentation on txtai including configuration settings for embeddings, pipelines, workflows, API and a FAQ with common questions/issues is available.

- Introducing txtai, semantic search and workflows built on Transformers

- Tutorial series on Hashnode | dev.to

- What's new in txtai 5.0 | 4.0

- Getting started with semantic search | workflows

- Run workflows to transform data and build semantic search applications with txtai

- Semantic search on the cheap

- Serverless vector search with txtai

- Insights from the txtai console

- The big and small of txtai

For those who would like to contribute to txtai, please see this guide.