Mingdeng Cao, Yanbo Fan, Yong Zhang, Jue Wang and Yujiu Yang.

[arXiv]

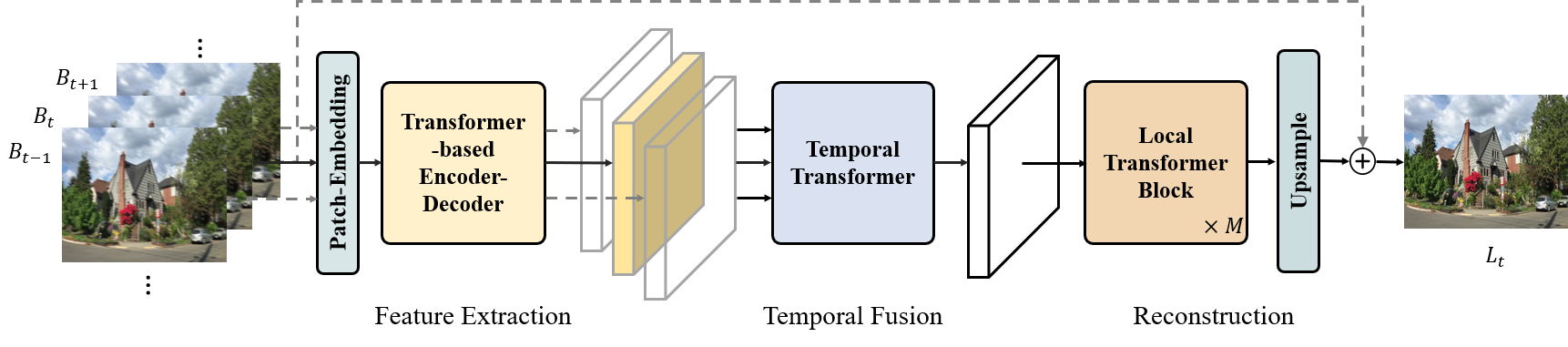

We propose Video Deblurring Transformer (VDTR), a simple yet effective model that takes advantage of the long-range and relation modeling characteristics of the Transformer for video deblurring. VDTR uses a pure Transformer design for both spatial and temporal modeling and obtains highly competitive performance on popular video deblurring benchmarks.

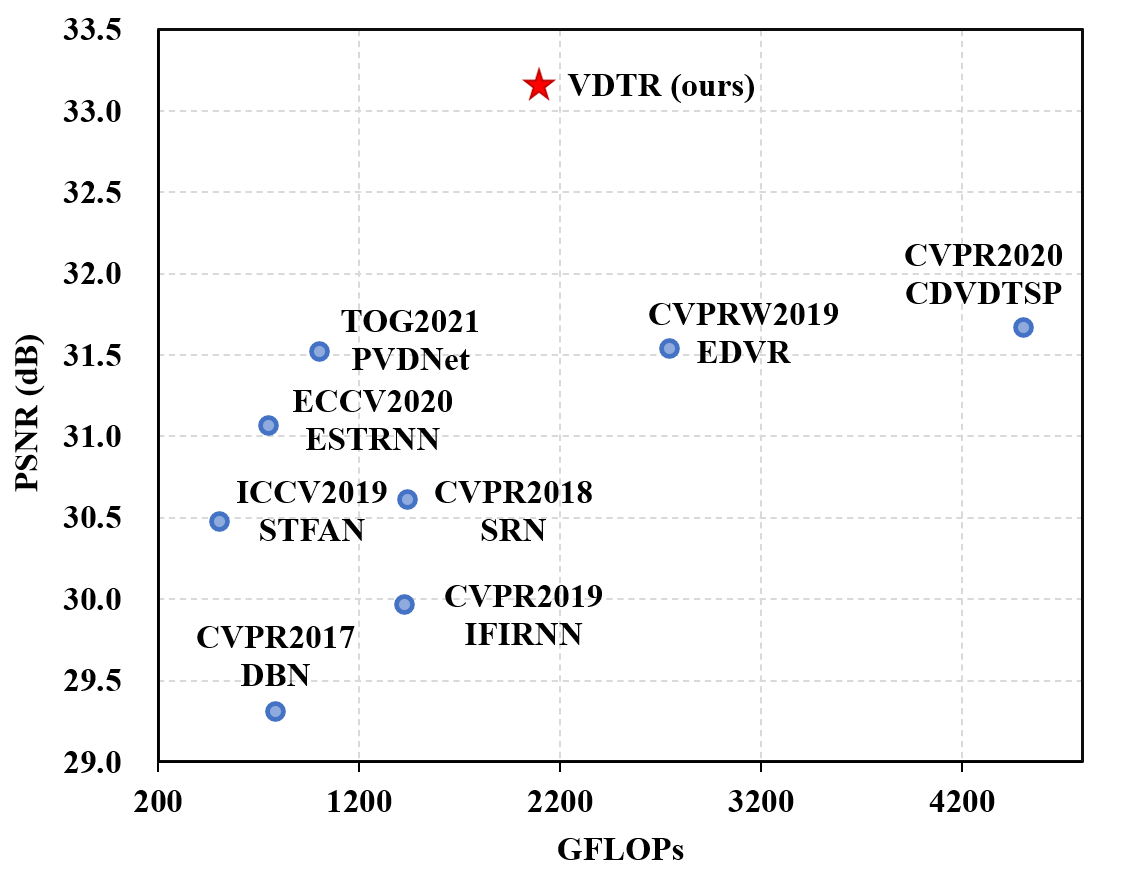

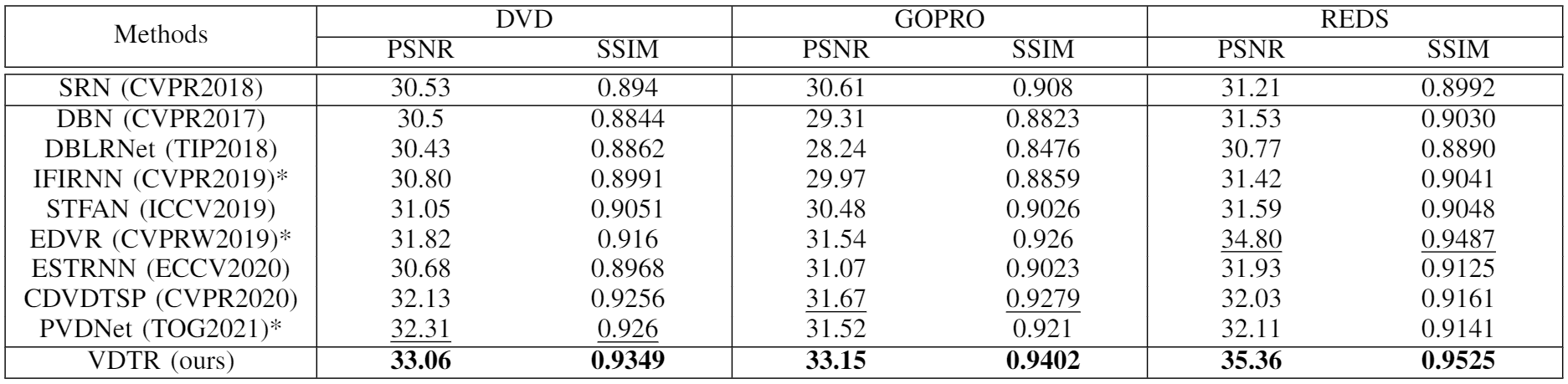

VDTR surpasses CNN-based state-of-the-art methods by more than 1.5 dB PSNR at moderate computational cost.

Spatio-temporal learning is significant for video deblurring, which is dominated by convolution-based methods. This paper presents VDTR, an effective Transformer-based model that makes the first attempt to adapt the Transformer for video deblurring. VDTR exploits the superior long-range and relation modeling capabilities of Transformer for both spatial and temporal modeling. However, it is challenging to design an appropriate Transformer-based model for video deblurring due to the high computational costs for high-resolution spatial modeling and the misalignment across frames for temporal modeling. To address these problems, VDTR advocates performing attention within non-overlapping windows and exploiting the hierarchical structure for long-range dependencies modeling. For frame-level spatial modeling, we propose an encoder-decoder Transformer that utilizes multi-scale features for deblurring. For multi-frame temporal modeling, we adapt the Transformer to fuse multiple spatial features efficiently. Compared with CNN-based methods, the proposed method achieves highly competitive results on both synthetic and real-world video deblurring benchmarks, including DVD, GOPRO, REDS and BSD. We hope such a pure Transformer-based architecture can serve as a powerful alternative baseline for video deblurring and other video restoration tasks.
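To make the cost argument for window attention concrete, here is a minimal pure-Python sketch that counts query-key pairs for global attention versus attention restricted to non-overlapping windows. It is not the VDTR implementation: the function names, the 64 x 64 feature map, and the window size of 8 are illustrative assumptions, not values taken from the paper.

```python
def partition_windows(height, width, window):
    """Split an H x W grid of token positions into non-overlapping windows.

    Assumes height and width are multiples of `window`; a real model
    would typically pad the feature map first.
    Returns a list of windows, each a list of (row, col) positions.
    """
    if height % window or width % window:
        raise ValueError("height and width must be divisible by window")
    windows = []
    for top in range(0, height, window):
        for left in range(0, width, window):
            windows.append([(r, c)
                            for r in range(top, top + window)
                            for c in range(left, left + window)])
    return windows


def attention_pairs_global(height, width):
    """Query-key pairs when every token attends to every token."""
    tokens = height * width
    return tokens * tokens


def attention_pairs_windowed(height, width, window):
    """Query-key pairs when attention is computed inside each window only."""
    return sum(len(w) ** 2 for w in partition_windows(height, width, window))


if __name__ == "__main__":
    h, w, m = 64, 64, 8  # illustrative sizes, not from the paper
    print(attention_pairs_global(h, w))       # 16777216
    print(attention_pairs_windowed(h, w, m))  # 262144
```

In this toy setting the windowed variant needs 64 times fewer query-key pairs. Tokens in different windows no longer interact directly, which is why a hierarchical (multi-scale) structure is needed to recover long-range dependencies.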

- Python 3.8

- PyTorch >= 1.5

- Clone and install SimDeblur

git clone https://github.com/ljzycmd/SimDeblur.git

# install the SimDeblur

cd SimDeblur

bash Install.sh

- Clone the codes of VDTR

git clone https://github.com/ljzycmd/VDTR.git

- Download and unzip the datasets

Then create the soft links of the datasets to the ./datasets folder.
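The linking step can also be scripted. The sketch below uses only the Python standard library; `link_dataset` is a hypothetical helper, not part of VDTR or SimDeblur, and the link name each config expects under ./datasets is an assumption you should check against the YAML files.

```python
import os
from pathlib import Path


def link_dataset(source_dir, datasets_root="./datasets", name=None):
    """Create datasets_root/<name> as a symlink pointing at source_dir.

    `name` defaults to the last component of source_dir. An existing
    entry with the same name is left untouched.
    """
    source = Path(source_dir).expanduser().resolve()
    if not source.is_dir():
        raise FileNotFoundError(f"dataset directory not found: {source}")
    root = Path(datasets_root)
    root.mkdir(parents=True, exist_ok=True)
    link = root / (name or source.name)
    if link.is_symlink() or link.exists():
        # Do not overwrite whatever is already there.
        return link
    os.symlink(source, link, target_is_directory=True)
    return link
```

For example, `link_dataset("/path/to/DVD")` would create `./datasets/DVD`, where `/path/to/DVD` stands for wherever the dataset was unzipped.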

- Run the training script

CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node=4 --master_port=10086 train.py ./configs/vdtr/vdtr_dvd.yaml --gpus=4

The training logs are saved in ./workdir/*.

- Run the testing script (single GPU, minimum requirement RTX 2080Ti)

python test.py ./configs/vdtr/vdtr_dvd.yaml $Checkpoint_path

The testing logs and frames are saved in ./workdir/*.

Pretrained checkpoints are listed below:

| Model | Dataset | Download |
|---|---|---|
| VDTR | DVD | Google drive |
| VDTR | GOPRO | Google drive |
| VDTR | BSD-1ms | Google drive |
| VDTR | BSD-2ms | Google drive |
| VDTR | BSD-3ms | Google drive |

VDTR achieves competitive PSNR and SSIM on both synthetic and real-world deblurring datasets.
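For readers less familiar with the metric, the following is a minimal sketch of PSNR on flat sequences of pixel values, plus a helper that translates a PSNR gain into the corresponding change in mean squared error. It is not the evaluation code used for the reported numbers; benchmark protocols differ in details such as color space and value range, and `max_value=255.0` is an assumption for 8-bit images.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """PSNR in dB between two equally sized sequences of pixel values."""
    if len(reference) != len(restored) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks for a PSNR improvement of gain_db."""
    return 10.0 ** (-gain_db / 10.0)


if __name__ == "__main__":
    # A 1.5 dB PSNR gain corresponds to roughly 29% lower MSE.
    print(round(mse_ratio_for_gain(1.5), 3))  # 0.708
```

Because PSNR is logarithmic in MSE, a fixed gain in dB always corresponds to the same relative reduction in error, regardless of the starting PSNR.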

Quantitative results on popular video deblurring datasets: DVD, GOPRO, REDS

Qualitative comparison to state-of-the-art video deblurring methods on GOPRO

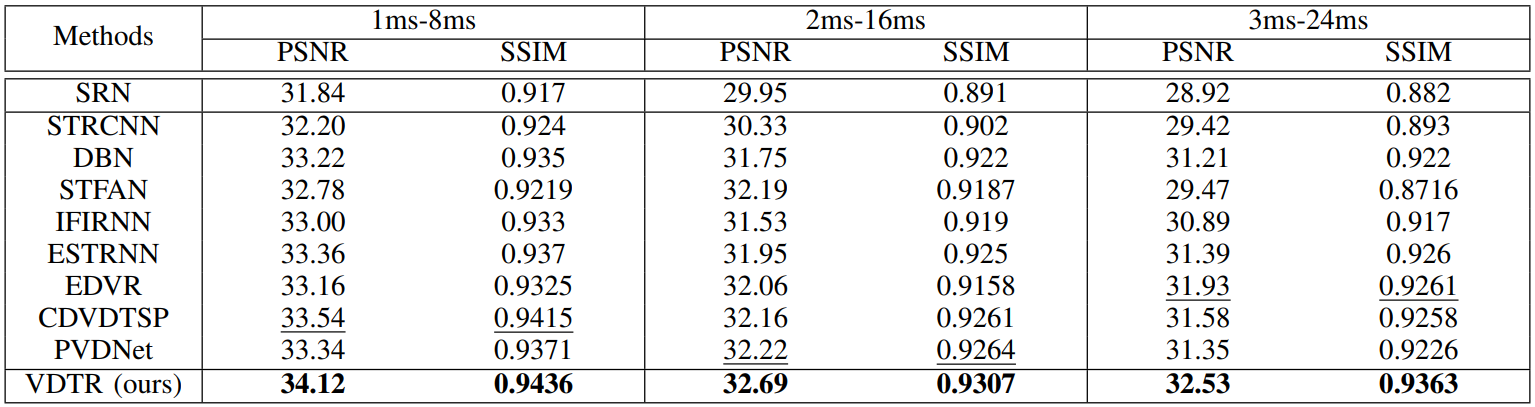

Quantitative results on real-world video deblurring datasets: BSD

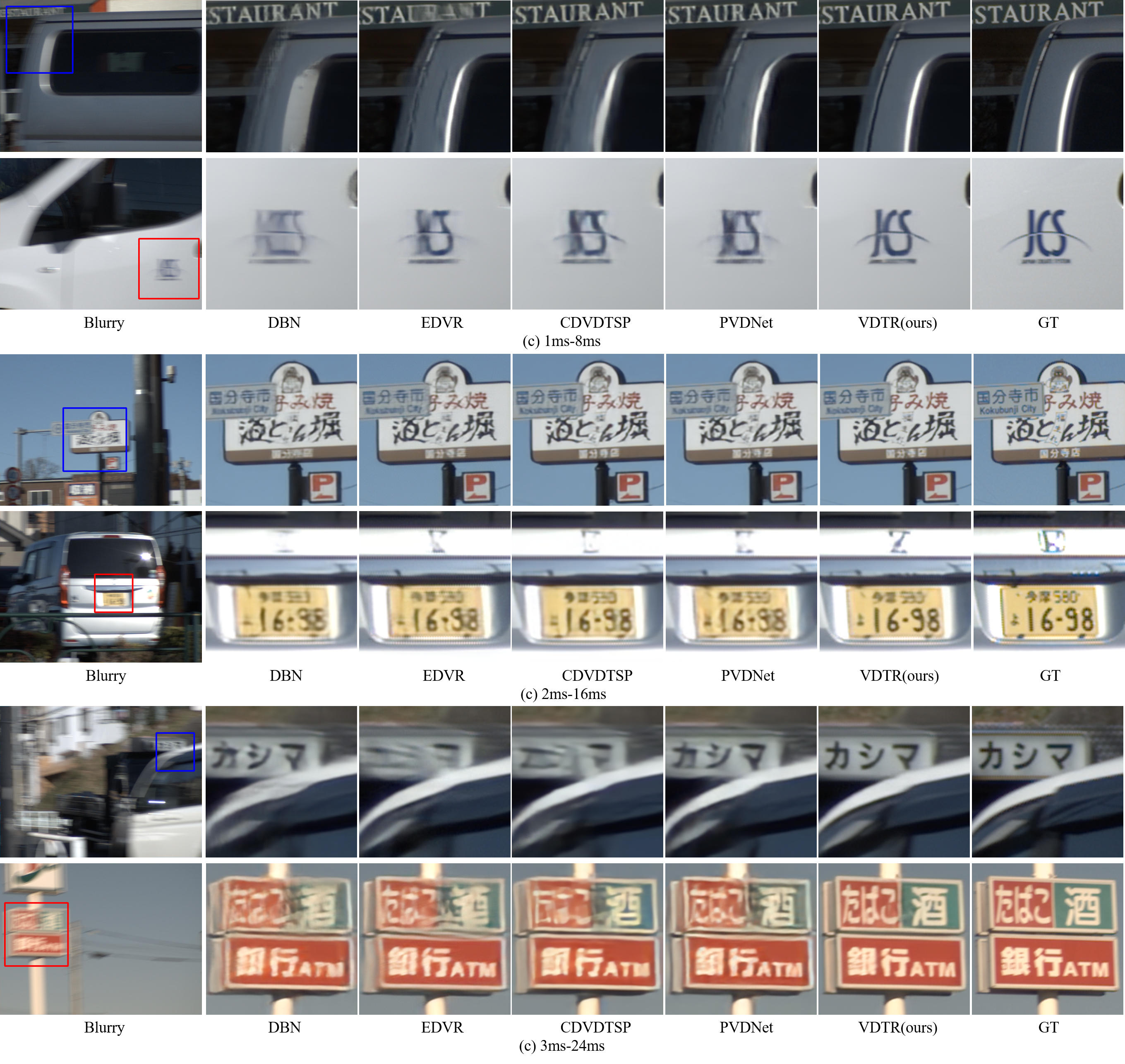

Qualitative comparison to state-of-the-art video deblurring methods on BSD

If the proposed model is useful for your research, please consider citing:

@article{cao2022vdtr,
  title = {VDTR: Video Deblurring with Transformer},
  author = {Mingdeng Cao and Yanbo Fan and Yong Zhang and Jue Wang and Yujiu Yang},
  journal = {arXiv:2204.08023},
  year = {2022}
}