This repository contains the code to reproduce the experiments in the paper "Policy Optimization in RLHF: The Impact of Out-of-preference Data".

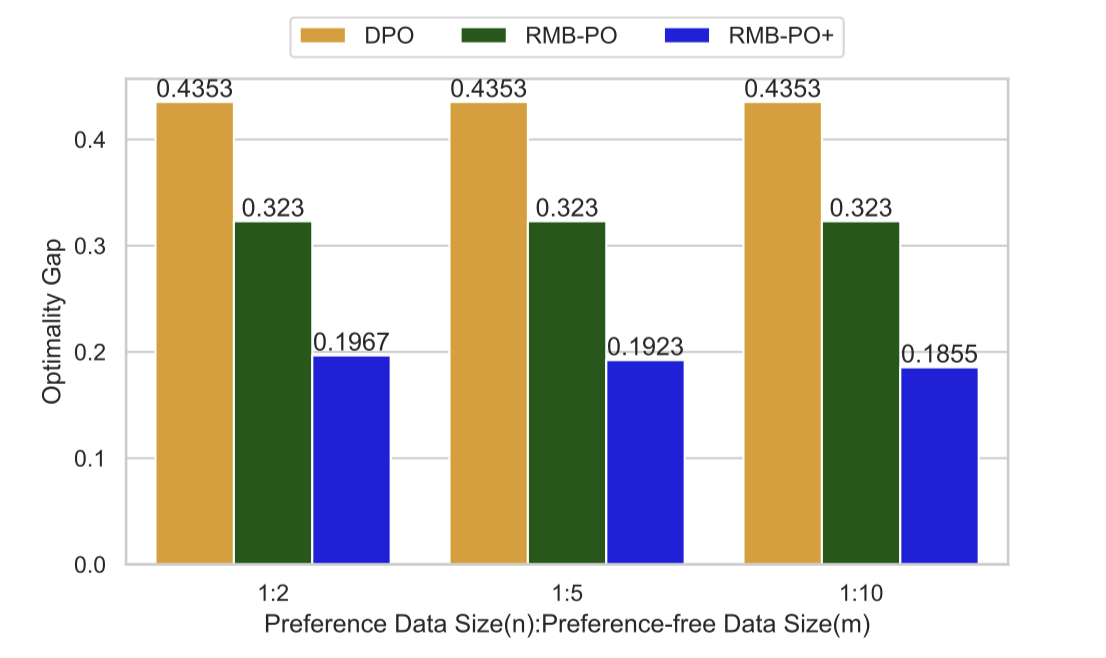

The experiments show that policy optimization with out-of-preference data is key to unlocking the reward model's generalization power.

The Python environment can be set up using Anaconda with the provided environment.yml file.

conda env create -f environment.yml

conda activate bandit

The linear bandit and neural bandit experiments can then be run with the provided scripts.

bash scripts/run_linear_bandit.sh

bash scripts/run_neural_bandit.sh

If you find this code helpful, please cite our paper using the following BibTeX entry.

@article{li2023policy,
  title = {Policy Optimization in RLHF: The Impact of Out-of-preference Data},
  author = {Li, Ziniu and Xu, Tian and Yu, Yang},
  journal = {arXiv preprint arXiv:2312.10584},
  year = {2023},
}