Yuchi Liu, Jaskirat Singh, Gaowen Liu, Ali Payani, Liang Zheng

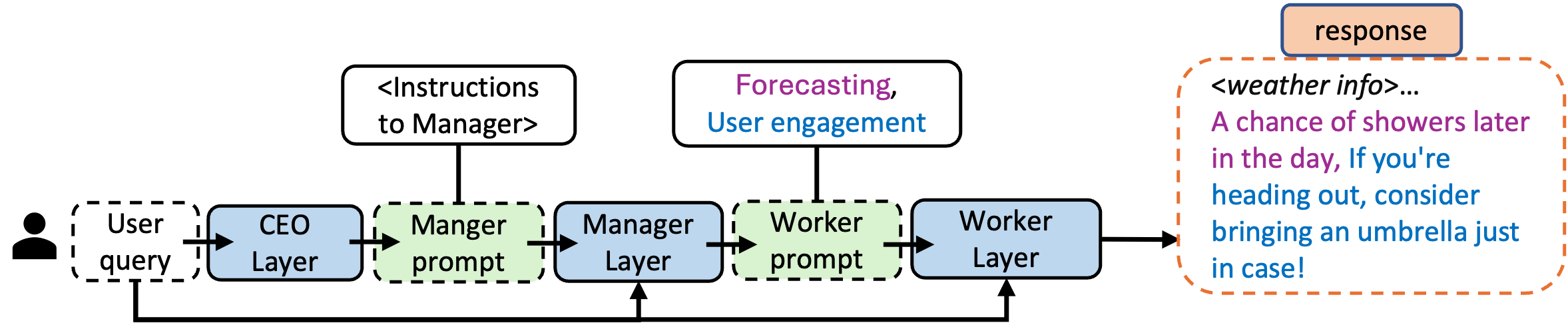

This repository contains the implementation of the Hierarchical Multi-Agent Workflow (HMAW) for prompt optimization in large language models (LLMs).
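As a rough illustration of what a hierarchical workflow means here, the sketch below passes a user query down a chain of layers: each upper layer writes instructions for the layer beneath it, and a final worker call answers the query using the prompt produced at the bottom of the chain. It is a simplified sketch, not the code in this repository. The `llm` callable, the role texts, and the function name `hierarchical_answer` are hypothetical; see `prompter.py` for the prompting methods the repository actually uses.

```python
from typing import Callable, Sequence

# Assumption: any function mapping a prompt string to a completion string,
# e.g. a thin wrapper around an OpenAI-compatible chat endpoint.
LLM = Callable[[str], str]

# Illustrative instructions for the upper layers (hypothetical, not taken from prompter.py).
UPPER_LAYERS = (
    "You are the CEO. Write high-level guidance for a manager whose team will answer the user query.",
    "You are the manager. Turn the guidance into a detailed prompt for a worker who will answer the user query.",
)


def hierarchical_answer(query: str, llm: LLM, layers: Sequence[str] = UPPER_LAYERS) -> str:
    """Pass the query down a chain of layers; the last layer's output becomes the worker's prompt."""
    guidance = ""
    for role in layers:
        # Each layer sees the user query plus whatever the layer above produced.
        guidance = llm(f"{role}\n\nUser query: {query}\n\nGuidance from above: {guidance}")
    # Worker layer: answer the query using the prompt written by the lowest upper layer.
    return llm(f"{guidance}\n\nUser query: {query}")
```

With two upper layers, one query costs three LLM calls. Passing a stub such as `lambda prompt: "ok"` as `llm` is a cheap way to inspect the prompts before wiring in a real model.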

Before running the scripts, make sure Python is installed along with the required packages. The provided requirements file pins the following versions:

- fire==0.5.0

- ipython==8.12.3

- numpy==1.26.4

- openai==1.30.4

- pandas==2.2.2

- setuptools==68.2.2

- tqdm==4.66.1

To install the required packages, execute the following command:

`pip install -r requirements.txt`

For installing and running the Mixtral model with vLLM, refer to the official vLLM documentation.
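To confirm that an environment matches the pinned versions listed above, you can compare them against the installed distributions using the standard-library `importlib.metadata` module. This is an optional helper, not part of the repository; the names `PINNED` and `check_pins` are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

# Pins copied from the requirements list in this README.
PINNED = {
    "fire": "0.5.0",
    "ipython": "8.12.3",
    "numpy": "1.26.4",
    "openai": "1.30.4",
    "pandas": "2.2.2",
    "setuptools": "68.2.2",
    "tqdm": "4.66.1",
}


def check_pins(pins: dict[str, str]) -> dict[str, str]:
    """Return {package: problem} for each package that is missing or installed at another version."""
    problems = {}
    for name, wanted in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            problems[name] = "not installed"
            continue
        if installed != wanted:
            problems[name] = f"installed {installed}, expected {wanted}"
    return problems


if __name__ == "__main__":
    issues = check_pins(PINNED)
    for pkg, msg in issues.items():
        print(f"{pkg}: {msg}")
    print("all pins satisfied" if not issues else f"{len(issues)} mismatch(es)")
```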

To use the provided scripts, organize your files according to the following directory structure:

├── data

│ ├── Education

│ ├── FED

│ ├── GSM8K

│ ├── ATLAS

│ └── CodeNet

├── log

│ ├── compare_ATLAS_HMAW_base_mixtral_gpt3.5.json

│ ├── response_ATLAS_HMAW_mixtral.json

│ ├── response_ATLAS_base_mixtral.json

│ ├── compare_Education_HMAW_base_mixtral_gpt3.5.json

│ ├── response_Education_HMAW_mixtral.json

│ ├── response_Education_base_mixtral.json

│ └── ...

├── eval_ATLAS.py

├── ...

├── eval_compare.py

├── eval_correctness.py

└── ...

- `compare_ATLAS_HMAW_base_mixtral_gpt3.5.json`: Contains the evaluator's preferences when comparing responses generated with HMAW prompts against responses to the basic user queries. The `mixtral` model is the LLM agent, and `gpt3.5` is the evaluator.
- `response_Education_HMAW_mixtral.json`: Contains responses generated with the HMAW method, using the `mixtral` model as the agent.
- The `log` directory contains detailed comparison and response logs for various models and methods.
- The root directory contains the evaluation scripts (`eval_ATLAS.py`, `eval_compare.py`, `eval_correctness.py`, etc.).
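The example file names suggest a naming pattern: `compare_{dataset}_{method A}_{method B}_{agent}_{evaluator}.json` and `response_{dataset}_{method}_{agent}.json`. The helper below parses names of that form. The pattern is inferred from the examples only, so treat it as an assumption and adjust it if other logs differ; it also assumes no field contains an underscore. The function name `parse_log_name` is hypothetical.

```python
import re
from typing import Optional

# Inferred from the example names in the log directory (an assumption, not a documented spec).
_COMPARE = re.compile(
    r"^compare_(?P<dataset>[^_]+)_(?P<method_a>[^_]+)_(?P<method_b>[^_]+)"
    r"_(?P<agent>[^_]+)_(?P<evaluator>[^_]+)\.json$"
)
_RESPONSE = re.compile(r"^response_(?P<dataset>[^_]+)_(?P<method>[^_]+)_(?P<agent>[^_]+)\.json$")


def parse_log_name(filename: str) -> Optional[dict]:
    """Split a log file name into its fields, or return None if it matches neither pattern."""
    for kind, pattern in (("compare", _COMPARE), ("response", _RESPONSE)):
        match = pattern.match(filename)
        if match:
            return {"kind": kind, **match.groupdict()}
    return None
```

For instance, `parse_log_name("response_ATLAS_base_mixtral.json")` yields the dataset `ATLAS`, method `base`, and agent `mixtral`, which is handy for grouping logs by setting.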

Keeping the directory layout shown above ensures that the scripts can locate and process the data and log files correctly when evaluating and comparing model responses.
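A quick sanity check before running anything is to confirm that the expected folders and scripts exist. The sketch below hard-codes the paths from the directory tree above; `EXPECTED_DIRS`, `EXPECTED_FILES`, and `missing_paths` are hypothetical names, and the lists should be extended if your checkout contains more scripts.

```python
from pathlib import Path

# Paths taken from the directory tree in this README.
EXPECTED_DIRS = ["data/Education", "data/FED", "data/GSM8K", "data/ATLAS", "data/CodeNet", "log"]
EXPECTED_FILES = ["eval_ATLAS.py", "eval_compare.py", "eval_correctness.py"]


def missing_paths(root: str = ".") -> list[str]:
    """Return the expected directories and files that do not exist under root."""
    base = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (base / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (base / f).is_file()]
    return missing


if __name__ == "__main__":
    gaps = missing_paths()
    print("layout OK" if not gaps else "missing: " + ", ".join(gaps))
```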

To execute prompt optimization and response generation, use the following command:

`python eval_{dataset}.py`

Replace `{dataset}` with one of the following dataset names: `ATLAS`, `education`, `fed`, `codenet`, or `GSM`.
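To generate responses for every dataset in one go, a small driver can build the `python eval_{dataset}.py` command for each name listed above. This is a hypothetical convenience script, not part of the repository. It prints the commands by default and only executes them when `dry_run=False`, and it uses `sys.executable` so the scripts run with the current interpreter.

```python
import subprocess
import sys

# Dataset names as listed in this README; the script names follow eval_{dataset}.py.
DATASETS = ("ATLAS", "education", "fed", "codenet", "GSM")


def build_commands(datasets=DATASETS) -> list[list[str]]:
    """One command per dataset, run with the current Python interpreter."""
    return [[sys.executable, f"eval_{name}.py"] for name in datasets]


def run_all(dry_run: bool = True) -> None:
    for cmd in build_commands():
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the loop at the first script that exits with an error.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```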

Testing on ATLAS, education, fed, and codenet:

Run the following command:

`python eval_compare.py`

Update the related file names in `eval_compare.py` to test under different settings.
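`eval_compare.py` records which response the evaluator prefers. This README does not describe the output format, so the sketch below assumes a hypothetical compare log: a JSON list of records, each with a `preference` field naming the preferred method (or `tie`). It reduces those labels to per-label rates. Check the actual keys in your `compare_*.json` files before relying on it; `preference_rates` and `load_preferences` are hypothetical names.

```python
import json
from collections import Counter


def preference_rates(labels) -> dict:
    """Fraction of comparisons won by each label (e.g. a method name or "tie")."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: n / total for label, n in counts.items()}


def load_preferences(path: str, key: str = "preference") -> list:
    """Read preference labels from a compare log.

    Assumption: the file is a JSON list of objects, each carrying `key`.
    The schema actually written by eval_compare.py may differ.
    """
    with open(path, encoding="utf-8") as f:
        records = json.load(f)
    return [record[key] for record in records]
```

Under that assumed schema, `preference_rates(load_preferences("log/compare_ATLAS_HMAW_base_mixtral_gpt3.5.json"))` would give the share of comparisons each method won.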

Testing on GSM:

Run the following command:

`python eval_correctness.py`

For details on how to change the prompting method used in response generation, refer to `prompter.py`.
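`eval_correctness.py` scores answers on GSM8K. One common way to score GSM8K, shown here as a hedged sketch rather than the repository's exact logic, is to read the gold answer after the `####` marker that ends each reference solution in the original GSM8K release, then compare it with the last number in the model's response. The last-number heuristic and the function names are assumptions.

```python
import re

# Integers or decimals, optionally negative; commas are stripped before matching.
_NUMBER = re.compile(r"-?\d+(?:\.\d+)?")


def gold_answer(solution: str) -> str:
    """GSM8K reference solutions end with a line of the form '#### 72'."""
    return solution.split("####")[-1].strip().replace(",", "")


def predicted_answer(response: str):
    """Heuristic (an assumption): treat the last number in the response as the answer."""
    numbers = _NUMBER.findall(response.replace(",", ""))
    return numbers[-1] if numbers else None


def accuracy(pairs) -> float:
    """pairs: iterable of (model_response, reference_solution) tuples."""
    pairs = list(pairs)
    if not pairs:
        return 0.0
    correct = 0
    for response, solution in pairs:
        pred = predicted_answer(response)
        if pred is not None and float(pred) == float(gold_answer(solution)):
            correct += 1
    return correct / len(pairs)
```

Taking the last number is brittle when a model restates intermediate values after its final answer; asking the model to end with a fixed marker makes extraction more reliable.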

If you find our code helpful, please consider citing our paper:

@misc{liu2024hierarchical,

title={Towards Hierarchical Multi-Agent Workflows for Zero-Shot Prompt Optimization},

author={Yuchi Liu and Jaskirat Singh and Gaowen Liu and Ali Payani and Liang Zheng},

year={2024},

eprint={2405.20252},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

This project is open source and available under the MIT License.