Official PyTorch implementation of our paper

- Title: Sparse-Tuning: Adapting Vision Transformers with Efficient Fine-tuning and Inference

- Authors: Ting Liu, Xuyang Liu, Liangtao Shi, Zunnan Xu, Siteng Huang, Yi Xin, Quanjun Yin

- Institutes: National University of Defense Technology, Sichuan University, Hefei University of Technology, Tsinghua University, Zhejiang University, and Nanjing University

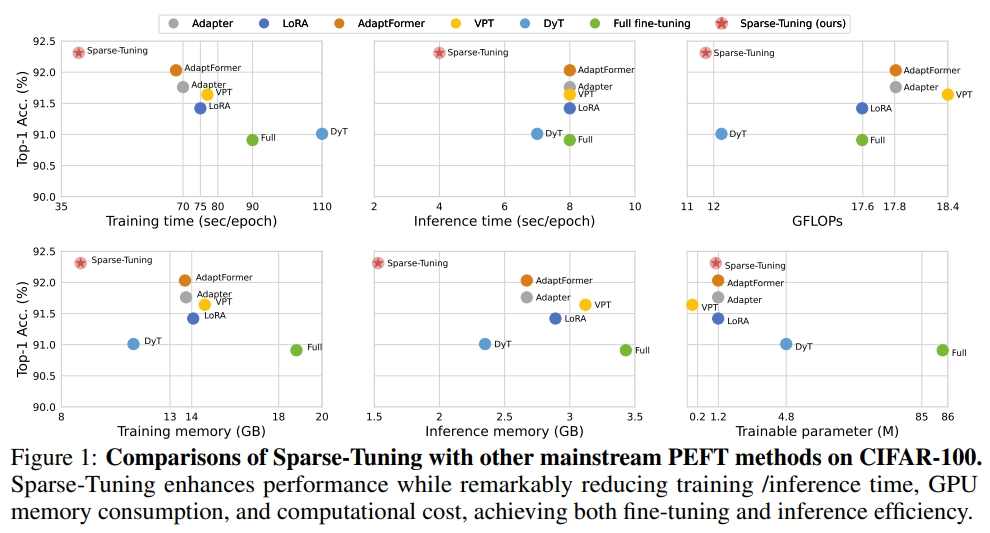

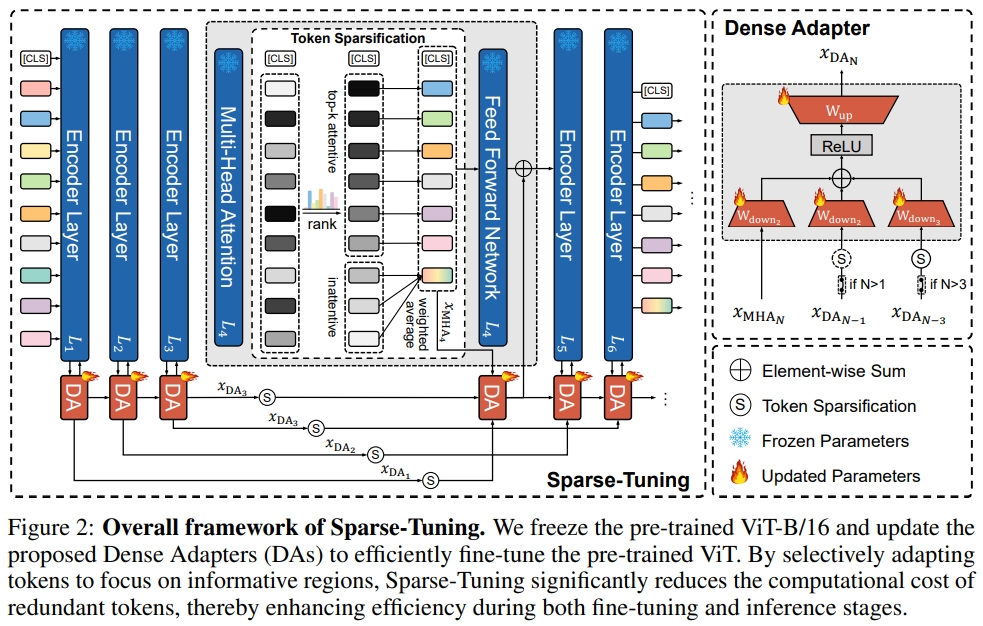

In this paper, we propose Sparse-Tuning, a novel tuning paradigm that substantially enhances both fine-tuning and inference efficiency for pre-trained ViT models. Sparse-Tuning efficiently fine-tunes the pre-trained ViT by sparsely preserving the informative tokens and merging redundant ones, enabling the ViT to focus on the foreground while reducing computational costs on background regions in the images. To accurately distinguish informative tokens from uninformative ones, we introduce a tailored Dense Adapter, which establishes dense connections across different encoder layers in the ViT, thereby enhancing the representational capacity and quality of token sparsification. Empirical results on VTAB-1K, three complete image datasets, and two complete video datasets demonstrate that Sparse-Tuning reduces the GFLOPs to 62%-70% of the original ViT-B while achieving state-of-the-art performance.
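To make the idea of "preserving the informative tokens and merging redundant ones" concrete, here is a minimal, framework-free sketch in plain Python. It is an illustration only, not the released implementation: the function name `sparsify_tokens`, the `keep_ratio` parameter, and the score-weighted averaging used for merging are all assumptions made for this example. The per-token importance scores are taken as given, and how they are computed is outside this sketch. A real implementation would operate on batched PyTorch tensors inside the ViT encoder.

```python
import math
from typing import List, Sequence

Token = List[float]


def sparsify_tokens(
    tokens: Sequence[Token],
    scores: Sequence[float],
    keep_ratio: float,
) -> List[Token]:
    """Keep the highest-scoring tokens and merge the rest into one token.

    Hypothetical helper for illustration; names and merging rule are assumptions.
    """
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")

    num_keep = max(1, math.ceil(len(tokens) * keep_ratio))

    # Rank token indices by importance, highest first.
    order = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    keep_idx = sorted(order[:num_keep])  # restore the original token order
    drop_idx = order[num_keep:]

    kept = [list(tokens[i]) for i in keep_idx]
    if not drop_idx:
        return kept

    # Merge the remaining tokens into one token via a score-weighted average
    # (falls back to a plain average if all their scores are zero).
    total = sum(scores[i] for i in drop_idx)
    if total > 0:
        weights = [scores[i] / total for i in drop_idx]
    else:
        weights = [1.0 / len(drop_idx)] * len(drop_idx)

    dim = len(tokens[0])
    merged = [
        sum(w * tokens[i][d] for w, i in zip(weights, drop_idx))
        for d in range(dim)
    ]
    return kept + [merged]


# Four tokens in, three out: two kept plus one merged token.
reduced = sparsify_tokens(
    tokens=[[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [4.0, 0.0]],
    scores=[0.5, 0.1, 0.3, 0.1],
    keep_ratio=0.5,
)
```

Merging the low-scoring tokens into a single token, rather than discarding them, keeps a summary of the background while shortening the sequence that later layers must process, which is where the compute saving comes from.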

📌 We confirm that the relevant code and implementation details will be uploaded soon. Please be patient.

Please consider citing our paper in your publications if our findings help your research.

@article{liu2024sparse,
  title={{Sparse-Tuning}: Adapting Vision Transformers with Efficient Fine-tuning and Inference},
  author={Liu, Ting and Liu, Xuyang and Shi, Liangtao and Xu, Zunnan and Huang, Siteng and Xin, Yi and Yin, Quanjun},
  journal={arXiv preprint arXiv:2405.14700},
  year={2024}
}

For any questions about our paper or code, please contact Ting Liu or Xuyang Liu.