This is the PyTorch implementation of Universal Source Separation with Weakly labelled Data [1]. The USS system automatically detects and separates sound classes in a real recording. It can separate up to hundreds of sound classes organized in a hierarchical ontology structure, and it is trained only on the weakly labelled AudioSet dataset. Here is a demo:

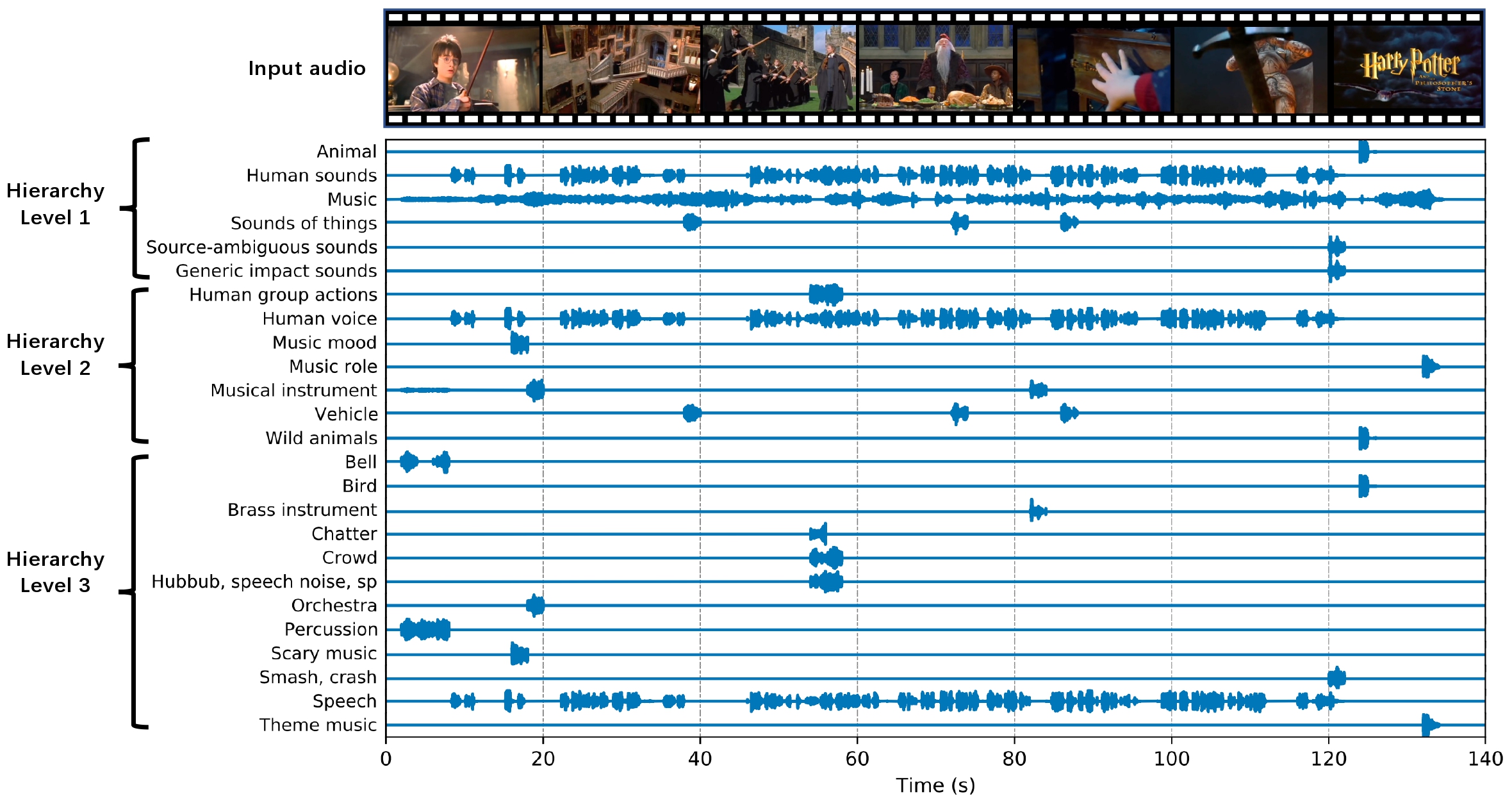

Fig. The hierarchical separation result of the trailer of Harry Potter and the Sorcerer's Stone. Copyright: https://www.youtube.com/watch?v=VyHV0BRtdxo

Prepare environment (optional)

conda create -n uss python=3.8

conda activate uss

Install the package

pip install uss

Download the demo audio

wget -O "harry_potter.flac" "https://huggingface.co/RSNuts/Universal_Source_Separation/resolve/main/uss_material/harry_potter.flac"

Run separation on the audio

uss -i "harry_potter.flac"

Separate at selected hierarchy levels

uss -i "harry_potter.flac" --levels 1 2 3

Separate selected class IDs

uss -i "harry_potter.flac" --class_ids 0 1 2 3 4

Download query audios (optional)

wget -O "queries.zip" "https://huggingface.co/RSNuts/Universal_Source_Separation/resolve/main/uss_material/queries.zip"

unzip queries.zip

Do separation

uss -i "harry_potter.flac" --queries_dir "queries/speech"

Users can also git clone this repo and run the inference from within it. This gives users more flexibility to modify the inference code.

conda create -n uss python=3.8

conda activate uss

pip install -r requirements.txt

Download our pretrained checkpoint:

wget -O "pretrained.ckpt" "https://huggingface.co/RSNuts/Universal_Source_Separation/resolve/main/uss_material/ss_model%3Dresunet30%2Cquerynet%3Dat_soft%2Cdata%3Dfull%2Cdevices%3D8%2Cstep%3D1000000.ckpt"

Then perform the inference:

CUDA_VISIBLE_DEVICES=0 python3 uss/inference.py \
    --audio_path=./resources/harry_potter.flac \
    --levels 1 2 3 \
    --config_yaml="./scripts/train/ss_model=resunet30,querynet=at_soft,data=full.yaml" \
    --checkpoint_path="pretrained.ckpt"

Download the AudioSet dataset from the internet. The total size of AudioSet is around 1.1 TB. For reproducibility, our downloaded dataset can be accessed at: link: https://pan.baidu.com/s/13WnzI1XDSvqXZQTS-Kqujg, password: 0vc2. Users may download only the balanced set (10.36 GB, 1% of the full set) to train a baseline system.

The downloaded data looks like:

dataset_root
├── audios
│   ├── balanced_train_segments
│   │   └── ... (~20550 wavs, the number can be different from time to time)
│   ├── eval_segments
│   │   └── ... (~18887 wavs)
│   └── unbalanced_train_segments
│       ├── unbalanced_train_segments_part00
│       │   └── ... (~46940 wavs)
│       ...
│       └── unbalanced_train_segments_part40
│           └── ... (~39137 wavs)
└── metadata
    ├── balanced_train_segments.csv
    ├── class_labels_indices.csv
    ├── eval_segments.csv
    ├── qa_true_counts.csv
    └── unbalanced_train_segments.csv

Note that some videos may no longer be available on YouTube, so the number of files a user can download varies over time. Our downloaded version contains 20550 / 22160 of the balanced training subset, 1913637 / 2041789 of the unbalanced training subset, and 18887 / 20371 of the evaluation subset.
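To put these counts in perspective, the following minimal sketch computes the download coverage of each subset from the numbers quoted above. The names `SUBSETS` and `coverage` are illustrative only and are not part of this repo.

```python
# Clip counts quoted in the text above: (downloaded, total in the subset).
SUBSETS = {
    "balanced_train": (20550, 22160),
    "unbalanced_train": (1913637, 2041789),
    "eval": (18887, 20371),
}


def coverage(downloaded: int, total: int) -> float:
    """Return the fraction of a subset's clips that were downloaded."""
    return downloaded / total


for name, (downloaded, total) in SUBSETS.items():
    missing = total - downloaded
    print(f"{name}: {coverage(downloaded, total):.1%} downloaded, {missing} missing")
```

Running the same calculation on your own download gives a quick sense of how far it deviates from the version used here.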

Audio files in a subdirectory will be packed into an hdf5 file. There will be 1 balanced train + 41 unbalanced train + 1 evaluation hdf5 files in total.

./scripts/1_pack_waveforms_to_hdf5s.sh

The packed hdf5 files look like:

workspaces/uss
└── hdf5s
    └── waveforms (1.1 TB)
        ├── balanced_train.h5
        ├── eval.h5
        └── unbalanced_train
            ├── unbalanced_train_part00.h5
            ...
            └── unbalanced_train_part40.h5
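After packing, it can be useful to confirm that all 43 files (1 balanced train + 41 unbalanced train + 1 evaluation) exist. The sketch below only builds the expected file names from the layout shown above and reports the ones that are absent. The helper names `expected_hdf5_paths` and `missing_hdf5_files` are hypothetical and not part of this repo.

```python
from pathlib import Path
from typing import List

NUM_UNBALANCED_PARTS = 41  # part00 ... part40, as in the layout above


def expected_hdf5_paths(root: Path) -> List[Path]:
    """Build the 43 hdf5 paths expected under `root`."""
    paths = [root / "balanced_train.h5", root / "eval.h5"]
    paths += [
        root / "unbalanced_train" / f"unbalanced_train_part{i:02d}.h5"
        for i in range(NUM_UNBALANCED_PARTS)
    ]
    return paths


def missing_hdf5_files(root: Path) -> List[Path]:
    """Return the expected hdf5 paths that do not exist on disk."""
    return [p for p in expected_hdf5_paths(root) if not p.is_file()]


if __name__ == "__main__":
    root = Path("workspaces/uss/hdf5s/waveforms")
    for path in missing_hdf5_files(root):
        print(f"missing: {path}")
```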

Pack indexes into hdf5 files for balanced training.

./scripts/2_create_indexes.sh

The packed indexes files look like:

workspaces/uss
└── hdf5s
    └── indexes (3.0 GB)
        ├── balanced_train.h5
        ├── eval.h5
        └── unbalanced_train
            ├── unbalanced_train_part00.h5
            ...
            └── unbalanced_train_part40.h5
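The indexes tree mirrors the waveforms tree file for file, so comparing the two directories is a cheap sanity check. The following sketch assumes both directories sit under `workspaces/uss/hdf5s` as shown above. The function names are illustrative and not part of this repo.

```python
from pathlib import Path
from typing import Set, Tuple


def relative_h5_files(root: Path) -> Set[str]:
    """Collect all .h5 files under `root` as paths relative to `root`."""
    return {p.relative_to(root).as_posix() for p in root.rglob("*.h5")}


def compare_trees(waveforms_root: Path, indexes_root: Path) -> Tuple[Set[str], Set[str]]:
    """Return (files only in waveforms, files only in indexes)."""
    waveforms = relative_h5_files(waveforms_root)
    indexes = relative_h5_files(indexes_root)
    return waveforms - indexes, indexes - waveforms


if __name__ == "__main__":
    hdf5s = Path("workspaces/uss/hdf5s")
    no_index, no_waveform = compare_trees(hdf5s / "waveforms", hdf5s / "indexes")
    print("waveform files without an index file:", sorted(no_index))
    print("index files without a waveform file:", sorted(no_waveform))
```

Two empty sets mean every waveform file has a matching index file and vice versa.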

Create 100 2-second mixture and source pairs for each sound class to evaluate the separation result of that class. With 527 sound classes, there are 52,700 2-second pairs in total.

./scripts/3_create_evaluation_data.sh

The evaluation data look like:

workspaces/uss
└── evaluation
    └── audioset
        ├── 2s_segments_balanced_train.csv
        ├── 2s_segments_test.csv
        ├── 2s_segments_balanced_train
        │   ├── class_id=0
        │   │   └── ... (100 mixture + 100 clean)
        │   │...
        │   └── class_id=526
        │       └── ... (100 mixture + 100 clean)
        └── 2s_segments_test
            ├── class_id=0
            │   └── ... (100 mixture + 100 clean)
            │...
            └── class_id=526
                └── ... (100 mixture + 100 clean)
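A quick way to check the generated evaluation data is to count the files in each `class_id=N` directory. The sketch below assumes that every mixture and every clean source is stored as its own file, so a complete class directory holds 200 files. That assumption, and the helper names, are ours and are not confirmed by the scripts in this repo.

```python
from pathlib import Path
from typing import Dict, List

NUM_CLASSES = 527       # class_id=0 ... class_id=526
FILES_PER_CLASS = 200   # assumed: 100 mixture files + 100 clean files


def count_files_per_class(split_dir: Path) -> Dict[int, int]:
    """Count the files in each class_id=N directory (0 if the directory is absent)."""
    counts = {}
    for class_id in range(NUM_CLASSES):
        class_dir = split_dir / f"class_id={class_id}"
        if class_dir.is_dir():
            counts[class_id] = sum(1 for p in class_dir.iterdir() if p.is_file())
        else:
            counts[class_id] = 0
    return counts


def incomplete_classes(split_dir: Path) -> List[int]:
    """Return the class IDs whose directory does not hold FILES_PER_CLASS files."""
    counts = count_files_per_class(split_dir)
    return [cid for cid, n in counts.items() if n != FILES_PER_CLASS]


if __name__ == "__main__":
    split = Path("workspaces/uss/evaluation/audioset/2s_segments_test")
    print("incomplete classes:", incomplete_classes(split))
```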

Train the universal source separation system.

./scripts/4_train.sh

Or simply execute:

WORKSPACE="workspaces/uss"

CUDA_VISIBLE_DEVICES=0 python3 uss/train.py \
    --workspace=$WORKSPACE \
    --config_yaml="./scripts/train/ss_model=resunet30,querynet=at_soft,data=balanced.yaml"

To appear