This is the official PyTorch implementation of the paper "Metric3D: Towards Zero-shot Metric 3D Prediction from A Single Image" (Metric 3D).

Authors: Wei Yin¹*, Chi Zhang²*, Hao Chen³, Zhipeng Cai³, Gang Yu⁴, Kaixuan Wang¹, Xiaozhi Chen¹, Chunhua Shen³

@JUGGHM¹,⁵ will also maintain this project.

The champion of the 2nd Monocular Depth Estimation Challenge at CVPR 2023.

- Stronger models and tiny models
- Hugging Face
- `[2023/8/10]` Inference code, pretrained weights, and demo released.

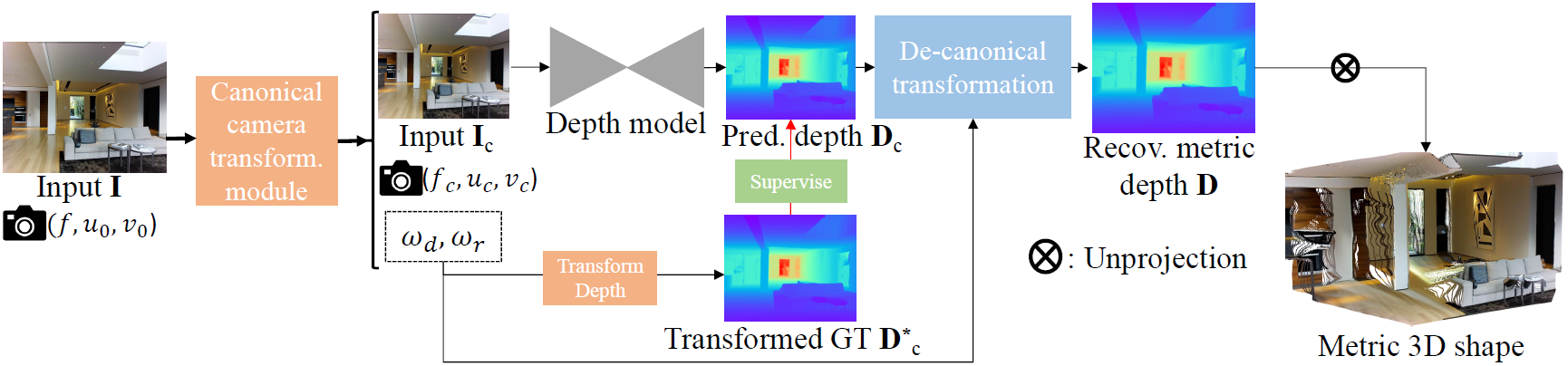

Existing monocular metric depth estimation methods can only handle a single camera model and are unable to perform mixed-data training due to metric ambiguity. Meanwhile, SOTA monocular methods trained on large mixed datasets achieve zero-shot generalization by learning affine-invariant depths, which cannot recover real-world metrics. In this work, we show that the key to a zero-shot single-view metric depth model lies in combining large-scale data training with resolving the metric ambiguity across various camera models. We propose a canonical camera space transformation module, which explicitly addresses the ambiguity problem and can be effortlessly plugged into existing monocular models. Equipped with our module, monocular models can be stably trained on over 8 million images with thousands of camera models, resulting in zero-shot generalization to in-the-wild images with unseen camera settings.


WITHOUT re-training the models on the target datasets, we obtain performance comparable to the SoTA supervised methods Adabins and NewCRFs.

| Method | Backbone | KITTI δ1 ↑ | KITTI δ2 ↑ | KITTI δ3 ↑ | KITTI AbsRel ↓ | KITTI RMSE ↓ | KITTI RMSE-log ↓ | NYU δ1 ↑ | NYU δ2 ↑ | NYU δ3 ↑ | NYU AbsRel ↓ | NYU log10 ↓ | NYU RMSE ↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Adabins | EfficientNet-B5 | 0.964 | 0.995 | 0.999 | 0.058 | 2.360 | 0.088 | 0.903 | 0.984 | 0.997 | 0.103 | 0.0444 | 0.364 |
| NewCRFs | SwinT-L | 0.974 | 0.997 | 0.999 | 0.052 | 2.129 | 0.079 | 0.922 | 0.983 | 0.994 | 0.095 | 0.041 | 0.334 |
| Ours (CSTM_label) | ConvNeXt-L | 0.964 | 0.993 | 0.998 | 0.058 | 2.770 | 0.092 | 0.944 | 0.986 | 0.995 | 0.083 | 0.035 | 0.310 |
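For reference, the columns above are the standard monocular depth metrics. The sketch below computes them with NumPy over pixels that have valid ground truth. The function name `depth_metrics` and the `min_depth` validity threshold are our own assumptions, and dataset-specific protocols behind the numbers above (evaluation crops, maximum-depth caps) are not reproduced here.

```python
import numpy as np


def depth_metrics(pred, gt, min_depth=1e-3):
    """Standard monocular depth metrics over pixels with valid ground truth.

    Hypothetical helper: `min_depth` marks which GT pixels count as valid and
    also clips predictions so the log-based metrics stay finite.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    valid = gt > min_depth
    pred = np.clip(pred[valid], min_depth, None)
    gt = gt[valid]

    # Threshold accuracy: fraction of pixels with max(pred/gt, gt/pred) < 1.25^k
    ratio = np.maximum(pred / gt, gt / pred)
    return {
        "delta1": float(np.mean(ratio < 1.25)),
        "delta2": float(np.mean(ratio < 1.25 ** 2)),
        "delta3": float(np.mean(ratio < 1.25 ** 3)),
        "abs_rel": float(np.mean(np.abs(pred - gt) / gt)),
        "rmse": float(np.sqrt(np.mean((pred - gt) ** 2))),
        "rmse_log": float(np.sqrt(np.mean((np.log(pred) - np.log(gt)) ** 2))),
        "log10": float(np.mean(np.abs(np.log10(pred) - np.log10(gt)))),
    }
```

The δ metrics are accuracies (higher is better) and the rest are errors (lower is better), which is what the ↑/↓ arrows in the table indicate.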

| | Image | Reconstruction | Pointcloud File |
|---|---|---|---|
| room | | | Download |
| Colosseum | | | Download |
| chess | | | Download |

All three images were downloaded from Unsplash and placed in the `data/wild_demo` directory.

Metric3D can also provide scale information for Droid-SLAM, helping to solve the scale drift problem and produce better trajectories. (Left: Droid-SLAM (mono). Right: Droid-SLAM with Metric3D.)

| Method | Modality | seq 00 | seq 02 | seq 05 | seq 06 | seq 08 | seq 09 | seq 10 |
|---|---|---|---|---|---|---|---|---|
| ORB-SLAM2 | Mono | 11.43/0.58 | 10.34/0.26 | 9.04/0.26 | 14.56/0.26 | 11.46/0.28 | 9.3/0.26 | 2.57/0.32 |
| Droid-SLAM | Mono | 33.9/0.29 | 34.88/0.27 | 23.4/0.27 | 17.2/0.26 | 39.6/0.31 | 21.7/0.23 | 7/0.25 |
| Droid+Ours | Mono | 1.44/0.37 | 2.64/0.29 | 1.44/0.25 | 0.6/0.2 | 2.2/0.3 | 1.63/0.22 | 2.73/0.23 |
| ORB-SLAM2 | Stereo | 0.88/0.31 | 0.77/0.28 | 0.62/0.26 | 0.89/0.27 | 1.03/0.31 | 0.86/0.25 | 0.62/0.29 |

Metric3D makes monocular SLAM scale-aware, like stereo systems.
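This README does not describe how Metric3D's depth is fed into Droid-SLAM. As a simpler illustration of how metric depth can pin down monocular scale, the sketch below uses median scaling, a common alternative technique and not the Droid+Ours pipeline: estimate one factor that maps up-to-scale SLAM depths onto Metric3D's metric depths, then apply it to the camera translations. Both function names are hypothetical.

```python
import numpy as np


def median_scale(slam_depth, metric_depth):
    """Estimate s such that s * slam_depth ~= metric_depth (median of per-pixel ratios)."""
    slam = np.asarray(slam_depth, dtype=np.float64)
    metric = np.asarray(metric_depth, dtype=np.float64)
    mask = (slam > 0) & (metric > 0)  # ignore pixels without valid depth in either map
    if not mask.any():
        raise ValueError("no pixels with valid depth in both maps")
    return float(np.median(metric[mask] / slam[mask]))


def rescale_translations(translations, scale):
    """Apply the recovered scale to an (N, 3) array of camera translations."""
    return np.asarray(translations, dtype=np.float64) * scale
```

The median keeps the estimate robust to outlier pixels. Re-estimating the factor per keyframe, rather than once per sequence, is one way to counter gradual scale drift.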

2011_09_30_drive_0028 / 2011_09_30_drive_0033 / 2011_09_30_drive_0034

videos - Bilibili (TODO)

2011_09_30_drive_0033 / 2011_09_30_drive_0034 / 2011_10_03_drive_0042


```bash
pip install -r requirements.txt
```

Or you could also try one of the following setups.

With Python 3.7 and PyTorch 1.10 (CUDA 11.1 wheels):

```bash
conda create -n metric3d python=3.7
conda activate metric3d
pip install torch==1.10.0+cu111 torchvision==0.11.0+cu111 torchaudio==0.10.0 -f https://download.pytorch.org/whl/torch_stable.html
pip install -r requirements.txt
pip install -U openmim
mim install mmengine
mim install "mmcv-full==1.3.17"
pip install "mmsegmentation==0.19.0"
```

With Python 3.8 and the latest PyTorch:

```bash
conda create -n metric3d python=3.8
conda activate metric3d
pip3 install torch torchvision torchaudio
pip install -r requirements.txt
pip install -U openmim
mim install mmengine
mim install "mmcv-full==1.7.1"
pip install "mmsegmentation==0.30.0"
pip install numpy==1.20.0
pip install scikit-image==0.18.0
```

With off-the-shelf depth datasets, we need to generate JSON annotations in a format compatible with this codebase, organized as follows:

```python
{
    'files': [
        {
            'rgb': 'data/kitti_demo/rgb/xxx.png',
            'depth': 'data/kitti_demo/depth/xxx.png',
            'depth_scale': 1000.0,  # the depth scale of the GT depth image
            'cam_in': [fx, fy, cx, cy],  # camera intrinsics
        },
        {
            ...
        },
        ...
    ]
}
```

To generate such annotations, please refer to the "Inference" section.
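Here is a minimal sketch of writing such an annotation file with Python's `json` module. The helper names (`build_annotation`, `save_annotation`) are hypothetical, and we assume the JSON file holds exactly the `{'files': [...]}` layout above. `data/gene_annos_kitti_demo.py` remains the reference for the exact keys the loader expects.

```python
import json
import os


def build_annotation(rgb_paths, depth_paths, intrinsics, depth_scale):
    """Pack per-image entries into the {'files': [...]} layout shown above."""
    if not (len(rgb_paths) == len(depth_paths) == len(intrinsics)):
        raise ValueError("rgb_paths, depth_paths and intrinsics must have equal length")
    files = []
    for rgb, depth, cam_in in zip(rgb_paths, depth_paths, intrinsics):
        files.append({
            'rgb': rgb,
            'depth': depth,
            'depth_scale': float(depth_scale),  # e.g. 256.0 for KITTI-style 16-bit PNGs
            'cam_in': [float(v) for v in cam_in],  # [fx, fy, cx, cy]
        })
    return {'files': files}


def save_annotation(annos, out_path):
    """Write the annotation dict to a JSON file, creating parent folders if needed."""
    parent = os.path.dirname(out_path)
    if parent:
        os.makedirs(parent, exist_ok=True)
    with open(out_path, 'w') as f:
        json.dump(annos, f, indent=2)
```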

We provide different config setups in `mono/configs`.

The intrinsics of the canonical camera are set as follows:

```python
canonical_space = dict(
    focal_length=1000.0,
),
```

where cx and cy are set to half of the image size. Do not adjust the canonical focal length; otherwise, the predicted metric scale will not be accurate.
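The role of the canonical focal length can be sketched as follows, based on our reading of the canonical camera space transformation described above (the label-scaling variant, CSTM_label): ground-truth depth from a camera with focal length f is rescaled by f_c / f (here f_c = 1000) for training, and a depth predicted in canonical space is mapped back by f / f_c. This is a sketch, not the repo's code; the function names are hypothetical, and we assume fx ≈ fy and use fx.

```python
CANONICAL_FOCAL = 1000.0  # mirrors canonical_space.focal_length in the config


def canonical_intrinsics(height, width, focal=CANONICAL_FOCAL):
    """Canonical camera: fixed focal length, principal point at the image center."""
    return [focal, focal, width / 2.0, height / 2.0]


def to_canonical_depth(depth, fx, focal=CANONICAL_FOCAL):
    """Rescale a metric depth (scalar or NumPy array) from a camera with focal fx into canonical space."""
    return depth * (focal / fx)


def to_metric_depth(canonical_depth, fx, focal=CANONICAL_FOCAL):
    """Map a depth predicted in canonical space back to the real camera's metric scale."""
    return canonical_depth * (fx / focal)
```

This is also why the canonical focal length must stay fixed: the network learns depths for a camera with f_c = 1000, so converting its output with a different f_c would rescale every prediction.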

Inference settings are defined as

```python
crop_size = (512, 1088),
```

where the images are resized and padded to `crop_size` and then fed into the model.
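A sketch of the resize-and-pad geometry, assuming an aspect-preserving resize that fits the image inside `crop_size`, followed by symmetric padding (the repo's exact padding placement and fill value may differ). Because resizing also scales the focal length, the intrinsics are updated with it. The helper names are hypothetical.

```python
def fit_and_pad(height, width, crop_size=(512, 1088)):
    """Return the resize scale, resized (h, w), and padding (top, bottom, left, right)."""
    crop_h, crop_w = crop_size
    scale = min(crop_h / height, crop_w / width)  # largest scale that still fits
    new_h, new_w = int(round(height * scale)), int(round(width * scale))
    pad_h, pad_w = crop_h - new_h, crop_w - new_w
    pad_top, pad_left = pad_h // 2, pad_w // 2
    return scale, (new_h, new_w), (pad_top, pad_h - pad_top, pad_left, pad_w - pad_left)


def adjust_intrinsics(cam_in, scale, pad_top, pad_left):
    """Resizing scales fx, fy, cx, cy; padding shifts the principal point."""
    fx, fy, cx, cy = cam_in
    return [fx * scale, fy * scale, cx * scale + pad_left, cy * scale + pad_top]
```

For example, a 375×1242 KITTI frame is resized to 329×1088 and padded with 91 rows on top and 92 at the bottom. The scaled fx is the focal length to use when converting the network's canonical-space output back to metric depth.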

| | Encoder | Decoder | Link |
|---|---|---|---|
| v1.0 | ConvNeXt-L | Hourglass-Decoder | Download |

More models are on the way...

- put the trained ckpt file `model.pth` in `weight/`.
- generate data annotations by following the code in `data/gene_annos_kitti_demo.py`, which includes 'rgb', 'intrinsic' (required), 'depth' (required), and 'depth_scale' (optional). Note that 'depth_scale' is the scale factor of the GT labels; e.g., to preserve precision, the KITTI GT depths are stored multiplied by 256.
- change the 'test_data_path' in `test_*.sh` to the `*.json` path.
- run `source test_kitti.sh` or `source test_nyu.sh`.
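To make 'depth_scale' concrete: a 16-bit depth PNG stores depth multiplied by the scale, so dividing recovers meters (with 256, the resolution is 1/256 m, about 3.9 mm). A minimal NumPy sketch, assuming the PNG has already been read into an integer array and that 0 marks pixels without GT (the KITTI convention); the helper names are hypothetical.

```python
import numpy as np


def decode_depth(raw, depth_scale):
    """Convert a stored integer depth map to metric depth plus a validity mask."""
    raw = np.asarray(raw)
    depth = raw.astype(np.float32) / float(depth_scale)
    valid = raw > 0  # 0 means "no measurement", not "zero meters"
    return depth, valid


def encode_depth(depth, depth_scale):
    """Inverse: metric depth -> uint16 values, rounded to the nearest representable step."""
    scaled = np.round(np.asarray(depth, dtype=np.float64) * depth_scale)
    return np.clip(scaled, 0, np.iinfo(np.uint16).max).astype(np.uint16)
```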

- put the trained ckpt file `model.pth` in `weight/`.
- change the 'test_data_path' in `test.sh` to the image folder path.
- run `source test.sh`.

Note: if you do not know the intrinsics, the intrinsic parameters are set arbitrarily, so the predicted depth is not metric. However, if you know the focal length of your images, please modify the following code in `mono/utils/custom_data.py` and set 'intrinsic' in the format [fx, fy, cx, cy].

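```python
import glob, os

def load_data(path: str):
    rgbs = glob.glob(path + '/*.jpg') + glob.glob(path + '/*.png')
    # 'intrinsic' is None by default; set it to [fx, fy, cx, cy] if the focal length is known
    data = [{'rgb': i, 'depth': None, 'intrinsic': None, 'filename': os.path.basename(i), 'folder': i.split('/')[-3]} for i in rgbs]
    return data
```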
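If all images in the folder come from one camera with known intrinsics, one way to follow the note above is to pass them in rather than editing the `None` by hand. This hypothetical variant (`load_data_with_intrinsic` is our name, not the repo's) keeps the same dict layout as `load_data`. Note that `'folder'` still assumes '/'-separated paths at least three levels deep.

```python
import glob
import os


def load_data_with_intrinsic(path, intrinsic=None):
    """Like load_data, but attaches the same [fx, fy, cx, cy] to every image."""
    if intrinsic is not None and len(intrinsic) != 4:
        raise ValueError("intrinsic must be [fx, fy, cx, cy]")
    rgbs = sorted(glob.glob(path + '/*.jpg') + glob.glob(path + '/*.png'))
    cam = [float(v) for v in intrinsic] if intrinsic is not None else None
    return [
        {
            'rgb': i,
            'depth': None,
            'intrinsic': None if cam is None else list(cam),  # a fresh list per image
            'filename': os.path.basename(i),
            'folder': i.split('/')[-3],
        }
        for i in rgbs
    ]
```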

Because the focal length is not properly set! Please find a proper focal length by modifying the code in `mono/utils/custom_data.py` yourself.
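Why the focal length matters for 3D reconstructions: each pixel (u, v) with depth z is back-projected with the pinhole model x = (u − cx)·z/fx, y = (v − cy)·z/fy, so a wrong fx or fy stretches or squashes the geometry. Every pixel also becomes one point, so large images produce very large point clouds. A minimal NumPy sketch (the function name and the `stride` subsampling option are hypothetical; the repo's own point-cloud export may differ):

```python
import numpy as np


def depth_to_pointcloud(depth, fx, fy, cx, cy, stride=1):
    """Back-project an HxW metric depth map to an (N, 3) array of XYZ points.

    `stride` > 1 keeps every stride-th pixel, a simple way to shrink large clouds.
    """
    depth = np.asarray(depth, dtype=np.float64)
    h, w = depth.shape
    v, u = np.mgrid[0:h:stride, 0:w:stride]  # pixel row (v) and column (u) indices
    z = depth[::stride, ::stride]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]  # drop pixels without a valid depth
```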

If the intrinsics are correct, please try different resolutions for `crop_size = (512, 1088)` in the config file. From our experience, you could try (544, 928), (768, 1088), (512, 992), (480, 1216), (1216, 1952), or other resolutions close to these. Generally, larger resolutions work better for driving scenarios and smaller ones for indoor scenes.

Because the images are too large! Use smaller ones instead.

First, make sure all black padding regions at the image boundaries are cropped out. Also note that Metric3D is not almighty: some objects (chandeliers, drones, ...) and camera views (aerial view, BEV, ...) rarely occur in the training datasets. We will go deeper into this and release more powerful solutions.
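For the first suggestion, a minimal NumPy sketch that crops near-black rows and columns at the image boundary and shifts the principal point accordingly (cropping does not change fx or fy). The helper name and the brightness `threshold` are assumptions to tune for your images.

```python
import numpy as np


def crop_black_borders(image, cam_in=None, threshold=10):
    """Hypothetical helper: crop near-black borders; optionally fix [fx, fy, cx, cy]."""
    img = np.asarray(image)
    gray = img.max(axis=-1) if img.ndim == 3 else img  # brightest channel per pixel
    mask = gray > threshold
    if not mask.any():
        return img, cam_in  # fully dark image: nothing sensible to crop
    rows = np.where(mask.any(axis=1))[0]
    cols = np.where(mask.any(axis=0))[0]
    top, bottom = rows[0], rows[-1] + 1
    left, right = cols[0], cols[-1] + 1
    cropped = img[top:bottom, left:right]  # bounding box of non-black content
    if cam_in is not None:
        fx, fy, cx, cy = cam_in
        cam_in = [fx, fy, cx - left, cy - top]
    return cropped, cam_in
```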

This work is empowered by DJI Automotive¹ and collaborators from Tencent², ZJU³, Intel Labs⁴, and HKUST⁵.

We appreciate the efforts of the contributors to mmcv, all the datasets concerned, and NVDS.

```bibtex
@inproceedings{yin2023metric,
  title={Metric3D: Towards Zero-shot Metric 3D Prediction from A Single Image},
  author={Wei Yin and Chi Zhang and Hao Chen and Zhipeng Cai and Gang Yu and Kaixuan Wang and Xiaozhi Chen and Chunhua Shen},
  booktitle={ICCV},
  year={2023}
}
```

The Metric3D code is released under the GPLv3 license for non-commercial use. For further questions, contact Dr. Wei Yin [yvanwy@outlook.com] and Mr. Mu Hu [mhuam@connect.ust.hk].