Unofficial PyTorch implementation of MelGAN vocoder

- MelGAN is lighter, faster, and better at generalizing to unseen speakers than WaveGlow.

- This repository uses the same mel-spectrogram function as NVIDIA/tacotron2, so it can directly convert the output of NVIDIA's tacotron2 into raw audio.

- A pretrained model on LJSpeech-1.1 is available via PyTorch Hub.
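For reference, NVIDIA/tacotron2 computes a mel-spectrogram by projecting the STFT magnitude onto a mel filterbank and then applying `log(clamp(x, min=1e-5))`. Its default hparams are `sampling_rate=22050`, `filter_length=1024`, `hop_length=256`, `win_length=1024`, `n_mel_channels=80`, `mel_fmin=0.0`, and `mel_fmax=8000.0`. The NumPy sketch below approximates that pipeline to show the shapes involved. It is not this repository's code: it replaces librosa's Slaney-normalized filterbank with a simpler unnormalized HTK-scale one, so its values will not match tacotron2's exactly. The names `mel_filterbank` and `log_mel` are hypothetical.

```python
import numpy as np


def hz_to_mel(f):
    # HTK mel scale (an assumption; tacotron2 uses librosa's default Slaney scale)
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(sr=22050, n_fft=1024, n_mels=80, fmin=0.0, fmax=8000.0):
    """Triangular filters of shape (n_mels, n_fft // 2 + 1), unnormalized."""
    n_bins = n_fft // 2 + 1
    fft_freqs = np.linspace(0.0, sr / 2.0, n_bins)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    fb = np.zeros((n_mels, n_bins))
    for i in range(n_mels):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel(wav, sr=22050, n_fft=1024, hop=256, n_mels=80):
    """Log mel-spectrogram of shape (n_mels, frames); window length equals n_fft."""
    pad = n_fft // 2
    x = np.pad(wav, (pad, pad), mode="reflect")  # centre frame t on sample t * hop
    window = np.hanning(n_fft + 1)[:-1]          # periodic Hann window
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[t * hop:t * hop + n_fft] * window for t in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, n=n_fft, axis=1))  # STFT magnitude, (frames, bins)
    mel = mel_filterbank(sr, n_fft, n_mels) @ mag.T      # (n_mels, frames)
    return np.log(np.clip(mel, 1e-5, None))              # dynamic range compression
```

With these defaults, one second of audio (22050 samples) yields `1 + 22050 // 256 = 87` frames, so `log_mel` returns an array of shape `(80, 87)`.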

Tested on Python 3.6.

```bash
pip install -r requirements.txt
```

- Download a dataset for training. This can be any set of wav files with a sample rate of 22050 Hz (e.g. LJSpeech, which was used in the paper).

- Preprocess:

```bash
python preprocess.py -c config/default.yaml -d [data's root path]
```

- Edit the configuration `yaml` file.

- Train:

```bash
python trainer.py -c [config yaml file] -n [name of the run]
```

- Before training, run `cp config/default.yaml config/config.yaml` and then edit `config.yaml`:
  - Write the root paths of the train and validation files on the 2nd and 3rd lines.
  - Each path should contain pairs of `*.wav` files with their corresponding (preprocessed) `*.mel` files.
  - The data loader collects the list of files within each path recursively.
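Before launching a long training run, it can help to confirm that every `*.wav` under a data root has the expected 22050 Hz sample rate and a matching `*.mel`. The sketch below is a hypothetical helper (`check_dataset` is not part of this repository, and it does not reproduce the repository's data loader). It assumes each `.mel` file sits next to its `.wav` with the same stem, and it uses only the standard library `wave` module, which reads PCM wav files.

```python
import wave
from pathlib import Path

EXPECTED_SR = 22050  # sample rate required for training data


def check_dataset(root, expected_sr=EXPECTED_SR):
    """Recursively scan `root` for *.wav files and check each one.

    Returns (ok_pairs, problems): ok_pairs is a list of (wav, mel) paths,
    problems is a list of (wav, reason) tuples.
    """
    ok_pairs, problems = [], []
    for wav_path in sorted(Path(root).rglob("*.wav")):
        with wave.open(str(wav_path), "rb") as f:
            sr = f.getframerate()
        if sr != expected_sr:
            problems.append((wav_path, "sample rate {} Hz, expected {} Hz".format(sr, expected_sr)))
            continue
        # Assumed convention: foo.wav is paired with foo.mel in the same folder
        mel_path = wav_path.with_suffix(".mel")
        if not mel_path.exists():
            problems.append((wav_path, "missing preprocessed .mel file"))
            continue
        ok_pairs.append((wav_path, mel_path))
    return ok_pairs, problems
```

For example, `ok, problems = check_dataset("path/to/train")` followed by printing `problems` lists any files to resample or preprocess again.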

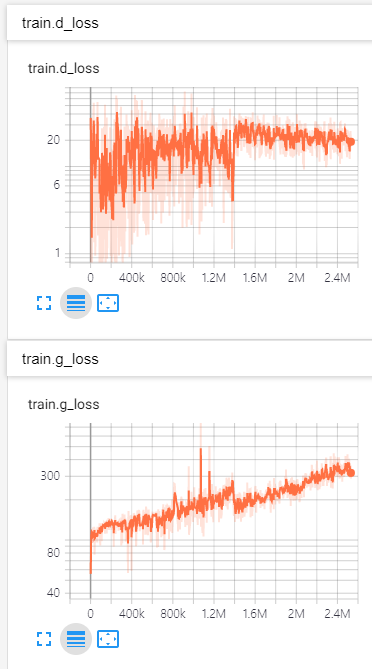

- Monitor training with TensorBoard:

```bash
tensorboard --logdir logs/
```

Try with Google Colab: TODO

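```python
import torch

# Load the pretrained LJSpeech-1.1 vocoder from PyTorch Hub
vocoder = torch.hub.load('seungwonpark/melgan', 'melgan')
vocoder.eval()

# Shape: (batch, n_mel_channels, frames); use your own mel-spectrogram here
mel = torch.randn(1, 80, 234)

# Move the model and input to the GPU when one is available
if torch.cuda.is_available():
    vocoder = vocoder.cuda()
    mel = mel.cuda()

# Generate raw audio without tracking gradients
with torch.no_grad():
    audio = vocoder.inference(mel)
```

- Inference from a checkpoint:

```bash
python inference.py -p [checkpoint path] -i [input mel path]
```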
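Each mel frame expands to roughly `hop_length` output samples; with tacotron2's default `hop_length=256` at 22050 Hz, the 234-frame example above corresponds to about `234 * 256 = 59904` samples, or roughly 2.72 s. The standard-library sketch below computes that estimate and writes mono 16-bit PCM samples to a wav file. The helper names are hypothetical, and it assumes `audio` holds 16-bit integer samples; if your output is float in [-1, 1], multiply by 32768 and clamp to [-32768, 32767] first.

```python
import array
import sys
import wave

HOP_LENGTH = 256     # samples per mel frame (NVIDIA/tacotron2 default hop_length)
SAMPLE_RATE = 22050  # LJSpeech / tacotron2 default sampling rate


def expected_num_samples(n_frames, hop_length=HOP_LENGTH):
    """Approximate output length: each mel frame expands to hop_length samples."""
    return n_frames * hop_length


def expected_seconds(n_frames, hop_length=HOP_LENGTH, sample_rate=SAMPLE_RATE):
    return expected_num_samples(n_frames, hop_length) / sample_rate


def save_int16_wav(samples, path, sample_rate=SAMPLE_RATE):
    """Write mono 16-bit PCM samples (ints in [-32768, 32767]) to a wav file."""
    pcm = array.array("h", (int(s) for s in samples))  # raises OverflowError if out of range
    if sys.byteorder == "big":
        pcm.byteswap()  # wav PCM data is little-endian
    with wave.open(path, "wb") as f:
        f.setnchannels(1)
        f.setsampwidth(2)
        f.setframerate(sample_rate)
        f.writeframes(pcm.tobytes())
```

Under the int16 assumption, the output of the snippet above could be saved with `save_int16_wav(audio.cpu().tolist(), "out.wav")`.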

See audio samples at: http://swpark.me/melgan/.

- Seungwon Park @ MINDsLab Inc. (yyyyy@snu.ac.kr, swpark@mindslab.ai)

- Myunchul Joe @ MINDsLab Inc.

- Rishikesh @ DeepSync Technologies Pvt Ltd.

BSD 3-Clause License.

- utils/stft.py by Prem Seetharaman (BSD 3-Clause License)

- datasets/mel2samp.py from https://github.com/NVIDIA/waveglow (BSD 3-Clause License)

- utils/hparams.py from https://github.com/HarryVolek/PyTorch_Speaker_Verification (No License specified)