If you find this repository helpful, please consider giving us a star⭐!

This repository contains a simple, unofficial implementation of Animate Anyone. The project is built on magic-animate and AnimateDiff. It was initially developed by Qin Guo, with later contributions from Zhenzhi Wang.

Basic tests of the first training stage have passed. The second stage is currently being trained and tested.

Training may be slow due to a shortage of GPUs.😢

The weights should be released within a few days.😄

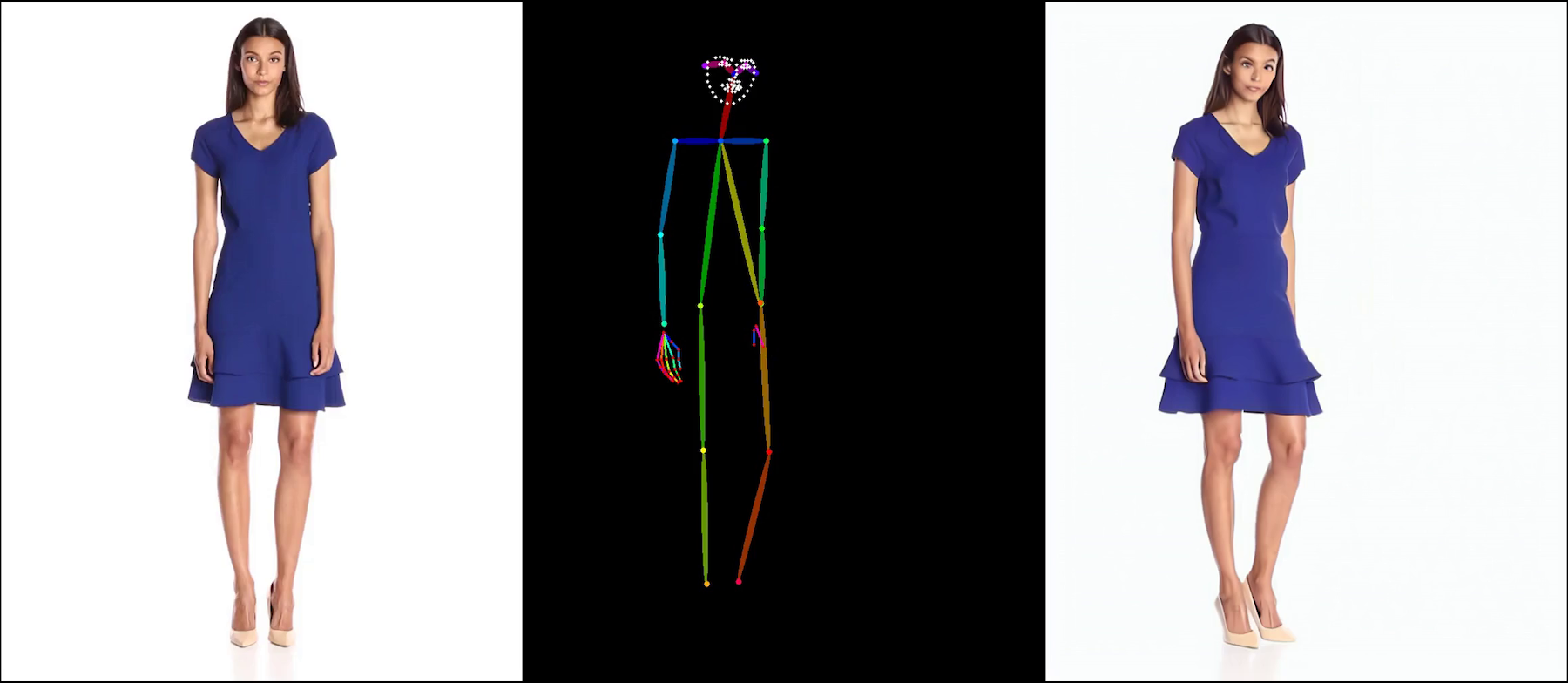

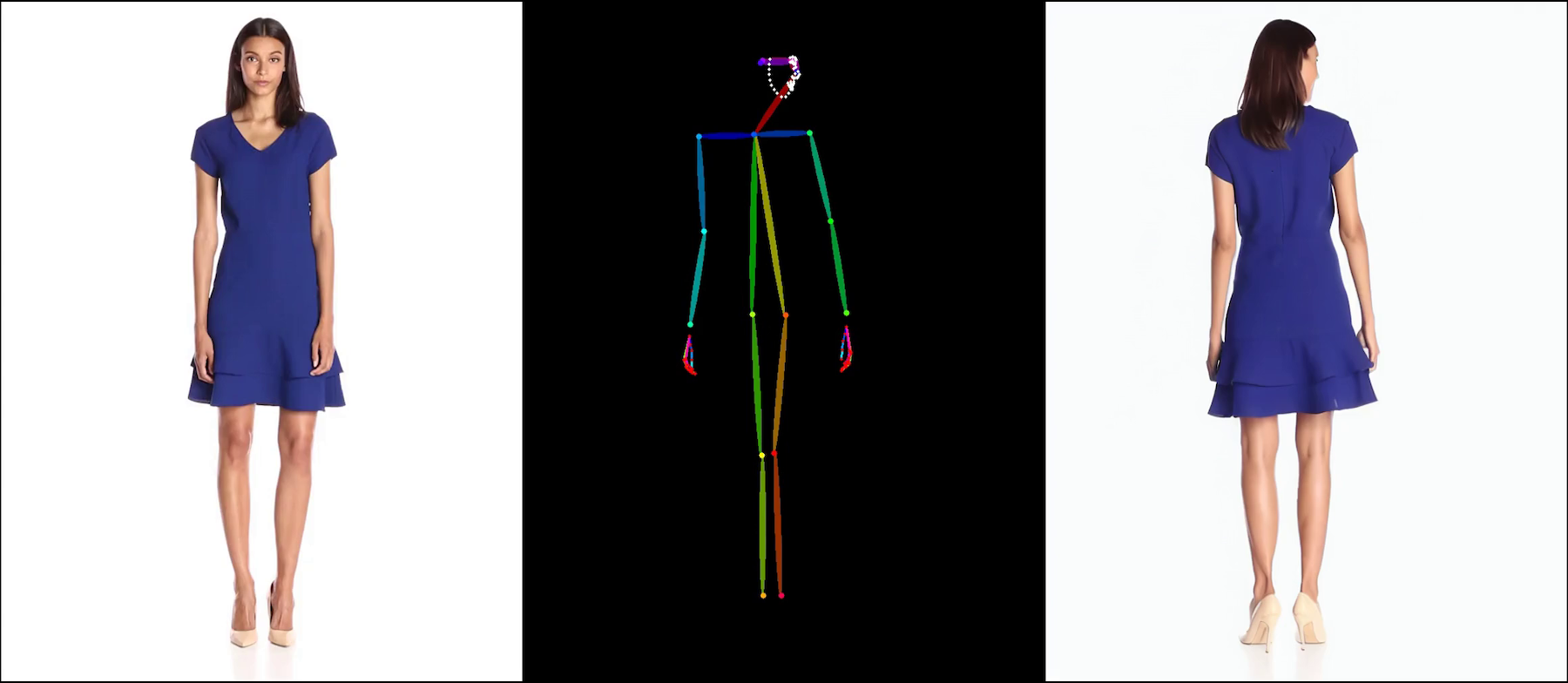

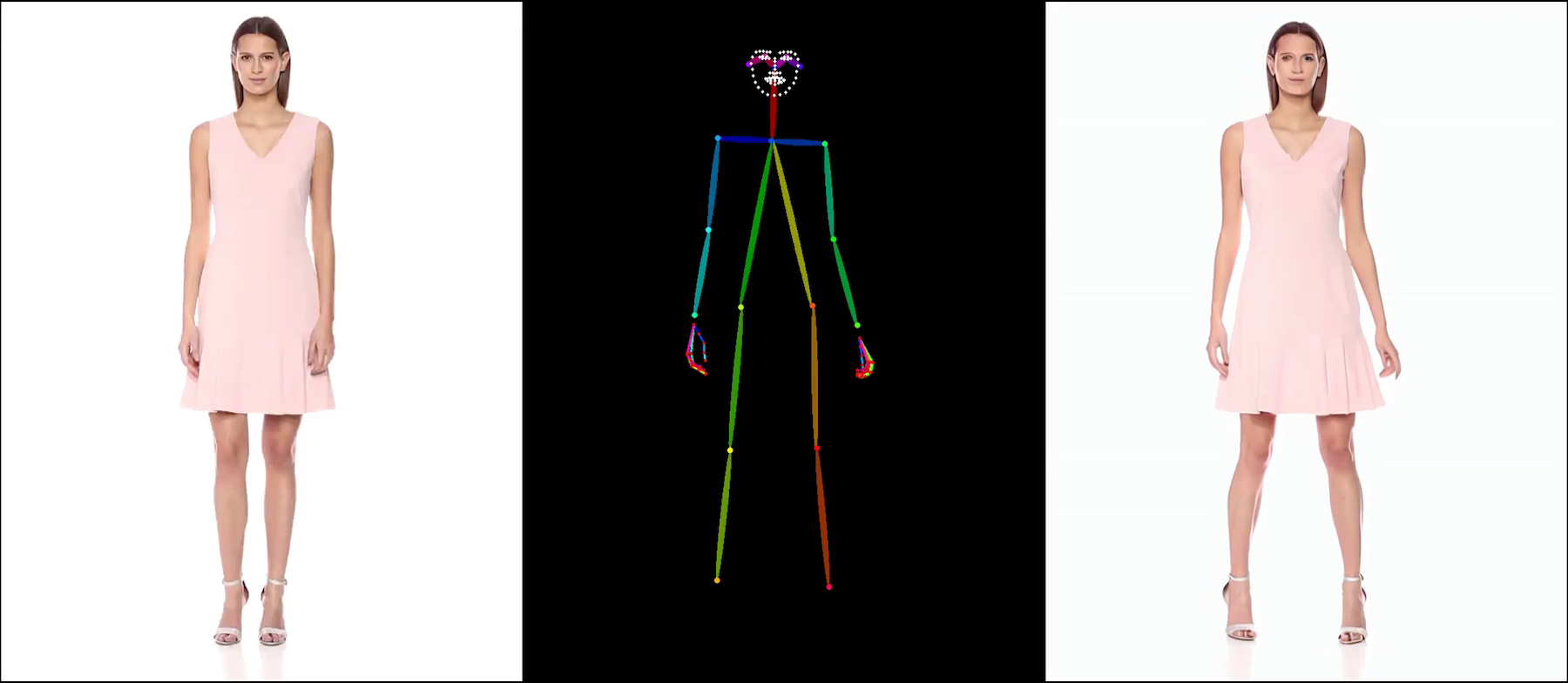

In the current version, faces still show some artifacts. The model is trained on the UBC dataset rather than on a large-scale dataset.


Training stage 2 is challenging because of artifacts in the background. We show one of our best results here and are still working on improving it. One important point: make sure the training and inference resolutions are the same.


This project is under continuous part-time development, so the code may contain bugs, and corrections are welcome. I will optimize the code after the pre-trained model is released!

In the current version, we recommend training on 8 or 16 A100 or H100 (80 GB) GPUs at a resolution of 512 or 768. Low resolutions (256, 384) do not give good results, because the VAE reconstructs images very poorly at low resolution!
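To make the resolution advice concrete, here is a minimal sketch that assumes a Stable Diffusion-style VAE downsampling each spatial side by a factor of 8 (an assumption about the backbone inherited from magic-animate/AnimateDiff, not something stated in this README). `latent_side` and `check_resolution` are hypothetical helpers, not part of this repository. They print the latent grid size and warn when the training resolution is below 512 or differs from the inference resolution.

```bash
# Hypothetical helpers, not part of this repository.
# Assumption: a Stable Diffusion-style VAE that downsamples each side by 8.
VAE_SCALE=8

# Print the latent side length for a given pixel resolution.
latent_side() {
  res="$1"
  if [ $((res % VAE_SCALE)) -ne 0 ]; then
    echo "error: $res is not divisible by $VAE_SCALE" >&2
    return 1
  fi
  echo $((res / VAE_SCALE))
}

# Warn (on stderr) about the pitfalls described in this README,
# then print the latent grid used at inference time.
check_resolution() {
  train_res="$1"
  infer_res="$2"
  if [ "$train_res" -ne "$infer_res" ]; then
    echo "warning: training ($train_res) and inference ($infer_res) resolutions differ" >&2
  fi
  if [ "$train_res" -lt 512 ]; then
    echo "warning: training at $train_res; results are reported to be poor below 512" >&2
  fi
  side=$(latent_side "$infer_res") || return 1
  echo "latent grid: ${side}x${side}"
}
```

For example, `check_resolution 512 512` prints `latent grid: 64x64`, while `check_resolution 256 256` also warns on stderr and prints `latent grid: 32x32`, only a quarter of the latent positions available at 512.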

- Release Training Code.

- Release Inference Code.

- Release Unofficial Pre-trained Weights. (Note: trained on public datasets rather than large-scale private datasets, for academic research only.🤗)

- Release Gradio Demo.

```bash
# Prepare the environment
bash fast_env.sh
# Launch the Gradio demo
python3 -m demo.gradio_animate
```

If you only have a GPU with 24 GB of VRAM, I recommend running inference at a resolution of 512 or below.

Original AnimateAnyone Architecture (It is difficult to control pose when training on a small dataset.)

```bash
# train.py: stage 1, then stage 2
torchrun --nnodes=8 --nproc_per_node=8 train.py --config configs/training/train_stage_1.yaml
torchrun --nnodes=8 --nproc_per_node=8 train.py --config configs/training/train_stage_2.yaml

# train_hack.py: stage 1, then stage 2
torchrun --nnodes=8 --nproc_per_node=8 train_hack.py --config configs/training/train_stage_1.yaml
torchrun --nnodes=8 --nproc_per_node=8 train_hack.py --config configs/training/train_stage_2.yaml
```

Special thanks to the original authors of the Animate Anyone project and to the contributors of the magic-animate and AnimateDiff repositories for their open research and foundational work, which inspired this unofficial implementation.