This is the journal version of SCPGabNet.

This is the official code of RSCP^2^GAN for denoising tasks.

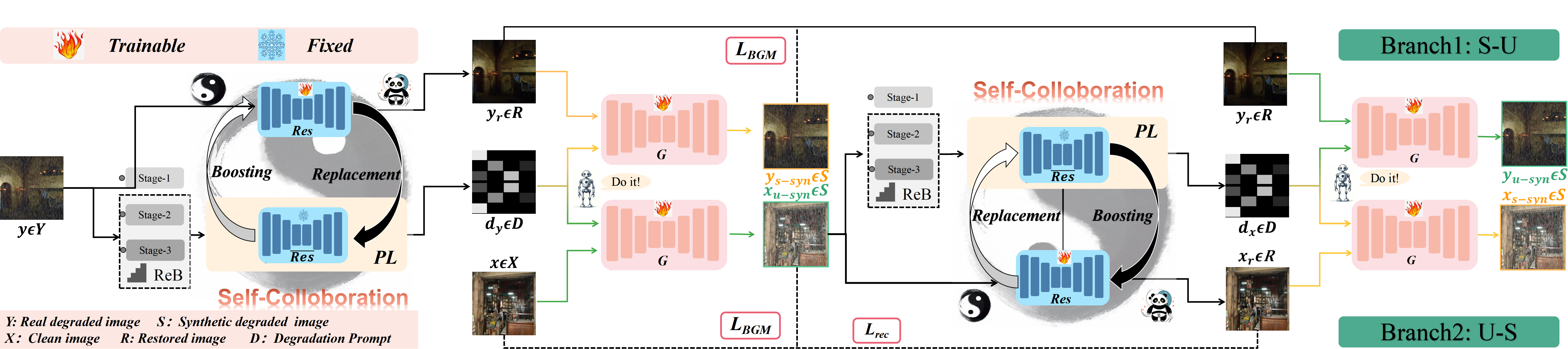

Deep learning methods have shown remarkable performance in image restoration, particularly when trained on large-scale paired datasets. However, acquiring such data in real-world scenarios poses a significant challenge. Unsupervised restoration approaches based on generative adversarial networks (GANs) offer a promising solution that needs no paired datasets, but it is difficult for them to surpass the performance limits of conventional unsupervised GAN-based frameworks without significantly modifying existing structures or increasing the computational complexity of the restorer. To address this problem, we propose a self-collaboration (SC) strategy for existing restoration models. It uses information from the previous stage as feedback to guide subsequent stages and achieves significant performance improvement without increasing the framework's inference complexity. The SC strategy comprises a prompt learning (PL) module and a restorer. The training framework builds on a baseline with "self-synthesis" and "unpaired-synthesis" constraints, which ensures its effectiveness. Extensive experimental results on various restoration tasks demonstrate that the proposed method performs favorably against existing state-of-the-art unsupervised restoration methods.

Our experiments are done with:

- Python 3.7.13

- PyTorch 1.13.0

- numpy 1.21.5

- opencv 4.6.0

- scikit-image 0.19.3

SIDD

Train: https://pan.baidu.com/s/1c1iPIIJvSfq6s6_M7iyjPA (extraction code: 2oe5)

Test: https://pan.baidu.com/s/1yltsD684qpJa0SMJ9SdR5w (extraction code: 8qzf)

PolyU

https://pan.baidu.com/s/1TUTYkjX230UzCy_VhHEvQA (extraction code: ek2d)

SIDD/DND: https://pan.baidu.com/s/1XyovAFFLjOBYgsnLgkrecw (extraction code: uv0m)

PolyU: https://pan.baidu.com/s/16yilFMMDJUSM9bEa5719tw (extraction code: j61y)

You can get the complete SIDD validation dataset from https://www.eecs.yorku.ca/~kamel/sidd/benchmark.php.

'.mat' files need to be converted to images ('.png').
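The conversion could look roughly like the sketch below. It is not part of the official code. It assumes the '.mat' file stores the blocks as a 5-D uint8 array of shape (images, blocks, H, W, 3) in RGB order, which is how the SIDD validation files are commonly laid out, and it assumes scipy is installed (it is not in the environment list above). The function names, the output file naming, and the output directory are hypothetical; adjust them to whatever layout train_v6.py expects.

```python
import os

import numpy as np


def split_blocks(blocks):
    """Flatten a (num_images, num_blocks, H, W, C) array into ((i, j), patch) pairs."""
    blocks = np.asarray(blocks)
    if blocks.ndim != 5:
        raise ValueError(f"expected a 5-D array, got shape {blocks.shape}")
    n_img, n_blk = blocks.shape[:2]
    return [((i, j), blocks[i, j]) for i in range(n_img) for j in range(n_blk)]


def mat_to_png(mat_path, key, out_dir):
    """Write every block stored under `key` in `mat_path` as a PNG in `out_dir`."""
    # Imported lazily so split_blocks stays usable without these packages.
    # scipy is an assumption; it is not listed in the requirements above.
    from scipy.io import loadmat
    import cv2

    os.makedirs(out_dir, exist_ok=True)
    blocks = loadmat(mat_path)[key]
    for (i, j), patch in split_blocks(blocks):
        # Assumed: blocks are RGB. OpenCV writes BGR, so reverse the channels.
        bgr = np.ascontiguousarray(patch[:, :, ::-1])
        # Hypothetical naming scheme: <image index>_<block index>.png
        cv2.imwrite(os.path.join(out_dir, f"{i:04d}_{j:02d}.png"), bgr)
```

For example, assuming the variable inside the file has the same name as the file, `mat_to_png("ValidationNoisyBlocksSrgb.mat", "ValidationNoisyBlocksSrgb", "SIDD_val/noisy")` would write one PNG per noisy block. Check the key with `loadmat(path).keys()` if it fails.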

Training and testing are both in train_v6.py.

Run train_v6.py.

If you have any questions, please contact linxin@stu.scu.edu.cn