MOMA-LRG is a dataset dedicated to multi-object, multi-actor activity parsing.

Install `momatools` from source:

```shell
git clone https://github.com/d1ngn1gefe1/momatools
cd momatools
pip install .
```

You can install all the dependencies needed for MOMA-LRG by running:

```shell
pip install -r requirements.txt
```

**Warning:** The `pygraphviz` dependency requires Graphviz to be installed first. Install it with `sudo apt-get install graphviz graphviz-dev` on Linux systems or `brew install graphviz` via Homebrew on macOS.

The requirements are:

- Python 3.7+
- ffmpeg (only for preprocessing): `pip install ffmpeg-python`
- jsbeautifier (for better visualization of JSON files): `pip install jsbeautifier`
- distinctipy: a lightweight package for generating visually distinct colors
- Graphviz: `sudo apt-get install graphviz graphviz-dev`
- PyGraphviz: a Python interface to the Graphviz graph layout and visualization package
- seaborn: a data visualization library based on matplotlib
- Torchvision
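Before running the tools, it can help to confirm that the requirements above are importable. The following is a minimal sketch and is not part of `momatools`: the helper name `check_environment` is hypothetical, and the module names (for example `ffmpeg` for `ffmpeg-python`) are assumptions about how each package is imported. It uses the presence of the Graphviz `dot` executable on the `PATH` as a rough proxy for a Graphviz installation.

```python
import importlib.util
import shutil
import sys

# Assumed mapping from pip package name to importable module name.
REQUIRED_MODULES = {
    "ffmpeg-python": "ffmpeg",
    "jsbeautifier": "jsbeautifier",
    "distinctipy": "distinctipy",
    "pygraphviz": "pygraphviz",
    "seaborn": "seaborn",
    "torchvision": "torchvision",
}


def check_environment():
    """Hypothetical helper: report which requirements appear to be installed."""
    return {
        # MOMA-LRG requires Python 3.7 or newer.
        "python_ok": sys.version_info >= (3, 7),
        # find_spec returns None when a top-level module cannot be found.
        "modules": {
            pip_name: importlib.util.find_spec(module) is not None
            for pip_name, module in REQUIRED_MODULES.items()
        },
        # Graphviz ships the `dot` layout program; used here only as a proxy.
        "graphviz_dot": shutil.which("dot") is not None,
    }


if __name__ == "__main__":
    report = check_environment()
    print("Python >= 3.7:", report["python_ok"])
    print("Graphviz dot on PATH:", report["graphviz_dot"])
    for name, ok in report["modules"].items():
        print(f"{name}: {'ok' if ok else 'MISSING'}")
```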

MOMA-LRG annotations form a three-level hierarchy:

| Level | Concept | Representation |
|---|---|---|
| 1 | Activity | Semantic label |
| 2 | Sub-activity | Temporal boundary and semantic label |
| 3 | Higher-order interaction | Spatial-temporal scene graph |
| | ┣━ Entity | Graph node w/ bounding box, instance label, and semantic label |
| | &emsp;┣━ Actor | - |
| | &emsp;┗━ Object | - |
| | ┗━ Predicate | - |
| | &emsp;┣━ Relationship | Directed edge as a triplet (source node, semantic label, and target node) |
| | &emsp;┗━ Attribute | Semantic label of a graph node as a pair (source node, semantic label) |
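To make the triplet and pair representations concrete, the sketch below turns one higher-order interaction into readable relationship triplets and attribute pairs by resolving `source_id` and `target_id` to entity class names. It assumes the field names from the annotation schema shown later in this README and that actor and object ids are unique within an interaction. The function name `scene_graph_triplets` and all ids and class names in the toy example are invented for illustration.

```python
def scene_graph_triplets(hoi):
    """Hypothetical helper: resolve ids in one higher-order interaction.

    Returns (relationships, attributes): each relationship is a
    (source class, predicate, target class) triplet and each attribute
    is a (source class, attribute) pair.
    """
    # Actors and objects are both entities (graph nodes); index them by id.
    entities = {e["id"]: e["class_name"] for e in hoi["actors"] + hoi["objects"]}

    relationships = [
        (entities[r["source_id"]], r["class_name"], entities[r["target_id"]])
        for r in hoi["relationships"]
    ]
    attributes = [
        (entities[a["source_id"]], a["class_name"])
        for a in hoi["attributes"]
    ]
    return relationships, attributes


# Toy interaction with invented ids and class names.
hoi = {
    "actors": [{"id": "1", "class_name": "customer", "bbox": [10, 20, 50, 120]}],
    "objects": [{"id": "a", "class_name": "cup", "bbox": [40, 60, 15, 20]}],
    "relationships": [{"class_name": "holding", "source_id": "1", "target_id": "a"}],
    "attributes": [{"class_name": "standing", "source_id": "1"}],
}
print(scene_graph_triplets(hoi))
# ([('customer', 'holding', 'cup')], [('customer', 'standing')])
```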

Download the dataset into a directory named `dir_moma` with the structure below. The `anns` directory requires roughly 1.8 GB of space and the `videos` directory requires 436 GB.

```
$ tree dir_moma
.
├── anns
│   ├── anns.json
│   ├── split_std.json
│   ├── split_fs.json
│   ├── clips.json
│   └── taxonomy
└── videos
    ├── all
    ├── raw
    ├── activity_fr
    ├── activity
    ├── sub_activity_fr
    ├── sub_activity
    ├── interaction
    ├── interaction_frames
    └── interaction_video
```
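After downloading, a quick check that the layout matches the tree above can save a failed run later. This is a minimal sketch using only the standard library; the helper `missing_entries` is hypothetical, and `taxonomy` is checked with `exists()` because the tree does not say whether it is a file or a directory.

```python
from pathlib import Path

# Expected entries, copied from the directory tree above.
EXPECTED = [
    "anns/anns.json",
    "anns/split_std.json",
    "anns/split_fs.json",
    "anns/clips.json",
    "anns/taxonomy",
    "videos/all",
    "videos/raw",
    "videos/activity_fr",
    "videos/activity",
    "videos/sub_activity_fr",
    "videos/sub_activity",
    "videos/interaction",
    "videos/interaction_frames",
    "videos/interaction_video",
]


def missing_entries(dir_moma):
    """Hypothetical helper: list expected paths that do not exist under dir_moma."""
    root = Path(dir_moma)
    return [rel for rel in EXPECTED if not (root / rel).exists()]


if __name__ == "__main__":
    import sys

    missing = missing_entries(sys.argv[1] if len(sys.argv) > 1 else "dir_moma")
    print("layout ok" if not missing else "missing: " + ", ".join(missing))
```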

The following scripts are included:

- `tests/run_preproc.py`: Pre-processes the dataset. Do not run this script; the released dataset has already been pre-processed.
- `tests/run_visualize.py`: Visualizes annotations and dataset statistics.

In this version, we include:

- 148 hours of videos

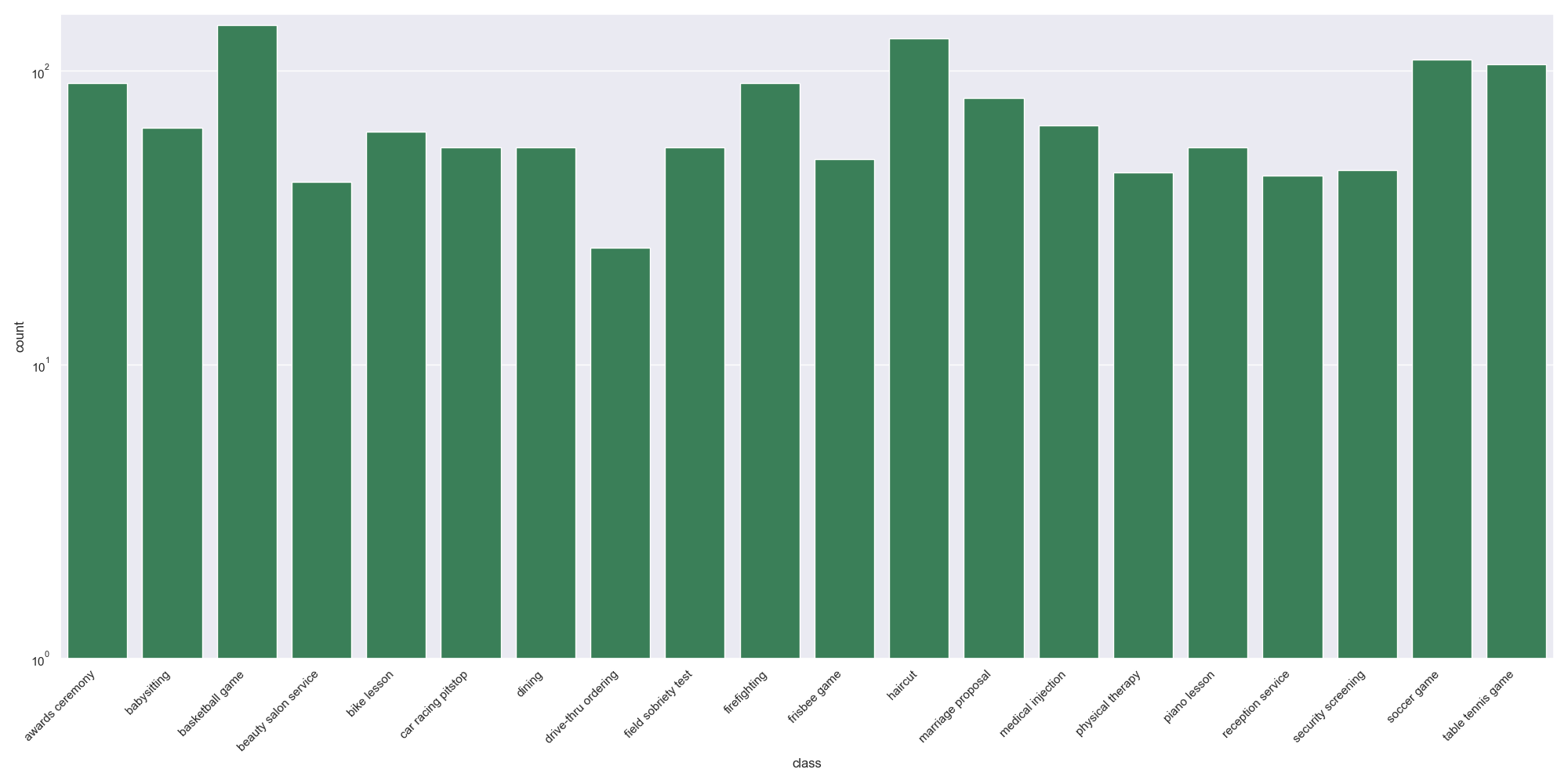

- 1,412 activity instances from 20 activity classes ranging from 31s to 600s and with an average duration of 241s.

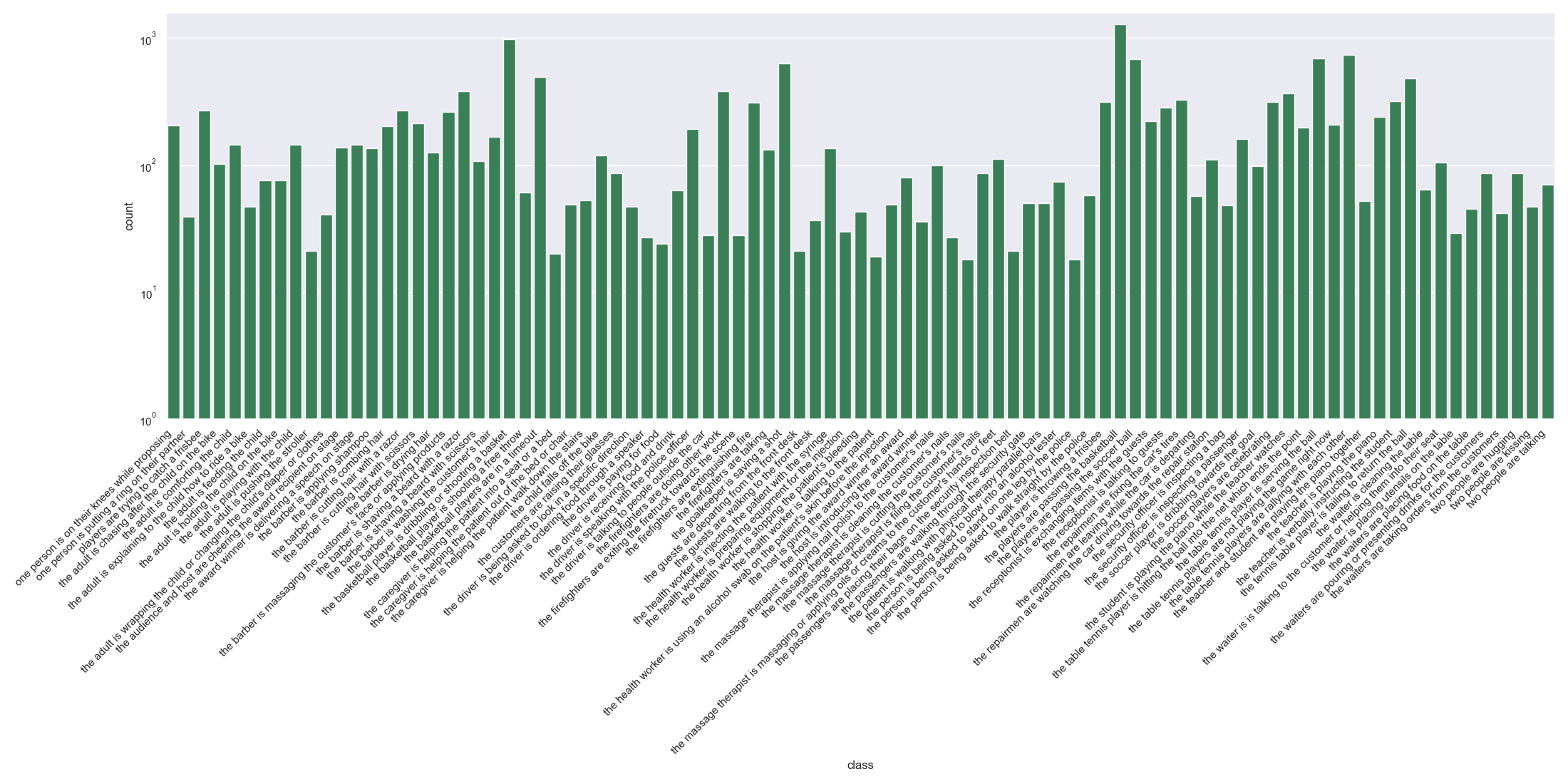

- 15,842 sub-activity instances from 91 sub-activity classes ranging from 3s to 31s and with an average duration of 9s.

- 161,265 higher-order interaction instances.

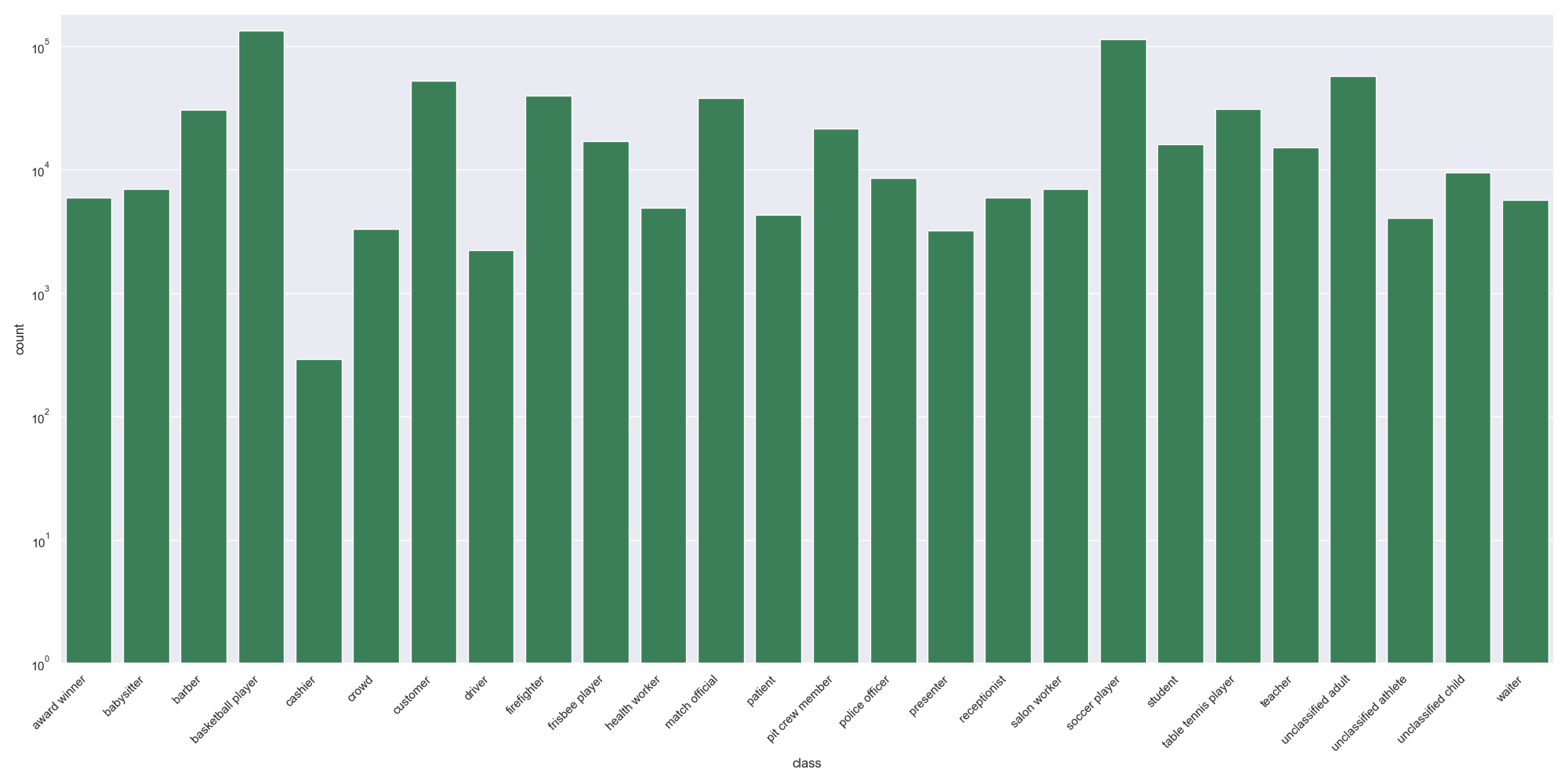

- 636,194 image actor instances and 104,564 video actor instances from 26 classes.

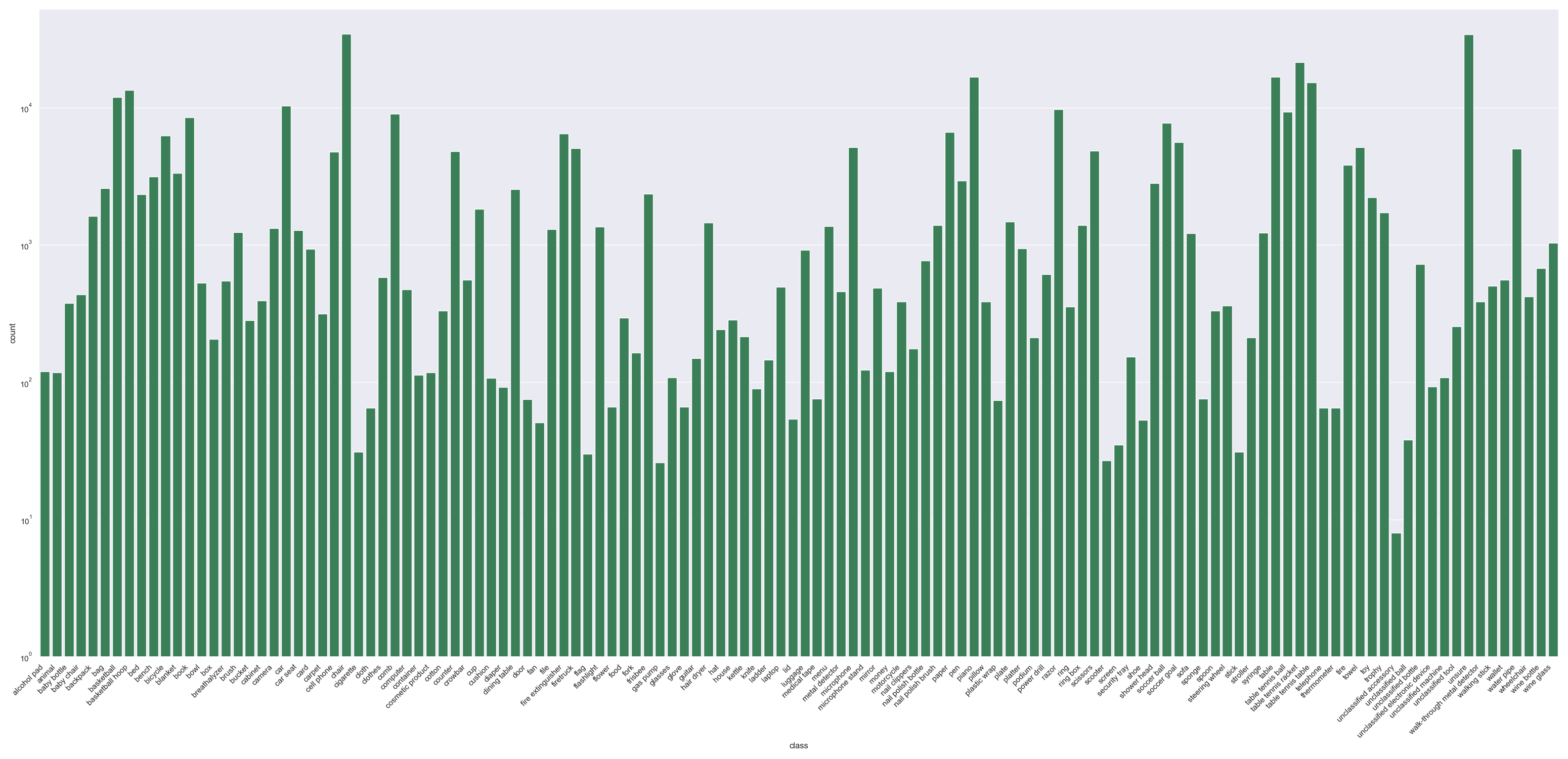

- 349,034 image object instances and 47,494 video object instances from 126 classes.

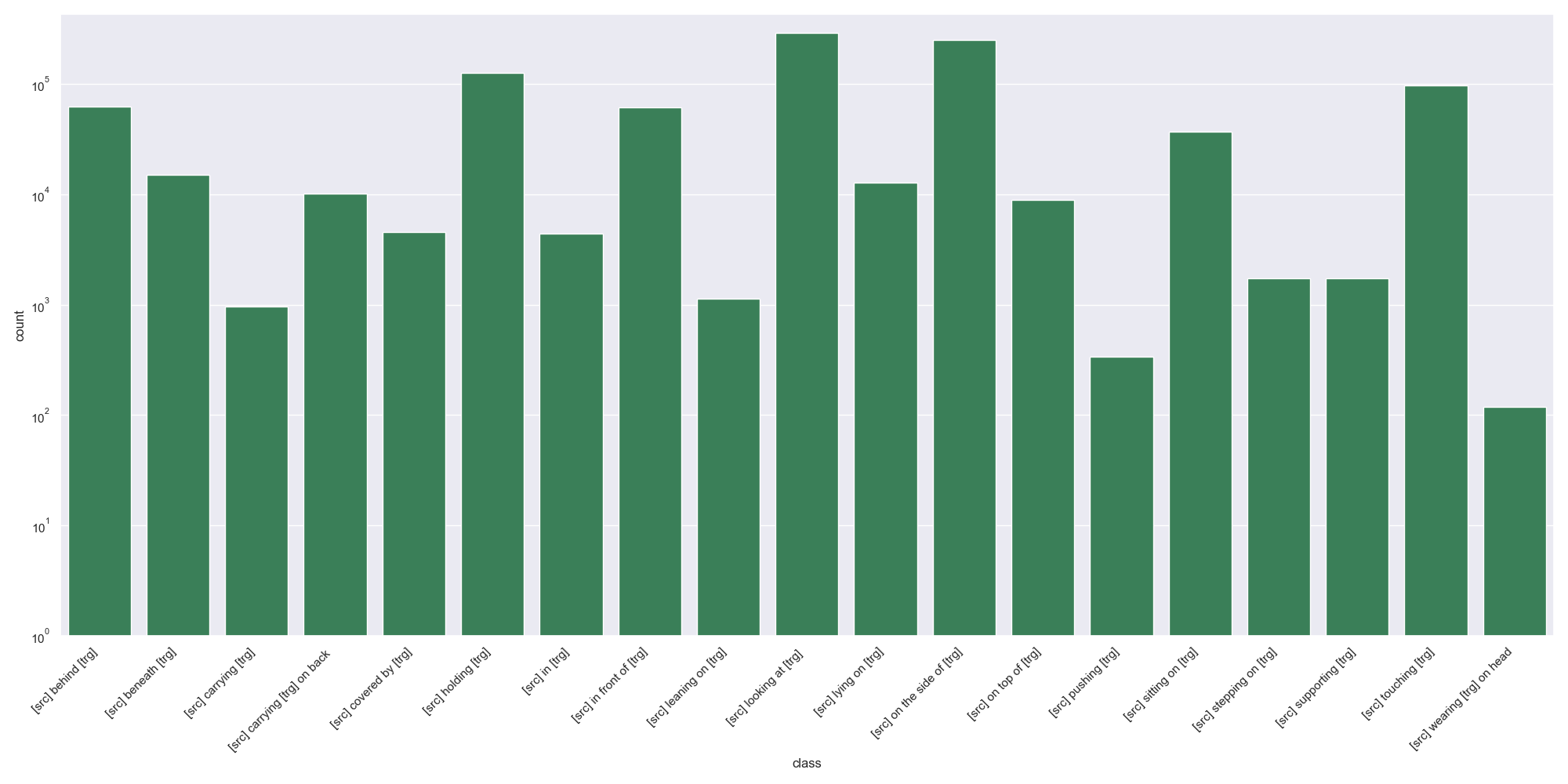

- 984,941 relationship instances from 19 classes.

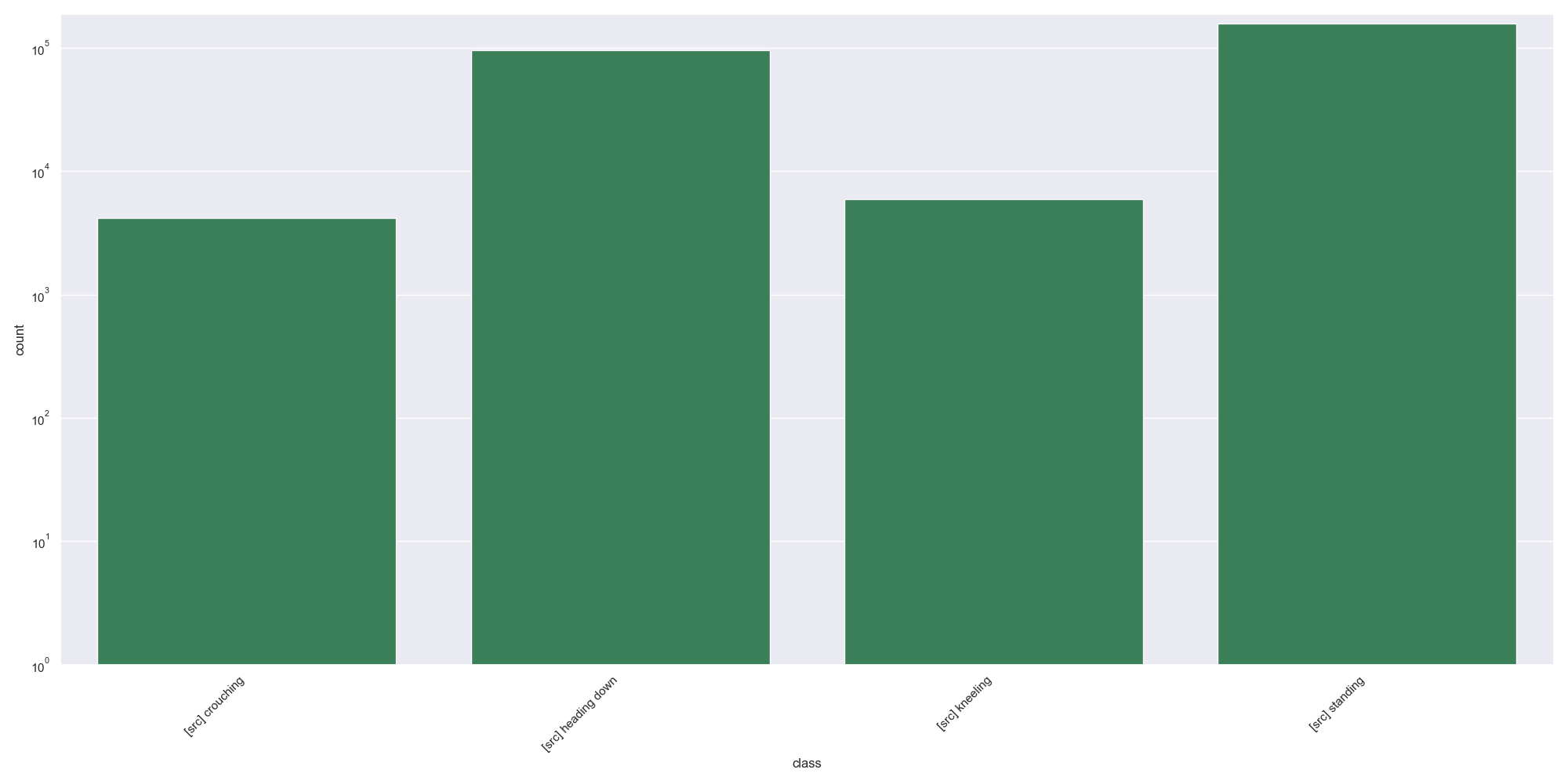

- 261,249 attribute instances from 4 classes.

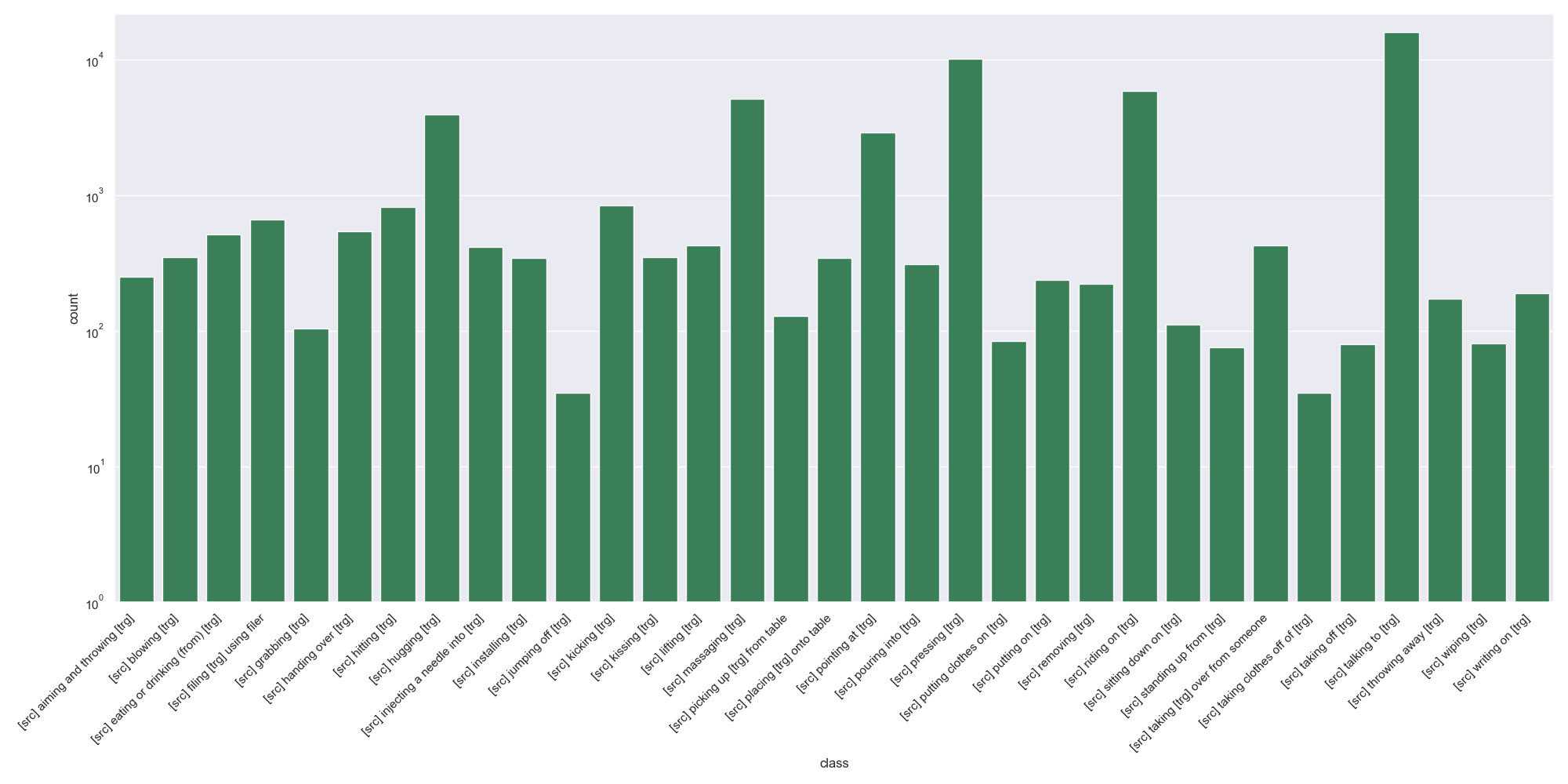

- 52,072 transitive action instances from 33 classes.

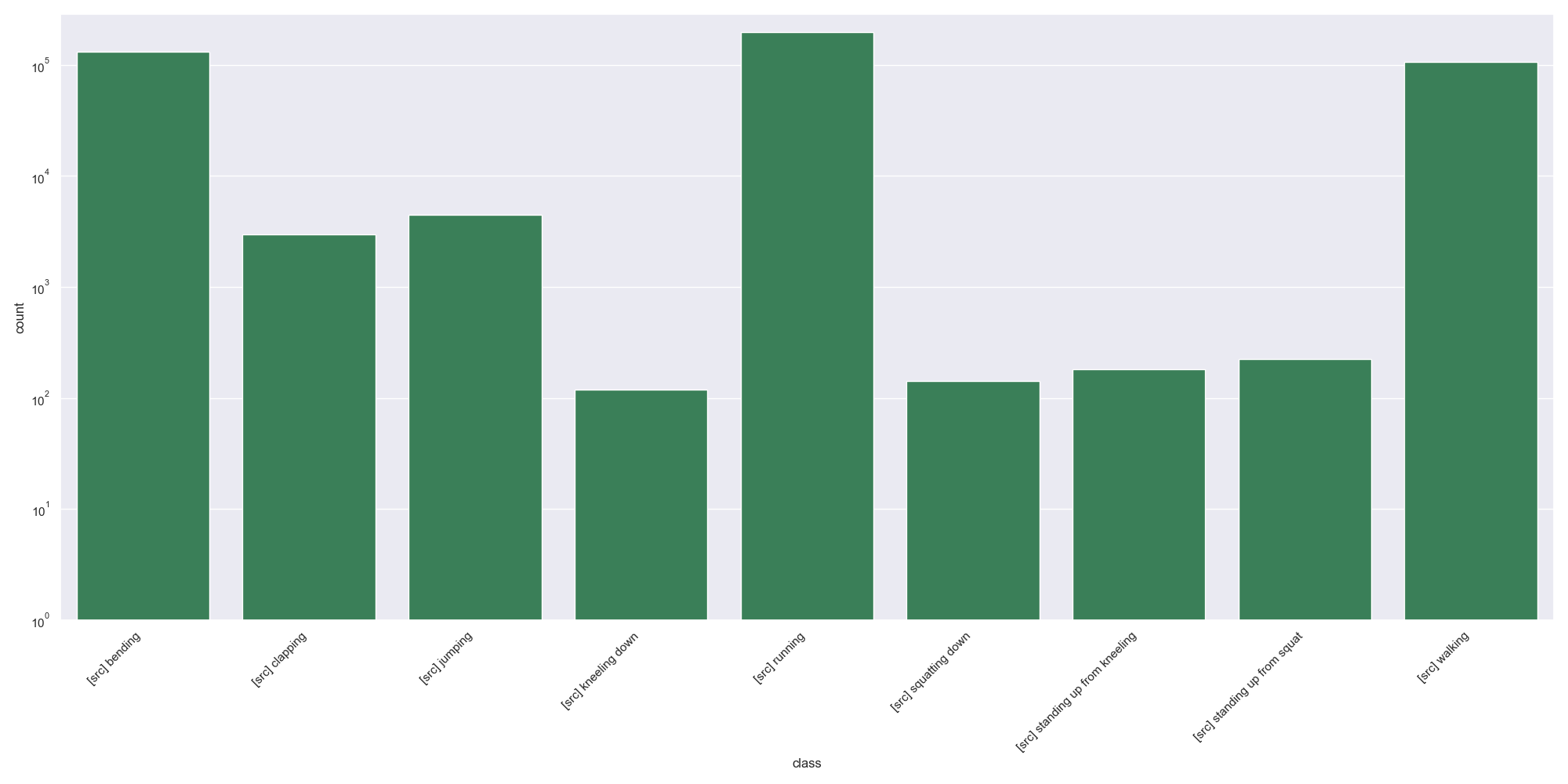

- 442,981 intransitive action instances from 9 classes.
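Counts of this kind can be recomputed from the annotation file. The sketch below is hypothetical (the function `summarize` is not part of `momatools`) and assumes that `anns.json` is a list of per-video records following the annotation format shown below, with times in seconds. It counts actors and objects once per higher-order interaction, which is an assumption about what "image" instances means; whether its output matches the figures above depends on how those figures were computed.

```python
import json
from collections import Counter


def summarize(videos):
    """Hypothetical helper: count annotation instances in a list of video records."""
    activity_classes = Counter()
    counts = Counter()
    activity_seconds = 0.0

    for video in videos:
        activity = video["activity"]
        activity_classes[activity["class_name"]] += 1
        activity_seconds += activity["end_time"] - activity["start_time"]
        for sub in activity["sub_activities"]:
            counts["sub_activities"] += 1
            for hoi in sub["higher_order_interactions"]:
                counts["higher_order_interactions"] += 1
                # Instances annotated within a single interaction (one time step).
                for key in ("actors", "objects", "relationships", "attributes",
                            "transitive_actions", "intransitive_actions"):
                    counts[key] += len(hoi[key])

    num_activities = sum(activity_classes.values())
    return {
        "activities": num_activities,
        "activity_classes": len(activity_classes),
        "mean_activity_seconds": activity_seconds / num_activities if num_activities else 0.0,
        **counts,
    }


if __name__ == "__main__":
    with open("dir_moma/anns/anns.json") as f:
        print(summarize(json.load(f)))
```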

Below, we show the schema of the MOMA-LRG annotations.

```
[
  {
    "file_name": str,
    "num_frames": int,
    "width": int,
    "height": int,
    "duration": float,

    // an activity
    "activity": {
      "id": str,
      "class_name": str,
      "start_time": float,
      "end_time": float,

      "sub_activities": [
        // a sub-activity
        {
          "id": str,
          "class_name": str,
          "start_time": float,
          "end_time": float,

          "higher_order_interactions": [
            // a higher-order interaction
            {
              "id": str,
              "time": float,

              "actors": [
                // an actor
                {
                  "id": str,
                  "class_name": str,
                  "bbox": [x, y, width, height]
                },
                ...
              ],

              "objects": [
                // an object
                {
                  "id": str,
                  "class_name": str,
                  "bbox": [x, y, width, height]
                },
                ...
              ],

              "relationships": [
                // a relationship
                {
                  "class_name": str,
                  "source_id": str,
                  "target_id": str
                },
                ...
              ],

              "attributes": [
                // an attribute
                {
                  "class_name": str,
                  "source_id": str
                },
                ...
              ],

              "transitive_actions": [
                // a transitive action
                {
                  "class_name": str,
                  "source_id": str,
                  "target_id": str
                },
                ...
              ],

              "intransitive_actions": [
                // an intransitive action
                {
                  "class_name": str,
                  "source_id": str
                },
                ...
              ]
            }
          ]
        },
        ...
      ]
    }
  },
  ...
]
```
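Bounding boxes above are stored as `[x, y, width, height]`, while many detection tools expect corner coordinates. The following sketch (an illustration, not a `momatools` API) walks the nested schema and yields one flat record per actor or object with its box converted to `[x1, y1, x2, y2]`. It assumes `x` and `y` are the top-left corner in pixels, a common convention that the schema itself does not state; the helper names `xywh_to_xyxy` and `iter_entities` are hypothetical.

```python
import json


def xywh_to_xyxy(bbox):
    """Convert [x, y, width, height] to [x1, y1, x2, y2], assuming a top-left origin."""
    x, y, w, h = bbox
    return [x, y, x + w, y + h]


def iter_entities(videos):
    """Hypothetical helper: yield one flat record per actor or object annotation."""
    for video in videos:
        for sub in video["activity"]["sub_activities"]:
            for hoi in sub["higher_order_interactions"]:
                for kind in ("actors", "objects"):
                    for entity in hoi[kind]:
                        yield {
                            "file_name": video["file_name"],
                            "sub_activity_id": sub["id"],
                            "time": hoi["time"],
                            "kind": kind[:-1],  # "actor" or "object"
                            "id": entity["id"],
                            "class_name": entity["class_name"],
                            "bbox_xyxy": xywh_to_xyxy(entity["bbox"]),
                        }


if __name__ == "__main__":
    with open("dir_moma/anns/anns.json") as f:
        for row in iter_entities(json.load(f)):
            print(row)
            break  # show only the first record
```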