mount imagenet11k rbd

make soft link to /workspace/mnt/storage/zhaozhijian/imagenet11k/imagenet11k/

modify the datapath & model_path in train_dist.sh

run bash train_dist.sh

mount the ImageNet-pytorch imagenet-1k dataset

make the soft links imagenet11k_train -> train/ and imagenet11k_val -> val/

modify the datapath & imagenet22k pretrained model path in train_dist_imagenet1k.sh

run bash train_dist_imagenet1k.sh

Official PyTorch Implementation

Tal Ridnik, Emanuel Ben-Baruch, Asaf Noy, Lihi Zelnik-Manor

DAMO Academy, Alibaba Group

Abstract

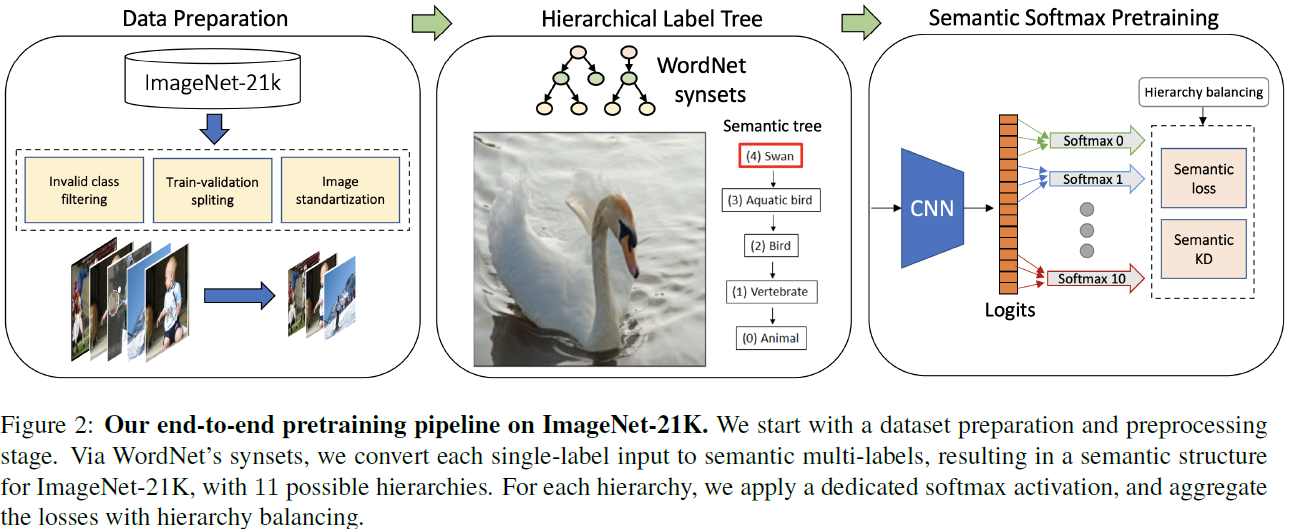

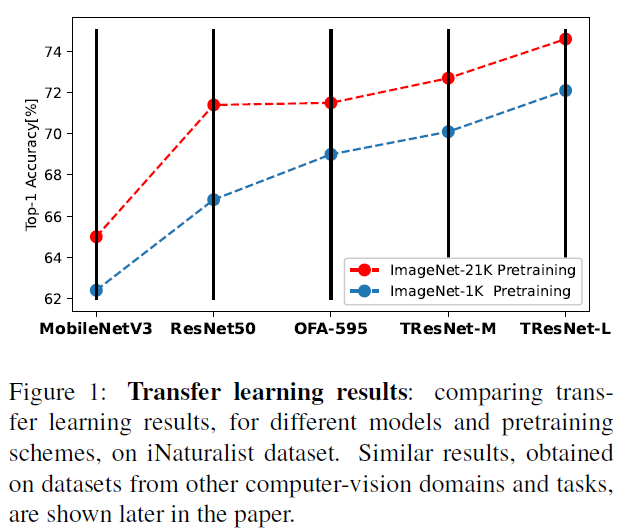

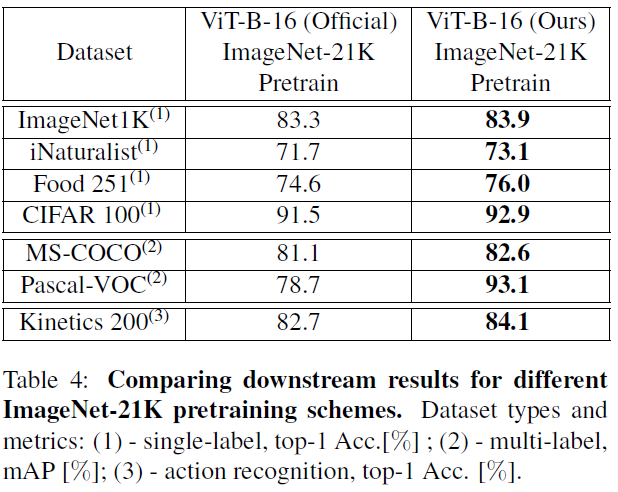

ImageNet-1K serves as the primary dataset for pretraining deep learning models for computer vision tasks. ImageNet-21K dataset, which contains more pictures and classes, is used less frequently for pretraining, mainly due to its complexity, and underestimation of its added value compared to standard ImageNet-1K pretraining. This paper aims to close this gap, and make high-quality efficient pretraining on ImageNet-21K available for everyone. Via a dedicated preprocessing stage, utilizing WordNet hierarchies, and a novel training scheme called semantic softmax, we show that different models, including small mobile-oriented models, significantly benefit from ImageNet-21K pretraining on numerous datasets and tasks. We also show that we outperform previous ImageNet-21K pretraining schemes for prominent new models like ViT. Our proposed pretraining pipeline is efficient, accessible, and leads to SoTA reproducible results, from a publicly available dataset.

| Backbone | ImageNet-21K-P semantic top-1 Accuracy [%] | ImageNet-1K top-1 Accuracy [%] | Maximal batch size | Maximal training speed (img/sec) | Maximal inference speed (img/sec) |
|---|---|---|---|---|---|
| MobilenetV3_large_100 | 73.1 | 78.0 | 488 | 1210 | 5980 |
| OFA_flops_595m_s | 75.0 | 81.0 | 288 | 500 | 3240 |
| ResNet50 | 75.6 | 82.0 | 320 | 720 | 2760 |
| TResNet-M | 76.4 | 83.1 | 520 | 670 | 2970 |
| TResNet-L (V2) | 76.7 | 83.9 | 240 | 300 | 1460 |
| ViT_base_patch16_224 | 77.6 | 84.4 | 160 | 340 | 1140 |

See this link for more details.

We highly recommend that you start working with ImageNet-21K by testing these weights against standard ImageNet-1K pretraining and comparing results on your relevant downstream tasks.

Once you see a significant improvement (you will), proceed to pretraining new models.

See the instructions for obtaining and processing the dataset here.

To use the training code, first download the ImageNet-21K-P semantic tree to your local ./resources/ folder.

Example of semantic softmax training:

    python train_semantic_softmax.py \
    --batch_size=4 \
    --data_path=/mnt/datasets/21k \
    --model_name=mobilenetv3_large_100 \
    --model_path=/mnt/models/mobilenetv3_large_100.pth \
    --epochs=80
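For intuition about what a semantic softmax objective computes, here is a toy, dependency-free sketch. It is not the code in `train_semantic_softmax.py`. It assumes, following our reading of the paper, that classes are grouped by WordNet hierarchy level, that a separate softmax is applied within each level, and that a sample contributes a cross-entropy term only at levels where it has a label (its own class and its ancestors). The function names, the two-level toy hierarchy, and the per-level weights are all hypothetical; the weights in particular are left as a free parameter here.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return [x - log_norm for x in logits]


def semantic_softmax_loss(logits, level_slices, level_targets, level_weights):
    """Weighted sum of per-hierarchy-level cross-entropy terms for one sample.

    logits        : flat list of class scores, grouped by hierarchy level
    level_slices  : (start, end) index range of each level inside `logits`
    level_targets : target index *within* each level, or None when the
                    sample has no label at that level (the level is skipped)
    level_weights : per-level loss weights (a free parameter in this sketch)
    """
    total = 0.0
    for (start, end), target, weight in zip(level_slices, level_targets, level_weights):
        if target is None:
            continue
        log_probs = log_softmax(logits[start:end])  # softmax restricted to one level
        total += weight * -log_probs[target]
    return total


# Hypothetical toy hierarchy: level 0 = {animal, vehicle}, level 1 = {dog, cat, car}.
toy_slices = [(0, 2), (2, 5)]
toy_weights = [1.0, 1.0]
uniform_logits = [0.0] * 5

# A "dog" sample is labelled at both levels: animal (level 0) and dog (level 1).
dog_loss = semantic_softmax_loss(uniform_logits, toy_slices, [0, 0], toy_weights)
# A sample labelled only as "animal" contributes no level-1 term.
animal_loss = semantic_softmax_loss(uniform_logits, toy_slices, [0, None], toy_weights)
```

With uniform logits, the "dog" sample pays a cross-entropy term at both levels, while the "animal" sample pays only the level-0 term, which is the behaviour that distinguishes this scheme from a single softmax over all classes.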

To shorten training, we initialize the weights from standard ImageNet-1K pretraining. We recommend using the ImageNet-1K weights from this excellent repo.

The results in the article are comparative results, obtained with fixed hyper-parameters. In addition, using our pretrained models and a dedicated training scheme with hyper-parameters adjusted per dataset (resolution, optimizer, learning rate), we were able to achieve SoTA results on several computer vision datasets: MS-COCO, Pascal-VOC, Stanford Cars and CIFAR-100.

We will share our models' checkpoints to validate our scores.

- KD training code

- Inference code

- Model weights after transfer to ImageNet-1K

- Downstream training code

- More

@misc{ridnik2021imagenet21k,
      title={ImageNet-21K Pretraining for the Masses},
      author={Tal Ridnik and Emanuel Ben-Baruch and Asaf Noy and Lihi Zelnik-Manor},
      year={2021},
      eprint={2104.10972},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}